Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: Getting "Job cancelled because SparkContext wa...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Getting "Job cancelled because SparkContext was shut down" when running a Job using YARN

- Labels:

-

Apache Spark

-

Apache YARN

Created on

11-18-2014

10:51 AM

- last edited on

12-10-2019

09:04 PM

by

ask_bill_brooks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm following this example using Java:

http://blog.cloudera.com/blog/2014/04/how-to-run-a-simple-apache-spark-app-in-cdh-5/

Everything works fine when using "-master local" option, but I'm getting error when trying to run on YARN ("-master yarn").

I'm running a CDH 5.2.0 cluster with 4 VMs (8GB RAM in master node and 2GB on the others).

Am I running the Job in correct way to have it running over the four nodes? What's the differece to use ("-master spark://IP:Port") and what is the default port to run this way?

Here is the full console output when trying to run on YARN:

root@hadoop1:~# spark-submit --class testesVitor.JavaWordCounter --master yarn sparkwordcount-0.0.1-SNAPSHOT.jar /user/vitor/Posts.xml 2 > output.txt

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/lib/spark/assembly/lib/spark-assembly-1.1.0-cdh5.2.0-hadoop2.5.0-cdh5.2.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/lib/zookeeper/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

14/11/18 16:26:49 INFO SecurityManager: Changing view acls to: root

14/11/18 16:26:49 INFO SecurityManager: Changing modify acls to: root

14/11/18 16:26:49 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); users with modify permissions: Set(root)

14/11/18 16:26:51 INFO Slf4jLogger: Slf4jLogger started

14/11/18 16:26:51 INFO Remoting: Starting remoting

14/11/18 16:26:52 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkDriver@hadoop1.example.com:58545]

14/11/18 16:26:52 INFO Remoting: Remoting now listens on addresses: [akka.tcp://sparkDriver@hadoop1.example.com:58545]

14/11/18 16:26:52 INFO Utils: Successfully started service 'sparkDriver' on port 58545.

14/11/18 16:26:52 INFO SparkEnv: Registering MapOutputTracker

14/11/18 16:26:52 INFO SparkEnv: Registering BlockManagerMaster

14/11/18 16:26:52 INFO DiskBlockManager: Created local directory at /tmp/spark-local-20141118162652-0ff3

14/11/18 16:26:52 INFO Utils: Successfully started service 'Connection manager for block manager' on port 46763.

14/11/18 16:26:52 INFO ConnectionManager: Bound socket to port 46763 with id = ConnectionManagerId(hadoop1.example.com,46763)

14/11/18 16:26:52 INFO MemoryStore: MemoryStore started with capacity 267.3 MB

14/11/18 16:26:52 INFO BlockManagerMaster: Trying to register BlockManager

14/11/18 16:26:52 INFO BlockManagerMasterActor: Registering block manager hadoop1.example.com:46763 with 267.3 MB RAM

14/11/18 16:26:52 INFO BlockManagerMaster: Registered BlockManager

14/11/18 16:26:52 INFO HttpFileServer: HTTP File server directory is /tmp/spark-cfde3cf0-024a-47db-b97d-374710b989fc

14/11/18 16:26:52 INFO HttpServer: Starting HTTP Server

14/11/18 16:26:52 INFO Utils: Successfully started service 'HTTP file server' on port 40252.

14/11/18 16:26:54 INFO Utils: Successfully started service 'SparkUI' on port 4040.

14/11/18 16:26:54 INFO SparkUI: Started SparkUI at http://hadoop1.example.com:4040

14/11/18 16:27:00 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

14/11/18 16:27:00 INFO EventLoggingListener: Logging events to hdfs://hadoop1.example.com:8020/user/spark/applicationHistory/spark-count-1416335217999

14/11/18 16:27:01 INFO SparkContext: Added JAR file:/root/sparkwordcount-0.0.1-SNAPSHOT.jar at http://192.168.56.101:40252/jars/sparkwordcount-0.0.1-SNAPSHOT.jar with timestamp 1416335221103

14/11/18 16:27:01 INFO RMProxy: Connecting to ResourceManager at hadoop1.example.com/192.168.56.101:8032

14/11/18 16:27:02 INFO Client: Got cluster metric info from ResourceManager, number of NodeManagers: 3

14/11/18 16:27:02 INFO Client: Max mem capabililty of a single resource in this cluster 1029

14/11/18 16:27:02 INFO Client: Preparing Local resources

14/11/18 16:27:02 INFO Client: Uploading file:/usr/lib/spark/assembly/lib/spark-assembly-1.1.0-cdh5.2.0-hadoop2.5.0-cdh5.2.0.jar to hdfs://hadoop1.example.com:8020/user/root/.sparkStaging/application_1415718283355_0004/spark-assembly-1.1.0-cdh5.2.0-hadoop2.5.0-cdh5.2.0.jar

14/11/18 16:27:08 INFO Client: Prepared Local resources Map(__spark__.jar -> resource { scheme: "hdfs" host: "hadoop1.example.com" port: 8020 file: "/user/root/.sparkStaging/application_1415718283355_0004/spark-assembly-1.1.0-cdh5.2.0-hadoop2.5.0-cdh5.2.0.jar" } size: 95567637 timestamp: 1416335228534 type: FILE visibility: PRIVATE)

14/11/18 16:27:08 INFO Client: Setting up the launch environment

14/11/18 16:27:08 INFO Client: Setting up container launch context

14/11/18 16:27:08 INFO Client: Yarn AM launch context:

14/11/18 16:27:08 INFO Client: class: org.apache.spark.deploy.yarn.ExecutorLauncher

14/11/18 16:27:08 INFO Client: env: Map(CLASSPATH -> $PWD:$PWD/__spark__.jar:$HADOOP_CLIENT_CONF_DIR:$HADOOP_CONF_DIR:$HADOOP_COMMON_HOME/*:$HADOOP_COMMON_HOME/lib/*:$HADOOP_HDFS_HOME/*:$HADOOP_HDFS_HOME/lib/*:$HADOOP_YARN_HOME/*:$HADOOP_YARN_HOME/lib/*:$HADOOP_MAPRED_HOME/*:$HADOOP_MAPRED_HOME/lib/*:$MR2_CLASSPATH:$PWD/__app__.jar:$PWD/*, SPARK_YARN_CACHE_FILES_FILE_SIZES -> 95567637, SPARK_YARN_STAGING_DIR -> .sparkStaging/application_1415718283355_0004/, SPARK_YARN_CACHE_FILES_VISIBILITIES -> PRIVATE, SPARK_USER -> root, SPARK_YARN_MODE -> true, SPARK_YARN_CACHE_FILES_TIME_STAMPS -> 1416335228534, SPARK_YARN_CACHE_FILES -> hdfs://hadoop1.example.com:8020/user/root/.sparkStaging/application_1415718283355_0004/spark-assembly-1.1.0-cdh5.2.0-hadoop2.5.0-cdh5.2.0.jar#__spark__.jar)

14/11/18 16:27:08 INFO Client: command: $JAVA_HOME/bin/java -server -Xmx512m -Djava.io.tmpdir=$PWD/tmp '-Dspark.tachyonStore.folderName=spark-ea602029-5871-4097-b72f-d2bd46c74054' '-Dspark.yarn.historyServer.address=http://hadoop1.example.com:18088' '-Dspark.eventLog.enabled=true' '-Dspark.yarn.secondary.jars=' '-Dspark.driver.host=hadoop1.example.com' '-Dspark.driver.appUIHistoryAddress=http://hadoop1.example.com:18088/history/spark-count-1416335217999' '-Dspark.app.name=Spark Count' '-Dspark.driver.appUIAddress=hadoop1.example.com:4040' '-Dspark.jars=file:/root/sparkwordcount-0.0.1-SNAPSHOT.jar' '-Dspark.fileserver.uri=http://192.168.56.101:40252' '-Dspark.eventLog.dir=hdfs://hadoop1.example.com:8020/user/spark/applicationHistory' '-Dspark.master=yarn-client' '-Dspark.driver.port=58545' org.apache.spark.deploy.yarn.ExecutorLauncher --class 'notused' --jar null --arg 'hadoop1.example.com:58545' --executor-memory 1024 --executor-cores 1 --num-executors 2 1> <LOG_DIR>/stdout 2> <LOG_DIR>/stderr

14/11/18 16:27:08 INFO SecurityManager: Changing view acls to: root

14/11/18 16:27:08 INFO SecurityManager: Changing modify acls to: root

14/11/18 16:27:08 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); users with modify permissions: Set(root)

14/11/18 16:27:08 INFO Client: Submitting application to ResourceManager

14/11/18 16:27:08 INFO YarnClientImpl: Submitted application application_1415718283355_0004

14/11/18 16:27:09 INFO YarnClientSchedulerBackend: Application report from ASM:

appMasterRpcPort: -1

appStartTime: 1416335228936

yarnAppState: ACCEPTED

14/11/18 16:27:10 INFO YarnClientSchedulerBackend: Application report from ASM:

appMasterRpcPort: -1

appStartTime: 1416335228936

yarnAppState: ACCEPTED

14/11/18 16:27:11 INFO YarnClientSchedulerBackend: Application report from ASM:

appMasterRpcPort: -1

appStartTime: 1416335228936

yarnAppState: ACCEPTED

14/11/18 16:27:12 INFO YarnClientSchedulerBackend: Application report from ASM:

appMasterRpcPort: -1

appStartTime: 1416335228936

yarnAppState: ACCEPTED

14/11/18 16:27:13 INFO YarnClientSchedulerBackend: Application report from ASM:

appMasterRpcPort: -1

appStartTime: 1416335228936

yarnAppState: ACCEPTED

14/11/18 16:27:14 INFO YarnClientSchedulerBackend: Application report from ASM:

appMasterRpcPort: -1

appStartTime: 1416335228936

yarnAppState: ACCEPTED

14/11/18 16:27:15 INFO YarnClientSchedulerBackend: Application report from ASM:

appMasterRpcPort: -1

appStartTime: 1416335228936

yarnAppState: ACCEPTED

14/11/18 16:27:16 INFO YarnClientSchedulerBackend: Application report from ASM:

appMasterRpcPort: -1

appStartTime: 1416335228936

yarnAppState: ACCEPTED

14/11/18 16:27:17 INFO YarnClientSchedulerBackend: Application report from ASM:

appMasterRpcPort: -1

appStartTime: 1416335228936

yarnAppState: ACCEPTED

14/11/18 16:27:18 INFO YarnClientSchedulerBackend: Application report from ASM:

appMasterRpcPort: -1

appStartTime: 1416335228936

yarnAppState: ACCEPTED

14/11/18 16:27:19 INFO YarnClientSchedulerBackend: Application report from ASM:

appMasterRpcPort: -1

appStartTime: 1416335228936

yarnAppState: ACCEPTED

14/11/18 16:27:20 INFO YarnClientSchedulerBackend: Application report from ASM:

appMasterRpcPort: -1

appStartTime: 1416335228936

yarnAppState: ACCEPTED

14/11/18 16:27:21 INFO YarnClientSchedulerBackend: Application report from ASM:

appMasterRpcPort: -1

appStartTime: 1416335228936

yarnAppState: ACCEPTED

14/11/18 16:27:22 INFO YarnClientSchedulerBackend: Application report from ASM:

appMasterRpcPort: 0

appStartTime: 1416335228936

yarnAppState: RUNNING

14/11/18 16:27:31 INFO YarnClientSchedulerBackend: SchedulerBackend is ready for scheduling beginning after waiting maxRegisteredResourcesWaitingTime: 30000(ms)

14/11/18 16:27:31 INFO MemoryStore: ensureFreeSpace(258371) called with curMem=0, maxMem=280248975

14/11/18 16:27:31 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 252.3 KB, free 267.0 MB)

14/11/18 16:27:31 INFO MemoryStore: ensureFreeSpace(20625) called with curMem=258371, maxMem=280248975

14/11/18 16:27:31 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 20.1 KB, free 267.0 MB)

14/11/18 16:27:31 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on hadoop1.example.com:46763 (size: 20.1 KB, free: 267.2 MB)

14/11/18 16:27:31 INFO BlockManagerMaster: Updated info of block broadcast_0_piece0

14/11/18 16:27:31 INFO FileInputFormat: Total input paths to process : 1

14/11/18 16:27:31 INFO NetworkTopology: Adding a new node: /default/192.168.56.104:50010

14/11/18 16:27:31 INFO NetworkTopology: Adding a new node: /default/192.168.56.103:50010

14/11/18 16:27:31 INFO NetworkTopology: Adding a new node: /default/192.168.56.102:50010

14/11/18 16:27:32 INFO SparkContext: Starting job: collect at JavaWordCounter.java:84

14/11/18 16:27:32 INFO DAGScheduler: Registering RDD 3 (mapToPair at JavaWordCounter.java:30)

14/11/18 16:27:32 INFO DAGScheduler: Registering RDD 7 (mapToPair at JavaWordCounter.java:68)

14/11/18 16:27:32 INFO DAGScheduler: Got job 0 (collect at JavaWordCounter.java:84) with 228 output partitions (allowLocal=false)

14/11/18 16:27:32 INFO DAGScheduler: Final stage: Stage 0(collect at JavaWordCounter.java:84)

14/11/18 16:27:32 INFO DAGScheduler: Parents of final stage: List(Stage 2)

14/11/18 16:27:32 INFO DAGScheduler: Missing parents: List(Stage 2)

14/11/18 16:27:32 INFO DAGScheduler: Submitting Stage 1 (MappedRDD[3] at mapToPair at JavaWordCounter.java:30), which has no missing parents

14/11/18 16:27:32 INFO MemoryStore: ensureFreeSpace(4096) called with curMem=278996, maxMem=280248975

14/11/18 16:27:32 INFO MemoryStore: Block broadcast_1 stored as values in memory (estimated size 4.0 KB, free 267.0 MB)

14/11/18 16:27:32 INFO MemoryStore: ensureFreeSpace(2457) called with curMem=283092, maxMem=280248975

14/11/18 16:27:32 INFO MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 2.4 KB, free 267.0 MB)

14/11/18 16:27:32 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on hadoop1.example.com:46763 (size: 2.4 KB, free: 267.2 MB)

14/11/18 16:27:32 INFO BlockManagerMaster: Updated info of block broadcast_1_piece0

14/11/18 16:27:32 INFO DAGScheduler: Submitting 228 missing tasks from Stage 1 (MappedRDD[3] at mapToPair at JavaWordCounter.java:30)

14/11/18 16:27:32 INFO YarnClientClusterScheduler: Adding task set 1.0 with 228 tasks

14/11/18 16:27:32 INFO RackResolver: Resolved 192.168.56.104 to /default

14/11/18 16:27:32 INFO RackResolver: Resolved 192.168.56.103 to /default

14/11/18 16:27:32 INFO RackResolver: Resolved 192.168.56.102 to /default

14/11/18 16:27:32 INFO RackResolver: Resolved hadoop2.example.com to /default

14/11/18 16:27:32 INFO RackResolver: Resolved hadoop3.example.com to /default

14/11/18 16:27:32 INFO RackResolver: Resolved hadoop4.example.com to /default

14/11/18 16:27:36 ERROR YarnClientSchedulerBackend: Yarn application already ended: FAILED

14/11/18 16:27:36 INFO SparkUI: Stopped Spark web UI at http://hadoop1.example.com:4040

14/11/18 16:27:36 INFO DAGScheduler: Stopping DAGScheduler

14/11/18 16:27:36 INFO YarnClientSchedulerBackend: Shutting down all executors

14/11/18 16:27:36 INFO YarnClientSchedulerBackend: Asking each executor to shut down

14/11/18 16:27:36 INFO YarnClientSchedulerBackend: Stopped

14/11/18 16:27:36 INFO DAGScheduler: Failed to run collect at JavaWordCounter.java:84

Exception in thread "main" org.apache.spark.SparkException: Job cancelled because SparkContext was shut down

at org.apache.spark.scheduler.DAGScheduler$$anonfun$cleanUpAfterSchedulerStop$1.apply(DAGScheduler.scala:694)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$cleanUpAfterSchedulerStop$1.apply(DAGScheduler.scala:693)

at scala.collection.mutable.HashSet.foreach(HashSet.scala:79)

at org.apache.spark.scheduler.DAGScheduler.cleanUpAfterSchedulerStop(DAGScheduler.scala:693)

at org.apache.spark.scheduler.DAGSchedulerEventProcessActor.postStop(DAGScheduler.scala:1399)

at akka.actor.dungeon.FaultHandling$class.akka$actor$dungeon$FaultHandling$$finishTerminate(FaultHandling.scala:201)

at akka.actor.dungeon.FaultHandling$class.terminate(FaultHandling.scala:163)

at akka.actor.ActorCell.terminate(ActorCell.scala:338)

at akka.actor.ActorCell.invokeAll$1(ActorCell.scala:431)

at akka.actor.ActorCell.systemInvoke(ActorCell.scala:447)

at akka.dispatch.Mailbox.processAllSystemMessages(Mailbox.scala:262)

at akka.dispatch.Mailbox.run(Mailbox.scala:218)

at akka.dispatch.ForkJoinExecutorConfigurator$AkkaForkJoinTask.exec(AbstractDispatcher.scala:386)

at scala.concurrent.forkjoin.ForkJoinTask.doExec(ForkJoinTask.java:260)

at scala.concurrent.forkjoin.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1339)

at scala.concurrent.forkjoin.ForkJoinPool.runWorker(ForkJoinPool.java:1979)

at scala.concurrent.forkjoin.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:107)

root@hadoop1:~#

Created 11-18-2014 10:54 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This isn't the problem. It's just a symptom of the app failing for another reason:

14/11/18 16:27:36 ERROR YarnClientSchedulerBackend: Yarn application already ended: FAILED

You'd have to look at the actual app worker logs to see why it's failing.

Created 11-18-2014 10:54 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This isn't the problem. It's just a symptom of the app failing for another reason:

14/11/18 16:27:36 ERROR YarnClientSchedulerBackend: Yarn application already ended: FAILED

You'd have to look at the actual app worker logs to see why it's failing.

Created 11-18-2014 10:58 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Where can I get this log?

Created 11-18-2014 12:06 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You're running on YARN, so you should see the application as a "FAILED" application in the Resource Manager UI. Click through and you can find the logs of individual containers, which should show some failure.

Created 11-19-2014 02:40 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Srowen, thanks for the reply. I discovered the problem and its was related with memory limits in YARN configuration. Now I can run my job, but I still having a doult:

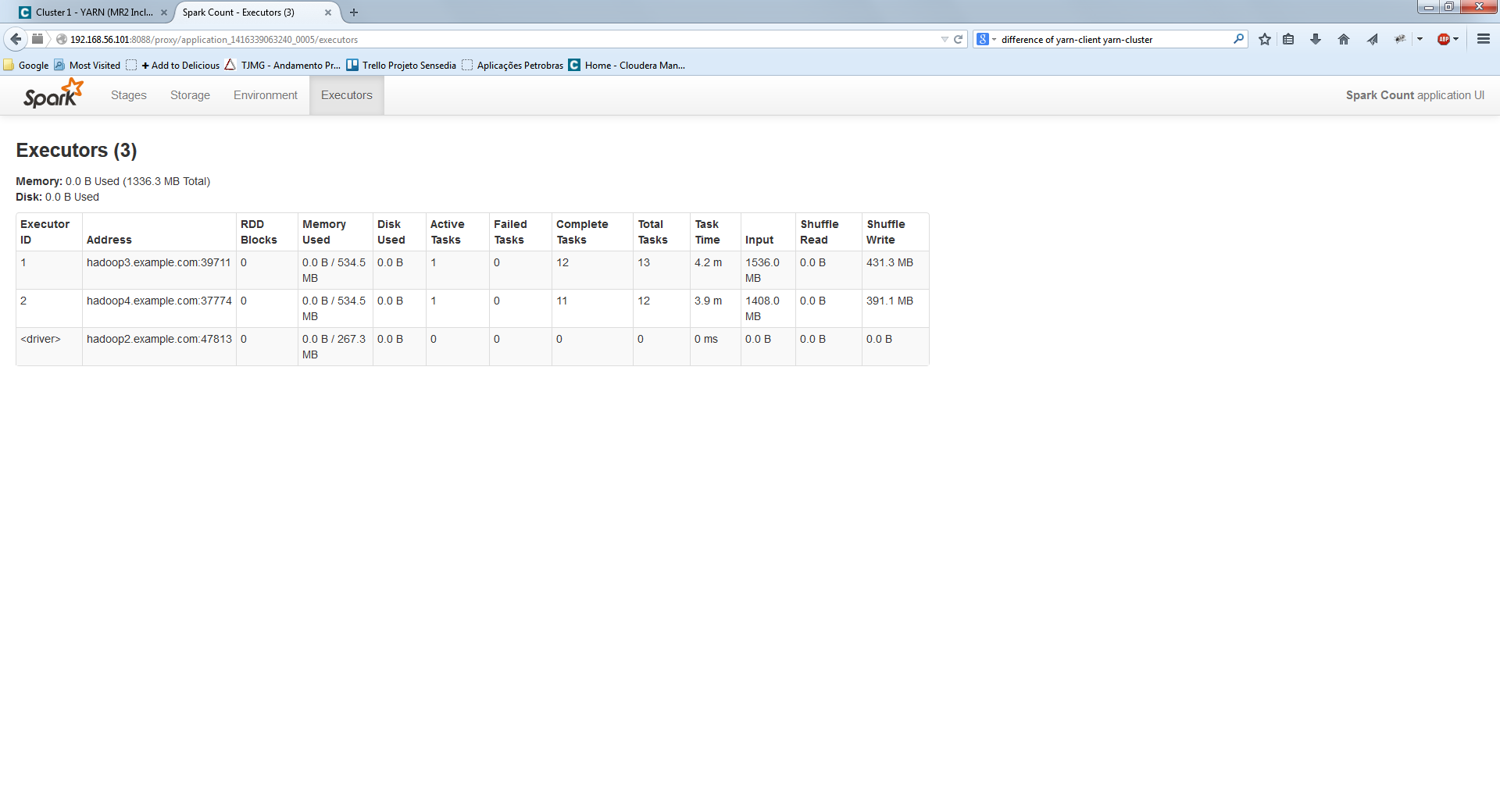

I have four nodes on the cluster, but the job is running in only two of them. Why is that? I'm firing the job from hadoop1 shell and the job is running only with nodes 3 and 4. Node 2 became the driver. How can I better use my resources?

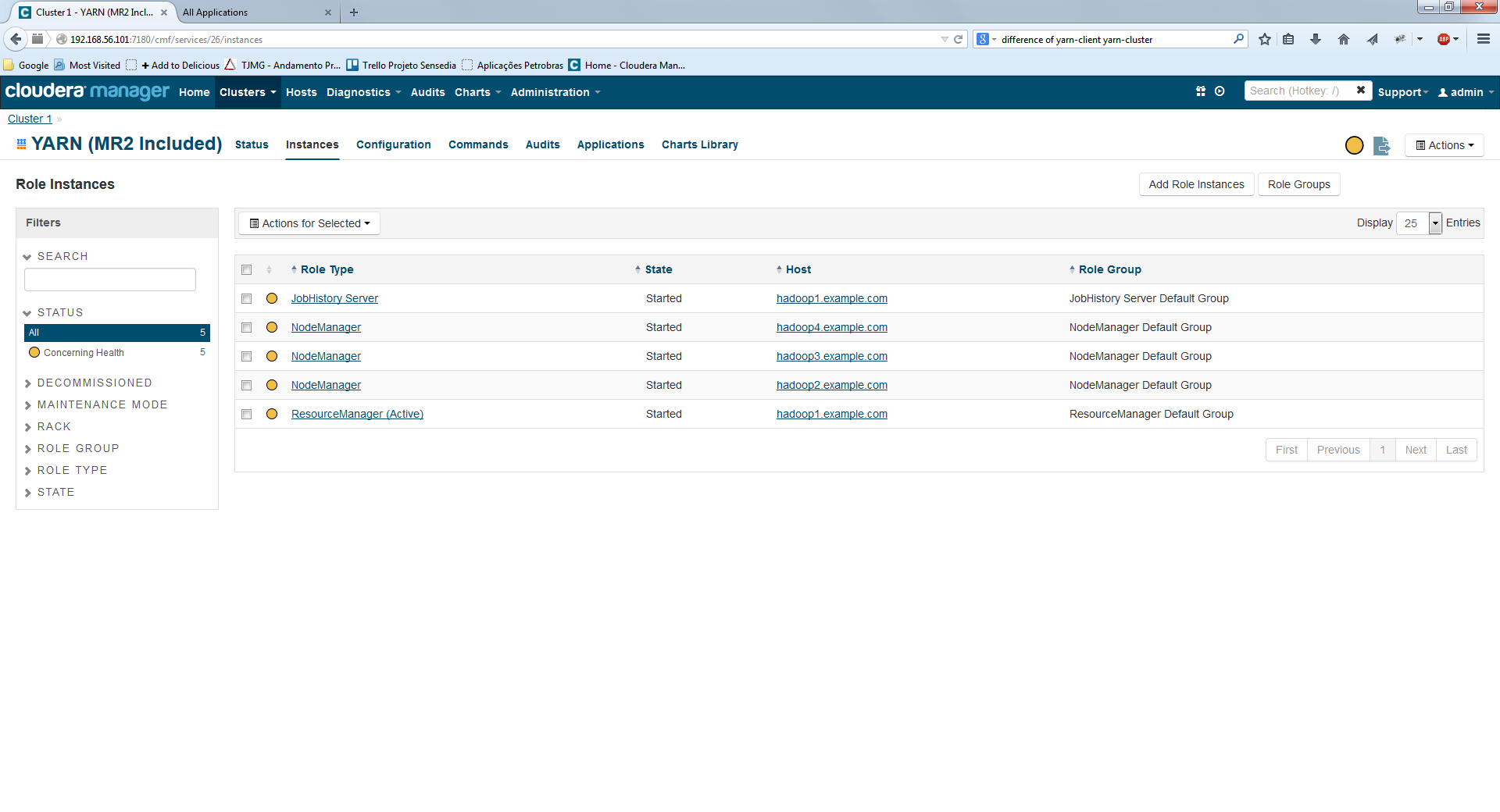

Here is my YARN instances:

And here is my Job running:

Created 11-19-2014 04:09 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'll post this in different thread.

Created 12-10-2019 08:55 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

What were the memory limits you changed in YARN configuration? Please post them.. It will be helpful for me solving similar issue in my application..