Cloudera Director bootstrap failure: Cloudera Manager 'First Run' command execution failed

Labels: Cloudera Manager

Created on 06-08-2018 04:35 PM - edited 09-16-2022 06:19 AM


Hi,

I am new to Cloudera and am trying to use Cloudera Director to spin up an AWS cluster to test Apache Spot, following the instructions given here -

https://blog.cloudera.com/blog/2018/02/apache-spot-incubating-and-cloudera-on-aws-in-60-minutes/

After installing Cloudera Director according to the instructions mentioned above, the bootstrap command

"cloudera-director bootstrap spot-director.conf" fails to complete.

Snapshot of the logs -

* Requesting 5 instance(s) in 3 group(s) .................................... done

* Preparing instances in parallel (20 at a time) ............................................................................................................................. done

* Waiting for Cloudera Manager installation to complete .... done

* Installing Cloudera Manager agents on all instances in parallel (20 at a time) .............. done

* Waiting for new external database servers to start running ... done

* Creating CDH5 cluster using the new instances .... done

* Creating cluster: apache-spot ..... done

* Downloading parcels: SPARK2-2.2.0.cloudera2-1.cdh5.12.0.p0.232957,KAFKA-2.2.0-1.2.2.0.p0.68,CDH-5.12.2-1.cdh5.12.2.p0.4 .... done

* Distributing parcels: KAFKA-2.2.0-1.2.2.0.p0.68,SPARK2-2.2.0.cloudera2-1.cdh5.12.0.p0.232957,CDH-5.12.2-1.cdh5.12.2.p0.4 .... done

* Switching parcel distribution rate limits back to defaults: 51200KB/s with 25 concurrent uploads ... done

* Activating parcels: KAFKA-2.2.0-1.2.2.0.p0.68,SPARK2-2.2.0.cloudera2-1.cdh5.12.0.p0.232957,CDH-5.12.2-1.cdh5.12.2.p0.4 ......... done

* Creating cluster services ... done

* Assigning roles to instances ... done

* Automatically configuring services and roles ... done

* Configuring HIVE database ... done

* Configuring OOZIE database .... done

* Creating Hive Metastore Database ... done

* Calling firstRun on cluster apache-spot ... done

* Waiting for firstRun on cluster apache-spot .... done

* Starting ... done

* Collecting diagnostic data .... done

* Cloudera Manager 'First Run' command execution failed: Failed to perform First Run of services. ...

There are no relevant error messages in director.log (except the one that says that First Run failed). These are some of the settings in the configuration file used by Cloudera Director -

------

cloudera-manager {

instance: ${instances.m44x} {

tags {

application: "CM5"

group: cm

}

}

csds: [

"http://archive.cloudera.com/spark2/csd/SPARK2_ON_YARN-2.2.0.cloudera1.jar",

]

javaInstallationStrategy: NONE

#

# Automatically activate 60-Day Cloudera Enterprise Trial

#

enableEnterpriseTrial: true

}

#

# Cluster description

#

cluster {

# List the products and their versions that need to be installed.

# These products must have a corresponding parcel in the parcelRepositories

# configured above. The specified version will be used to find a suitable

# parcel. Specifying a version that points to more than one parcel among

# those available will result in a configuration error. Specify more granular

# versions to avoid conflicts.

products {

CDH: 5.12,

SPARK2: 2.2,

KAFKA: 2

}

parcelRepositories: ["http://archive.cloudera.com/cdh5/parcels/5.12/",

"http://archive.cloudera.com/kafka/parcels/2.2/",

"http://archive.cloudera.com/spark2/parcels/2.2.0/"]

services: [HDFS, YARN, ZOOKEEPER, HIVE, IMPALA, KAFKA, SPARK2_ON_YARN, HUE, OOZIE]

masters {

count: 1

instance: ${instances.m44x} {

tags {

group: master

}

}

roles {

HDFS: [NAMENODE, SECONDARYNAMENODE]

YARN: [RESOURCEMANAGER, JOBHISTORY]

ZOOKEEPER: [SERVER]

HIVE: [HIVESERVER2, HIVEMETASTORE]

IMPALA: [CATALOGSERVER, STATESTORE]

SPARK2_ON_YARN: [SPARK2_YARN_HISTORY_SERVER]

HUE: [HUE_SERVER]

OOZIE: [OOZIE_SERVER]

KAFKA: [KAFKA_BROKER]

}

}

--------

Would someone be able to tell me how to debug this issue further? Are there any logs in the cloudera manager server node that I should look at to figure what went wrong?

Thanks

Created 06-10-2018 02:33 PM


Found this message in the scm-server-log -

2018-06-10 15:26:45,324 INFO CommandPusher:com.cloudera.cmf.model.DbCommand: Command 66(First Run) has completed. finalstate:FINISHED, success:false, msg:Failed to perform First Run of services

In the scm-agent-log -

[10/Jun/2018 15:22:36 +0000] 12790 MainThread agent ERROR Failed to connect to previous supervisor.

Traceback (most recent call last):

File "/usr/lib64/cmf/agent/build/env/lib/python2.7/site-packages/cmf-5.14.3-py2.7.egg/cmf/agent.py", line 2136, in find_or_start_supervisor

self.configure_supervisor_clients()

File "/usr/lib64/cmf/agent/build/env/lib/python2.7/site-packages/cmf-5.14.3-py2.7.egg/cmf/agent.py", line 2317, in configure_supervisor_clients

supervisor_options.realize(args=["-c", os.path.join(self.supervisor_dir, "supervisord.conf")])

File "/usr/lib64/cmf/agent/build/env/lib/python2.7/site-packages/supervisor-3.0-py2.7.egg/supervisor/options.py", line 1599, in realize

Options.realize(self, *arg, **kw)

File "/usr/lib64/cmf/agent/build/env/lib/python2.7/site-packages/supervisor-3.0-py2.7.egg/supervisor/options.py", line 333, in realize

self.process_config()

File "/usr/lib64/cmf/agent/build/env/lib/python2.7/site-packages/supervisor-3.0-py2.7.egg/supervisor/options.py", line 341, in process_config

self.process_config_file(do_usage)

File "/usr/lib64/cmf/agent/build/env/lib/python2.7/site-packages/supervisor-3.0-py2.7.egg/supervisor/options.py", line 376, in process_config_file

self.usage(str(msg))

File "/usr/lib64/cmf/agent/build/env/lib/python2.7/site-packages/supervisor-3.0-py2.7.egg/supervisor/options.py", line 164, in usage

self.exit(2)

SystemExit: 2

Also -

[10/Jun/2018 15:22:37 +0000] 12790 MainThread downloader ERROR Failed rack peer update: [Errno 111] Connection refused

Created 06-12-2018 08:07 AM


sg321,

You do need to look in CM to find the root cause of the First Run failure. The CM UI has a tab "All Recent Commands".

First Run is composed of a series of steps. You can determine which step failed through the UI.

You can also find this information in the scm-server-log. Information about the step that failed should appear somewhere above the scm-server-log message that you posted.

David
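The log search described above can be sketched in Python. This is a minimal sketch, not a Cloudera tool: the helper name `failed_commands` is hypothetical, and the regular expression is modeled only on the single scm-server log line quoted earlier in this thread, so it assumes that the individual First Run steps are logged in the same "Command N(Name) has completed" format.

```python
import re

# Pattern modeled on the one log line quoted in this thread; other
# Cloudera Manager versions may format this message differently.
COMPLETED = re.compile(
    r"Command (?P<id>\d+)\((?P<name>[^)]*)\) has completed\. "
    r"finalstate:(?P<state>\w+), success:(?P<success>true|false), "
    r"msg:(?P<msg>.*)"
)


def failed_commands(lines):
    """Return (id, name, msg) for every command logged with success:false."""
    failures = []
    for line in lines:
        match = COMPLETED.search(line)
        if match and match.group("success") == "false":
            failures.append(
                (int(match.group("id")), match.group("name"), match.group("msg").strip())
            )
    return failures


sample = [
    "2018-06-10 15:26:45,324 INFO CommandPusher:com.cloudera.cmf.model.DbCommand: "
    "Command 66(First Run) has completed. finalstate:FINISHED, success:false, "
    "msg:Failed to perform First Run of services",
]
print(failed_commands(sample))
```

To scan a real log, pass an open file object instead of `sample`. The log location depends on the installation; `/var/log/cloudera-scm-server/cloudera-scm-server.log` is the usual default, but treat that path as an assumption. Failed commands listed before the First Run entry are candidates for the step that actually broke.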

Created 06-14-2018 12:58 PM


Thanks David for the info!

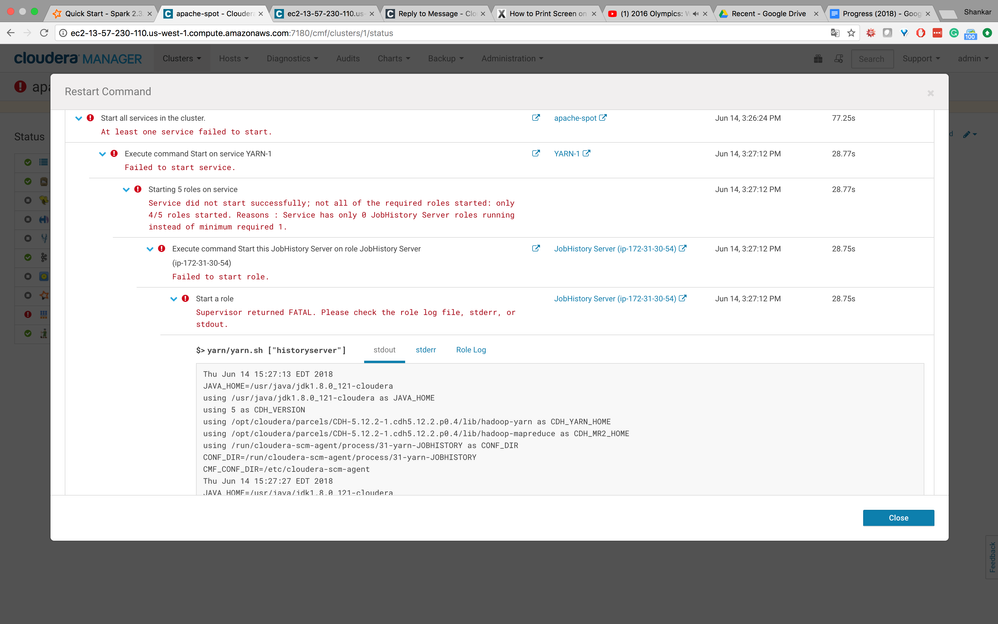

I logged into the CM UI and tried to start the cluster again (to diagnose what was wrong). It appears that the job history (of service YARN-1) failed to start. Attaching a snapshot.

In the role file, I see this message -

Service org.apache.hadoop.mapreduce.v2.hs.HistoryFileManager failed in state INITED; cause: org.apache.hadoop.yarn.exceptions.YarnRuntimeException: Error creating done directory: [hdfs://ip-172-31-30-54.us-west-1.compute.internal:8020/user/history/done]

org.apache.hadoop.yarn.exceptions.YarnRuntimeException: Error creating done directory: [hdfs://ip-172-31-30-54.us-west-1.compute.internal:8020/user/history/done]

at org.apache.hadoop.mapreduce.v2.hs.HistoryFileManager.tryCreatingHistoryDirs(HistoryFileManager.java:682)

at org.apache.hadoop.mapreduce.v2.hs.HistoryFileManager.createHistoryDirs(HistoryFileManager.java:618)

at org.apache.hadoop.mapreduce.v2.hs.HistoryFileManager.serviceInit(HistoryFileManager.java:579)

at org.apache.hadoop.service.AbstractService.init(AbstractService.java:163)

at org.apache.hadoop.mapreduce.v2.hs.JobHistory.serviceInit(JobHistory.java:95)

at org.apache.hadoop.service.AbstractService.init(AbstractService.java:163)

at org.apache.hadoop.service.CompositeService.serviceInit(CompositeService.java:107)

at org.apache.hadoop.mapreduce.v2.hs.JobHistoryServer.serviceInit(JobHistoryServer.java:154)

at org.apache.hadoop.service.AbstractService.init(AbstractService.java:163)

at org.apache.hadoop.mapreduce.v2.hs.JobHistoryServer.launchJobHistoryServer(JobHistoryServer.java:229)

at org.apache.hadoop.mapreduce.v2.hs.JobHistoryServer.main(JobHistoryServer.java:239)

Caused by: org.apache.hadoop.security.AccessControlException: Permission denied: user=mapred, access=WRITE, inode="/":hdfs:supergroup:drwxr-xr-x

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkFsPermission(DefaultAuthorizationProvider.java:279)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.check(DefaultAuthorizationProvider.java:260)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.check(DefaultAuthorizationProvider.java:240)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkPermission(DefaultAuthorizationProvider.java:162)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:152)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:3634)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:3617)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkAncestorAccess(FSDirectory.java:3599)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkAncestorAccess(FSNamesystem.java:6659)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirsInternal(FSNamesystem.java:4424)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirsInt(FSNamesystem.java:4394)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirs(FSNamesystem.java:4367)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.mkdirs(NameNodeRpcServer.java:873)

at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.mkdirs(AuthorizationProviderProxyClientProtocol.java:323)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.mkdirs(ClientNamenodeProtocolServerSideTranslatorPB.java:618)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2217)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2213)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1917)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2211)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.ipc.RemoteException.instantiateException(RemoteException.java:106)

at org.apache.hadoop.ipc.RemoteException.unwrapRemoteException(RemoteException.java:73)

at org.apache.hadoop.hdfs.DFSClient.primitiveMkdir(DFSClient.java:3115)

at org.apache.hadoop.fs.Hdfs.mkdir(Hdfs.java:311)

at org.apache.hadoop.fs.FileContext$4.next(FileContext.java:747)

at org.apache.hadoop.fs.FileContext$4.next(FileContext.java:743)

at org.apache.hadoop.fs.FSLinkResolver.resolve(FSLinkResolver.java:90)

at org.apache.hadoop.fs.FileContext.mkdir(FileContext.java:743)

at org.apache.hadoop.mapreduce.v2.hs.HistoryFileManager.mkdir(HistoryFileManager.java:737)

at org.apache.hadoop.mapreduce.v2.hs.HistoryFileManager.tryCreatingHistoryDirs(HistoryFileManager.java:665)

... 10 more

Caused by: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.AccessControlException): Permission denied: user=mapred, access=WRITE, inode="/":hdfs:supergroup:drwxr-xr-x

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkFsPermission(DefaultAuthorizationProvider.java:279)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.check(DefaultAuthorizationProvider.java:260)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.check(DefaultAuthorizationProvider.java:240)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkPermission(DefaultAuthorizationProvider.java:162)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:152)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:3634)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:3617)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkAncestorAccess(FSDirectory.java:3599)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkAncestorAccess(FSNamesystem.java:6659)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirsInternal(FSNamesystem.java:4424)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirsInt(FSNamesystem.java:4394)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirs(FSNamesystem.java:4367)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.mkdirs(NameNodeRpcServer.java:873)

at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.mkdirs(AuthorizationProviderProxyClientProtocol.java:323)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.mkdirs(ClientNamenodeProtocolServerSideTranslatorPB.java:618)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2217)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2213)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1917)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2211)
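The key line in the trace above is the `Permission denied` message. As a rough sketch of how to read it, the Python below splits the message into its fields and works out which permission bits applied. The helper name `explain_denial` is hypothetical, the owner/group/other selection follows the usual POSIX-style HDFS model, and HDFS superuser bypass and ACLs are deliberately not modeled.

```python
import re

DENIED = re.compile(
    r'Permission denied: user=(?P<user>[^,]+), access=(?P<access>\w+), '
    r'inode="(?P<path>[^"]*)":(?P<owner>[^:]+):(?P<group>[^:]+):'
    r'(?P<mode>[-a-zA-Z]{10})'
)


def explain_denial(message, user_groups=()):
    """Parse an AccessControlException message and report the bits that applied."""
    match = DENIED.search(message)
    if match is None:
        return None
    info = match.groupdict()
    mode = info["mode"]  # e.g. 'drwxr-xr-x': type flag, then owner/group/other
    if info["user"] == info["owner"]:
        bits = mode[1:4]
    elif info["group"] in user_groups:
        bits = mode[4:7]
    else:
        bits = mode[7:10]
    info["applied_bits"] = bits
    info["write_allowed"] = "w" in bits
    return info


msg = ('Permission denied: user=mapred, access=WRITE, '
       'inode="/":hdfs:supergroup:drwxr-xr-x')
print(explain_denial(msg))
```

For the message in this thread, `mapred` is neither the owner (`hdfs`) nor, by assumption, a member of `supergroup`, so the "other" bits `r-x` apply and WRITE is refused. The inode reported is `/` and the trace passes through `checkAncestorAccess`, which suggests that `/user/history` did not exist yet and the JobHistory Server was trying to create it under the root directory.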

Any recommendations on how to debug further?

Thanks

Created 06-18-2018 08:57 AM


sg321,

Please look at this article.

I find it strange that you are getting this error on a clean cluster bootstrap. Can you share your conf file? Please redact any secrets (e.g., AWS keys). What version of Director are you using?

David

Created 06-18-2018 02:50 PM


Hi David,

Will go through the link that you mentioned.

I believe I am using version 2.7.x. Where can I find which version I am using? I logged into the UI and am unable to locate this version number anywhere. Below is the director.conf file -

#

# Copyright (c) 2014 Cloudera, Inc. All rights reserved.

#

#

# Simple AWS Cloudera Director configuration file with automatic role assignments

# that works as expected if you use a single instance type for all cluster nodes

#

#

# Cluster name

#

name: apache-spot

#

# Cloud provider configuration (credentials, region or zone and optional default image)

#

provider {

type: aws

#

# Get AWS credentials from the OS environment

# See http://docs.aws.amazon.com/general/latest/gr/aws-security-credentials.html

#

# If specifying the access keys directly and not through variables, make sure to enclose

# them in double quotes.

#

# Leave the accessKeyId and secretAccessKey fields blank when running on an instance

# launched with an IAM role.

accessKeyId: ${?AWS_ACCESS_KEY_ID}

secretAccessKey: ${?AWS_SECRET_ACCESS_KEY}

#

# ID of the Amazon AWS region to use

# See: http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/using-regions-availability-zones.html

#

region: ${?aws_region}

#

# Region endpoint (if you are using one of the Gov. regions)

#

# regionEndpoint: ec2.us-gov-west-1.amazonaws.com

#

# ID of the VPC subnet

# See: http://docs.aws.amazon.com/AmazonVPC/latest/UserGuide/VPC_Subnets.html

#

subnetId: ${?aws_subnet}

#

# Comma separated list of security group IDs

# See: http://docs.aws.amazon.com/AmazonVPC/latest/UserGuide/VPC_SecurityGroups.html

#

# Default security group

securityGroupsIds: ${?aws_security_group}

#

# A prefix that Cloudera Director should use when naming the instances (this is not part of the hostname)

#

instanceNamePrefix: spot-cluster

#

# Specify a size for the root volume (in GBs). Cloudera Director will automatically expand the

# filesystem so that you can use all the available disk space for your application

# See: http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/storage_expand_partition.html

#

# rootVolumeSizeGB: 100 # defaults to 50 GB if not specified

#

# Specify the type of the EBS volume used for the root partition. Defaults to gp2

# See: http://aws.amazon.com/ebs/details/

#

# rootVolumeType: gp2 # OR standard (for EBS magnetic)

#

# Whether to associate a public IP address with instances or not. If this is false

# we expect instances to be able to access the internet using a NAT instance

#

# Currently the only way to get optimal S3 data transfer performance is to assign

# public IP addresses to your instances and not use NAT (public subnet type of setup)

#

# See: http://docs.aws.amazon.com/AmazonVPC/latest/UserGuide/vpc-ip-addressing.html

#

# associatePublicIpAddresses: true

}

#

# SSH credentials to use to connect to the instances

#

ssh {

username: ec2-user # for RHEL image

privateKey: ${?path_to_private_key} # with an absolute path to .pem file

}

#

# A list of instance types to use for group of nodes or management services

#

common-instance-properties {

# hvm | RHEL-7.2_HVM_GA-20151112-x86_64-1-Hourly2-GP2 | ami-2051294a - original

# hvm | RHEL-7.3_HVM_GA-20161026-x86_64-1-Hourly2-GP2 | ami-b63769a1

# hvm | RHEL-7.3_HVM-20170613-x86_64-4-Hourly2-GP2 | ami-9e2f0988

image: ${?aws_ami}

tags {

owner: ${?aws_owner}

}

# this script needs to already be downloaded on the director host

bootstrapScriptPath: "./java8.sh"

}

instances {

m42x : ${common-instance-properties} {

type: m4.2xlarge

}

m44x : ${common-instance-properties} {

type: m4.4xlarge

}

i22x : ${common-instance-properties} {

type: i2.2xlarge

}

d2x : ${common-instance-properties} {

type: d2.xlarge

}

t2l : ${common-instance-properties} { # only suitable as a gateway

type: t2.large

}

t2xl : ${common-instance-properties} { # only suitable as a gateway

type: t2.xlarge

}

}

#

# Configuration for Cloudera Manager. Cloudera Director can use an existing instance

# or bootstrap everything from scratch for a new cluster

#

cloudera-manager {

instance: ${instances.m44x} {

tags {

application: "CM5"

group: cm

}

}

csds: [

"http://archive.cloudera.com/spark2/csd/SPARK2_ON_YARN-2.2.0.cloudera1.jar",

]

javaInstallationStrategy: NONE

#

# Automatically activate 60-Day Cloudera Enterprise Trial

#

enableEnterpriseTrial: true

}

#

# Cluster description

#

cluster {

# List the products and their versions that need to be installed.

# These products must have a corresponding parcel in the parcelRepositories

# configured above. The specified version will be used to find a suitable

# parcel. Specifying a version that points to more than one parcel among

# those available will result in a configuration error. Specify more granular

# versions to avoid conflicts.

products {

CDH: 5.12,

SPARK2: 2.2,

KAFKA: 2

}

parcelRepositories: ["http://archive.cloudera.com/cdh5/parcels/5.12/",

"http://archive.cloudera.com/kafka/parcels/2.2/",

"http://archive.cloudera.com/spark2/parcels/2.2.0/"]

services: [HDFS, YARN, ZOOKEEPER, HIVE, IMPALA, KAFKA, SPARK2_ON_YARN, HUE, OOZIE]

masters {

count: 1

instance: ${instances.m44x} {

tags {

group: master

}

}

roles {

HDFS: [NAMENODE, SECONDARYNAMENODE]

YARN: [RESOURCEMANAGER, JOBHISTORY]

ZOOKEEPER: [SERVER]

HIVE: [HIVESERVER2, HIVEMETASTORE]

IMPALA: [CATALOGSERVER, STATESTORE]

SPARK2_ON_YARN: [SPARK2_YARN_HISTORY_SERVER]

HUE: [HUE_SERVER]

OOZIE: [OOZIE_SERVER]

KAFKA: [KAFKA_BROKER]

}

}

workers {

count: 3

minCount: 3

instance: ${instances.m44x} {

tags {

group: worker

}

}

roles {

HDFS: [DATANODE]

YARN: [NODEMANAGER]

IMPALA: [IMPALAD]

}

}

gateways {

count: 1

instance: ${instances.m44x} {

tags {

group: gateway

}

}

roles {

HIVE: [GATEWAY]

SPARK2_ON_YARN: [GATEWAY]

HDFS: [GATEWAY]

YARN: [GATEWAY]

}

}

}

And here are the corresponding environment variables -

export AWS_ACCESS_KEY=REDACTED

export AWS_SECRET_ACCESS_KEY=REDACTED

export aws_region=us-west-1

export aws_subnet=subnet-00e5d567

export aws_security_group=sg-6e228616

export path_to_private_key=/home/ec2-user/.ssh/id_rsa

export aws_owner=REDACTED

export aws_ami=ami-d1315fb1

Note that both of these have been configured according to the instructions given here
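Because the conf file uses optional substitutions of the form `${?NAME}`, a value whose environment variable is not exported is silently left unset. The Python sketch below lists such names. It is a hypothetical helper, not part of Cloudera Director: it looks only at the process environment (not at keys defined elsewhere in the config) and strips `#` comments naively, so it would misread a quoted value that contains `#`.

```python
import re

OPTIONAL_SUBSTITUTION = re.compile(r"\$\{\?([A-Za-z_][A-Za-z0-9_]*)\}")


def unresolved_optionals(conf_text, environ):
    """List ${?NAME} substitutions in conf_text that have no entry in environ."""
    missing = []
    for line in conf_text.splitlines():
        code = line.split("#", 1)[0]  # naive comment stripping
        for name in OPTIONAL_SUBSTITUTION.findall(code):
            if name not in environ and name not in missing:
                missing.append(name)
    return missing


# Excerpt of the conf file and exports posted in this thread.
SAMPLE_CONF = """
accessKeyId: ${?AWS_ACCESS_KEY_ID}
secretAccessKey: ${?AWS_SECRET_ACCESS_KEY}
region: ${?aws_region}
privateKey: ${?path_to_private_key} # with an absolute path to .pem file
"""
SAMPLE_ENV = {
    "AWS_ACCESS_KEY": "REDACTED",
    "AWS_SECRET_ACCESS_KEY": "REDACTED",
    "aws_region": "us-west-1",
    "path_to_private_key": "/home/ec2-user/.ssh/id_rsa",
}
print(unresolved_optionals(SAMPLE_CONF, SAMPLE_ENV))
```

On a real host, pass the contents of the conf file and `os.environ`. With the excerpt above, the conf reads `AWS_ACCESS_KEY_ID` while the exports define `AWS_ACCESS_KEY`. An unset optional value is not necessarily an error: the comments in the conf file say the key fields are expected to be blank when the instance is launched with an IAM role.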

Created 06-19-2018 02:19 PM


sg321,

I've followed the instructions you linked through cluster bootstrap and successfully created a cluster using ami-2051294a in us-east-1.

I did have to make one modification so that the SPARK2 CSD matches the SPARK2 parcel repo. You can do this either by bumping the CSD up to http://archive.cloudera.com/spark2/csd/SPARK2_ON_YARN-2.2.0.cloudera2.jar or by pinning the parcel repo to http://archive.cloudera.com/spark2/parcels/2.2.0.cloudera2/
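The mismatch can be checked mechanically before bootstrapping. The following Python is only a sketch: the helper names are hypothetical, and it assumes the file naming seen in this thread (`SPARK2_ON_YARN-<version>.jar` for the CSD and `SPARK2-<version>-<build>` for the parcel). It compares version strings and does not contact the archive.

```python
import re


def csd_version(csd_url):
    """'.../SPARK2_ON_YARN-2.2.0.cloudera1.jar' -> '2.2.0.cloudera1'."""
    file_name = csd_url.rstrip("/").rsplit("/", 1)[-1]
    match = re.fullmatch(r"[A-Z0-9_]+-(.+)\.jar", file_name)
    return match.group(1) if match else None


def parcel_version(parcel_name):
    """'SPARK2-2.2.0.cloudera2-1.cdh5.12.0.p0.232957' -> '2.2.0.cloudera2'."""
    parts = parcel_name.split("-")
    return parts[1] if len(parts) >= 3 else None


def csd_matches_parcel(csd_url, parcel_name):
    """True when the CSD and the parcel carry the same version string."""
    version = csd_version(csd_url)
    return version is not None and version == parcel_version(parcel_name)


# Values taken from the conf file and the bootstrap log in this thread.
csd = "http://archive.cloudera.com/spark2/csd/SPARK2_ON_YARN-2.2.0.cloudera1.jar"
parcel = "SPARK2-2.2.0.cloudera2-1.cdh5.12.0.p0.232957"
print(csd_version(csd), parcel_version(parcel), csd_matches_parcel(csd, parcel))
```

With the values from the original post, the CSD is `2.2.0.cloudera1` while the parcel that was actually downloaded is `2.2.0.cloudera2`, which is the mismatch described above.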

Is this reliably/repeatably failing first run for you?

Created 06-21-2018 12:57 PM


This finally worked for me.

I have no idea why the YARN JobHistory Server failed to come up. After adding permissions for "mapred" (described earlier in the forums), I was able to get the YARN service up. After that, the Spark installation failed.
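The thread does not spell out the exact permission change. One commonly documented approach for CDH is to create the history directory as the `hdfs` superuser and hand it to `mapred`. The Python sketch below only builds and prints those commands by default. The owner, group, and mode (`mapred:hadoop`, `1777`) are assumptions taken from general CDH setup guidance rather than from this thread, and the helper names are hypothetical.

```python
import shlex
import subprocess


def history_dir_commands(path="/user/history", owner="mapred",
                         group="hadoop", mode="1777"):
    """Build the hdfs CLI calls that create the directory and set its ownership."""
    prefix = ["sudo", "-u", "hdfs", "hdfs", "dfs"]
    return [
        prefix + ["-mkdir", "-p", path],
        prefix + ["-chown", f"{owner}:{group}", path],
        prefix + ["-chmod", mode, path],
    ]


def apply(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for argv in commands:
        print(shlex.join(argv))
        if not dry_run:
            subprocess.run(argv, check=True)


apply(history_dir_commands())
```

Running with `dry_run=False` requires a host with the `hdfs` client and sudo rights to the `hdfs` user. Review the printed commands against your own cluster's conventions first.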

After following your recommendation and updating the Spark parcels in the Director .conf file, the cluster came up successfully. I still have no idea how upgrading the Spark version prevented YARN from failing!

Thanks!