Support Questions

- Cloudera Community

- Support

- Support Questions

- Impala hash join optimization

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Impala hash join optimization

- Labels:

-

Apache Impala

-

Apache Kudu

-

HDFS

Created on 10-03-2018 01:38 AM - edited 09-16-2022 06:46 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Cloudera Impala community,

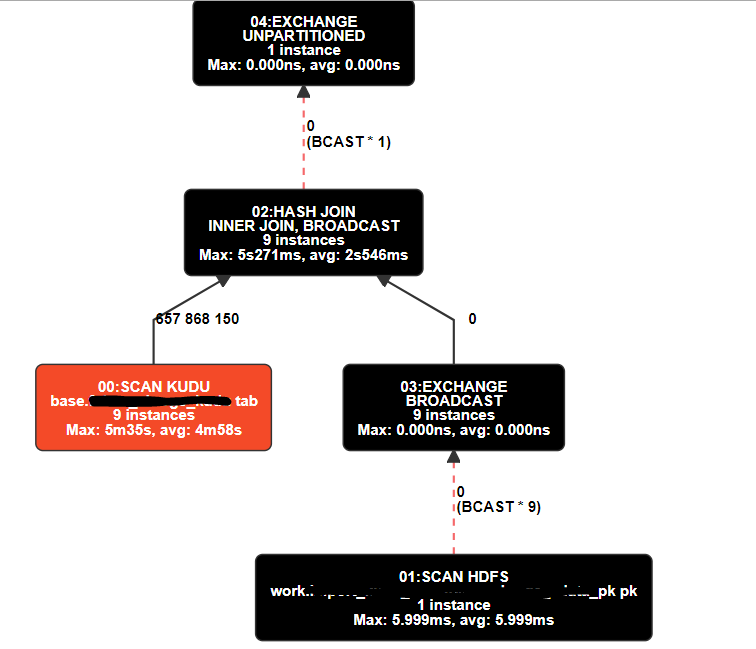

we have many join queries between Impala (HDFS) and Kudu datasets where the large kudu table is joined with a small HDFS table. I see in many cases, that the HDFS dataset condition returns 0 rows, but the query still scans all the 600mil records in Kudu.

For example this query is running for 10min+ and it is clear that the right part of the query, the broadcasted dataset is empty, zero rows. So the optimizer could just skip the scanning and return empty dataset.

Query plan

Max Per-Host Resource Reservation: Memory=17.00MB

Per-Host Resource Estimates: Memory=193.00MB

F02:PLAN FRAGMENT [UNPARTITIONED] hosts=1 instances=1

| Per-Host Resources: mem-estimate=0B mem-reservation=0B

PLAN-ROOT SINK

| mem-estimate=0B mem-reservation=0B

|

04:EXCHANGE [UNPARTITIONED]

| mem-estimate=0B mem-reservation=0B

| tuple-ids=0,1 row-size=1.62KB cardinality=88114

|

F00:PLAN FRAGMENT [RANDOM] hosts=9 instances=9

Per-Host Resources: mem-estimate=17.00MB mem-reservation=17.00MB

02:HASH JOIN [INNER JOIN, BROADCAST]

| hash predicates: tab.key1 = pk.key1, tab.key2 = pk.key2, tab.key3 = pk.key3, tab.key4 = pk.key4, tab.root_key3 = pk.root_key3

| fk/pk conjuncts: tab.key1 = pk.key1, tab.key2 = pk.key2, tab.key3 = pk.key3, tab.key4 = pk.key4, tab.root_key3 = pk.root_key3

| mem-estimate=17.00MB mem-reservation=17.00MB spill-buffer=1.00MB

| tuple-ids=0,1 row-size=1.62KB cardinality=88114

|

|--03:EXCHANGE [BROADCAST]

| | mem-estimate=0B mem-reservation=0B

| | tuple-ids=1 row-size=159B cardinality=88114

| |

| F01:PLAN FRAGMENT [RANDOM] hosts=1 instances=1

| Per-Host Resources: mem-estimate=176.00MB mem-reservation=0B

| 01:SCAN HDFS [work.pk_table_pk pk, RANDOM]

| partitions=1/1 files=1 size=2.51MB

| predicates: pk.rn = 1, pk.tr_code IN ('D')

| stats-rows=249225 extrapolated-rows=disabled

| table stats: rows=249225 size=2.51MB

| column stats: all

| parquet statistics predicates: pk.rn = 1, pk.tr_code IN ('D')

| parquet dictionary predicates: pk.rn = 1, pk.tr_code IN ('D')

| mem-estimate=176.00MB mem-reservation=0B

| tuple-ids=1 row-size=159B cardinality=88114

|

00:SCAN KUDU [base.large_table tab]

mem-estimate=0B mem-reservation=0B

tuple-ids=0 row-size=1.46KB cardinality=674357713

The query:

select *

from base.large_table tab join

work.pk_table_pk pk ON

tab.`key1` = pk.`key1` and

tab.`key2` = pk.`key2` and

tab.`key3` = pk.`key3` and

tab.`key4` = pk.`key4` and

tab.`root_key3` = pk.`root_key3`

where pk.tr_code in ('D') and pk.rn =1

This is running on CDH 5.13. Could this be optimized in the future version of Impala?

Thanks

Created 10-03-2018 10:55 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I think the Kudu min-max filter pushdown optimisation in C5.14+ would achieve this: https://issues.apache.org/jira/browse/IMPALA-4252

Created 10-03-2018 10:55 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I think the Kudu min-max filter pushdown optimisation in C5.14+ would achieve this: https://issues.apache.org/jira/browse/IMPALA-4252