Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: Cannot start an HA namenode with name dirs tha...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Cannot start an HA namenode with name dirs that need recovery. Dir: Storage Directory /data0/dfs/nn

- Labels:

-

Cloudera Manager

-

HDFS

Created on 11-02-2017 09:30 AM - edited 11-02-2017 03:17 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

After attempting a large "insert as select" operation, I returned this morning to find that the query had failed and I could not issue any commands to my cluster this morning (e.g. hdfs dfs -df -h).

When logging into CM, I noticed that most nodes had an health issue related to "clock offset".

At this point, I am only concerned about trying to recover the data on HDFS. I am happy to build a new cluster (given that I am on CDH4, anyway) and migrate the data to that new cluster.

I tried to restart the cluster but the start-up step failed. Specifically, it failed to start the HDFS service and reported this error in Log Details:

Exception in namenode join

java.io.IOException: Cannot start an HA namenode with name dirs that need recovery. Dir: Storage Directory /data0/dfs/nn state: NOT_FORMATTED

at org.apache.hadoop.hdfs.server.namenode.FSImage.recoverStorageDirs(FSImage.java:295)

at org.apache.hadoop.hdfs.server.namenode.FSImage.recoverTransitionRead(FSImage.java:207)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFSImage(FSNamesystem.java:741)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFromDisk(FSNamesystem.java:531)

at org.apache.hadoop.hdfs.server.namenode.NameNode.loadNamesystem(NameNode.java:403)

at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:445)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:621)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:606)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1177)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1241)

Below are some more details that I have gathered about the situation.

- I am running CDH4

- There are two namenodes in the cluster. One reporting the errors above and another one which reports

Unable to trigger a roll of the active NN

java.net.ConnectException: Call From ip-10-0-0-154.ec2.internal/10.0.0.154 to ip-10-0-0-157.ec2.internal:8022 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused - If I log into the first name node, the one with the initial error, and try to look at the namenode directory, it is completely empty

ubuntu@ip-10-0-0-157:~$ sudo ls -a /data0/dfs/nn/ . .. ubuntu@ip-10-0-0-157:~$ sudo ls -a /data1/dfs/nn/ . ..

- If I log into the other name node, it has data in those directories

ubuntu@ip-10-0-0-154:~$ sudo ls -lah /data0/dfs/nn/ total 12K drwx------ 3 hdfs hadoop 4.0K Nov 2 22:20 . drwxr-xr-x 3 root root 4.0K Jun 6 2015 .. drwxr-xr-x 2 hdfs hdfs 4.0K Nov 2 09:49 current ubuntu@ip-10-0-0-154:~$ sudo ls -lah /data1/dfs/nn/ total 12K drwx------ 3 hdfs hadoop 4.0K Nov 2 22:20 . drwxr-xr-x 3 root root 4.0K Jun 6 2015 .. drwxr-xr-x 2 hdfs hdfs 4.0K Nov 2 09:49 current ubuntu@ip-10-0-0-154:~$ sudo ls -lah /data0/dfs/nn/current total 13M drwxr-xr-x 2 hdfs hdfs 4.0K Nov 2 09:49 . drwx------ 3 hdfs hadoop 4.0K Nov 2 22:20 .. -rw-r--r-- 1 hdfs hdfs 697 Jun 6 2015 edits_0000000000000000001-0000000000000000013 -rw-r--r-- 1 hdfs hdfs 1.0M Jun 6 2015 edits_0000000000000000014-0000000000000000913 -rw-r--r-- 1 hdfs hdfs 549 Jun 6 2015 edits_0000000000000000914-0000000000000000923 -rw-r--r-- 1 hdfs hdfs 1.3K Jun 6 2015 edits_0000000000000000924-0000000000000000937 -rw-r--r-- 1 hdfs hdfs 1.3K Jun 6 2015 edits_0000000000000000938-0000000000000000951 -rw-r--r-- 1 hdfs hdfs 1.3K Jun 6 2015 edits_0000000000000000952-0000000000000000965 -rw-r--r-- 1 hdfs hdfs 1.8K Jun 6 2015 edits_0000000000000000966-0000000000000000987 -rw-r--r-- 1 hdfs hdfs 1.3K Jun 6 2015 edits_0000000000000000988-0000000000000001001 -rw-r--r-- 1 hdfs hdfs 1.3K Jun 6 2015 edits_0000000000000001002-0000000000000001015 -rw-r--r-- 1 hdfs hdfs 1.3K Jun 6 2015 edits_0000000000000001016-0000000000000001029 -rw-r--r-- 1 hdfs hdfs 1.3K Jun 6 2015 edits_0000000000000001030-0000000000000001043 -rw-r--r-- 1 hdfs hdfs 1.3K Jun 6 2015 edits_0000000000000001044-0000000000000001057 -rw-r--r-- 1 hdfs hdfs 1.3K Jun 6 2015 edits_0000000000000001058-0000000000000001071 -rw-r--r-- 1 hdfs hdfs 1.3K Jun 6 2015 edits_0000000000000001072-0000000000000001085 -rw-r--r-- 1 hdfs hdfs 1.3K Jun 6 2015 edits_0000000000000001086-0000000000000001099 -rw-r--r-- 1 hdfs hdfs 1.3K Jun 6 2015 edits_0000000000000001100-0000000000000001113 -rw-r--r-- 1 hdfs hdfs 1.3K Jun 6 2015 edits_0000000000000001114-0000000000000001127 -rw-r--r-- 1 hdfs hdfs 1.3K Jun 6 2015 edits_0000000000000001128-0000000000000001141 -rw-r--r-- 1 hdfs hdfs 1.3K Jun 6 2015 edits_0000000000000001142-0000000000000001155 -rw-r--r-- 1 hdfs hdfs 1.3K Jun 6 2015 edits_0000000000000001156-0000000000000001169 -rw-r--r-- 1 hdfs hdfs 1.0M Jun 6 2015 edits_inprogress_0000000000000001170 -rw-r--r-- 1 hdfs hdfs 5.1M Nov 2 08:49 fsimage_0000000000024545561 -rw-r--r-- 1 hdfs hdfs 62 Nov 2 08:49 fsimage_0000000000024545561.md5 -rw-r--r-- 1 hdfs hdfs 5.1M Nov 2 09:49 fsimage_0000000000024545645 -rw-r--r-- 1 hdfs hdfs 62 Nov 2 09:49 fsimage_0000000000024545645.md5 -rw-r--r-- 1 hdfs hdfs 5 Jun 6 2015 seen_txid -rw-r--r-- 1 hdfs hdfs 170 Nov 2 09:49 VERSION

Created 11-13-2017 11:31 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I continued the resolution of this issue in another thread specific to the error:

ls: Operation category READ is not supported in state standby

The solution is marked on that thread, however, a quick summary was that I needed to add the Failover Controller role to a node in my cluster, enable Automatic Failover, and then restart the cluster for it all to kick in.

Created 11-02-2017 09:57 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@epowell, I moved this from Cloudera Manager to HDFS Community since the error is coming out of HDFS itself. This should help you get better and quicker assistance.

-Ben

Created on 11-03-2017 06:31 AM - edited 11-03-2017 06:32 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

First : save the namenode dir content.

Second : can you launch the second namenode only ? Does it start ?

If yes, you should be able to start the data-nodes and get access to the data.

Created 11-03-2017 07:10 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you for your response. I think the other namenode may be started but things are such a mess that I can't be sure.

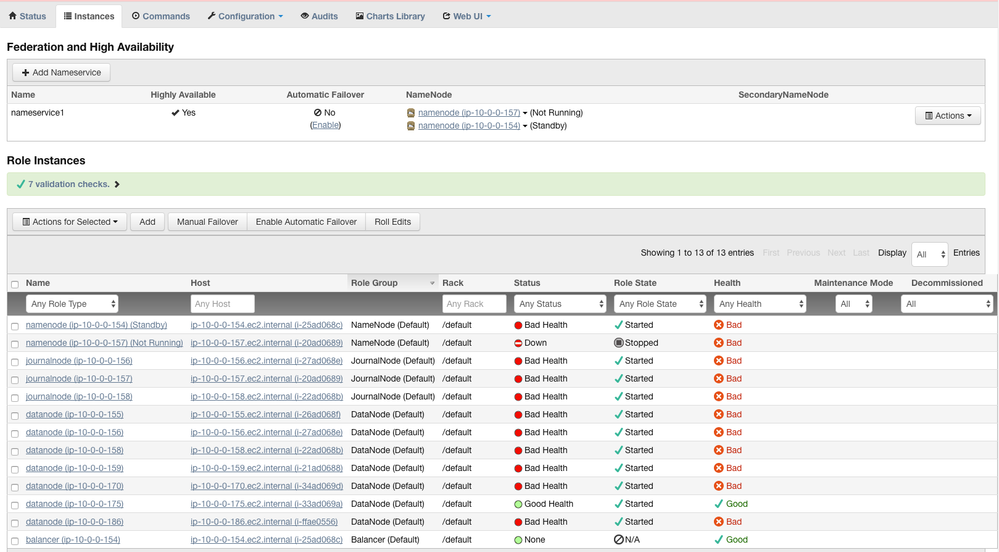

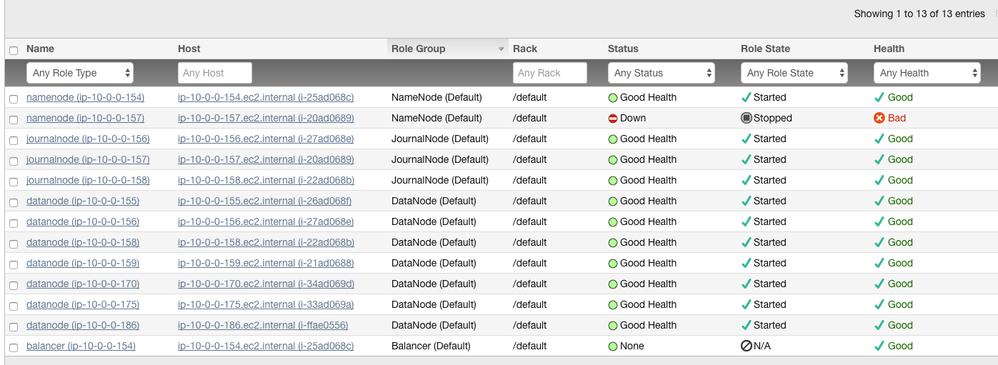

I've attached a screenshot from CM. This is after starting Zookeeper and HDFS. (I didn't attempt to start the entire cluster this time but I'm pretty sure the result is the same.)

The first line seems to show the other namenode as 'Started' (as well as the data nodes). However, if I go to that node and attempt to run any `hdfs` commands, here is what I get:

ubuntu@ip-10-0-0-154:~$ hdfs dfs -ls / 17/11/03 14:20:15 WARN retry.RetryInvocationHandler: Exception while invoking getFileInfo of class ClientNamenodeProtocolTranslatorPB after 1 fail over attempts. Trying to fail over after sleeping for 1126ms. 17/11/03 14:20:16 WARN retry.RetryInvocationHandler: Exception while invoking getFileInfo of class ClientNamenodeProtocolTranslatorPB after 2 fail over attempts. Trying to fail over after sleeping for 1373ms. 17/11/03 14:20:17 WARN retry.RetryInvocationHandler: Exception while invoking getFileInfo of class ClientNamenodeProtocolTranslatorPB after 3 fail over attempts. Trying to fail over after sleeping for 4470ms.

Created 11-03-2017 07:16 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Just thought of two quick things to add to the discussion:

First, I crossposted this issue on StackOverflow late yesterday prior to receiving any responses on this thread. I will update both posts with the solution to prevent any duplicated effort. (That thread has not received any responses so far).

Second, in the screenshot I noticed that in the 'Federation and High Availability' section is an item that controls 'Automatic Failover' and in my case it says it is not enabled. This sheds some light on why my cluster is still down despite all the documentation on HA mentioning the automatic failover feature.

Should I just try clicking 'Enable' for Automatic Failover? (I have made sure to back everything in the current/ dir of the other namenode.)

Created 11-03-2017 08:39 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Before fixing the situation, I would try to start only one namenode (the one with data in its directory).

It should be considered as the active namenode if he is alone as long as it can start successfuly.

Created 11-03-2017 09:01 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks a bunch for your help thus far, @mathieu.d!

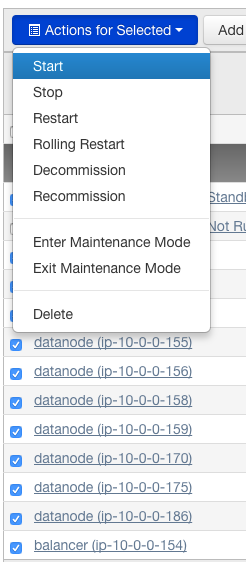

Based on your recommendation of starting only the good namenode, I have done the following:

- Stop the cluster - In CM, I went to Services -> All Services, Actions -> Stop. This stopped the only two running services, Zookeeper and HDFS.

- Start all HDFS instances except the troubled namenode - I went back to the list of instances in the HDFS page, selected all instances except the troubled namenode and started them (see screenshot)

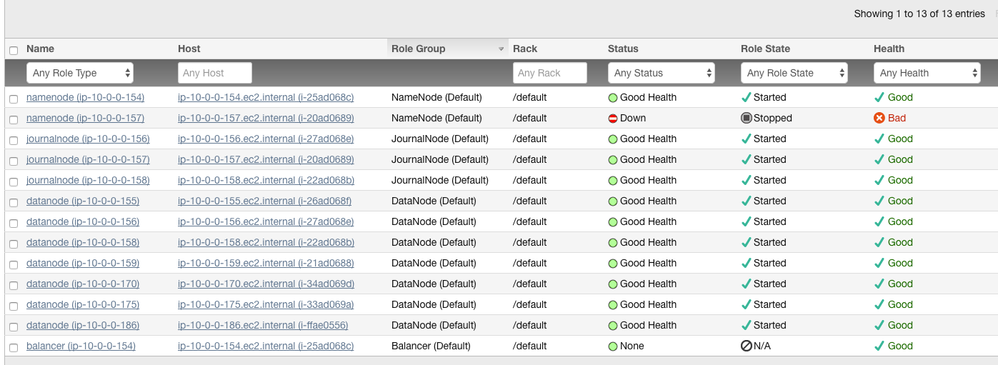

Things are looking much, much better after that (screenshot showing most instances in Good Health).

However, when I return to the namenode (or any node for that matter) and attempt to run an hdfs command, I still get the same error:

ubuntu@ip-10-0-0-154:~/backup/data1$ hdfs dfs -ls / 17/11/03 16:02:38 WARN retry.RetryInvocationHandler: Exception while invoking getFileInfo of class ClientNamenodeProtocolTranslatorPB after 1 fail over attempts. Trying to fail over after sleeping for 1248ms. 17/11/03 16:02:39 WARN retry.RetryInvocationHandler: Exception while invoking getFileInfo of class ClientNamenodeProtocolTranslatorPB after 2 fail over attempts. Trying to fail over after sleeping for 1968ms. 17/11/03 16:02:41 WARN retry.RetryInvocationHandler: Exception while invoking getFileInfo of class ClientNamenodeProtocolTranslatorPB after 3 fail over attempts. Trying to fail over after sleeping for 2614ms.

Should I have tried to start all the services on the cluster (e.g. Zookeeper) as well as the HDFS service? If so, I'm not sure which order the services should be started because I usually just use the overall cluster start action.

Created 11-03-2017 10:12 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks a bunch for your help thus far, @mathieu.d!

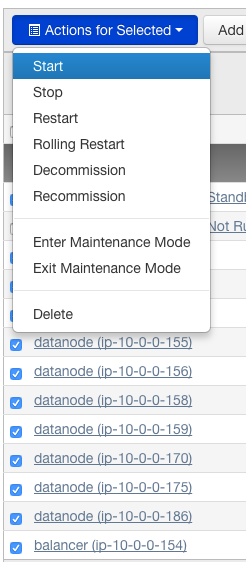

Based on your recommendation of starting only the namenode with data, I have done the following:

- Stop the cluster - In CM, I went to Services -> All Services, Action -> Stop. This stopped the only two running services, Zookeeper and HDFS.

- Start all the hdfs instances except the namenode without data - In the hdfs instances screen, I selected everything except the troubled namenode and started them (see screenshot).

After doing this, the situation seemed much better. Most instances were now in Good Health.

However, hdfs commands still fail on the call to getInfo:

ubuntu@ip-10-0-0-154:~/backup/data1$ hdfs dfs -ls / 17/11/03 17:19:50 WARN retry.RetryInvocationHandler: Exception while invoking getFileInfo of class ClientNamenodeProtocolTranslatorPB after 1 fail over attempts. Trying to fail over after sleeping for 595ms. 17/11/03 17:19:50 WARN retry.RetryInvocationHandler: Exception while invoking getFileInfo of class ClientNamenodeProtocolTranslatorPB after 2 fail over attempts. Trying to fail over after sleeping for 1600ms. 17/11/03 17:19:52 WARN retry.RetryInvocationHandler: Exception while invoking getFileInfo of class ClientNamenodeProtocolTranslatorPB after 3 fail over attempts. Trying to fail over after sleeping for 4983ms.

I am also wondering whether I should have started all the services instead of just HDFS. Normally, I do that through the overall cluster start action but that is starting the troubled namenode so I was trying to find a workaround.

Thanks in advance!

Created 11-03-2017 10:15 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

hdfs dfs -ls hdfs://host:8020/

Created on 11-03-2017 10:19 AM - edited 11-03-2017 10:23 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

10.0.0.154 is the namenode with data that is Started according to CM.

From that node, I used 'localhost' as the host. It returned connection refused.

ubuntu@ip-10-0-0-154:~/backup/data1$ hdfs dfs -ls hdfs://localhost:8020/ ls: Call From ip-10-0-0-154.ec2.internal/10.0.0.154 to localhost:8020 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

EDIT:

I have just tried with the actual ip address and it was different. I also get the same error when trying the command on other nodes.

ubuntu@ip-10-0-0-154:~/backup/data1$ hdfs dfs -ls hdfs://10.0.0.154:8020/ ls: Operation category READ is not supported in state standby