Volume failure reported while disks seem fine

- Labels: HDFS

Created 12-16-2014 05:00 AM

I just upgraded our cluster from CDH 5.0.1 to 5.2.1, using parcels and following the provided instructions.

After the upgrade finished, the health test "Data Directory Status" became critical for one of the data nodes. The reported error message is "The DataNode has 1 volume failure(s)". By running 'hdfs dfsadmin -report' I can also confirm that the available HDFS space on that node is approximately 4 TB less than on the other nodes, indicating that one of the disks is not being used.

However, when checking the status of the actual disks and the regular file system, we cannot find anything that seems wrong. All disks are mounted and seem to be working as they should. There is also an in_use.lock file in the dfs/nn directory on all of the disks.

How can I get more detailed information about which volume the DataNode is complaining about, and what the issue might be?

Best Regards

Knut Nordin

Created 12-17-2014 12:25 AM

- Host Inspector in CM

- CM agent logs under /var/log/cloudera-scm-agent

Gautam Gopalakrishnan

Created 12-17-2014 12:49 AM

Thank you for the suggestions!

Host Inspector reports that everything is OK. I have checked the agent logs and also the DataNode log, but cannot find anything that gives a clue about this particular error.

Is there a command for making the DataNode report which physical directories it is using and what it thinks their status is?

Created 12-18-2014 02:44 AM

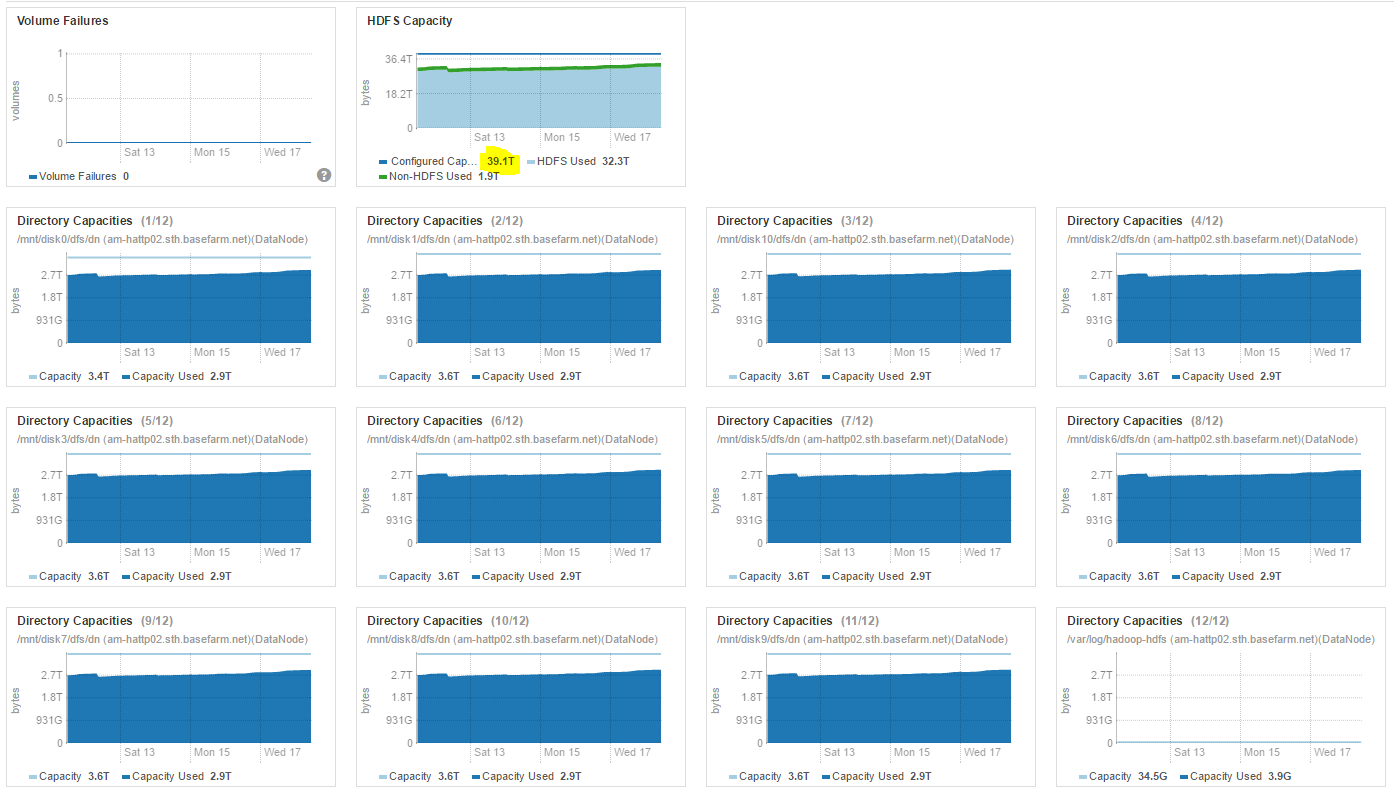

Not sure if this is helpful, but here are some charts of volume failures and disk capacity for the faulty node, and for a healthy one as reference. The strange thing is that all disks seem to be in use on both nodes, but the total configured capacity is different.

Knut

Created 12-18-2014 04:49 PM

Next, go to Cloudera Management services -> event search -> critical. See if the volume-related error is listed. If so, please paste the details here.

Gautam Gopalakrishnan

Created 12-18-2014 11:53 PM

I can confirm that CM is running version 5.2.1, so that should not be an issue.

When searching for critical log messages I came across these:

It appears that another namenode 56559@datanode_hostname has already locked the storage directory

datanode_hostname:50010:DataXceiver error processing WRITE_BLOCK operation src: /10.8.19.28:37029 dst: /10.8.19.14:50010

We have only ever had one namenode, and none on the datanode host, so I am a bit confused by what the first message really means. The second one I suspect might be a secondary failure of the first one?

Regards

Knut

Created 01-02-2015 12:14 AM

The source of this error has been found. It turned out that /etc/fstab on this node was badly configured, so that one of the disks was mounted twice as two separate data directories. Interestingly, this had not caused any visible errors until we upgraded to CDH 5.2.1. It is nice that this version pointed it out to us, though.

Created 01-02-2015 12:24 AM

Gautam Gopalakrishnan

Created 05-17-2016 07:13 AM

Hi,

Can you please tell us how it was pointed out? Where can we check whether this is the case?

regards

Created 12-10-2016 03:28 PM

> can you tell us please how it was pointed out? where to check if this is the case?

Here's one way of checking for duplicate mounts:

    mount | wc -l > a
    mount | sort -u | wc -l > b
    cmp a b

If there's no output, there are no duplicate mounts.
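Note that the comparison above only catches identical lines in the `mount` output. A disk mounted at two different mount points, as described earlier in this thread, produces two distinct lines and would probably slip through. Here is a minimal Python sketch that groups mount points by device instead. It assumes a Linux host where `/proc/mounts` lists the device in the first field and the mount point in the second; the function names are made up for illustration, and bind mounts may also show up as multiple mount points for one device.

```python
from collections import defaultdict


def devices_with_multiple_mount_points(mounts_text):
    """Return {device: [mount points]} for devices mounted at more than one place.

    Expects text in /proc/mounts format: device, mount point, type, options, ...
    """
    mount_points = defaultdict(list)
    for line in mounts_text.splitlines():
        fields = line.split()
        # Skip blank/malformed lines and pseudo file systems (proc, tmpfs, ...).
        if len(fields) < 2 or not fields[0].startswith("/dev/"):
            continue
        device, mount_point = fields[0], fields[1]
        if mount_point not in mount_points[device]:
            mount_points[device].append(mount_point)
    # Keep only the devices that appear at two or more mount points.
    return {dev: pts for dev, pts in mount_points.items() if len(pts) > 1}


def check_mounts_file(path="/proc/mounts"):
    """Print every device found at several mount points (path is an assumption)."""
    with open(path) as f:
        duplicates = devices_with_multiple_mount_points(f.read())
    for device, points in sorted(duplicates.items()):
        print(f"{device} is mounted at: {', '.join(points)}")
    return duplicates
```

Calling `check_mounts_file()` on the affected DataNode host should print nothing if every device is mounted only once. Any device it does print is worth comparing against the configured data directories and `/etc/fstab`.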

HTH