Spark 2.1 didn't work in CDH 5.12

Labels: Apache Spark, Cloudera Manager

Created on 07-27-2017 09:46 PM - edited 09-16-2022 04:59 AM

I have installed and activated Spark 2.1 from the parcel on CDH 5.12, but it doesn't seem to be working or configured properly. Running `spark2-shell` gives the exception below:

```
$ spark2-shell
Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/fs/FSDataInputStream
	at org.apache.spark.deploy.SparkSubmitArguments$$anonfun$mergeDefaultSparkProperties$1.apply(SparkSubmitArguments.scala:118)
	at org.apache.spark.deploy.SparkSubmitArguments$$anonfun$mergeDefaultSparkProperties$1.apply(SparkSubmitArguments.scala:118)
	at scala.Option.getOrElse(Option.scala:121)
	at org.apache.spark.deploy.SparkSubmitArguments.mergeDefaultSparkProperties(SparkSubmitArguments.scala:118)
	at org.apache.spark.deploy.SparkSubmitArguments.<init>(SparkSubmitArguments.scala:104)
	at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:119)
	at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.fs.FSDataInputStream
	at java.net.URLClassLoader$1.run(URLClassLoader.java:366)
	at java.net.URLClassLoader$1.run(URLClassLoader.java:355)
	at java.security.AccessController.doPrivileged(Native Method)
	at java.net.URLClassLoader.findClass(URLClassLoader.java:354)
	at java.lang.ClassLoader.loadClass(ClassLoader.java:425)
	at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:308)
	at java.lang.ClassLoader.loadClass(ClassLoader.java:358)
```

It seems to be looking for a Hadoop class that isn't available on its classpath. My Hadoop version is:

```
$ hadoop version
Hadoop 2.6.0-cdh5.12.0
Subversion http://github.com/cloudera/hadoop -r dba647c5a8bc5e09b572d76a8d29481c78d1a0dd
Compiled by jenkins on 2017-06-29T11:31Z
Compiled with protoc 2.5.0
From source with checksum 7c45ae7a4592ce5af86bc4598c5b4
This command was run using /opt/cloudera/parcels/CDH-5.12.0-1.cdh5.12.0.p0.29/jars/hadoop-common-2.6.0-cdh5.12.0.jar
```

Do I need to configure anything explicitly?

Also, there are no files in `/etc/spark2/conf`, which suggests to me that Spark 2 is not configured properly.
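A quick way to confirm the empty-config symptom is sketched below. It is a hypothetical helper (`check_spark2_conf` is not a Cloudera tool), and it assumes that a deployed Spark 2 client configuration normally includes a `spark-env.sh` in `/etc/spark2/conf`; treat that file name as an assumption to check against your own working cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether a Spark 2 client config directory
# looks deployed. Assumption: a deployed client config contains spark-env.sh.
# Return codes: 0 = looks ok, 1 = empty or incomplete, 2 = directory missing.
check_spark2_conf() {
  local conf_dir="${1:-/etc/spark2/conf}"
  # -d follows symlinks, so an alternatives-managed link to a directory passes.
  if [ ! -d "$conf_dir" ]; then
    echo "missing: $conf_dir does not exist"
    return 2
  fi
  # ls -A lists everything except . and .., so empty output means no files.
  if [ -z "$(ls -A "$conf_dir")" ]; then
    echo "empty: $conf_dir has no files"
    return 1
  fi
  if [ ! -f "$conf_dir/spark-env.sh" ]; then
    echo "incomplete: no spark-env.sh in $conf_dir"
    return 1
  fi
  echo "ok: $conf_dir"
  return 0
}
```

Usage: source the file, then run `check_spark2_conf` (defaults to `/etc/spark2/conf`) or pass another directory as the first argument.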

Created 07-27-2017 10:49 PM

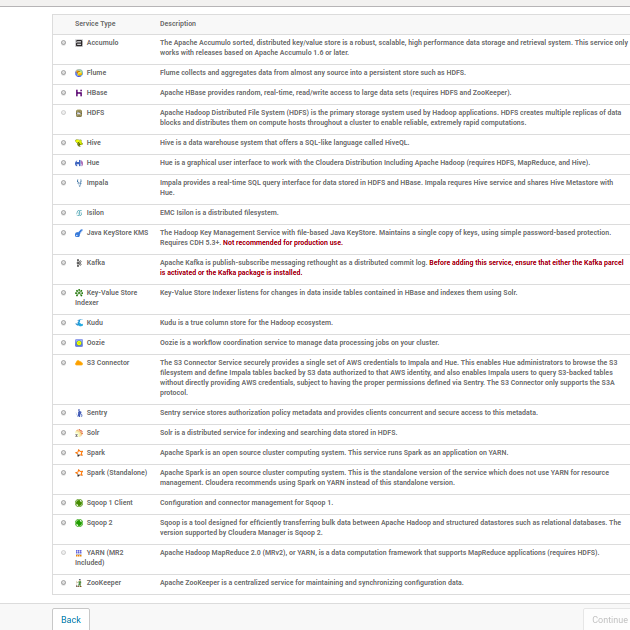

On the cluster screen, click the Action menu and select 'Add a Service'. Follow the wizard and add Spark2 from the list of available services.
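Why adding the service should matter, under an assumption: if the Spark 2 parcel is packaged like Apache Spark's "Hadoop free" build, the launcher only sees the Hadoop jars (including the one that holds `org.apache.hadoop.fs.FSDataInputStream`) through `SPARK_DIST_CLASSPATH`, which is normally set in `spark-env.sh` inside the config directory. Apache Spark's documentation for that build shows the minimal form below. On a Cloudera Manager cluster this file is expected to be generated when the service's client configuration is deployed, so this fragment is for understanding the error rather than for hand-editing.

```shell
# conf/spark-env.sh (sketch, following Apache Spark's "Hadoop free" build docs)
# Put every jar reported by `hadoop classpath` on Spark's classpath.
export SPARK_DIST_CLASSPATH=$(hadoop classpath)
```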

Created 07-27-2017 11:52 PM

@mbigelow I tried to add it, but there is no option in the Cloudera Manager UI to add anything called 'Spark2'. It only lists 'Spark' and 'Spark(Standalone)'. Please find the screenshot attached.

Created 07-28-2017 07:59 AM

Created 07-28-2017 09:58 AM

The `/opt/cloudera/csd` directory was empty. I downloaded the Spark 2 CSD (Custom Service Descriptor), configured it, and then re-added the service. That resolved the exception above.
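The CSD step above can be sketched as a small shell function. `install_csd` is a hypothetical name, the jar path is whatever CSD file you downloaded (no specific file name is assumed), and the ownership and mode (`cloudera-scm:cloudera-scm`, 644) are an assumption based on how Cloudera's docs generally describe CSD installation; verify them for your Cloudera Manager version.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy a downloaded CSD jar into Cloudera Manager's CSD dir.
# Assumption: CSD jars should be mode 644 and owned by cloudera-scm.
# Prints the installed path on success; returns 1 on any failure.
install_csd() {
  local jar="$1"
  local csd_dir="${2:-/opt/cloudera/csd}"
  if [ ! -f "$jar" ]; then
    echo "no such jar: $jar" >&2
    return 1
  fi
  mkdir -p "$csd_dir" || return 1
  local dest
  dest="$csd_dir/$(basename "$jar")"
  cp "$jar" "$dest" || return 1
  chmod 644 "$dest" || return 1
  # Only attempt chown when running as root and the cloudera-scm user exists.
  if [ "$(id -u)" -eq 0 ] && id cloudera-scm >/dev/null 2>&1; then
    chown cloudera-scm:cloudera-scm "$dest" || return 1
  fi
  echo "$dest"
}
```

After copying the jar, the Cloudera Manager Server generally has to be restarted (for example with `service cloudera-scm-server restart`) before Spark2 appears in the 'Add a Service' wizard.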