Datagen - Data Generator tool built for CDP

Created on 11-22-2022 09:01 PM - edited 01-23-2024 06:44 AM

Datagen lets you generate data on all services provided by Cloudera (HDFS, Hive, HBase, Ozone, Kafka, Kudu, Solr, local files), in several formats (CSV, JSON, Avro, Parquet, ORC).

Users can generate pre-defined data (such as customers or transactions) or create their own data model, which supports a wide variety of types.

Users can schedule data generation and set many configuration options (such as replication factor, keys, etc.).

Datagen is a basic web server exposing APIs that deploys natively as a service in CDP 7.1.7+.

Why?

There are many reasons to install and use Datagen. Here are some of them:

- Test your installation

- Benchmark your platform

- Reproduce another environment and its data (usually a production environment, in a build environment)

- Test your code on sample data

- Test your code on data at scale before going to production

- Make a Proof Of Concept cluster with test data

- Test one service or compare services for one use case

What?

Datagen comes as a new side service that you can easily install using publicly available CSD & Parcel built for CDP 7.1.7+ (7.1.7, 7.1.8 and 7.1.9).

It is a web server, exposing APIs to let users: generate data, schedule data generation, follow data generation progress, get health and metrics.

It is fully integrated with Cloudera Manager, so you can generate pre-defined data in one click.

To generate data, Datagen takes a “data model” as input: a JSON file describing what kind of data should be generated and where.

It comes with pre-defined data model files (customer and transaction, for example), but anyone can define their own.

A data model file allows a wide variety of data to be generated, for example the name of a person or a city (with its latitude and longitude). Some generated column values can depend on the values of other columns. It is fully extensible: users can predefine values or load them from a CSV file.

Installation

First, install the CSD:

- To find the right version of your CSD, check this URL: https://datagen-repo.s3.eu-west-3.amazonaws.com/index.html

- On the Cloudera Manager host, download it with curl or wget:

wget https://datagen-repo.s3.eu-west-3.amazonaws.com/0.4.13/7.1.9.3/csd/DATAGEN-0.4.13.7.1.9.3.jar

- Copy the downloaded jar file into /opt/cloudera/csd/:

cp DATAGEN-*.jar /opt/cloudera/csd/

- Restart the Cloudera Manager Server:

systemctl restart cloudera-scm-server

Then install the parcel with the following procedure:

- To find the right version of your parcel, check this URL: https://datagen-repo.s3.eu-west-3.amazonaws.com/index.html

- Go to Cloudera Manager, in Parcels > Parcel Repositories & Network:

- Add this public repository to Cloudera Manager:

https://datagen-repo.s3.eu-west-3.amazonaws.com/0.4.13/7.1.9.3/parcels/

- Download, distribute, and activate the newly detected DATAGEN parcel
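The CSD and parcel URLs above follow the same pattern, so they can be derived from the Datagen and CDP versions. Here is a minimal Python sketch, assuming the layout seen in this article's two URLs. The helper name `datagen_urls` is hypothetical, and other releases may be laid out differently, so always confirm against the repository index.

```python
BASE = "https://datagen-repo.s3.eu-west-3.amazonaws.com"


def datagen_urls(datagen_version: str, cdp_version: str) -> dict:
    """Build the CSD jar URL and parcel repository URL for one release.

    The path layout is inferred from the URLs quoted in this article.
    """
    prefix = f"{BASE}/{datagen_version}/{cdp_version}"
    return {
        "csd": f"{prefix}/csd/DATAGEN-{datagen_version}.{cdp_version}.jar",
        "parcels": f"{prefix}/parcels/",
    }


urls = datagen_urls("0.4.13", "7.1.9.3")
print(urls["csd"])
print(urls["parcels"])
```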

Now you can install this new service with a simple procedure:

- In Cloudera Manager, go to your cluster: Actions > Add a Service

- If you have Ranger enabled, select it as a dependency.

- Then select where to place the Datagen servers (it is best to start with only one and scale up later if needed).

- Review the changes. All values should be filled in automatically, but it is recommended to set the Ranger properties properly: URL, admin user, and password. They are used during the initialization phase to create the base Ranger policies needed to push data, and they can be removed later.

Finally, restart CMS before going on: Clusters > Cloudera Management Service, then Actions > Restart.

You should now have a running Datagen service composed of one server.

N.B.: If health tests are not set because of an “Invalid configuration role for Datagen Web Server” warning, you can safely suppress this warning so that health tests run properly.

Basic Data Generation

You can now launch a data generation easily.

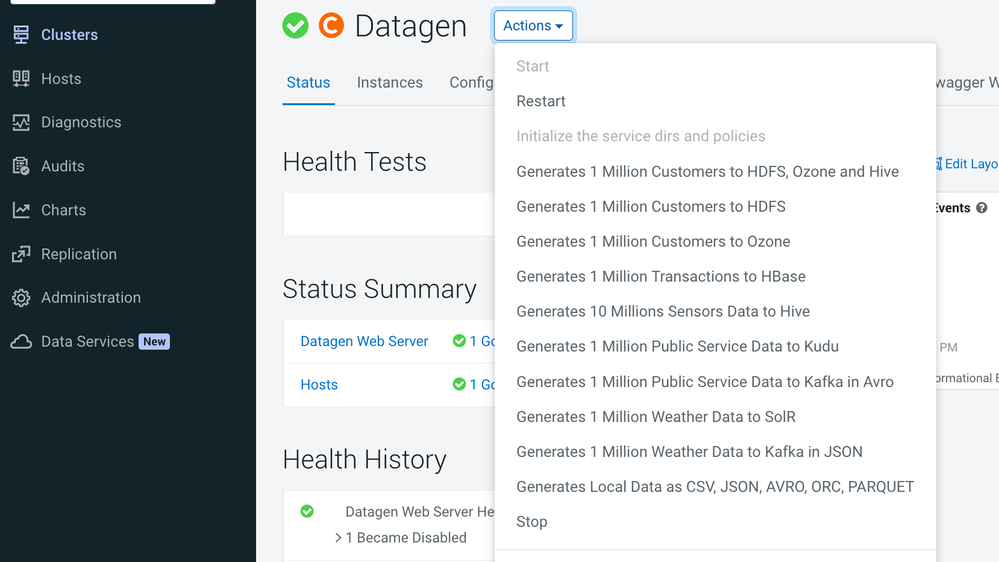

Go to the Datagen service and click on Actions. You will find many options to generate pre-defined data into different services.

If you click on: Generates 1 Million Customers to HDFS

This launches a Cloudera Manager command that makes several API calls to the Datagen web server. It generates data representing customers from different countries and pushes it to HDFS as Parquet files.

You can check the result from a shell with a logged-in user (optionally the datagen one):

hdfs dfs -ls /user/datagen/hdfs/customer/

If you click on: Generates 1 Million Sensors Data to Hive

This generates data into multiple Hive tables. If you log in to Hive through Hue or beeline, you can see the following:

0: jdbc:hive2://ccycloud-2.lisbon.root.hwx.si> show databases;

...

INFO : OK

+---------------------+

| database_name |

+---------------------+

| datagen_industry |

| default |

| information_schema |

| sys |

+---------------------+

0: jdbc:hive2://ccycloud-2.lisbon.root.hwx.si> use datagen_industry;

...

0: jdbc:hive2://ccycloud-2.lisbon.root.hwx.si> show tables;

...

INFO : OK

+------------------+

| tab_name |

+------------------+

| plant |

| plant_tmp |

| sensor |

| sensor_data |

| sensor_data_tmp |

| sensor_tmp |

+------------------+

6 rows selected (0.059 seconds)

0: jdbc:hive2://ccycloud-2.lisbon.root.hwx.si> select * from plant limit 2;

...

INFO : OK

+-----------------+--------------------+------------+-------------+----------------+

| plant.plant_id | plant.city | plant.lat | plant.long | plant.country |

+-----------------+--------------------+------------+-------------+----------------+

| 1 | Chotebor | 49,7208 | 15,6702 | Czechia |

| 2 | Tecpan de Galeana | 17,25 | -100,6833 | Mexico |

+-----------------+--------------------+------------+-------------+----------------+

2 rows selected (0.361 seconds)

0: jdbc:hive2://ccycloud-2.lisbon.root.hwx.si> select * from sensor limit 2;

...

INFO : OK

+-------------------+---------------------+------------------+

| sensor.sensor_id | sensor.sensor_type | sensor.plant_id |

+-------------------+---------------------+------------------+

| 70001 | motion | 186 |

| 70002 | temperature | 535 |

+-------------------+---------------------+------------------+

2 rows selected (0.173 seconds)

0: jdbc:hive2://ccycloud-2.lisbon.root.hwx.si> select * from sensor_data limit 2;

...

INFO : OK

+------------------------+--------------------------------------+----------------------+

| sensor_data.sensor_id | sensor_data.timestamp_of_production | sensor_data.value |

+------------------------+--------------------------------------+----------------------+

| 88411 | 1665678228258 | 1895793134684555135 |

| 52084 | 1665678228259 | -621460457255314082 |

+------------------------+--------------------------------------+----------------------+

2 rows selected (0.189 seconds)
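The `timestamp_of_production` values look like Unix epoch timestamps in milliseconds. This is an assumption based on their 13-digit magnitude, not something the article states. Under that assumption, a short Python sketch converts one of the values above into a readable UTC date:

```python
from datetime import datetime, timedelta, timezone


def epoch_millis_to_utc(millis: int) -> datetime:
    """Interpret an integer as milliseconds since the Unix epoch (assumed unit)."""
    seconds, remainder = divmod(millis, 1000)
    # Integer arithmetic avoids floating-point rounding on the milliseconds.
    return datetime.fromtimestamp(seconds, tz=timezone.utc) + timedelta(milliseconds=remainder)


ts = epoch_millis_to_utc(1665678228258)
print(ts.isoformat())
```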

Configuration

Before digging into custom data generation, it is important to understand how Datagen is configured to generate data.

First, Datagen is fully configurable from Cloudera Manager: each service configuration can be overridden from CM.

By default, however, Datagen performs what is called auto-discovery: it uses CM to discover all services present in the cluster and retrieve the configuration required to write data into them.

You can also provide the configuration of any service directly in the API call that generates data into that service.

API

As mentioned earlier, Datagen is a web server exposing APIs to:

- Generate data

- Schedule data generation

- Follow up progress of data generation

- Get metrics

All these APIs are accessible with one user/password which by default is admin/admin.

Note that the web server uses TLS if auto-TLS is activated in your cluster.

To ease interaction with these APIs, a Swagger UI is available. Its link is accessible directly from Cloudera Manager, in Datagen > Datagen Swagger Web UI.

Custom Data Generation

Now that you are familiar with Datagen, it’s time to use its full power by creating your own data.

Pre-built models are bundled with the parcel, so they are located on all machines in /opt/cloudera/parcels/DATAGEN/models/.

A model file is composed of four sections:

{

"Fields": [

],

"Table_Names": [

],

"Primary_Keys": [

],

"Options": [

]

}

- Fields lists all the fields (columns) you want to generate, with their type, etc.

- Table_Names is an array of keys/values defining where data should be generated

- Primary_Keys is an array of keys/values defining which primary keys are used for Kafka, Kudu, and HBase

- Options is an array of keys/values defining specific properties (such as replication factor, buffer, etc.)
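As a quick sanity check before uploading a model, the four-section skeleton described above can be verified locally. The Python sketch below only mirrors that skeleton. Whether Datagen itself requires all four sections to be present is an assumption, and `missing_sections` is a hypothetical helper name.

```python
REQUIRED_SECTIONS = ("Fields", "Table_Names", "Primary_Keys", "Options")


def missing_sections(model: dict) -> list:
    """Return the top-level sections that are absent or are not JSON arrays."""
    return [s for s in REQUIRED_SECTIONS if not isinstance(model.get(s), list)]


skeleton = {"Fields": [], "Table_Names": [], "Primary_Keys": [], "Options": []}
print(missing_sections(skeleton))
print(missing_sections({"Fields": []}))
```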

List of all possible Table Names is here: https://frischhwc.github.io/datagen/data-generation/models#table_names

List of all possible Primary Keys is here: https://frischhwc.github.io/datagen/data-generation/models#primary_keys

List of all possible Options is here: https://frischhwc.github.io/datagen/data-generation/models#options

In case of any doubt, refer to the full documentation available here: https://frischhwc.github.io/datagen/data-generation/models or use git repository documentation here: https://github.com/frischHWC/datagen#data-generated.

You can also browse the full-model.json located on your cluster at /opt/cloudera/parcels/DATAGEN/models/full-model.json. It contains all possible parameters and an exhaustive list of the different fields.

Fields can be:

- Basic: String, long, increment, etc.

- Advanced: City, IP, Name, etc.

- Limited in size: length for a string/byte array, etc., or min and max for a long/int/float, etc.

- Set to specific values, optionally with a different probability for each of these values

- Filtered to render only values from some countries if it is a Name, a City, a CSV file

- Derived from another field: latitude/longitude from a city, another column of a csv file

- Computed from other fields values

Let’s create our own custom data model.

Imagine we want to model our employee database for Italy. We will need:

- A name (Italian if possible)

- A city in Italy where the employee is located

- An email

- An ID

- Date of arrival (let’s say in the last 10 years)

- A department among: "HR", "CONSULTING", "FINANCE", "SALES", "ENGINEERING", "ADMINISTRATION", "MARKETING"

Let’s go through each field, one by one.

Name (with a filter on Italy, to get only Italian Names):

{

"name": "name",

"type": "NAME",

"filters": ["Italy"]

}

City (with a filter on Italy, to get only Italian Cities):

{

"name": "city",

"type": "CITY",

"filters": [

"Italy"

]

},

Email (which is made with the concatenation of name + id):

{

"name": "email",

"type": "STRING",

"conditionals": {

"injection": "${name}.${employee_id}@our_awesome_company.it"

}

},
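To see what this injection pattern produces, the same `${field}` placeholders can be expanded locally. This is only an illustration using Python's standard `string.Template`, which happens to share the `${...}` syntax. It is not how Datagen itself resolves injections, and the sample values are taken from the model test output shown later in this article.

```python
from string import Template

# Same pattern as the "injection" value of the email field.
injection = Template("${name}.${employee_id}@our_awesome_company.it")

# Sample values for the two referenced fields.
row = {"name": "Judith", "employee_id": 345689}

email = injection.substitute(row)
print(email)
```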

Date of Arrival (between 1st January 2012 and 1st November 2022):

{

"name": "date_of_arrival",

"type": "BIRTHDATE",

"min": "1/1/2012",

"max": "1/11/2022"

},
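Because the description gives the upper bound as 1st November 2022, the value "1/11/2022" reads as day/month/year. A small Python sketch, assuming that order, parses both bounds and checks that the date returned by the model test later in this article (2017-01-29) falls between them. `parse_bound` is a hypothetical helper name.

```python
from datetime import date, datetime


def parse_bound(text: str) -> date:
    """Parse a day/month/year bound such as "1/11/2022" (assumed order)."""
    return datetime.strptime(text, "%d/%m/%Y").date()


low, high = parse_bound("1/1/2012"), parse_bound("1/11/2022")
sample = date(2017, 1, 29)
print(low, high, low <= sample <= high)
```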

Employee ID (starts at 345688 so that employee numbers always have 6 digits):

{

"name": "employee_id",

"type": "INCREMENT_INTEGER",

"min": 345688

},

The department:

{

"name": "department",

"type": "STRING",

"possible_values": [

"HR",

"CONSULTING",

"FINANCE",

"SALES",

"ENGINEERING",

"ADMINISTRATION",

"MARKETING"

]

}

We can now assemble these fields into a model file:

{

"Fields": [

{

"name": "name",

"type": "NAME",

"filters": [

"Italy"

]

},

{

"name": "city",

"type": "CITY",

"filters": [

"Italy"

]

},

{

"name": "email",

"type": "STRING",

"conditionals": {

"injection": "${name}.${employee_id}@our_awesome_company.it"

}

},

{

"name": "date_of_arrival",

"type": "BIRTHDATE",

"min": "1/1/2012",

"max": "1/11/2022"

},

{

"name": "employee_id",

"type": "INCREMENT_INTEGER",

"min": 345688

},

{

"name": "department",

"type": "STRING",

"possible_values": [

"HR",

"CONSULTING",

"FINANCE",

"SALES",

"ENGINEERING",

"ADMINISTRATION",

"MARKETING"

]

}

],

"Table_Names": [

{

"HIVE_HDFS_FILE_PATH": "/user/datagen/hive/employee_model_italy/"

},

{

"HIVE_DATABASE": "datagen_test"

},

{

"HIVE_TABLE_NAME": "employee_model_italy"

},

{

"HIVE_TEMPORARY_TABLE_NAME": "employee_model_it_tmp"

},

{

"AVRO_NAME": "datagenemployeeit"

}

],

"Primary_Keys": [

],

"Options": [

]

}

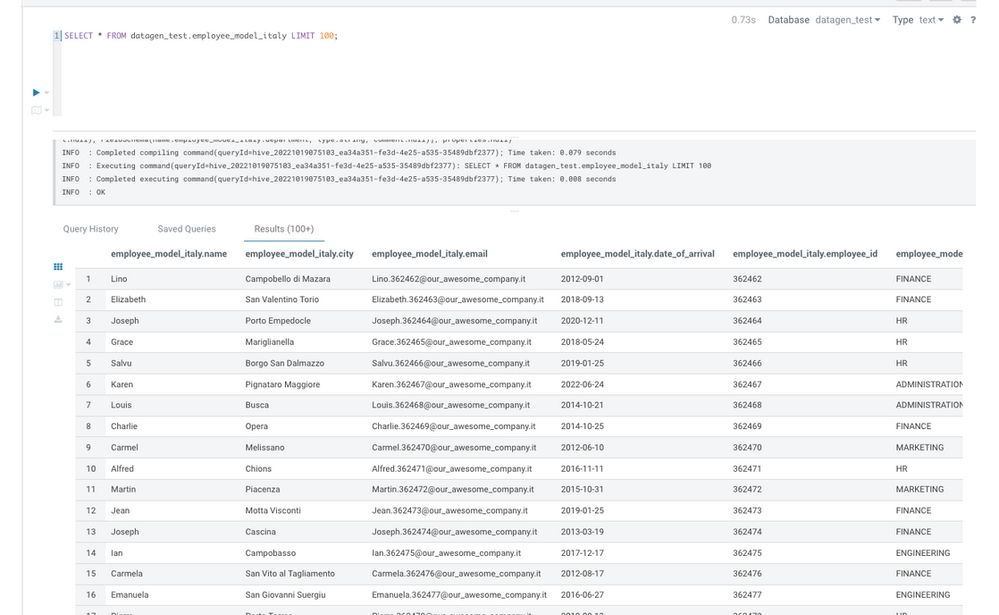

This model generates data into the Hive table employee_model_italy, in the database datagen_test.
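Since the email field refers to other fields by name, a typo in a placeholder is an easy mistake to make. The Python sketch below is a local check, not a Datagen feature: it lists placeholders that match no declared field. `undefined_references` is a hypothetical helper, and only the fields relevant to the injection are reproduced here.

```python
import re

PLACEHOLDER = re.compile(r"\$\{(\w+)\}")


def undefined_references(model: dict) -> set:
    """Return placeholder names used in injections that match no declared field."""
    fields = model.get("Fields", [])
    declared = {f["name"] for f in fields}
    used = set()
    for f in fields:
        injection = f.get("conditionals", {}).get("injection", "")
        used.update(PLACEHOLDER.findall(injection))
    return used - declared


model = {"Fields": [
    {"name": "name", "type": "NAME", "filters": ["Italy"]},
    {"name": "employee_id", "type": "INCREMENT_INTEGER", "min": 345688},
    {"name": "email", "type": "STRING", "conditionals": {
        "injection": "${name}.${employee_id}@our_awesome_company.it"}},
]}
print(undefined_references(model))
```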

We can now switch to the Swagger UI and test our model with the endpoint /model/test in model-tester-controller.

It returns:

{ "name" : "Judith", "city" : "Melfi", "email" : "Judith.345689@our_awesome_company.it", "date_of_arrival" : "2017-01-29", "employee_id" : "345689", "department" : "ENGINEERING" }
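The returned row can be checked against the model's intent. The following Python sketch parses the response above and verifies that it contains the six expected fields, that the email is built from the name and the ID, and that the department is one of the allowed values. These checks are illustrative and run locally.

```python
import json

# Response copied from the /model/test call above.
response = (
    '{ "name" : "Judith", "city" : "Melfi", '
    '"email" : "Judith.345689@our_awesome_company.it", '
    '"date_of_arrival" : "2017-01-29", "employee_id" : "345689", '
    '"department" : "ENGINEERING" }'
)
row = json.loads(response)

expected_fields = {"name", "city", "email", "date_of_arrival", "employee_id", "department"}
departments = {"HR", "CONSULTING", "FINANCE", "SALES", "ENGINEERING", "ADMINISTRATION", "MARKETING"}

fields_match = set(row) == expected_fields
email_ok = row["email"] == f'{row["name"]}.{row["employee_id"]}@our_awesome_company.it'
department_ok = row["department"] in departments
print(fields_match, email_ok, department_ok)
```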

Since the model has been validated, we can start the generation using the endpoint /datagen/hive with the following parameters: 10 batches of 10,000 rows with 10 threads and, of course, the uploaded model file.
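Outside the Swagger UI, the same call could be prepared from a script. The Python sketch below only builds the request URL and sends nothing. The host, the port, and the query parameter names (`batches`, `rows`, `threads`) are assumptions to confirm in the Swagger UI, and `hive_generation_url` is a hypothetical helper.

```python
from urllib.parse import urlencode


def hive_generation_url(base_url: str, batches: int, rows: int, threads: int) -> str:
    """Build the request URL; the query parameter names are assumed, not confirmed."""
    query = urlencode({"batches": batches, "rows": rows, "threads": threads})
    return f"{base_url}/datagen/hive?{query}"


# Placeholder host and port: replace with your Datagen server address.
url = hive_generation_url("https://datagen.example.com:4242", 10, 10_000, 10)
print(url)
```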

The API immediately returns a UUID that can be used to follow the progress with the endpoint /command/getCommandStatus.
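Following the progress amounts to polling that endpoint until the command reaches a final state. The Python sketch below shows the loop with the HTTP call abstracted behind a function so it can run offline. The status names ("RUNNING", "FINISHED", "FAILED") are hypothetical placeholders, not the values Datagen actually returns, so check the real response in the Swagger UI.

```python
import time
from typing import Callable


def wait_for_command(fetch_status: Callable[[str], str], command_id: str,
                     done=("FINISHED", "FAILED"), interval: float = 5.0,
                     max_polls: int = 120) -> str:
    """Poll fetch_status(command_id) until it returns a terminal status."""
    for _ in range(max_polls):
        status = fetch_status(command_id)
        if status in done:
            return status
        time.sleep(interval)
    raise TimeoutError(f"command {command_id} still running after {max_polls} polls")


# Stand-in for a real call to /command/getCommandStatus.
fake_statuses = iter(["RUNNING", "RUNNING", "FINISHED"])
result = wait_for_command(lambda _id: next(fake_statuses), "example-uuid", interval=0)
print(result)
```

In real use, `fetch_status` would wrap an authenticated HTTP request to the Datagen server and extract the status from its response.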

Once the generation has finished, use Hue or a beeline client to check the generated data.

Resources

- Public doc: https://frischhwc.github.io/datagen

- CSD & Parcel repository: https://datagen-repo.s3.eu-west-3.amazonaws.com/index.html

(Browse the different versions to find the one that suits your environment)

Created on 12-01-2022 01:40 AM

Hello Risch,

Thanks for your article.

However, I can't install the parcels. Are there versions for Ubuntu Bionic?

Thank you,

M.M

Created on 12-01-2022 02:08 AM

Hello,

Unfortunately, there are currently no parcels for Ubuntu Bionic.

But that could be in a future release.

Will keep you updated if so.

Thanks.

Created on 12-29-2022 10:43 AM

Hello.

Parcel is not available in the repository. Does anyone know if it will be restored?

https://datagen-repo.s3.eu-west-3.amazonaws.com/parcels/0.4.0/7.1.7.1000/

Regards

Created on 01-02-2023 07:38 AM

Hello @gestaobigdata ,

You should copy and paste this URL into Cloudera Manager. It will download the manifest.json and then be able to retrieve the parcels.

URL of the manifest.json if needed: https://datagen-repo.s3.eu-west-3.amazonaws.com/parcels/0.4.0/7.1.7.1000/manifest.json

Regards.

Created on 01-03-2023 06:20 AM

Hi Frisch, thanks for the help.

We managed to download using the internal repository. Success.

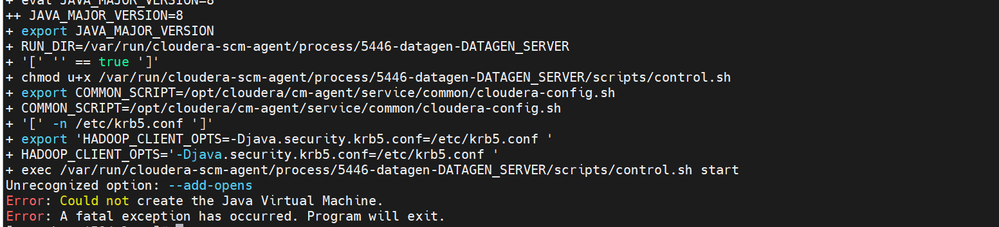

When starting the service, we get the error "Unrecognized option: --add-opens - Error: Could not create the Java Virtual Machine"

Are there any impediments to using Datagen with Java 8? We use the "Java 1.8.0_131-b11" version in our environment.

Regards

Created on 01-03-2023 07:39 AM

Hi,

Unfortunately, it is built with Java 11, so it will not work on Java 8.

However, you can install Java 11 on a single node alongside Java 8 and keep Java 8 as the default (so you do not impact any CDP components or your own programs).

Then go to CM > Datagen > Configuration and set java_home_custom to the path where Java 11 is installed on that node. Datagen should start with it.

Thanks.