Created on 08-18-2022 08:06 PM - edited 08-19-2022 12:10 PM

Overview

Over the last few years, Cloudera has evolved to become the Hybrid Data Company. Inarguably, the future of the data ecosystem is a hybrid one that enables common security and governance across on-premises and multi-cloud environments. In this article, you will learn how Cloudera enables key hybrid capabilities, such as application portability and data replication, so that you can quickly move workloads and data to the cloud.

Let's explore how Cloudera Data Platform (CDP) excels at the following hybrid use cases, through exercises that replicate and migrate a data pipeline to the public cloud.

- Develop Once and Run Anywhere

- De-risk Cloud Migration

Design

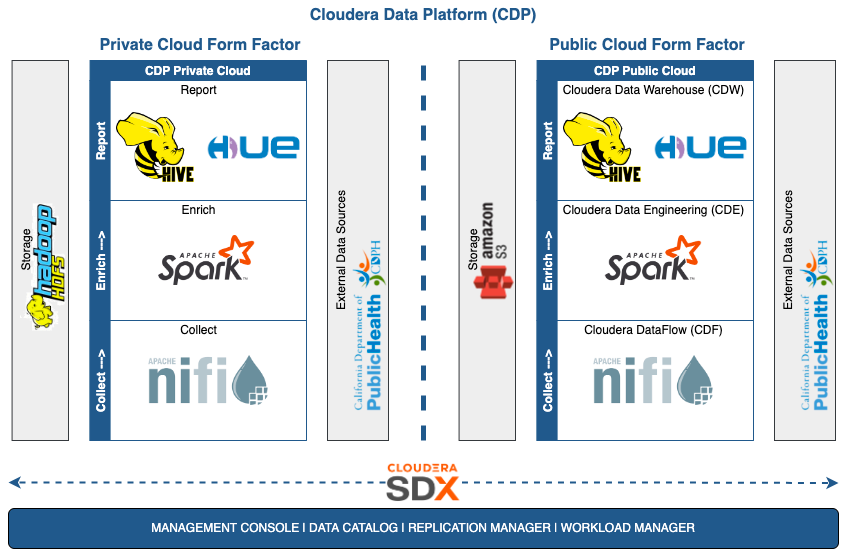

The following diagram shows a data pipeline in private & public form factors -

Prerequisites

- A data pipeline in a private cloud environment. Please follow the instructions provided in cdp-pvc-data-pipeline to set one up.

- Cloudera Data Platform (CDP) on Amazon Web Services (AWS). To get started, see the AWS quick start guide.

Implementation

Below are the steps to replicate a data pipeline from a CDP Private Cloud Base (PVC) cluster to a public cloud (PC) environment:

Replication Manager

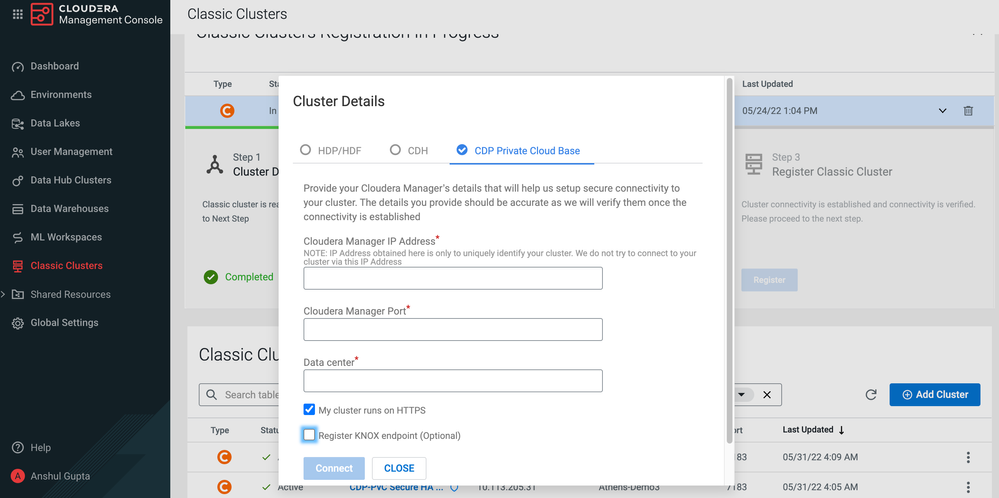

Before creating replication policies, register the private cloud cluster in the public cloud environment. In the CDP PC Management Console, go to Classic Clusters and add the PVC cluster. In the Cluster Details dialog, select the CDP Private Cloud Base option and fill in all the details. If Knox is enabled in your PVC cluster, check the "Register KNOX endpoint (Optional)" box and add the Knox IP address and port.
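Before registering, it can help to confirm that the Knox endpoint you enter is reachable from your network. The following is a minimal sketch of such a check using Python's standard `socket` module. The function name `is_endpoint_reachable` is hypothetical and not part of any Cloudera tooling. A successful TCP connection only shows that the port is open, not that Knox is correctly configured.

```python
import socket


def is_endpoint_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port opens within timeout seconds.

    Hypothetical pre-registration check; it does not talk to Knox itself.
    """
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, DNS failures and timeouts
        # (socket.timeout is a subclass of OSError).
        return False
```

For example, you might call `is_endpoint_reachable("knox.example.internal", 8443)` with your own gateway host. The host name here is a placeholder. 8443 is Knox's default gateway port, but your cluster may use a different one.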

To ensure the PVC cluster works with Replication Manager in the PC environment, please follow the instructions given in Adding CDP Private Cloud Base cluster for use in Replication Manager and Data Catalog.

For more details on adding and managing classic clusters, please visit Managing classic clusters.

Once the PVC cluster is added, proceed to create policies in Replication Manager.

Create HDFS Policy

- Copy all the code for this exercise, including the NiFi flow definitions and the PySpark program, to an HDFS directory of your choice.

- Now, go to Replication Manager and create an HDFS policy. While creating the policy, you will be asked to provide the source cluster, source path, destination cluster, destination path, schedule, and a few additional settings. If you need help with any step in the process, please visit Using HDFS replication policies and Creating HDFS replication policy.

- A sample replication policy for reference:

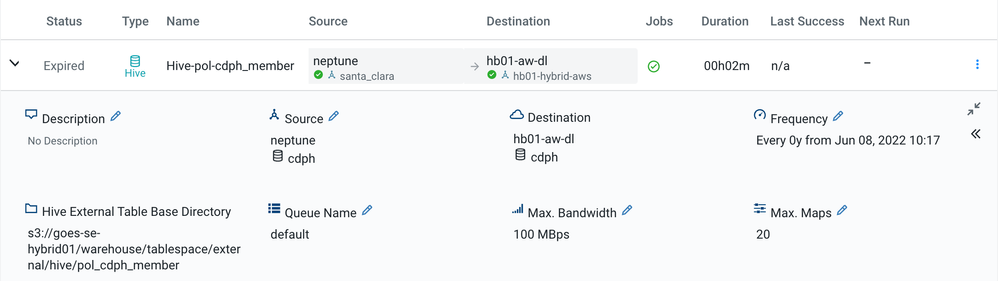
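The first step above, copying the exercise code into HDFS, can be scripted. The sketch below builds the standard `hdfs dfs -mkdir -p` and `hdfs dfs -put -f` commands and runs them only when you call `run_commands`. It assumes you run it on a PVC cluster host where the `hdfs` client is configured. The directory and file names are hypothetical placeholders.

```python
import shlex
import subprocess
from pathlib import PurePosixPath


def build_hdfs_upload_commands(local_files, hdfs_dir):
    """Return the `hdfs dfs` commands that create hdfs_dir and copy local_files into it."""
    target = str(PurePosixPath(hdfs_dir))  # normalizes away a trailing slash
    commands = [["hdfs", "dfs", "-mkdir", "-p", target]]
    for local_file in local_files:
        # -f overwrites an existing copy, so re-running the upload is safe.
        commands.append(["hdfs", "dfs", "-put", "-f", local_file, target])
    return commands


def run_commands(commands):
    """Execute each command in order, stopping at the first failure."""
    for command in commands:
        print("Running:", shlex.join(command))
        subprocess.run(command, check=True)


# Hypothetical file and directory names: replace with your own.
UPLOAD = build_hdfs_upload_commands(
    ["nifi-flow-definition.json", "pipeline_job.py"],
    "/user/admin/cdp-data-pipeline/",
)
```

Calling `run_commands(UPLOAD)` on a cluster host performs the upload. The resulting HDFS directory is what you would enter as the source path of the HDFS replication policy.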

Create Hive Policy

- While creating the policy, you will be asked to provide the source cluster, destination cluster, databases and tables to replicate, schedule, and a few additional settings. In the additional settings, ensure "Metadata and Data" is checked under the Replication Option. If you need help with any step in the process, please visit Using Hive replication policies and Creating Hive replication policy.

- A sample replication policy for reference:

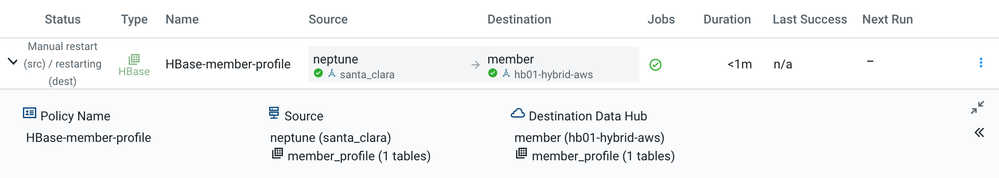

Create HBase Policy

- While creating the policy, you will be asked to provide the source cluster, source tables, destination cluster, and a few initial snapshot settings. If you need help with any step in the process, please visit Using HBase replication policies and Creating HBase replication policy.

- A sample replication policy for reference:

NiFi

- Download the NiFi flow definition from the PVC cluster to your local machine.

- Go to Cloudera DataFlow (CDF) in the PC environment and import the flow definition.

- Update the NiFi flow to use the PutS3Object processor instead of the PutHDFS processor, and set the processor's properties to point to the desired S3 bucket. With this change, incoming files are stored in an AWS S3 bucket instead of the PVC HDFS directory. For help with the PutS3Object processor, please see cdp-data-pipeline NiFi flow.

- Once the updates are done, re-import the NiFi flow as a new version and deploy it.
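To find every PutHDFS processor that needs to be swapped for PutS3Object, you can scan the downloaded flow definition. The sketch below makes an assumption about the exported JSON: the root process group sits under a `flowContents` key, with `processors` and nested `processGroups` lists whose entries carry `name` and `type` fields. Check this layout against your own file before relying on the result. The helper names are hypothetical.

```python
import json


def load_flow_definition(path):
    """Read a NiFi flow definition exported as JSON."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def find_processors(group, type_suffix, path=()):
    """Yield (process group path, processor name) for processors whose type ends with type_suffix.

    Assumes each group has "processors" and nested "processGroups" lists.
    """
    name = group.get("name")
    current = path + (name,) if name else path
    for processor in group.get("processors", []):
        if processor.get("type", "").endswith(type_suffix):
            yield "/".join(current), processor.get("name", "")
    for child in group.get("processGroups", []):
        yield from find_processors(child, type_suffix, current)


def processors_to_replace(flow):
    """List the PutHDFS processors that should become PutS3Object in the PC flow."""
    # Assumption: the root process group is stored under "flowContents".
    return list(find_processors(flow["flowContents"], ".PutHDFS"))
```

You might run `processors_to_replace(load_flow_definition("flow.json"))` before editing the flow. Running it again on the updated definition should return an empty list.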

Spark

- Update the PySpark program to use an AWS S3 bucket instead of the PVC HDFS directory.

- Go to Cloudera Data Engineering (CDE) and create a Spark job using the PySpark program.
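One way to keep this code change small is to read the storage location from a setting instead of hard-coding the HDFS path, so the same program runs in either environment. This is a minimal sketch. The `PIPELINE_ENV` variable, the bucket and directory names, and the JSON-in/Parquet-out steps are all hypothetical placeholders, since the article does not show the PySpark program itself.

```python
import os

# Hypothetical storage roots: replace with your own HDFS directory and S3 bucket.
STORAGE_ROOTS = {
    "pvc": "hdfs:///user/admin/cdp-data-pipeline",
    "aws": "s3a://example-bucket/cdp-data-pipeline",
}


def storage_path(relative, env=None):
    """Resolve a path under the storage root of the chosen environment."""
    # Falls back to the hypothetical PIPELINE_ENV variable, then to the PVC root.
    env = env or os.environ.get("PIPELINE_ENV", "pvc")
    return f"{STORAGE_ROOTS[env].rstrip('/')}/{relative.lstrip('/')}"


def run_job(env=None):
    """Placeholder job body: read raw files and write curated output under the same root."""
    # Imported here so storage_path can be used and tested without Spark installed.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.appName("cdp-data-pipeline").getOrCreate()
    raw = spark.read.json(storage_path("raw/", env))
    raw.write.mode("overwrite").parquet(storage_path("curated/", env))
    spark.stop()
```

The job's entry point would call `run_job()`. Only the environment setting then differs between the PVC cluster and CDE, not the code.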

Hive

- Go to Cloudera Data Warehouse (CDW) in the PC environment, and choose the Virtual Warehouse for the data lake selected during Hive replication.

- Now, open the Hue editor to access the replicated database(s) and table(s).

- Interested in gathering insights from this data? Check out cdp-data-pipeline data visualization.
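A quick way to confirm that the Hive replication finished is to compare row counts for each replicated table on the PVC cluster and in the Virtual Warehouse. The sketch below only generates the `SELECT COUNT(*)` statements to run in Hue on each side and compares the numbers you collect. The table names are hypothetical. Matching counts are a sanity check, not proof that every row is identical, and they are only comparable if the source tables did not change after the last replication run.

```python
def count_queries(tables):
    """Build one COUNT(*) statement per table, to run in Hue on each side."""
    return {table: f"SELECT COUNT(*) FROM {table}" for table in tables}


def find_mismatches(source_counts, target_counts):
    """Return {table: (source count, target count)} for tables that differ.

    A table missing on the target side is reported with a target count of None.
    """
    mismatches = {}
    for table, source in source_counts.items():
        target = target_counts.get(table)
        if target != source:
            mismatches[table] = (source, target)
    return mismatches
```

For example, with hypothetical counts `{"sales.orders": 120}` from the PVC cluster and `{"sales.orders": 118}` from CDW, `find_mismatches` reports `{"sales.orders": (120, 118)}`.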

HBase

- Go to Cloudera Operational Database (COD) in the PC environment and choose the database selected during HBase replication.

- Now, open the Hue editor to access the replicated table(s).

Data Catalog

- To ensure the PVC cluster shows up as a data lake in the PC environment's Data Catalog, please follow instructions given in Adding CDP Private Cloud Base cluster for use in Replication Manager and Data Catalog.

- Once the configuration is done, Data Catalog in the PC environment will let you see data objects available in both the PVC cluster and the PC environment.

Conclusion

Great job if you have made it this far!

Develop Once and Run Anywhere

So what did we learn? With CDP's hybrid capabilities, we can replicate and migrate data pipelines from one environment to another, whether on-premises or in the public cloud, with minimal effort. In this exercise, all the code that ran in the private cloud was replicated to the AWS environment with only minor configuration and code changes, which were needed because the filesystem changed from HDFS to S3.

A similar set of steps can be followed to migrate data pipelines to Microsoft Azure and Google Cloud Platform (GCP) environments.

De-risk Cloud Migration

When it comes to migrating to the cloud, moving all workloads at the same time is usually extremely risky. As part of your migration strategy, you need to be able to divide workloads into logical groups and migrate them group by group, and CDP gives you the flexibility to carry out the migration according to your strategy.
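One way to divide workloads logically is to record which workloads depend on which others and migrate them in waves, so nothing moves before the data or jobs it relies on. The sketch below uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+). The workload names and dependencies are hypothetical, loosely modeled on the pipeline in this article.

```python
from graphlib import CycleError, TopologicalSorter


def plan_migration_waves(dependencies):
    """Group workloads into waves; each workload appears only after everything it depends on.

    dependencies maps each workload to the workloads it depends on.
    """
    sorter = TopologicalSorter(dependencies)
    try:
        sorter.prepare()
    except CycleError as exc:
        # args[1] holds the detected cycle.
        raise ValueError(f"circular workload dependencies: {exc.args[1]}") from exc
    waves = []
    while sorter.is_active():
        # Every workload whose dependencies have already been migrated.
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Hypothetical workloads modeled on this article's pipeline.
DEPENDENCIES = {
    "hdfs-data": [],
    "hive-tables": [],
    "nifi-flow": ["hdfs-data"],
    "spark-job": ["nifi-flow", "hive-tables"],
}
WAVES = plan_migration_waves(DEPENDENCIES)
```

With these example dependencies, the replicated data moves first, then the NiFi flow, and the Spark job last. Circular dependencies are reported as an error rather than silently ordered.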

Please don't hesitate to add a comment if you have any questions.