Use Flume to get a webpage data. How to configure, how to use it to stream data

Labels: Apache Flume

Created on 11-28-2016 04:20 PM - edited 09-16-2022 03:49 AM

Hi,

I have a 3-node cluster using the latest Cloudera parcels for version 5.9. The OS is CentOS 6.7 on all three nodes.

I am using Flume for the first time. I have just used the 'Add Service' option in the Cloudera GUI to add Flume.

My goal is to get data from a webpage into HDFS/HBase.

Can you please tell me how I can do this? What else do I need to make streaming data from a webpage possible?

Also, I have seen an example online for Twitter, where you need to create a token on the Twitter page to get the data. However, the webpage I am referring to is a third-party one, and I am not sure how to configure Flume to get its data onto my cluster. I guess it would be over HTTP.

Please help me to get this done.

Thanks in Advance.

Shilpa

Created on 01-06-2017 04:03 PM - edited 01-06-2017 04:05 PM

@pdvorak thanks!

Yes, I wrote Java code to pull the RSS feed, and used an Exec source and an Avro sink on two nodes, with an Avro source (as the collector) and an HDFS sink on the third node.
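
For anyone trying the same layout, here is a rough sketch of what the two agent configurations could look like. It is not the actual configuration used here: the agent names (`edge`, `collector`), the component names, the jar path, the collector hostname, port 4545 and the HDFS path are all placeholders made up for the example.

```properties
# ---- On each of the two feed-pulling nodes (hypothetical agent name "edge") ----
edge.sources = rss-exec
edge.channels = mem-ch
edge.sinks = to-collector

# Exec source: runs a command and turns each line of its stdout into an event.
# The jar path is a placeholder for the Java program that pulls the RSS feed.
edge.sources.rss-exec.type = exec
edge.sources.rss-exec.command = java -jar /opt/rss/rss-puller.jar
edge.sources.rss-exec.channels = mem-ch

edge.channels.mem-ch.type = memory
edge.channels.mem-ch.capacity = 1000

# Avro sink: forwards events to the Avro source on the collector node.
# Hostname and port are placeholders.
edge.sinks.to-collector.type = avro
edge.sinks.to-collector.hostname = collector.example.com
edge.sinks.to-collector.port = 4545
edge.sinks.to-collector.channel = mem-ch

# ---- On the third node (hypothetical agent name "collector") ----
collector.sources = from-edges
collector.channels = mem-ch
collector.sinks = to-hdfs

# Avro source: listens on a local address and port for the edge agents.
collector.sources.from-edges.type = avro
collector.sources.from-edges.bind = 0.0.0.0
collector.sources.from-edges.port = 4545
collector.sources.from-edges.channels = mem-ch

collector.channels.mem-ch.type = memory
collector.channels.mem-ch.capacity = 1000

# HDFS sink: writes events as plain text into date-based directories.
collector.sinks.to-hdfs.type = hdfs
collector.sinks.to-hdfs.channel = mem-ch
collector.sinks.to-hdfs.hdfs.path = /flume/rss/%Y-%m-%d
collector.sinks.to-hdfs.hdfs.fileType = DataStream
# Use the collector's clock for the date escapes instead of a timestamp header.
collector.sinks.to-hdfs.hdfs.useLocalTimeStamp = true
```

The port on the Avro sink has to match the port the Avro source listens on, and the collector host has to be reachable from both edge nodes.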

Created 11-29-2016 05:42 AM

Since you are going to use a third-party webpage, I am assuming that you won't be able to integrate or deploy the Flume SDK. If the webpage can send data via HTTP rather than through Flume's RPC, then I think the HTTP source would be a good fit. From a client's point of view, the HTTP source acts like a web server that accepts Flume events. You can either write your own handler or use HTTPSourceXMLHandler in your configuration; the default handler accepts JSON.

The format that HTTPSourceXMLHandler accepts is shown below:

<events> <event 1 2 3 ..> <headers> <header 1 2 3 ..> </header> <body> </body> </event..> </events>

The handler parses the XML into Flume events and passes them to the HTTP source, which then passes them on to the channel. From there they go to a sink or to another agent, depending on the flow.
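
As a rough illustration, a minimal sketch of an HTTP source definition could look like the following. The agent name `tier1`, the component names, port 5140 and the HDFS path are assumptions chosen for the example. Note that `bind` is an address on the machine running the Flume agent, because the source listens there; it is not the address of the remote website.

```properties
# Hypothetical names: agent "tier1", source "http-src", channel "mem-ch", sink "hdfs-out".
tier1.sources = http-src
tier1.channels = mem-ch
tier1.sinks = hdfs-out

# HTTP source: listens locally and accepts events sent via HTTP POST.
tier1.sources.http-src.type = http
# Local interface to listen on (0.0.0.0 means all interfaces of this host).
tier1.sources.http-src.bind = 0.0.0.0
# Any free, unprivileged local port; 5140 is only an example.
tier1.sources.http-src.port = 5140
# JSONHandler is the default handler; it is spelled out here for clarity.
tier1.sources.http-src.handler = org.apache.flume.source.http.JSONHandler
tier1.sources.http-src.channels = mem-ch

tier1.channels.mem-ch.type = memory
tier1.channels.mem-ch.capacity = 1000

tier1.sinks.hdfs-out.type = hdfs
tier1.sinks.hdfs-out.channel = mem-ch
tier1.sinks.hdfs-out.hdfs.path = /flume/events/%Y-%m-%d
# Use the agent's clock for the date escapes instead of a timestamp header.
tier1.sinks.hdfs-out.hdfs.useLocalTimeStamp = true
```

With the JSON handler, a client would POST an array of events to that host and port, for example `[{"headers": {"Category": "Fashion"}, "body": "some text"}]`.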

Created on 11-29-2016 12:09 PM - edited 11-29-2016 12:11 PM

Thanks so much for the reply. It really helps me understand how it can work.

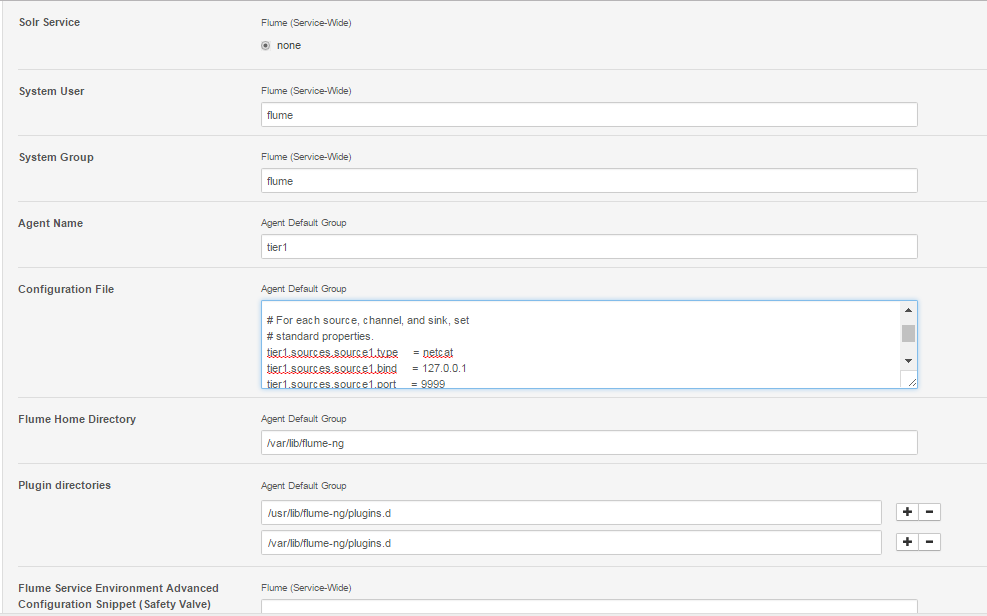

However, as I told you, I am using Flume for the first time, and I have no idea how to change its configuration to make it work. My configs are currently set to their default values.

I would really appreciate it if you could help me with them. PFB.

I look forward to your reply.

Thanks in Advance!

Shilpa

Created 11-30-2016 02:57 PM

Please help me with the Flume configurations. I am running out of time.

Thanks,

Shilpa

Created 12-02-2016 09:26 AM

Hey guys!

I am still waiting for a reply. Please help me with those configs or point me to a document.

Thanks,

Shilpa

Created 12-02-2016 12:05 PM

I would suggest reviewing the documentation here to see some examples and the different configuration options: http://flume.apache.org/FlumeUserGuide.html

In Cloudera Manager, you will be editing the Configuration File section; that is the configuration that is read when Flume starts up.
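
For example, a minimal sketch along the lines of the introductory example in the Flume User Guide (netcat source, memory channel, logger sink) is shown below. The agent name `tier1` and the component names are assumptions; the agent name used in the file has to match the agent name configured for the Flume service.

```properties
# Hypothetical names: agent "tier1", source "src1", channel "ch1", sink "sink1".
tier1.sources = src1
tier1.channels = ch1
tier1.sinks = sink1

# Netcat source: listens on a local port and turns each received line into an event.
tier1.sources.src1.type = netcat
tier1.sources.src1.bind = localhost
tier1.sources.src1.port = 44444
tier1.sources.src1.channels = ch1

# Memory channel: buffers events in RAM between the source and the sink.
tier1.channels.ch1.type = memory
tier1.channels.ch1.capacity = 1000
tier1.channels.ch1.transactionCapacity = 100

# Logger sink: writes events to the agent log, which is handy for a first test.
tier1.sinks.sink1.type = logger
tier1.sinks.sink1.channel = ch1
```

Once something like this works, the source and sink types can be swapped for the ones you actually need.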

-pd

Created 12-02-2016 12:51 PM

Thanks pd. 🙂

I will go through the links and get back to you if I get stuck anywhere.

-Shilpa

Created on 12-15-2016 03:31 PM - last edited on 12-16-2016 05:36 AM by cjervis

Hey @pdvorak,

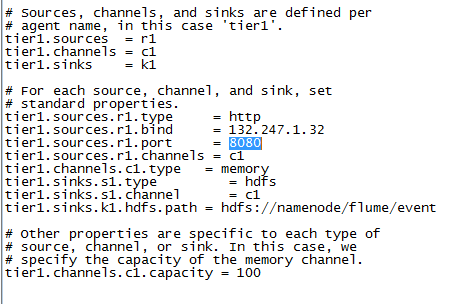

My flume.conf looks like the one below. However, I have a question, as I want to get data from a third-party website, in my case a news website.

I don't know which port to use in the configuration; please see the highlighted part below. I thought of using 8080, but it is not open.

[root@LnxMasterNode01 ~]# nc -l 132.247.1.32 8080

nc: Cannot assign requested address

Can you tell me how to deal with this part?

Also, I am looking for specific keywords on this website, such as fashion and sports, and I want only the data related to them. Can you tell me if this can be done using a separate sink for each header, such as:

# list the sources, sinks and channels in the agent

agent_foo.sources = avro-AppSrv-source1

agent_foo.sinks = hdfs-Cluster1-sink1 avro-forward-sink2

agent_foo.channels = mem-channel-1 mem-channel-2

# set channels for source

agent_foo.sources.avro-AppSrv-source1.channels = mem-channel-1 mem-channel-2

# set channel for sinks

agent_foo.sinks.hdfs-Cluster1-sink1.channel = mem-channel-1

agent_foo.sinks.avro-forward-sink2.channel = mem-channel-2

# channel selector configuration

agent_foo.sources.avro-AppSrv-source1.selector.type = multiplexing

agent_foo.sources.avro-AppSrv-source1.selector.header = Category

agent_foo.sources.avro-AppSrv-source1.selector.mapping.Fashion = mem-channel-1

agent_foo.sources.avro-AppSrv-source1.selector.mapping.Baseball = mem-channel-2

agent_foo.sources.avro-AppSrv-source1.selector.mapping.Basketball = mem-channel-1 mem-channel-2

agent_foo.sources.avro-AppSrv-source1.selector.default = mem-channel-1

Thanks in Advance!

Shilpa

Created 12-16-2016 09:49 AM

Created on 12-19-2016 02:21 PM - edited 12-19-2016 02:26 PM

Hi @hshreedharan / @pdvorak / All,

My project is in crisis and I really need help.

My flume.conf is as follows:

tier1.sources = http-source

tier1.channels = mem-channel-1

tier1.sinks = hdfs-sink

# For each source, channel, and sink, set

# standard properties.

tier1.sources.http-source.type = http

tier1.sources.http-source.handler = org.apache.flume.source.http.JSONHandler

tier1.sources.http-source.bind = 132.247.1.32

tier1.sources.http-source.port = 21

tier1.sources.http-source.channels = mem-channel-1

tier1.channels.mem-channel-1.type = memory

tier1.sinks.hdfs-sink.type = hdfs

tier1.sinks.hdfs-sink.channel = mem-channel-1

tier1.sinks.hdfs-sink.hdfs.path = /flume/events/%y-%m-%d/%H%M/%S

# Other properties are specific to each type of

# source, channel, or sink. In this case, we

# specify the capacity of the memory channel.

tier1.channels.mem-channel-1.capacity = 100

The error I am getting while starting flume-ng:

2016-12-19 15:52:27,356 WARN org.mortbay.log: failed SelectChannelConnector@132.247.1.32:21: java.net.BindException: Cannot assign requested address

2016-12-19 15:52:27,356 WARN org.mortbay.log: failed Server@9439eee: java.net.BindException: Cannot assign requested address

2016-12-19 15:52:27,357 ERROR org.apache.flume.source.http.HTTPSource: Error while starting HTTPSource. Exception follows.

java.net.BindException: Cannot assign requested address

at sun.nio.ch.Net.bind0(Native Method)

at sun.nio.ch.Net.bind(Net.java:444)

at sun.nio.ch.Net.bind(Net.java:436)

at sun.nio.ch.ServerSocketChannelImpl.bind(ServerSocketChannelImpl.java:214)

at sun.nio.ch.ServerSocketAdaptor.bind(ServerSocketAdaptor.java:74)

at org.mortbay.jetty.nio.SelectChannelConnector.open(SelectChannelConnector.java:216)

at org.mortbay.jetty.nio.SelectChannelConnector.doStart(SelectChannelConnector.java:315)

at org.mortbay.component.AbstractLifeCycle.start(AbstractLifeCycle.java:50)

at org.mortbay.jetty.Server.doStart(Server.java:235)

at org.mortbay.component.AbstractLifeCycle.start(AbstractLifeCycle.java:50)

at org.apache.flume.source.http.HTTPSource.start(HTTPSource.java:207)

at org.apache.flume.source.EventDrivenSourceRunner.start(EventDrivenSourceRunner.java:44)

at org.apache.flume.lifecycle.LifecycleSupervisor$MonitorRunnable.run(LifecycleSupervisor.java:251)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:471)

at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:304)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:178)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)

at java.lang.Thread.run(Thread.java:745)

2016-12-19 15:52:27,367 ERROR org.apache.flume.lifecycle.LifecycleSupervisor: Unable to start EventDrivenSourceRunner: { source:org.apache.flume.source.http.HTTPSource{name:http-source,state:IDLE} } - Exception follows.

java.lang.RuntimeException: java.net.BindException: Cannot assign requested address

at com.google.common.base.Throwables.propagate(Throwables.java:156)

at org.apache.flume.source.http.HTTPSource.start(HTTPSource.java:211)

at org.apache.flume.source.EventDrivenSourceRunner.start(EventDrivenSourceRunner.java:44)

at org.apache.flume.lifecycle.LifecycleSupervisor$MonitorRunnable.run(LifecycleSupervisor.java:251)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:471)

I guess the source is not able to connect to the IP and port I provided. This is because the IP I provided belongs to a third party, a news webpage, and I don't know which port is open for connections.

Please guide me on how I can enable data streaming from a third-party webpage to HDFS using Flume.

Also, I cannot see a plugins.d directory for Flume. Could this be one of the reasons? Do I have to download its jars separately? I installed Flume from the Cloudera Manager UI.

[root@LnxMasterNode01 CDH-5.9.0-1.cdh5.9.0.p0.23]# cd /var/lib/flume-ng/

[root@LnxMasterNode01 flume-ng]# ll

total 0

[root@LnxMasterNode01 flume-ng]# cd /

[root@LnxMasterNode01 /]# find . -name plugins.d

./etc/audisp/plugins.d

[root@LnxMasterNode01 /]#

@hshreedharan I saw your post on Flume on a separate forum, hence I looped you in. Please help.

Thanks,

Shilpa