Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: How to load json record to postgres as json da...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

How to load json record to postgres as json datatype ?

- Labels:

-

Apache NiFi

Created on 06-16-2022 02:55 AM - edited 06-16-2022 02:57 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have a list of json records : [{}, {},{},{}]

I splitted them to get : {},{},{},{}

i need to load them to postgres as json datatype

the result in postgres should be like that

| data |

|---------------------------------------|

| { "name":"alex", "surname":"rolfi"}|

| { "name":"rolf", "surname":"syon"}|

| { "name":"med", "surname":"akil"}|

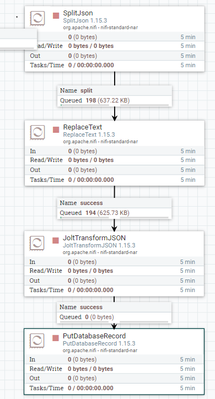

i did that with the following processors :

but Putdatabaserecord did not solve the problem

Created 06-16-2022 06:29 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The way PutDatabaseRecord works is basically once it reads the json , it will try to match the json keys to the DB columns hence the error you are getting if not being able to map fields to columns, in your case it will be looking for columns: name & surename to store their values, so it wont store the whole json in one field. I had similar situation where I needed to store json and the only way I can think of is first load the json into an attribute using the ExtractText Processor, then use PutSQL processor and write the insert statement to the target table where the column is the Data and the value is the attribute contain the json value for example ${JsonDataAttribute}. You have to be careful how big your json is, if its too big then you have to figure out another way, but if its small then no harm from saving into an attribute.

Created 06-16-2022 05:55 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Can you explain what happen when you use the PutDatabaseRecrod Processor?

Created 06-16-2022 06:07 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Created 06-16-2022 06:29 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The way PutDatabaseRecord works is basically once it reads the json , it will try to match the json keys to the DB columns hence the error you are getting if not being able to map fields to columns, in your case it will be looking for columns: name & surename to store their values, so it wont store the whole json in one field. I had similar situation where I needed to store json and the only way I can think of is first load the json into an attribute using the ExtractText Processor, then use PutSQL processor and write the insert statement to the target table where the column is the Data and the value is the attribute contain the json value for example ${JsonDataAttribute}. You have to be careful how big your json is, if its too big then you have to figure out another way, but if its small then no harm from saving into an attribute.

Created 06-16-2022 06:40 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can you please explain in more detail how to do this.

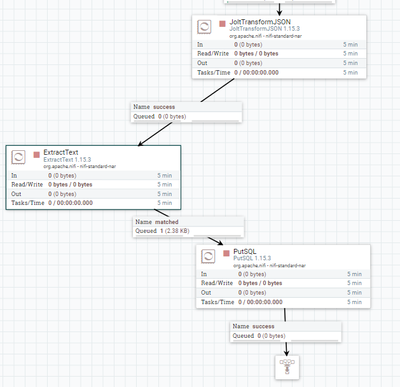

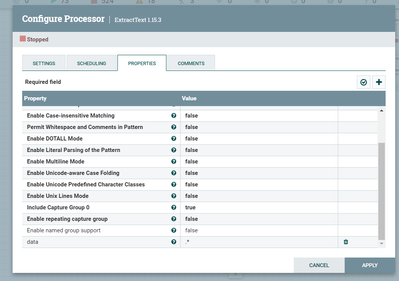

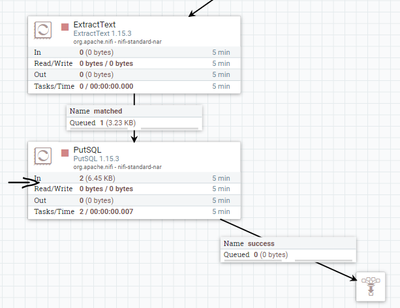

i tried this solution but i think i missed something in the configuration

Extract text

is it correct how i loaded the text to the variable data

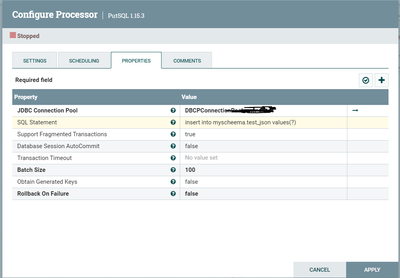

then PUTSQL

Created 06-16-2022 07:04 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Im not familiar with the postgress sql but should not be like this:

INSERT INTO table(column1, column2, …)

VALUES

(value1, value2, …);

Also for the value you need to provide the attribute name , so it should be something like this:

INSERT INTO table(jsonDataColumn) VALUES(${data});

Created 06-16-2022 07:18 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

i did that , PutSQL accepts as you see the flowfile but it gives no output, also no data inserted to the DB

Created 06-16-2022 07:24 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sorry I forgot to mention that in the PutSql processor set the Batch Size property to 1