NiFi Dynamic reader

- Labels: Apache NiFi

Created on 08-01-2018 10:49 AM - edited 08-17-2019 10:24 PM


Hello guys,

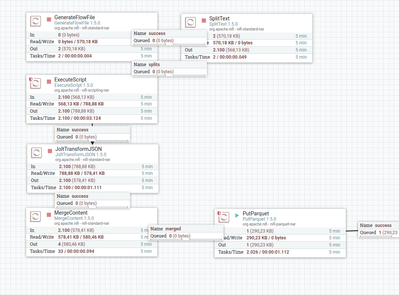

I want to transform JSON to Parquet. I followed a tutorial, and my current flow is functional.

My problem is that I need to create Parquet schemas dynamically. Each schema needs to be generated by reading the attributes/content of the incoming flow file. The PutParquet processor uses a Record Reader. I found ScriptedReader, but I have no idea how I can generate a schema with it and use it for the PutParquet processor. Does anyone know how to use it? Or are there any alternatives for creating a schema dynamically for the PutParquet processor?

Thanks in advance.

Created 08-01-2018 01:24 PM


How do you plan to determine the schema from your JSON? Are you saying you want to infer a schema based on the data?

Typically this approach doesn't work that well, because it is hard to guess the correct type for a given field. Imagine the first record has a field "id" whose value is "1234", so it looks like a number, but the second record has "abcd" as its id. If the schema guesses a number based on the first record, it will fail on the second record because "abcd" is not a number.
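To make that failure mode concrete, here is a minimal Python sketch of naive inference that looks only at the first record. The helpers `guess_type`, `infer_from_first_record` and `conforms` are hypothetical illustrations of the idea, not how InferAvroSchema is actually implemented.

```python
import json


def guess_type(value):
    """Hypothetical helper: guess an Avro-style type from one JSON string value."""
    try:
        int(value)
        return "long"
    except ValueError:
        return "string"


def infer_from_first_record(records, field):
    # Naive inference: only the first record is inspected.
    return guess_type(records[0][field])


def conforms(value, avro_type):
    # Can this value be read as the inferred type?
    if avro_type == "long":
        try:
            int(value)
            return True
        except ValueError:
            return False
    return True


records = [json.loads('{"id": "1234"}'), json.loads('{"id": "abcd"}')]
inferred = infer_from_first_record(records, "id")
failures = [r for r in records if not conforms(r["id"], inferred)]
print(inferred)  # long
print(failures)  # [{'id': 'abcd'}]
```

The first record alone suggests "long", and the second record is then rejected, which is exactly the problem with guessing types from a sample.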

There is a processor that attempts to do this, though: InferAvroSchema. You could probably do something like InferAvroSchema -> ConvertJSONToAvro -> PutParquet with an Avro Reader.

Created 08-01-2018 02:06 PM


Hello Bryan,

Thanks for the quick answer.

I have no problem with the data types; in my case every JSON field is going to be of type long.

I'm stuck on finding a reader that can handle dynamic JSON keys. For example:

1st flow file:

... { "id_4344" : 1532102971, "id_4544" : 1532102972 } ...

2nd flow file:

... { "id_7177" : 1532102972, "id_8154" : 1532102972 } ...

I need to find out how to read those IDs, which change in every flow file.
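If every value really is a long, the schema for a given flow file can be derived from its keys alone. Below is a minimal Python sketch, assuming each flow file's content is a single flat JSON object; `build_avro_schema` and the record name `DynamicIds` are hypothetical. Keys that are not valid Avro names (letters, digits and underscores, not starting with a digit) would need sanitizing first. Record readers such as JsonTreeReader offer a "Use 'Schema Text' Property" schema access strategy, so a schema computed per flow file (for example by a scripted step, not shown) could be stored in an attribute and referenced there; treat that wiring as an assumption to verify against your NiFi version.

```python
import json


def build_avro_schema(flowfile_content, record_name="DynamicIds"):
    """Build an Avro record schema from the top-level keys of one JSON object.

    Assumption (from the question): every field is a long.
    """
    obj = json.loads(flowfile_content)
    fields = [{"name": key, "type": "long"} for key in obj]
    return {"type": "record", "name": record_name, "fields": fields}


first = '{ "id_4344" : 1532102971, "id_4544" : 1532102972 }'
second = '{ "id_7177" : 1532102972, "id_8154" : 1532102972 }'

schema_1 = build_avro_schema(first)
schema_2 = build_avro_schema(second)

# Schema text that could be handed to a record reader for this flow file.
print(json.dumps(schema_1))
```

Each flow file gets its own schema, which is why the reader has to be fed the schema per flow file rather than configured once.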

Meanwhile, I'll try your suggestion.

Thanks.

Created 08-01-2018 03:58 PM


Bryan's InferAvroSchema answer should work well in this case, but as an alternative, you might consider "normalizing" your schema by using JoltTransformJSON to change each flow file into the same schema. For example, using the following Chain spec:

```json
[
  {
    "operation": "shift",
    "spec": {
      "id_*": {
        "@": "entry.[#2].value",
        "$(0,1)": "entry.[#2].id"
      }
    }
  }
]
```

And the following input:

```json
{ "id_4344" : 1532102971, "id_4544" : 1532102972 }
```

You get the following output:

```json
{
  "entry" : [ {
    "value" : 1532102971,
    "id" : "4344"
  }, {
    "value" : 1532102972,
    "id" : "4544"
  } ]
}
```

This allows you to predefine the schema, removing the need for the schema and readers to be dynamic. If you don't want the (possibly unnecessary) "entry" array inside the single JSON object, you can produce a top-level array with the following spec:

```json
[
  {
    "operation": "shift",
    "spec": {
      "id_*": {
        "@": "[#2].value",
        "$(0,1)": "[#2].id"
      }
    }
  }
]
```

Which gives you the following output:

```json
[ {
  "value" : 1532102971,
  "id" : "4344"
}, {
  "value" : 1532102972,
  "id" : "4544"
} ]
```
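To check the normalization idea outside NiFi, this plain-Python sketch mimics what the top-level-array shift spec produces (it is a re-implementation for illustration, not Jolt itself) and pairs it with one fixed Avro-style schema that every normalized flow file satisfies. `normalize`, `matches_schema` and the record name `IdEntry` are hypothetical.

```python
import json

# Fixed schema for the normalized records (hypothetical record name).
# "id" is a string because the spec emits the wildcard match ("4344") as text.
NORMALIZED_SCHEMA = {
    "type": "record",
    "name": "IdEntry",
    "fields": [
        {"name": "value", "type": "long"},
        {"name": "id", "type": "string"},
    ],
}


def normalize(flowfile_content, prefix="id_"):
    """Turn {"id_<n>": v, ...} into [{"value": v, "id": "<n>"}, ...]."""
    obj = json.loads(flowfile_content)
    return [
        {"value": value, "id": key[len(prefix):]}
        for key, value in obj.items()
        if key.startswith(prefix)  # like "id_*"; a shift drops unmatched keys
    ]


def matches_schema(record, schema=NORMALIZED_SCHEMA):
    """Small structural check: same field names and long/string value types."""
    expected = {f["name"]: f["type"] for f in schema["fields"]}
    if set(record) != set(expected):
        return False
    py_types = {"long": int, "string": str}
    return all(isinstance(record[name], py_types[t]) for name, t in expected.items())


first = normalize('{ "id_4344" : 1532102971, "id_4544" : 1532102972 }')
second = normalize('{ "id_7177" : 1532102972, "id_8154" : 1532102972 }')
print(first)
# [{'value': 1532102971, 'id': '4344'}, {'value': 1532102972, 'id': '4544'}]
```

Both flow files, despite having different keys, now fit the same schema, so the reader can be configured once.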

Created 08-01-2018 04:06 PM


Thanks a lot, guys. I will give it a try ASAP.