Cloudera Community: Support Questions


What is a good approach for splitting a 100 GB file into multiple files?

Labels: Apache NiFi

Created 10-26-2016 04:31 PM


Hi ,

I have a 100 GB file that I need to split into multiple files (maybe 1,000), based on the value of "Tagname" in the sample data below, and write them into HDFS.

Tagname,Timestamp,Value,Quality,QualityDetail,PercentGood
ABC04.PI_B04_EX01_A_STPDATTRG.F_CV,2/18/2015 1:03:32 AM,627,Good,NonSpecific,100
ABC04.PI_B04_EX01_A_STPDATTRG.F_CV,2/18/2015 1:03:33 AM,628,Good,NonSpecific,100
ABC05.X4_WET_MX_DDR.F_CV,2/18/2015 12:18:00 AM,12,Good,NonSpecific,100
ABC05.X4_WET_MX_DDR.F_CV,2/18/2015 12:18:01 AM,4,Good,NonSpecific,100
ABC04.PI_B04_FDR_A_STPDATTRG.F_CV,2/18/2015 1:04:19 AM,3979,Good,NonSpecific,100
ABC04.PI_B04_FDR_A_STPDATTRG.F_CV,2/18/2015 9:35:23 PM,4018,Good,NonSpecific,100
ABC04.PI_B04_FDR_A_STPDATTRG.F_CV,2/18/2015 9:35:24 PM,4019,Good,NonSpecific,100

In reality, the same "Tagname" value will repeat on consecutive lines (maybe 10K+ lines) until it changes. I need to create one file for each tag.
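To make the goal concrete, here is a minimal plain-Python sketch of the same split done outside NiFi (a swapped-in technique for illustration, not a NiFi flow). It assumes, as described above, that rows for a tag are contiguous, so `itertools.groupby` can stream through the input with only one output file open at a time. The output directory and the `<Tagname>.csv` file naming are assumptions; writing to HDFS is not shown.

```python
import csv
import io
import itertools
import tempfile
from pathlib import Path

def split_by_tag(lines, out_dir):
    """Write each run of consecutive rows sharing a Tagname to <out_dir>/<Tagname>.csv.

    Assumes rows for one tag are contiguous, so groupby sees each run once and
    only one output file is open at a time. If a tag reappears later, its rows
    are appended to the same file. Returns {Tagname: number of data rows}.
    """
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    rows = (row for row in csv.reader(lines) if row)  # skip blank lines
    header = next(rows)
    counts = {}
    for tag, run in itertools.groupby(rows, key=lambda row: row[0]):
        path = out_dir / f"{tag}.csv"
        is_new = not path.exists()
        with path.open("a", newline="") as f:
            writer = csv.writer(f)
            if is_new:
                writer.writerow(header)  # each per-tag file gets the header once
            for row in run:
                writer.writerow(row)
                counts[tag] = counts.get(tag, 0) + 1
    return counts

SAMPLE = """Tagname,Timestamp,Value,Quality,QualityDetail,PercentGood
ABC04.PI_B04_EX01_A_STPDATTRG.F_CV,2/18/2015 1:03:32 AM,627,Good,NonSpecific,100
ABC04.PI_B04_EX01_A_STPDATTRG.F_CV,2/18/2015 1:03:33 AM,628,Good,NonSpecific,100
ABC05.X4_WET_MX_DDR.F_CV,2/18/2015 12:18:00 AM,12,Good,NonSpecific,100
ABC05.X4_WET_MX_DDR.F_CV,2/18/2015 12:18:01 AM,4,Good,NonSpecific,100
ABC04.PI_B04_FDR_A_STPDATTRG.F_CV,2/18/2015 1:04:19 AM,3979,Good,NonSpecific,100
ABC04.PI_B04_FDR_A_STPDATTRG.F_CV,2/18/2015 9:35:23 PM,4018,Good,NonSpecific,100
ABC04.PI_B04_FDR_A_STPDATTRG.F_CV,2/18/2015 9:35:24 PM,4019,Good,NonSpecific,100
"""

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        print(split_by_tag(io.StringIO(SAMPLE), d))
```

For a real file you would pass something like `open("input.csv", newline="")` (hypothetical path) instead of the in-memory sample. Memory use stays roughly flat as the file grows, since rows are written as they are read and only the per-tag counts are kept.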

Do I have to split the file into smaller files first (maybe 20 files of 5 GB each) using SplitFile? If I do, will it split exactly at the end of lines? Do I have to read line by line using ExtractText, or is there a better approach?
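Whether a split lands on a line boundary depends on how the cut is made. Splitting by line count (as SplitText does) is line-aligned by construction; a cut by byte size is not, unless each cut point is pushed forward to the next newline. The sketch below shows that general technique in plain Python; it is an illustration, not how any NiFi processor is implemented, and the function name is hypothetical.

```python
import os
import tempfile

def line_aligned_ranges(path, target_chunk_bytes):
    """Return (start, end) byte ranges of roughly target_chunk_bytes each.

    Each tentative cut point is moved forward to just past the next newline,
    so every range ends on a line boundary. Only a small window around each
    cut is read; the file is never loaded into memory as a whole.
    """
    size = os.path.getsize(path)
    ranges = []
    start = 0
    with open(path, "rb") as f:
        while start < size:
            cut = start + target_chunk_bytes
            if cut >= size:
                ranges.append((start, size))  # last chunk takes the remainder
                break
            f.seek(cut)
            f.readline()  # skip to the end of whatever line the cut landed in
            end = f.tell()
            ranges.append((start, end))
            start = end
    return ranges

if __name__ == "__main__":
    with tempfile.NamedTemporaryFile("wb", delete=False) as tmp:
        tmp.write(b"".join(b"TAG%d,value\n" % i for i in range(100)))
    print(line_aligned_ranges(tmp.name, 200))
    os.remove(tmp.name)
```

With a 5 GB target, the same loop would produce about 20 ranges for a 100 GB file, each ending cleanly at a newline.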

Can I use ConvertCSVToAvro, then ConvertAvroToJSON, and then split the JSON by tag using SplitJson?

Do I have to change any default NiFi settings for this?

Regards,

Sai

Created on 10-26-2016 07:10 PM - edited 08-18-2019 05:54 AM


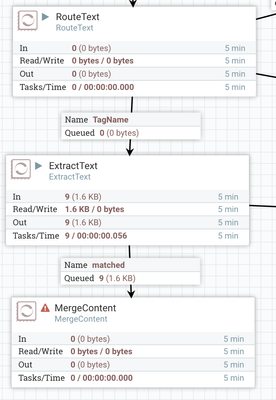

I agree that you may still need to split your very large incoming FlowFile into smaller FlowFiles to better manage heap memory usage, but you should be able to use RouteText and ExtractText as follows to accomplish what you want:

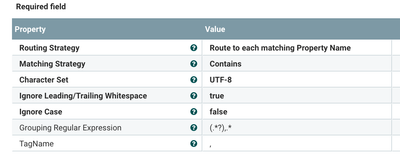

RouteText configured as follows:

All grouped lines will be routed to the "TagName" relationship as a new FlowFile.

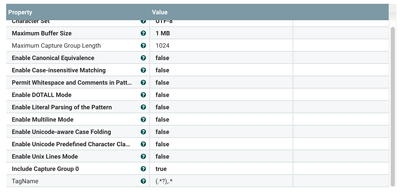

They feed into an ExtractText configured as follows:

This will extract the TagName as an attribute on the FlowFile, which you can then use as the Correlation Attribute Name in the MergeContent processor that follows.

Thanks,

Matt
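The routing, extraction, and merge steps in the answer above can be modeled in a few lines of plain Python to show why the correlation attribute matters: one tag's lines may be spread across several upstream splits, and MergeContent reunites them by attribute value. This is a toy model, not NiFi code; the regex `^([^,]+),` (capture everything before the first comma) is an assumption standing in for the actual RouteText and ExtractText settings shown in the screenshots.

```python
import re

# Assumption: capture the Tagname column (everything before the first comma).
TAG_REGEX = re.compile(r"^([^,]+),")

def route_text_grouped(lines):
    """Model of grouped routing: one new 'FlowFile' per distinct captured
    group within a single input FlowFile."""
    groups = {}
    for line in lines:
        m = TAG_REGEX.match(line)
        if m:
            groups.setdefault(m.group(1), []).append(line)
    return [{"attributes": {}, "content": grouped} for grouped in groups.values()]

def extract_text(flowfile):
    """Model of ExtractText: copy the tag from the content into an attribute."""
    tag = TAG_REGEX.match(flowfile["content"][0]).group(1)
    flowfile["attributes"]["TagName"] = tag
    return flowfile

def merge_content(flowfiles, correlation_attribute="TagName"):
    """Model of binning by a correlation attribute: fragments with the same
    attribute value end up in the same merged output."""
    bins = {}
    for ff in flowfiles:
        key = ff["attributes"][correlation_attribute]
        bins.setdefault(key, []).extend(ff["content"])
    return bins

def pipeline(splits):
    """splits: list of line lists, e.g. what an upstream split step produced."""
    routed = [ff for split in splits for ff in route_text_grouped(split)]
    return merge_content([extract_text(ff) for ff in routed])

sample = ["ABC04.A,t1,627\n", "ABC04.A,t2,628\n", "ABC05.B,t3,12\n",
          "ABC05.B,t4,4\n", "ABC04.C,t5,3979\n"]
print(pipeline([sample[:3], sample[3:]]))  # ABC05.B spans both splits
```

This model merges everything at once and ignores MergeContent's bin thresholds (for example Max Bin Age), which decide when a real bin is actually emitted.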

Created on 10-26-2016 09:56 PM - edited 08-18-2019 05:54 AM


Thanks a lot to both of you.

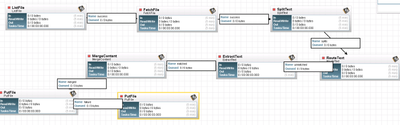

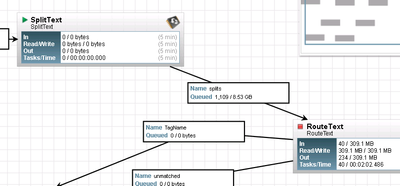

I got it to work with a smaller file, then tried a 10 GB file and, as expected, ran into performance issues.

I'm trying to see on which processors I can set "Concurrent Tasks" to improve performance.

The SplitText splitting into 100,000-line chunks took nearly 8 minutes. Can I multi-thread it? Is it thread-aware?

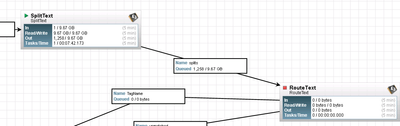

SplitText, which took 7.42 minutes.

I took this screenshot after 10 minutes.

But when I tried 4 concurrent tasks on RouteText, it routed a lot of data to the Original relationship, which I found through data provenance.

Any advice? In reality we will get 100 GB files; I was trying to prove the concept with a 10 GB file.

Regards,

Sai
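A rough back-of-the-envelope estimate from the numbers in the post above (10 GB split in about 7.42 minutes), assuming, as a simplification rather than a measurement, that split time grows linearly with file size and that files are processed one after another. The 18-file count is the one mentioned later in this thread.

```python
def extrapolate_minutes(observed_minutes, observed_gb, target_gb, n_files=1):
    """Estimate total minutes for n_files of target_gb each.

    Assumes time scales linearly with size and files run sequentially; both
    are simplifications, not measured behavior.
    """
    gb_per_minute = observed_gb / observed_minutes
    return n_files * target_gb / gb_per_minute

# 10 GB took about 7.42 minutes in the SplitText step reported above.
per_file = extrapolate_minutes(7.42, 10, 100)
all_files = extrapolate_minutes(7.42, 10, 100, n_files=18)
print(f"one 100 GB file: ~{per_file:.1f} min")
print(f"18 such files: ~{all_files:.1f} min (~{all_files / 60:.1f} h)")
```

Under those assumptions the split step alone would take roughly 74 minutes per 100 GB file and about 22 hours for all 18, which is why breaking the work into parallelizable pieces matters here.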

Created 10-27-2016 02:19 PM


The SplitText will always route the incoming FlowFiles to the original relationship. What the SplitText processor is really doing is producing a bunch of new FlowFiles from a single "original" FlowFile. Once all the splits have been created, the original, un-split FlowFile is routed to the "original" relationship. Most often that relationship is auto-terminated, because users have no use for the original FlowFile after the splits are created.
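A toy Python model of the contract described above (not NiFi code; the dictionary keys only mirror the "splits" and "original" relationship names): every input yields its split pieces plus one untouched copy of itself, so seeing data on "original" does not mean anything was left unsplit.

```python
def split_text(content, line_split_count):
    """Toy model of a line-based split step's outputs.

    Each input produces zero or more pieces on "splits" plus the unchanged
    input on "original".
    """
    lines = content.splitlines(keepends=True)
    splits = [
        "".join(lines[i:i + line_split_count])
        for i in range(0, len(lines), line_split_count)
    ]
    return {"splits": splits, "original": [content]}

result = split_text("a,1\nb,2\nc,3\n", line_split_count=2)
print(result["splits"])    # the new pieces
print(result["original"])  # the untouched input, normally auto-terminated
```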

Created 10-27-2016 01:07 AM


You've got a single SplitText in your example. You might get better performance from multiple SplitText processors that reduce the size incrementally: 100,000 lines per split may be too many, so perhaps split into 10,000-line chunks first, then 100 (or 1, etc.). If FetchFile produces a single file, you won't see any improvement from multiple concurrent tasks unless you break the huge file down as described. With such a large single input file, you may also hit a bottleneck at some point due to the sheer volume of data moving through the pipeline, and multi-threading a single SplitText will not help when there is only one input. With multiple SplitTexts (and the downstream ones configured with multiple concurrent tasks), you may see some improvement in throughput.
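A sketch of the incremental splitting suggested above, in plain Python rather than NiFi: a coarse first pass into 10,000-line chunks, then each coarse chunk split into 100-line pieces independently. The point is the structure: after the first stage there are several independent inputs, which is what gives multiple concurrent tasks on a downstream splitter something to work on. The thread pool only stands in for NiFi's concurrent tasks; for this CPU-bound toy, Python threads will not actually speed anything up because of the GIL.

```python
from concurrent.futures import ThreadPoolExecutor

def chunk(lines, size):
    """Split a list of lines into consecutive pieces of at most `size` lines."""
    return [lines[i:i + size] for i in range(0, len(lines), size)]

def two_stage_split(lines, first_stage=10_000, second_stage=100, workers=4):
    """Coarse split first, then split each coarse piece independently.

    Stage 1 runs once over the whole input (like a single upstream SplitText).
    Stage 2 pieces are independent, so they can be handed to several workers
    (standing in for concurrent tasks on a downstream SplitText).
    """
    coarse = chunk(lines, first_stage)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        fine_groups = list(pool.map(lambda piece: chunk(piece, second_stage), coarse))
    # pool.map preserves input order, so the overall line order is kept.
    return [fine for group in fine_groups for fine in group]

lines = [f"TAG{i // 5000},{i}\n" for i in range(25_050)]
pieces = two_stage_split(lines)
print(len(pieces), "pieces of at most 100 lines")
```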

Created 10-27-2016 09:44 PM


So in any case, does the file need to be read into memory before it is split, either by lines or by bytes?

I was hoping the next processor would start working once it received the first split, but in my case it waited 8 minutes until the 10 GB file had been split into 1,200+ splits. My files are about 100 GB each (I have 18 such files), so I'm wary of running the whole flow on all of them. Will I have to run each file one by one?
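On the memory question: splitting by lines does not, in principle, require holding the whole file in memory. The generator below (plain Python, not NiFi; the function name is hypothetical) reads only as many lines as one split needs and hands that split to the consumer before reading further. Whether a particular NiFi processor releases splits downstream incrementally is a separate question; in the run described above, the next processor did not start until all 1,200+ splits existed.

```python
import io
from itertools import islice

def stream_splits(lines, lines_per_split):
    """Yield splits of `lines_per_split` lines each, lazily.

    `lines` can be any iterable of lines (an open text file, io.StringIO, a
    generator). Each split is built only from the lines it contains, so at most
    one split is held in memory and the consumer can start on the first split
    right away.
    """
    lines = iter(lines)  # make sure repeated islice calls continue, not restart
    while True:
        chunk = list(islice(lines, lines_per_split))  # pulls at most this many lines
        if not chunk:
            return
        yield "".join(chunk)

source = io.StringIO("".join(f"TAG,{i}\n" for i in range(250)))
for n, split in enumerate(stream_splits(source, 100)):
    print(n, split.count("\n"), "lines")
```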
