Zeppelin Server is not able to start

- Labels: Apache Zeppelin

Created on 10-11-2017 04:35 AM - edited 08-17-2019 08:58 PM

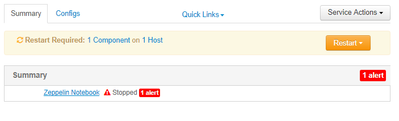

Hi, I am having trouble starting the Zeppelin server after making edits in the Zeppelin home directory.

Below are the Zeppelin errors that I found.

From /var/log/zeppelin

stderr: /var/lib/ambari-agent/data/errors-680.txt

Traceback (most recent call last):
  File "/var/lib/ambari-agent/cache/common-services/ZEPPELIN/0.6.0.2.5/package/scripts/master.py", line 330, in <module>
    Master().execute()
  File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 280, in execute
    method(env)
  File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 720, in restart
    self.start(env, upgrade_type=upgrade_type)
  File "/var/lib/ambari-agent/cache/common-services/ZEPPELIN/0.6.0.2.5/package/scripts/master.py", line 187, in start
    + params.zeppelin_log_file, user=params.zeppelin_user)
  File "/usr/lib/python2.6/site-packages/resource_management/core/base.py", line 155, in __init__
    self.env.run()
  File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 160, in run
    self.run_action(resource, action)
  File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 124, in run_action
    provider_action()
  File "/usr/lib/python2.6/site-packages/resource_management/core/providers/system.py", line 273, in action_run
    tries=self.resource.tries, try_sleep=self.resource.try_sleep)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 70, in inner
    result = function(command, **kwargs)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 92, in checked_call
    tries=tries, try_sleep=try_sleep)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 140, in _call_wrapper
    result = _call(command, **kwargs_copy)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 293, in _call
    raise ExecutionFailed(err_msg, code, out, err)
resource_management.core.exceptions.ExecutionFailed: Execution of '/usr/hdp/current/zeppelin-server/bin/zeppelin-daemon.sh restart >> /var/log/zeppelin/zeppelin-setup.log' returned 1.

stdout: /var/lib/ambari-agent/data/output-680.txt

2017-10-10 23:42:04,513 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.5.3.0-37

2017-10-10 23:42:04,515 - Checking if need to create versioned conf dir /etc/hadoop/2.5.3.0-37/0

2017-10-10 23:42:04,517 - call[('ambari-python-wrap', u'/usr/bin/conf-select', 'create-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.3.0-37', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2017-10-10 23:42:04,557 - call returned (1, '/etc/hadoop/2.5.3.0-37/0 exist already', '')

2017-10-10 23:42:04,558 - checked_call[('ambari-python-wrap', u'/usr/bin/conf-select', 'set-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.3.0-37', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False}

2017-10-10 23:42:04,587 - checked_call returned (0, '')

2017-10-10 23:42:04,588 - Ensuring that hadoop has the correct symlink structure

2017-10-10 23:42:04,588 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2017-10-10 23:42:04,800 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.5.3.0-37

2017-10-10 23:42:04,802 - Checking if need to create versioned conf dir /etc/hadoop/2.5.3.0-37/0

2017-10-10 23:42:04,803 - call[('ambari-python-wrap', u'/usr/bin/conf-select', 'create-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.3.0-37', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2017-10-10 23:42:04,844 - call returned (1, '/etc/hadoop/2.5.3.0-37/0 exist already', '')

2017-10-10 23:42:04,844 - checked_call[('ambari-python-wrap', u'/usr/bin/conf-select', 'set-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.3.0-37', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False}

2017-10-10 23:42:04,877 - checked_call returned (0, '')

2017-10-10 23:42:04,878 - Ensuring that hadoop has the correct symlink structure

2017-10-10 23:42:04,878 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2017-10-10 23:42:04,880 - Group['livy'] {}

2017-10-10 23:42:04,882 - Group['elasticsearch'] {}

2017-10-10 23:42:04,883 - Group['spark'] {}

2017-10-10 23:42:04,883 - Group['zeppelin'] {}

2017-10-10 23:42:04,883 - Group['hadoop'] {}

2017-10-10 23:42:04,884 - Group['kibana'] {}

2017-10-10 23:42:04,884 - Group['users'] {}

2017-10-10 23:42:04,884 - User['hive'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-10-10 23:42:04,886 - User['storm'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-10-10 23:42:04,887 - User['zookeeper'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-10-10 23:42:04,888 - User['infra-solr'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-10-10 23:42:04,889 - User['atlas'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-10-10 23:42:04,890 - User['ams'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-10-10 23:42:04,892 - User['tez'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users']}

2017-10-10 23:42:04,893 - User['zeppelin'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-10-10 23:42:04,894 - User['logsearch'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-10-10 23:42:04,895 - User['livy'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-10-10 23:42:04,896 - User['elasticsearch'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-10-10 23:42:04,897 - User['spark'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-10-10 23:42:04,898 - User['ambari-qa'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users']}

2017-10-10 23:42:04,899 - User['kafka'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-10-10 23:42:04,900 - User['hdfs'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-10-10 23:42:04,901 - User['yarn'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-10-10 23:42:04,902 - User['kibana'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-10-10 23:42:04,903 - User['mapred'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-10-10 23:42:04,904 - User['hbase'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-10-10 23:42:04,906 - User['hcat'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2017-10-10 23:42:04,907 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2017-10-10 23:42:04,909 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}

2017-10-10 23:42:04,916 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa'] due to not_if

2017-10-10 23:42:04,916 - Directory['/tmp/hbase-hbase'] {'owner': 'hbase', 'create_parents': True, 'mode': 0775, 'cd_access': 'a'}

2017-10-10 23:42:04,916 - Creating directory Directory['/tmp/hbase-hbase'] since it doesn't exist.

2017-10-10 23:42:04,917 - Changing owner for /tmp/hbase-hbase from 0 to hbase

2017-10-10 23:42:04,917 - Changing permission for /tmp/hbase-hbase from 755 to 775

2017-10-10 23:42:04,918 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2017-10-10 23:42:04,919 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase'] {'not_if': '(test $(id -u hbase) -gt 1000) || (false)'}

2017-10-10 23:42:04,926 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase'] due to not_if

2017-10-10 23:42:04,927 - Group['hdfs'] {}

2017-10-10 23:42:04,928 - User['hdfs'] {'fetch_nonlocal_groups': True, 'groups': [u'hadoop', u'hdfs']}

2017-10-10 23:42:04,929 - FS Type:

2017-10-10 23:42:04,929 - Directory['/etc/hadoop'] {'mode': 0755}

2017-10-10 23:42:04,952 - File['/usr/hdp/current/hadoop-client/conf/hadoop-env.sh'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}

2017-10-10 23:42:04,953 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 01777}

2017-10-10 23:42:04,974 - Execute[('setenforce', '0')] {'not_if': '(! which getenforce ) || (which getenforce && getenforce | grep -q Disabled)', 'sudo': True, 'only_if': 'test -f /selinux/enforce'}

2017-10-10 23:42:04,983 - Skipping Execute[('setenforce', '0')] due to not_if

2017-10-10 23:42:04,984 - Directory['/var/log/hadoop'] {'owner': 'root', 'create_parents': True, 'group': 'hadoop', 'mode': 0775, 'cd_access': 'a'}

2017-10-10 23:42:04,986 - Directory['/var/run/hadoop'] {'owner': 'root', 'create_parents': True, 'group': 'root', 'cd_access': 'a'}

2017-10-10 23:42:04,986 - Directory['/tmp/hadoop-hdfs'] {'owner': 'hdfs', 'create_parents': True, 'cd_access': 'a'}

2017-10-10 23:42:04,986 - Creating directory Directory['/tmp/hadoop-hdfs'] since it doesn't exist.

2017-10-10 23:42:04,987 - Changing owner for /tmp/hadoop-hdfs from 0 to hdfs

2017-10-10 23:42:04,991 - File['/usr/hdp/current/hadoop-client/conf/commons-logging.properties'] {'content': Template('commons-logging.properties.j2'), 'owner': 'hdfs'}

2017-10-10 23:42:04,992 - File['/usr/hdp/current/hadoop-client/conf/health_check'] {'content': Template('health_check.j2'), 'owner': 'hdfs'}

2017-10-10 23:42:04,993 - File['/usr/hdp/current/hadoop-client/conf/log4j.properties'] {'content': ..., 'owner': 'hdfs', 'group': 'hadoop', 'mode': 0644}

2017-10-10 23:42:05,003 - File['/usr/hdp/current/hadoop-client/conf/hadoop-metrics2.properties'] {'content': Template('hadoop-metrics2.properties.j2'), 'owner': 'hdfs', 'group': 'hadoop'}

2017-10-10 23:42:05,004 - File['/usr/hdp/current/hadoop-client/conf/task-log4j.properties'] {'content': StaticFile('task-log4j.properties'), 'mode': 0755}

2017-10-10 23:42:05,005 - File['/usr/hdp/current/hadoop-client/conf/configuration.xsl'] {'owner': 'hdfs', 'group': 'hadoop'}

2017-10-10 23:42:05,008 - File['/etc/hadoop/conf/topology_mappings.data'] {'owner': 'hdfs', 'content': Template('topology_mappings.data.j2'), 'only_if': 'test -d /etc/hadoop/conf', 'group': 'hadoop'}

2017-10-10 23:42:05,013 - File['/etc/hadoop/conf/topology_script.py'] {'content': StaticFile('topology_script.py'), 'only_if': 'test -d /etc/hadoop/conf', 'mode': 0755}

2017-10-10 23:42:05,329 - call['ambari-python-wrap /usr/bin/hdp-select status spark-client'] {'timeout': 20}

2017-10-10 23:42:05,349 - call returned (0, 'spark-client - 2.5.3.0-37')

2017-10-10 23:42:05,358 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.5.3.0-37

2017-10-10 23:42:05,361 - Checking if need to create versioned conf dir /etc/hadoop/2.5.3.0-37/0

2017-10-10 23:42:05,363 - call[('ambari-python-wrap', u'/usr/bin/conf-select', 'create-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.3.0-37', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2017-10-10 23:42:05,402 - call returned (1, '/etc/hadoop/2.5.3.0-37/0 exist already', '')

2017-10-10 23:42:05,402 - checked_call[('ambari-python-wrap', u'/usr/bin/conf-select', 'set-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.3.0-37', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False}

2017-10-10 23:42:05,445 - checked_call returned (0, '')

2017-10-10 23:42:05,446 - Ensuring that hadoop has the correct symlink structure

2017-10-10 23:42:05,446 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2017-10-10 23:42:05,447 - Directory['/var/log/zeppelin'] {'owner': 'zeppelin', 'group': 'zeppelin', 'create_parents': True, 'mode': 0755, 'cd_access': 'a'}

2017-10-10 23:42:05,451 - Execute['/usr/hdp/current/zeppelin-server/bin/zeppelin-daemon.sh stop >> /var/log/zeppelin/zeppelin-setup.log'] {'user': 'zeppelin'}

2017-10-10 23:42:05,670 - Pid file is empty or does not exist

2017-10-10 23:42:05,672 - Directory['/var/log/zeppelin'] {'owner': 'zeppelin', 'group': 'zeppelin', 'create_parents': True, 'mode': 0755, 'cd_access': 'a'}

2017-10-10 23:42:05,673 - XmlConfig['zeppelin-site.xml'] {'owner': 'zeppelin', 'group': 'zeppelin', 'conf_dir': '/etc/zeppelin/conf', 'configurations': ...}

2017-10-10 23:42:05,691 - Generating config: /etc/zeppelin/conf/zeppelin-site.xml

2017-10-10 23:42:05,691 - File['/etc/zeppelin/conf/zeppelin-site.xml'] {'owner': 'zeppelin', 'content': InlineTemplate(...), 'group': 'zeppelin', 'mode': None, 'encoding': 'UTF-8'}

2017-10-10 23:42:05,716 - File['/etc/zeppelin/conf/zeppelin-env.sh'] {'owner': 'zeppelin', 'content': InlineTemplate(...), 'group': 'zeppelin'}

2017-10-10 23:42:05,718 - File['/etc/zeppelin/conf/shiro.ini'] {'owner': 'zeppelin', 'content': InlineTemplate(...), 'group': 'zeppelin'}

2017-10-10 23:42:05,719 - File['/etc/zeppelin/conf/log4j.properties'] {'owner': 'zeppelin', 'content': ..., 'group': 'zeppelin'}

2017-10-10 23:42:05,719 - File['/etc/zeppelin/conf/hive-site.xml'] {'owner': 'zeppelin', 'content': StaticFile('/etc/spark/conf/hive-site.xml'), 'group': 'zeppelin'}

2017-10-10 23:42:05,721 - File['/etc/zeppelin/conf/hbase-site.xml'] {'owner': 'zeppelin', 'content': StaticFile('/etc/hbase/conf/hbase-site.xml'), 'group': 'zeppelin'}

2017-10-10 23:42:05,722 - Execute[('chown', '-R', u'zeppelin:zeppelin', '/etc/zeppelin')] {'sudo': True}

2017-10-10 23:42:05,731 - Execute[('chown', '-R', u'zeppelin:zeppelin', u'/usr/hdp/current/zeppelin-server/notebook')] {'sudo': True}

2017-10-10 23:42:05,741 - HdfsResource['/user/zeppelin'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/current/hadoop-client/bin', 'keytab': [EMPTY], 'default_fs': 'hdfs://bigdata.ais.utm.my:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': [EMPTY], 'user': 'hdfs', 'owner': 'zeppelin', 'recursive_chown': True, 'hadoop_conf_dir': '/usr/hdp/current/hadoop-client/conf', 'type': 'directory', 'action': ['create_on_execute'], 'recursive_chmod': True}

2017-10-10 23:42:05,743 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET '"'"'http://myhostname:50070/webhdfs/v1/user/zeppelin?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpuzyGbS 2>/tmp/tmpS7DTt5''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:05,876 - call returned (0, '')

2017-10-10 23:42:05,877 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X PUT '"'"'http://myhostname:50070/webhdfs/v1/user/zeppelin?op=SETOWNER&user.name=hdfs&owner=zeppelin&group='"'"' 1>/tmp/tmpwHTYSF 2>/tmp/tmpBIImwQ''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:06,038 - call returned (0, '')

2017-10-10 23:42:06,039 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET '"'"'http://myhostname:50070/webhdfs/v1/user/zeppelin?op=GETCONTENTSUMMARY&user.name=hdfs'"'"' 1>/tmp/tmpMfDCJV 2>/tmp/tmp5npAnl''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:06,162 - call returned (0, '')

2017-10-10 23:42:06,164 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET '"'"'http://myhostname:50070/webhdfs/v1/user/zeppelin?op=LISTSTATUS&user.name=hdfs'"'"' 1>/tmp/tmpY4wRD9 2>/tmp/tmpuWuB9S''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:06,318 - call returned (0, '')

2017-10-10 23:42:06,321 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET '"'"'http://myhostname:50070/webhdfs/v1/user/zeppelin/.Trash?op=LISTSTATUS&user.name=hdfs'"'"' 1>/tmp/tmpxpXkUl 2>/tmp/tmpVyw8B9''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:06,500 - call returned (0, '')

2017-10-10 23:42:06,502 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET '"'"'http://myhostname:50070/webhdfs/v1/user/zeppelin/.sparkStaging?op=LISTSTATUS&user.name=hdfs'"'"' 1>/tmp/tmpNpCBGb 2>/tmp/tmp9y6w1H''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:06,662 - call returned (0, '')

2017-10-10 23:42:06,663 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET '"'"'http://myhostname:50070/webhdfs/v1/user/zeppelin/.sparkStaging/application_1505703113454_0006?op=LISTSTATUS&user.name=hdfs'"'"' 1>/tmp/tmp2vVPKb 2>/tmp/tmpg95wZl''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:06,804 - call returned (0, '')

2017-10-10 23:42:06,807 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET '"'"'http://myhostname:50070/webhdfs/v1/user/zeppelin/.sparkStaging/application_1505703113454_0011?op=LISTSTATUS&user.name=hdfs'"'"' 1>/tmp/tmpCH4cnD 2>/tmp/tmpLwkU82''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:06,987 - call returned (0, '')

2017-10-10 23:42:06,990 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET '"'"'http://myhostname:50070/webhdfs/v1/user/zeppelin/test?op=LISTSTATUS&user.name=hdfs'"'"' 1>/tmp/tmp92_TDl 2>/tmp/tmp2fd_45''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:07,150 - call returned (0, '')

2017-10-10 23:42:07,152 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X PUT '"'"'http://myhostname:50070/webhdfs/v1/user/zeppelin/.Trash?op=SETOWNER&user.name=hdfs&owner=zeppelin&group='"'"' 1>/tmp/tmpdcZrlC 2>/tmp/tmpkUy4QD''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:07,328 - call returned (0, '')

2017-10-10 23:42:07,329 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X PUT '"'"'http://bigdata.ais.utm.my:50070/webhdfs/v1/user/zeppelin/.sparkStaging?op=SETOWNER&user.name=hdfs&owner=zeppelin&group='"'"' 1>/tmp/tmpULFoCS 2>/tmp/tmpnDoNvY''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:07,507 - call returned (0, '')

2017-10-10 23:42:07,509 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X PUT '"'"'http://myhostname:50070/webhdfs/v1/user/zeppelin/.sparkStaging/application_1505703113454_0006?op=SETOWNER&user.name=hdfs&owner=zeppelin&group='"'"' 1>/tmp/tmppKonyG 2>/tmp/tmpGNruG_''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:07,681 - call returned (0, '')

2017-10-10 23:42:07,683 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X PUT '"'"'http://myhostname:50070/webhdfs/v1/user/zeppelin/.sparkStaging/application_1505703113454_0006/__spark_conf__2379760639591875615.zip?op=SETOWNER&user.name=hdfs&owner=zeppelin&group='"'"' 1>/tmp/tmpVYOcwR 2>/tmp/tmpaWfLft''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:07,877 - call returned (0, '')

2017-10-10 23:42:07,879 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X PUT '"'"'http://myhostname:50070/webhdfs/v1/user/zeppelin/.sparkStaging/application_1505703113454_0006/py4j-0.9-src.zip?op=SETOWNER&user.name=hdfs&owner=zeppelin&group='"'"' 1>/tmp/tmpyhEcHT 2>/tmp/tmpaDfPbE''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:08,056 - call returned (0, '')

2017-10-10 23:42:08,058 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X PUT '"'"'http://myhostname:50070/webhdfs/v1/user/zeppelin/.sparkStaging/application_1505703113454_0006/pyspark.zip?op=SETOWNER&user.name=hdfs&owner=zeppelin&group='"'"' 1>/tmp/tmphgxYKG 2>/tmp/tmp4VKkoQ''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:08,241 - call returned (0, '')

2017-10-10 23:42:08,243 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X PUT '"'"'http://myhostname:50070/webhdfs/v1/user/zeppelin/.sparkStaging/application_1505703113454_0011?op=SETOWNER&user.name=hdfs&owner=zeppelin&group='"'"' 1>/tmp/tmpqQhioW 2>/tmp/tmppr5_uT''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:08,415 - call returned (0, '')

2017-10-10 23:42:08,417 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X PUT '"'"'http://myhostname:50070/webhdfs/v1/user/zeppelin/.sparkStaging/application_1505703113454_0011/__spark_conf__8077160833487577672.zip?op=SETOWNER&user.name=hdfs&owner=zeppelin&group='"'"' 1>/tmp/tmpiTVJ2d 2>/tmp/tmpXbCjLv''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:08,589 - call returned (0, '')

2017-10-10 23:42:08,591 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X PUT '"'"'http://myhostname:50070/webhdfs/v1/user/zeppelin/.sparkStaging/application_1505703113454_0011/py4j-0.9-src.zip?op=SETOWNER&user.name=hdfs&owner=zeppelin&group='"'"' 1>/tmp/tmp93uvDP 2>/tmp/tmpDguuGb''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:08,775 - call returned (0, '')

2017-10-10 23:42:08,777 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X PUT '"'"'http://myhostname:50070/webhdfs/v1/user/zeppelin/.sparkStaging/application_1505703113454_0011/pyspark.zip?op=SETOWNER&user.name=hdfs&owner=zeppelin&group='"'"' 1>/tmp/tmpQJ4JzG 2>/tmp/tmpnxXSi3''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:08,954 - call returned (0, '')

2017-10-10 23:42:08,956 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X PUT '"'"'http://hostname:50070/webhdfs/v1/user/zeppelin/test?op=SETOWNER&user.name=hdfs&owner=zeppelin&group='"'"' 1>/tmp/tmpPso5rr 2>/tmp/tmpPxP2vj''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:09,151 - call returned (0, '')

2017-10-10 23:42:09,153 - HdfsResource['/user/zeppelin/test'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/current/hadoop-client/bin', 'keytab': [EMPTY], 'default_fs': 'hdfs://bigdata.ais.utm.my:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': [EMPTY], 'user': 'hdfs', 'owner': 'zeppelin', 'recursive_chown': True, 'hadoop_conf_dir': '/usr/hdp/current/hadoop-client/conf', 'type': 'directory', 'action': ['create_on_execute'], 'recursive_chmod': True}

2017-10-10 23:42:09,154 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET '"'"'http://hostname:50070/webhdfs/v1/user/zeppelin/test?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpGtjd97 2>/tmp/tmpkA5q6U''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:09,347 - call returned (0, '')

2017-10-10 23:42:09,350 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X PUT '"'"'http://hostname:50070/webhdfs/v1/user/zeppelin/test?op=SETOWNER&user.name=hdfs&owner=zeppelin&group='"'"' 1>/tmp/tmpuxF1In 2>/tmp/tmp8DCBmB''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:09,523 - call returned (0, '')

2017-10-10 23:42:09,525 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET '"'"'http://hostname:50070/webhdfs/v1/user/zeppelin/test?op=GETCONTENTSUMMARY&user.name=hdfs'"'"' 1>/tmp/tmpuHexKw 2>/tmp/tmp9z6X5D''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:09,720 - call returned (0, '')

2017-10-10 23:42:09,721 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET '"'"'http://hostname:50070/webhdfs/v1/user/zeppelin/test?op=LISTSTATUS&user.name=hdfs'"'"' 1>/tmp/tmpQf3cSh 2>/tmp/tmpY09VhK''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:09,910 - call returned (0, '')

2017-10-10 23:42:09,912 - HdfsResource['/apps/zeppelin'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/current/hadoop-client/bin', 'keytab': [EMPTY], 'default_fs': 'hdfs://bigdata.ais.utm.my:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': [EMPTY], 'user': 'hdfs', 'owner': 'zeppelin', 'recursive_chown': True, 'hadoop_conf_dir': '/usr/hdp/current/hadoop-client/conf', 'type': 'directory', 'action': ['create_on_execute'], 'recursive_chmod': True}

2017-10-10 23:42:09,914 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET '"'"'http://hostname:50070/webhdfs/v1/apps/zeppelin?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpSEUtOb 2>/tmp/tmpm2jnhY''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:10,083 - call returned (0, '')

2017-10-10 23:42:10,086 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X PUT '"'"'http://hostname:50070/webhdfs/v1/apps/zeppelin?op=SETOWNER&user.name=hdfs&owner=zeppelin&group='"'"' 1>/tmp/tmpwyKh8n 2>/tmp/tmpyDNDLY''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:10,295 - call returned (0, '')

2017-10-10 23:42:10,297 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET '"'"'http://bigdata.ais.utm.my:50070/webhdfs/v1/apps/zeppelin?op=GETCONTENTSUMMARY&user.name=hdfs'"'"' 1>/tmp/tmpmPec8L 2>/tmp/tmpZa2Epj''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:10,466 - call returned (0, '')

2017-10-10 23:42:10,468 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET '"'"'http://hostname:50070/webhdfs/v1/apps/zeppelin?op=LISTSTATUS&user.name=hdfs'"'"' 1>/tmp/tmplFQm2Z 2>/tmp/tmpCKK9Hy''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:10,644 - call returned (0, '')

2017-10-10 23:42:10,646 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X PUT '"'"'http://bigdata.ais.utm.my:50070/webhdfs/v1/apps/zeppelin/zeppelin-spark-dependencies-0.6.0.2.5.3.0-37.jar?op=SETOWNER&user.name=hdfs&owner=zeppelin&group='"'"' 1>/tmp/tmpg6bT27 2>/tmp/tmptE7eZ_''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:10,818 - call returned (0, '')

2017-10-10 23:42:10,819 - HdfsResource['/apps/zeppelin/zeppelin-spark-dependencies-0.6.0.2.5.3.0-37.jar'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/current/hadoop-client/bin', 'keytab': [EMPTY], 'source': '/usr/hdp/current/zeppelin-server/interpreter/spark/dep/zeppelin-spark-dependencies-0.6.0.2.5.3.0-37.jar', 'default_fs': 'hdfs://hostname:8020', 'replace_existing_files': True, 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': [EMPTY], 'user': 'hdfs', 'owner': 'zeppelin', 'group': 'zeppelin', 'hadoop_conf_dir': '/usr/hdp/current/hadoop-client/conf', 'type': 'file', 'action': ['create_on_execute'], 'mode': 0444}

2017-10-10 23:42:10,820 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET '"'"'http://hostname:50070/webhdfs/v1/apps/zeppelin/zeppelin-spark-dependencies-0.6.0.2.5.3.0-37.jar?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmp7dJv6q 2>/tmp/tmpEtGvUN''] {'logoutput': None, 'quiet': False}

2017-10-10 23:42:10,973 - call returned (0, '')

2017-10-10 23:42:10,973 - DFS file /apps/zeppelin/zeppelin-spark-dependencies-0.6.0.2.5.3.0-37.jar is identical to /usr/hdp/current/zeppelin-server/interpreter/spark/dep/zeppelin-spark-dependencies-0.6.0.2.5.3.0-37.jar, skipping the copying

2017-10-10 23:42:10,974 - HdfsResource[None] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/current/hadoop-client/bin', 'keytab': [EMPTY], 'default_fs': 'hdfs://bigdata.ais.utm.my:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': [EMPTY], 'user': 'hdfs', 'action': ['execute'], 'hadoop_conf_dir': '/usr/hdp/current/hadoop-client/conf'}

2017-10-10 23:42:10,977 - File['/etc/zeppelin/conf/interpreter.json'] {'content': ..., 'owner': 'zeppelin', 'group': 'zeppelin'}

2017-10-10 23:42:10,977 - Writing File['/etc/zeppelin/conf/interpreter.json'] because contents don't match

2017-10-10 23:42:10,978 - Execute['/usr/hdp/current/zeppelin-server/bin/zeppelin-daemon.sh restart >> /var/log/zeppelin/zeppelin-setup.log'] {'user': 'zeppelin'}

Command failed after 1 tries
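For anyone reading the output above: the useful part of the stderr is the final `ExecutionFailed` message, which names the command Ambari ran and its exit code, and so points at the log file to read next. A minimal sketch for pulling those two values out of such a message follows. The function name `parse_execution_failed` and the regular expression are illustrative assumptions based on the single message in this post; they are not part of Ambari.

```python
import re

# Message shape seen in the traceback above:
#   Execution of '<command>' returned <code>.
# The pattern is an assumption derived from that one sample.
_EXEC_FAILED = re.compile(r"Execution of '(?P<command>.+?)' returned (?P<code>\d+)")


def parse_execution_failed(message):
    """Return (command, exit_code) from an ExecutionFailed message, or None."""
    match = _EXEC_FAILED.search(message)
    if match is None:
        return None
    return match.group("command"), int(match.group("code"))


if __name__ == "__main__":
    sample = (
        "resource_management.core.exceptions.ExecutionFailed: Execution of "
        "'/usr/hdp/current/zeppelin-server/bin/zeppelin-daemon.sh restart "
        ">> /var/log/zeppelin/zeppelin-setup.log' returned 1."
    )
    print(parse_execution_failed(sample))
```

Here the command redirects its output to /var/log/zeppelin/zeppelin-setup.log, so that file is the next place to look.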

Created 10-11-2017 04:42 AM

Created 10-11-2017 04:51 AM

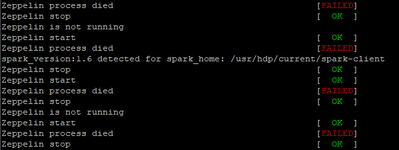

Hi Aditya. Here is the attachment. Thanks.

[me@mylaptop zeppelin]$ cat zeppelin-setup.log spark_version:1.6 detected for spark_home: /usr/hdp/current/spark-client Zeppelin start [ OK ] Zeppelin stop [ OK ] Zeppelin start [ OK ] Zeppelin stop [ OK ] Zeppelin is not running Zeppelin start [ OK ] Zeppelin stop [ OK ] Zeppelin is not running Zeppelin start [ OK ] Zeppelin stop [ OK ] Zeppelin is not running Zeppelin start [ OK ] Zeppelin stop [ OK ] Zeppelin is not running Zeppelin start [ OK ] Zeppelin stop [ OK ] Zeppelin is not running Zeppelin start [ OK ] Zeppelin stop [ OK ] Zeppelin is not running Zeppelin start [ OK ] Zeppelin stop [ OK ] Zeppelin is not running Zeppelin start [ OK ] Zeppelin stop [ OK ] Zeppelin is not running Zeppelin start [ OK ] Zeppelin is not running Zeppelin is not running Zeppelin is not running Zeppelin is not running Zeppelin is not running Zeppelin is not running Zeppelin is not running Zeppelin start [ OK ] Zeppelin process died [FAILED] spark_version:1.6 detected for spark_home: /usr/hdp/current/spark-client Zeppelin stop [ OK ] Zeppelin start [ OK ] Zeppelin process died [FAILED] Zeppelin stop [ OK ] Zeppelin is not running Zeppelin start [ OK ] Zeppelin process died [FAILED] Zeppelin stop [ OK ] Zeppelin is not running Zeppelin start [ OK ] Zeppelin process died [FAILED] Zeppelin stop [ OK ] Zeppelin is not running Zeppelin start [ OK ] Zeppelin process died [FAILED] Zeppelin stop [ OK ] Zeppelin is not running Zeppelin start [ OK ] Zeppelin process died [FAILED] Zeppelin stop [ OK ] Zeppelin is not running Zeppelin start [ OK ] Zeppelin process died [FAILED] spark_version:1.6 detected for spark_home: /usr/hdp/current/spark-client Zeppelin stop [ OK ] Zeppelin start [ OK ] Zeppelin process died [FAILED] Zeppelin stop [ OK ] Zeppelin is not running Zeppelin start [ OK ] Zeppelin process died [FAILED] Zeppelin stop [ OK ] Zeppelin is not running Zeppelin start [ OK ] Zeppelin process died [FAILED] Zeppelin stop [ OK ] Zeppelin is not running Zeppelin start [ OK ] Zeppelin 
process died [FAILED] Zeppelin stop [ OK ] Zeppelin is not running Zeppelin start [ OK ] Zeppelin process died [FAILED] Zeppelin stop [ OK ] Zeppelin is not running Zeppelin start [ OK ] Zeppelin process died [FAILED] Zeppelin stop [ OK ] Zeppelin is not running Zeppelin start [ OK ] Zeppelin process died [FAILED] Zeppelin stop [ OK ] Zeppelin is not running Zeppelin start [ OK ] Zeppelin process died [FAILED] Zeppelin stop [ OK ] Zeppelin is not running Zeppelin start [ OK ] Zeppelin process died [FAILED]

Created 10-11-2017 05:24 AM


There isn't much info in this file. There should be another log file named zeppelin-zeppelin-<hostname>-.log. Can you please attach that file?

Created 10-11-2017 06:05 AM


[me@host zeppelin]$ sudo cat zeppelin-zeppelin-hostname.log

INFO [2017-10-11 01:03:10,494] ({main} ZeppelinConfiguration.java[create]:98) - Load configuration from file:/etc/zeppelin/2.5.3.0-37/0/zeppelin-site.xml

INFO [2017-10-11 01:03:10,560] ({main} Log.java[initialized]:186) - Logging initialized @362ms

INFO [2017-10-11 01:03:10,616] ({main} ZeppelinServer.java[setupWebAppContext]:266) - ZeppelinServer Webapp path: /usr/hdp/current/zeppelin-server/webapps

INFO [2017-10-11 01:03:10,821] ({main} ZeppelinServer.java[main]:114) - Starting zeppelin server

INFO [2017-10-11 01:03:10,822] ({main} Server.java[doStart]:327) - jetty-9.2.15.v20160210

WARN [2017-10-11 01:03:10,840] ({main} WebAppContext.java[doStart]:514) - Failed startup of context o.e.j.w.WebAppContext@157632c9{/,null,null}{/usr/hdp/current/zeppelin-server/lib/zeppelin-web-0.6.0.2.5.3.0-37.war} java.lang.IllegalStateException: Failed to delete temp dir /usr/hdp/2.5.3.0-37/zeppelin/webapps at org.eclipse.jetty.webapp.WebInfConfiguration.configureTempDirectory(WebInfConfiguration.java:372) at org.eclipse.jetty.webapp.WebInfConfiguration.resolveTempDirectory(WebInfConfiguration.java:260) at org.eclipse.jetty.webapp.WebInfConfiguration.preConfigure(WebInfConfiguration.java:69) at org.eclipse.jetty.webapp.WebAppContext.preConfigure(WebAppContext.java:468) at org.eclipse.jetty.webapp.WebAppContext.doStart(WebAppContext.java:504) at org.eclipse.jetty.util.component.AbstractLifeCycle.start(AbstractLifeCycle.java:68) at org.eclipse.jetty.util.component.ContainerLifeCycle.start(ContainerLifeCycle.java:132) at org.eclipse.jetty.util.component.ContainerLifeCycle.doStart(ContainerLifeCycle.java:114) at org.eclipse.jetty.server.handler.AbstractHandler.doStart(AbstractHandler.java:61) at org.eclipse.jetty.server.handler.ContextHandlerCollection.doStart(ContextHandlerCollection.java:163) at org.eclipse.jetty.util.component.AbstractLifeCycle.start(AbstractLifeCycle.java:68) at org.eclipse.jetty.util.component.ContainerLifeCycle.start(ContainerLifeCycle.java:132) at org.eclipse.jetty.server.Server.start(Server.java:387) at org.eclipse.jetty.util.component.ContainerLifeCycle.doStart(ContainerLifeCycle.java:114) at org.eclipse.jetty.server.handler.AbstractHandler.doStart(AbstractHandler.java:61) at org.eclipse.jetty.server.Server.doStart(Server.java:354) at org.eclipse.jetty.util.component.AbstractLifeCycle.start(AbstractLifeCycle.java:68) at org.apache.zeppelin.server.ZeppelinServer.main(ZeppelinServer.java:116)

WARN [2017-10-11 01:03:10,847] ({main} AbstractLifeCycle.java[setFailed]:212) - FAILED ServerConnector@4034c28c{HTTP/1.1}{0.0.0.0:9995}: java.net.BindException: Address already in use java.net.BindException: Address already in use

at sun.nio.ch.Net.bind0(Native Method) at sun.nio.ch.Net.bind(Net.java:433) at sun.nio.ch.Net.bind(Net.java:425) at sun.nio.ch.ServerSocketChannelImpl.bind(ServerSocketChannelImpl.java:223) at sun.nio.ch.ServerSocketAdaptor.bind(ServerSocketAdaptor.java:74) at org.eclipse.jetty.server.ServerConnector.open(ServerConnector.java:321) at org.eclipse.jetty.server.AbstractNetworkConnector.doStart(AbstractNetworkConnector.java:80) at org.eclipse.jetty.server.ServerConnector.doStart(ServerConnector.java:236) at org.eclipse.jetty.util.component.AbstractLifeCycle.start(AbstractLifeCycle.java:68) at org.eclipse.jetty.server.Server.doStart(Server.java:366) at org.eclipse.jetty.util.component.AbstractLifeCycle.start(AbstractLifeCycle.java:68) at org.apache.zeppelin.server.ZeppelinServer.main(ZeppelinServer.java:116)

WARN [2017-10-11 01:03:10,847] ({main} AbstractLifeCycle.java[setFailed]:212) - FAILED org.eclipse.jetty.server.Server@14ec4505: java.net.BindException: Address already in use java.net.BindException: Address already in use

at sun.nio.ch.Net.bind0(Native Method) at sun.nio.ch.Net.bind(Net.java:433) at sun.nio.ch.Net.bind(Net.java:425) at sun.nio.ch.ServerSocketChannelImpl.bind(ServerSocketChannelImpl.java:223) at sun.nio.ch.ServerSocketAdaptor.bind(ServerSocketAdaptor.java:74) at org.eclipse.jetty.server.ServerConnector.open(ServerConnector.java:321) at org.eclipse.jetty.server.AbstractNetworkConnector.doStart(AbstractNetworkConnector.java:80) at org.eclipse.jetty.server.ServerConnector.doStart(ServerConnector.java:236) at org.eclipse.jetty.util.component.AbstractLifeCycle.start(AbstractLifeCycle.java:68) at org.eclipse.jetty.server.Server.doStart(Server.java:366) at org.eclipse.jetty.util.component.AbstractLifeCycle.start(AbstractLifeCycle.java:68) at org.apache.zeppelin.server.ZeppelinServer.main(ZeppelinServer.java:116)

ERROR [2017-10-11 01:03:10,847] ({main} ZeppelinServer.java[main]:118) - Error while running jettyServer java.net.BindException: Address already in use at sun.nio.ch.Net.bind0(Native Method) at sun.nio.ch.Net.bind(Net.java:433) at sun.nio.ch.Net.bind(Net.java:425) at sun.nio.ch.ServerSocketChannelImpl.bind(ServerSocketChannelImpl.java:223) at sun.nio.ch.ServerSocketAdaptor.bind(ServerSocketAdaptor.java:74) at org.eclipse.jetty.server.ServerConnector.open(ServerConnector.java:321) at org.eclipse.jetty.server.AbstractNetworkConnector.doStart(AbstractNetworkConnector.java:80) at org.eclipse.jetty.server.ServerConnector.doStart(ServerConnector.java:236) at org.eclipse.jetty.util.component.AbstractLifeCycle.start(AbstractLifeCycle.java:68) at org.eclipse.jetty.server.Server.doStart(Server.java:366) at org.eclipse.jetty.util.component.AbstractLifeCycle.start(AbstractLifeCycle.java:68) at org.apache.zeppelin.server.ZeppelinServer.main(ZeppelinServer.java:116)

Created 10-11-2017 06:10 AM


From the log, it looks like port 9995 is already in use by another process. Check which process is holding the port by running:

netstat -tupln | grep 9995

Sample output: tcp6 0 0 :::9995 :::* LISTEN 4683/java (4683 is the PID here)

Get the PID from the output of the above command and kill that process:

kill -9 4683

After killing the process, check whether the directory /usr/hdp/2.5.3.0-37/zeppelin/webapps exists, and remove it if it does:

rm -rf /usr/hdp/2.5.3.0-37/zeppelin/webapps

Restart Zeppelin after completing the above steps.

Thanks,

Aditya
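For anyone who wants to script the port check from the answer above, here is a minimal sketch. The helper name pid_for_port is made up for illustration, and it assumes the Linux netstat -tupln column layout shown in the sample output (local address in column 4, state in column 6, PID/program in column 7). It only prints the PID and does not kill anything by itself.

```shell
#!/bin/sh
# pid_for_port PORT
# Hypothetical helper: reads `netstat -tupln`-style lines on stdin and prints
# the PID of each process that is listening on PORT.
# Note: without root, netstat may show "-" instead of "PID/program".
pid_for_port() {
  awk -v port="$1" '
    $6 == "LISTEN" && $4 ~ (":" port "$") {
      split($7, owner, "/")   # column 7 looks like "4683/java"
      print owner[1]
    }'
}

# Example usage (left commented out so nothing is killed by accident):
#   pid=$(sudo netstat -tupln | pid_for_port 9995)
#   [ -n "$pid" ] && sudo kill -9 "$pid"
#   sudo rm -rf /usr/hdp/2.5.3.0-37/zeppelin/webapps
```

Matching on ":PORT" at the end of the local address avoids false hits that a plain grep 9995 could produce, such as a PID or another port that happens to contain the same digits.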

Created 10-03-2019 07:03 AM


This solution helped me too.

Created on 10-11-2017 06:45 AM - edited 08-17-2019 08:57 PM


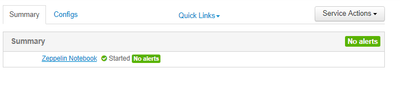

It works like a charm. Thank you so much @Aditya Sirna