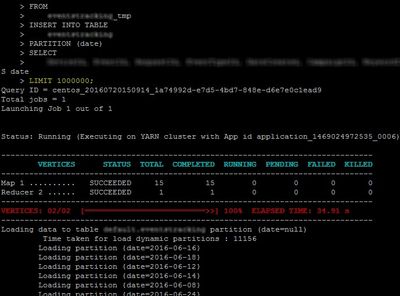

I have a query that inserts records from one table into another, approximately 1.5 million records. When I run the query, most of the map tasks fail and the job aborts, displaying several exceptions.

There is no problem at all with the data being inserted or with the table schema, because the rows that fail can be inserted successfully in an isolated query. The datanodes and YARN have plenty of space left. Connectivity is not a problem either: the cluster is hosted on AWS EC2 and the machines are in the same virtual private cloud.

But here is the strange thing: if I add a LIMIT clause to the original query, the job executes properly! The limit value is large enough to include all the records, so in practice nothing is actually limited.

Cluster Specs:

The cluster is small because it is for testing. It is composed of 3 machines: the ambari-server and 2 datanodes/nodemanagers with 8GB RAM each. YARN memory is 12.5GB, and the remaining HDFS disk space is 40GB.

Thanks in advance for your time,