Apache Spark Fine Grain Security with LLAP Test Dr...

Created on 12-18-2016 03:15 PM - edited 08-17-2019 07:15 AM

This tutorial is a companion to this article:

The article outlines the use cases and the potential business benefits of Spark fine-grained security with LLAP. This article also has a second part, which covers how to apply tag-based security for Spark using Ranger and Atlas in combination:

Tag Based (Meta Data) Security for Apache Spark with LLAP, Atlas, and Ranger

Getting Started

Install an HDP 2.5.3 Cluster via Ambari. Make sure the following components are installed:

- Hive

- Spark

- Spark Thrift Server

- Ambari Infra

- Ranger

Enable LLAP

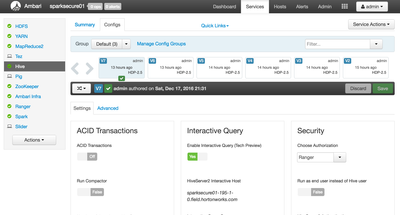

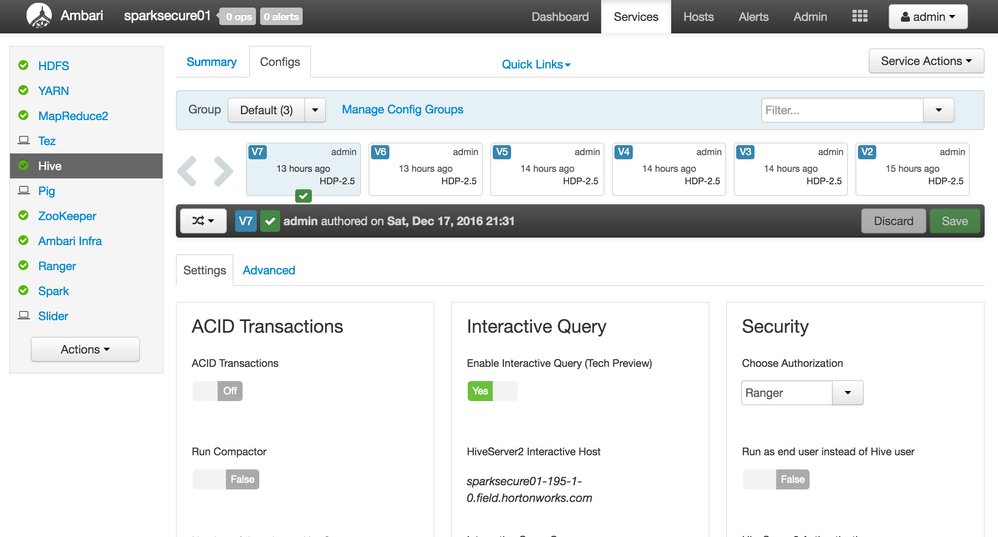

Navigate to the Hive configuration page and click Enable Interactive Query. Ambari will ask which host group to put the HiveServer2 Interactive service into. Select the host group with the most available resources.

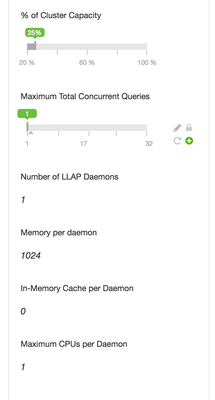

With Interactive Query enabled, Ambari will display new configuration options. These options control resource allocation for the LLAP service. LLAP is a set of long-lived daemons that provide interactive query response times and fine-grained security for Spark. Since the goal of this tutorial is to test fine-grained security for Spark, LLAP only needs a minimal allocation of resources. However, if more resources are available, feel free to increase the allocation and run some Hive queries against the Hive Interactive server to get a feel for how LLAP improves Hive's performance.

Save configurations, confirm and proceed.

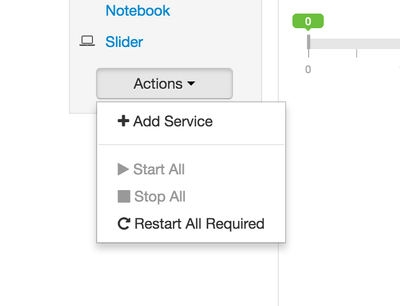

Restart all required services.

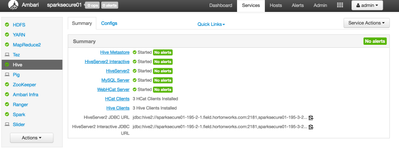

Navigate to the Hive Summary tab and ensure that HiveServer2 Interactive is started.

Download Spark-LLAP Assembly

From the command line as root:

wget -P /usr/hdp/current/spark-client/lib/ http://repo.hortonworks.com/content/repositories/releases/com/hortonworks/spark-llap/1.0.0.2.5.3.0-3...

Copy the assembly to the same location on each host where Spark may start an executor. If queues are not enabled, this likely means all hosts running a NodeManager service.

Make sure all users have read permission on that location and on the assembly file.
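The copy and permission steps can be scripted. The following is a minimal sketch, assuming passwordless SSH as root and a hypothetical host list (node1, node2); the jar path is the one used in the Spark configuration in this tutorial. With DRY_RUN=1 (the default here) it only prints the commands it would run.

```shell
#!/bin/sh
# Sketch: copy the Spark-LLAP assembly to each host and make it world-readable.
# The jar path comes from this tutorial; host names passed in are hypothetical.
JAR=/usr/hdp/current/spark-client/lib/spark-llap-1.0.0.2.5.3.0-37-assembly.jar
DRY_RUN=${DRY_RUN:-1}   # default: print commands instead of running them

# run CMD...: print the command in dry-run mode, otherwise execute it
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# distribute_assembly HOST...: copy the jar, then set read permission, per host
distribute_assembly() {
  for host in "$@"; do
    run scp "$JAR" "root@$host:$JAR"
    run ssh "root@$host" chmod 644 "$JAR"
  done
}

# Example (dry run): distribute_assembly node1 node2
```

Set DRY_RUN=0 only after checking that the printed commands match your cluster layout.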

Configure Spark for LLAP

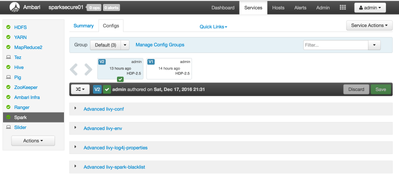

- In Ambari, navigate to the Spark service configuration tab.

- Find Custom spark-defaults, click Add Property, and add the following properties:

  - spark.sql.hive.hiveserver2.url=jdbc:hive2://{hiveserver-interactive-hostname}:10500

  - spark.jars=/usr/hdp/current/spark-client/lib/spark-llap-1.0.0.2.5.3.0-37-assembly.jar

  - spark.hadoop.hive.zookeeper.quorum={some-or-all-zookeeper-hostnames}:2181

  - spark.hadoop.hive.llap.daemon.service.hosts=@llap0

- Find Custom spark-thrift-sparkconf, click Add Property, and add the same four properties:

  - spark.sql.hive.hiveserver2.url=jdbc:hive2://{hiveserver-interactive-hostname}:10500

  - spark.jars=/usr/hdp/current/spark-client/lib/spark-llap-1.0.0.2.5.3.0-37-assembly.jar

  - spark.hadoop.hive.zookeeper.quorum={some-or-all-zookeeper-hostnames}:2181

  - spark.hadoop.hive.llap.daemon.service.hosts=@llap0

- Find Advanced spark-env and set the spark_thrift_cmd_opts attribute to --jars /usr/hdp/current/spark-client/lib/spark-llap-1.0.0.2.5.3.0-37-assembly.jar

- Save all configuration changes.

- Restart all components of Spark.

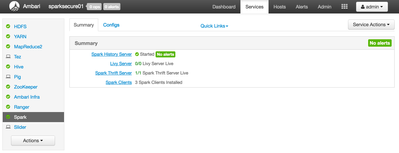

- Make sure Spark-Thrift server is started

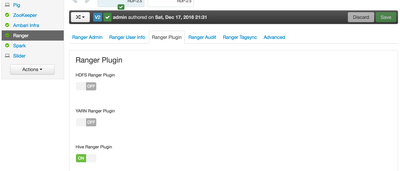
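After the restart, it can be worth confirming that the four LLAP properties actually reached the Spark configuration file on a host. The following is a minimal sketch; the function name is hypothetical, and the location of the rendered configuration file is not assumed, so pass the path of the file you want to check.

```shell
#!/bin/sh
# Sketch: list which of the four Spark-LLAP properties a conf file lacks.
# The file path is supplied by the caller; no default location is assumed.

# missing_llap_props FILE: print each required key that FILE does not define
missing_llap_props() {
  for key in \
    spark.sql.hive.hiveserver2.url \
    spark.jars \
    spark.hadoop.hive.zookeeper.quorum \
    spark.hadoop.hive.llap.daemon.service.hosts
  do
    # A key counts as defined when a line starts with it,
    # followed by "=", a space, or a tab.
    awk -v k="$key" '
      index($0, k) == 1 {
        c = substr($0, length(k) + 1, 1)
        if (c == "=" || c == " " || c == "\t") found = 1
      }
      END { exit !found }
    ' "$1" || echo "$key"
  done
}

# Example: missing_llap_props /path/to/spark-defaults.conf
```

Empty output means all four keys are present; the check does not validate the values.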

Enable Ranger for Hive

- Navigate to Ranger Service Configs tab

- Click on Ranger Plugin Tab

- Click the switch labeled "Enable Ranger Hive Plugin"

- Save Configs

- Restart All Required Services

Stage Sample Data in External Hive Table

- From Command line

    cd /tmp
    wget https://www.dropbox.com/s/r70i8j1ujx4h7j8/data.zip
    unzip data.zip
    sudo -u hdfs hadoop fs -mkdir /tmp/FactSales
    sudo -u hdfs hadoop fs -chmod 777 /tmp/FactSales
    sudo -u hdfs hadoop fs -put /tmp/data/FactSales.csv /tmp/FactSales
    beeline -u jdbc:hive2://sparksecure01-195-3-2:10000 -n hive -e "CREATE TABLE factsales_tmp (
      SalesKey int, DateKey timestamp, channelKey int, StoreKey int, ProductKey int,
      PromotionKey int, CurrencyKey int, UnitCost float, UnitPrice float,
      SalesQuantity int, ReturnQuantity int, ReturnAmount float,
      DiscountQuantity int, DiscountAmount float, TotalCost float, SalesAmount float,
      ETLLoadID int, LoadDate timestamp, UpdateDate timestamp)
      ROW FORMAT DELIMITED FIELDS TERMINATED BY ','
      STORED AS TEXTFILE LOCATION '/tmp/FactSales'"

Move data into Hive Tables

- From Command line

    beeline -u jdbc:hive2://sparksecure01-195-3-2:10000 -n hive -e "CREATE TABLE factsales (
      SalesKey int, DateKey timestamp, channelKey int, StoreKey int, ProductKey int,
      PromotionKey int, CurrencyKey int, UnitCost float, UnitPrice float,
      SalesQuantity int, ReturnQuantity int, ReturnAmount float,
      DiscountQuantity int, DiscountAmount float, TotalCost float, SalesAmount float,
      ETLLoadID int, LoadDate timestamp, UpdateDate timestamp)
      clustered by (saleskey) into 7 buckets stored as ORC"
    beeline -u jdbc:hive2://sparksecure01-195-3-2:10000 -n hive -e "INSERT INTO factsales SELECT * FROM factsales_tmp"

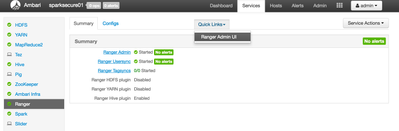

Configure Ranger Security Policies

- Navigate to the Ranger Service

- Click on Quicklinks --> Ranger Admin UI

- Log in with user: admin, password: admin

- Click on {clustername}_hive service link, under the Hive section

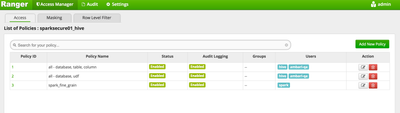

- Several Hive security policies should be visible

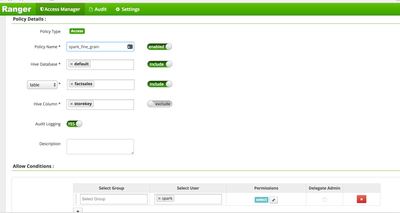

- Add a new column-level policy for User spark as shown in the screenshot below. Make sure that the storekey column is excluded from access.

- User hive is allowed access to all tables and all columns; User spark is restricted from accessing the storekey column in the factsales table.

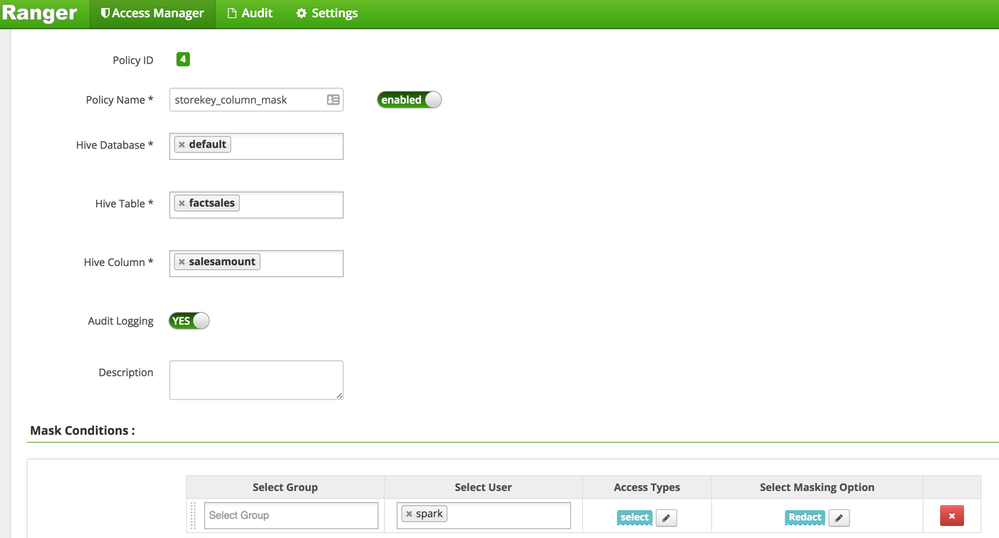

- Click on the Masking Tab

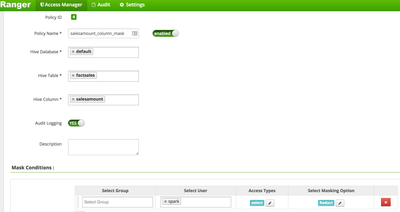

- Add a new Masking policy for the User spark to redact the salesamount column

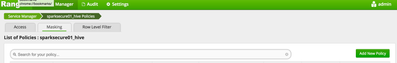

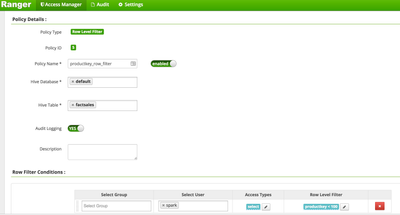

- Click on the Row Level Filter tab and add a new Row Level Filter policy for User spark to only show productkey < 100
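To make the combined effect of the three policies concrete, the sketch below emulates them on a few made-up CSV rows. It does not talk to Ranger or LLAP. The four-column input layout is a simplified, hypothetical subset of factsales, and the literal string REDACTED is only a stand-in, since the value Ranger actually returns depends on the mask type and the column type.

```shell
#!/bin/sh
# Sketch: emulate, on local CSV text, what User spark should see.
# Assumed input columns (simplified subset of factsales):
#   saleskey,storekey,productkey,salesamount

apply_spark_policies() {
  awk -F, 'BEGIN { OFS = "," }
    $3 < 100 {                    # row-level filter: productkey < 100
      # storekey is not selectable, so it is omitted;
      # salesamount is replaced by a placeholder for the masked value
      print $1, $3, "REDACTED"
    }'
}

# Example with made-up rows:
# printf '1,10,42,19.99\n2,11,250,5.00\n' | apply_spark_policies
```

With the two example rows, only the first survives the row filter, and it is printed without storekey and with the placeholder in place of salesamount.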

Test Fine Grain Security with Spark

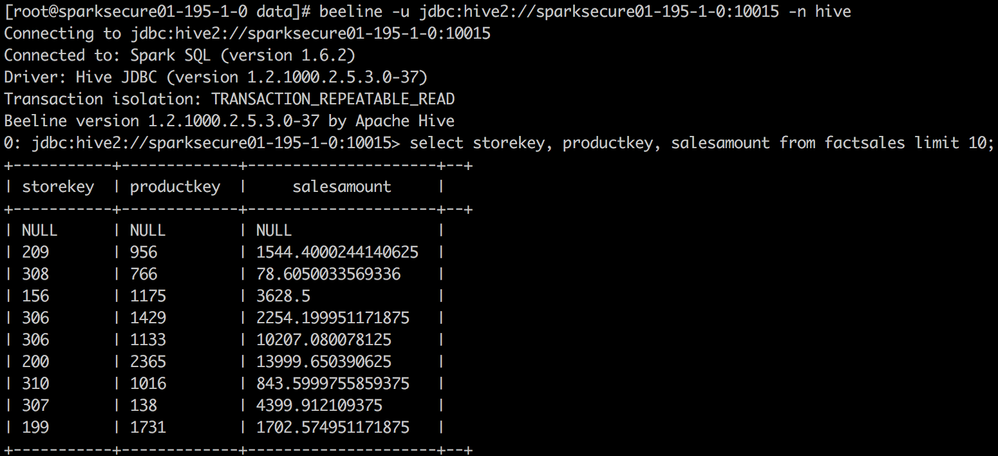

- Connect to Spark-Thrift server using beeline as hive User and verify sample tables are visible

    beeline -u jdbc:hive2://sparksecure01-195-1-0:10015 -n hive
    Connecting to jdbc:hive2://sparksecure01-195-1-0:10015
    Connected to: Spark SQL (version 1.6.2)
    Driver: Hive JDBC (version 1.2.1000.2.5.3.0-37)
    Transaction isolation: TRANSACTION_REPEATABLE_READ
    Beeline version 1.2.1000.2.5.3.0-37 by Apache Hive
    0: jdbc:hive2://sparksecure01-195-1-0:10015> show tables;
    +----------------+--------------+--+
    |   tableName    | isTemporary  |
    +----------------+--------------+--+
    | factsales      | false        |
    | factsales_tmp  | false        |
    +----------------+--------------+--+
    2 rows selected (0.793 seconds)

- Get the Explain Plan for a simple query

    0: jdbc:hive2://sparksecure01-195-1-0:10015> explain select storekey from factsales;
    | == Physical Plan == |
    | Scan LlapRelation(org.apache.spark.sql.hive.llap.LlapContext@44bfb65b,Map(table -> default.factsales, url -> jdbc:hive2://sparksecure01-195-1-0.field.hortonworks.com:10500))[storekey#66] |
    +---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+--+
    2 rows selected (1.744 seconds)

- The explain plan should show that the table will be scanned using the LlapRelation class. This confirms that Spark is using LLAP to read from HDFS.

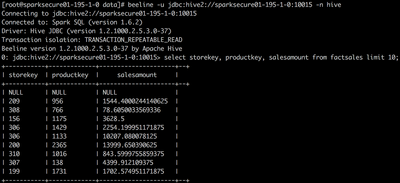
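The explain-plan check can also be done without reading the plan by eye. The following is a minimal sketch with a hypothetical helper name; it only looks for the LlapRelation class name in whatever text it receives on standard input.

```shell
#!/bin/sh
# Sketch: read an explain plan on stdin and report whether it scans via LLAP.
uses_llap() {
  if grep -q 'LlapRelation'; then
    echo "LLAP scan found"
  else
    echo "no LLAP scan in plan"
    return 1
  fi
}

# Example, reusing the Spark Thrift server host and port from this tutorial:
# beeline -u jdbc:hive2://sparksecure01-195-1-0:10015 -n hive \
#   -e "explain select storekey from factsales" | uses_llap
```

The non-zero return status on a miss makes the helper usable in a larger smoke-test script.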

- Verify that User hive is able to see the storekey column, unredacted salesamount values, and unfiltered productkey values in the factsales table, as specified by the policy.

Hit Ctrl-C to exit beeline.

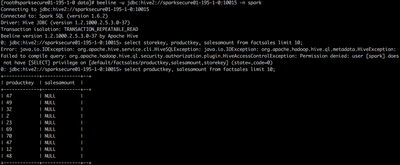

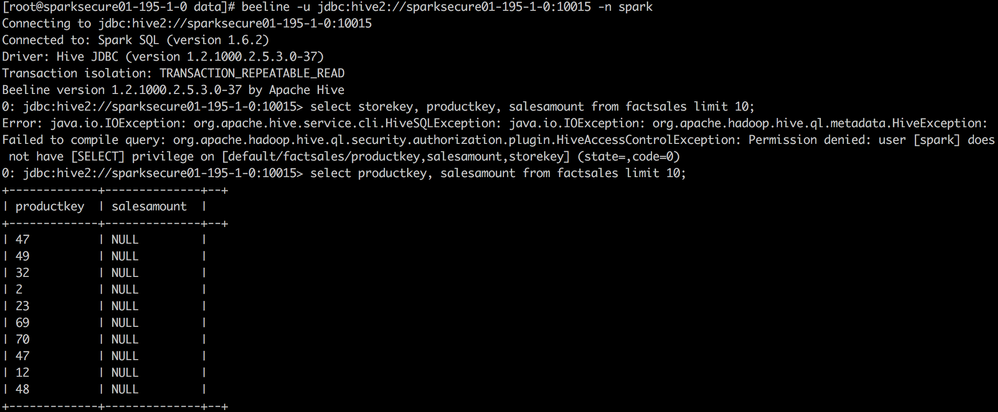

- Connect to Spark-Thrift server using beeline as User spark and run the exact same query as the User hive just ran. An exception will be thrown by the authorization plugin because User spark is not allowed to see results of any query that includes the storekey column.

- Try the same query but omit storekey column from the request. The response will show a filtered productkey column and a redacted salesamount column.

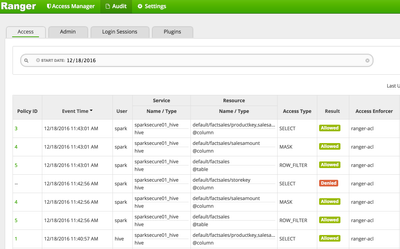
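The two checks as User spark can be compared side by side with a small helper. This is a sketch under two assumptions: that a rejected query appears in the beeline output as a line starting with "Error:", and that the two example queries (which are illustrative, not taken from the screenshots) are representative. The helper name is hypothetical.

```shell
#!/bin/sh
# Sketch: classify beeline output read from stdin.
# Assumption: a rejected query shows up as a line starting with "Error:".
access_result() {
  if grep -q '^Error:'; then
    echo "denied"
  else
    echo "allowed"
  fi
}

# Examples (2>&1 is added in case beeline writes errors to stderr):
# beeline -u jdbc:hive2://sparksecure01-195-1-0:10015 -n spark \
#   -e "select storekey from factsales limit 5" 2>&1 | access_result
# beeline -u jdbc:hive2://sparksecure01-195-1-0:10015 -n spark \
#   -e "select productkey, salesamount from factsales limit 5" 2>&1 | access_result
```

If the policies are in effect, the first command should be classified as denied and the second as allowed.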

View Audit Trail

- Navigate back to the Ranger Admin UI

- Navigate to Audit (Link at the top of the screen)

Ranger Audit registers both Allowed and Denied access events.

Access to data through the Spark Thrift server is now secured by the same granular security policies as Hive. Ranger provides the centralized policies, and LLAP ensures they are enforced. BI tools can now be pointed at Spark or Hive interchangeably.

Created on 09-02-2017 03:27 AM

I just tried this, and it also works on HDP-2.6.0 and, I believe, other 2.6.x releases. Instead of the jar in the article, I used the latest version at http://repo.hortonworks.com/content/repositories/releases/com/hortonworks/spark-llap/1.0.0.2.5.5.5-2.... Regarding the copy targets, it is enough to copy the assembly jar to /usr/hdp/current/spark-client/lib only on nodes where this directory already exists. I guess it can also be placed on HDFS, under /hdp, but I haven't tried.

Created on 12-21-2017 08:53 AM

I also tried spark-llap on HDP-2.6.2.0 with Spark 1.6.3 and http://repo.hortonworks.com/content/repositories/releases/com/hortonworks/spark-llap/1.0.0.2.5.5.5-2..., but unfortunately, when I executed a simple "select count" query in beeline, I got the following error messages:

    0: jdbc:hive2://node-05:10015/default> select count(*) from ods_order.cc_customer;
    Error: org.apache.spark.sql.catalyst.errors.package$TreeNodeException: execute, tree:
    TungstenAggregate(key=[], functions=[(count(1),mode=Final,isDistinct=false)], output=[_c0#56L])
    +- TungstenExchange SinglePartition, None
       +- TungstenAggregate(key=[], functions=[(count(1),mode=Partial,isDistinct=false)], output=[count#59L])
          +- Scan LlapRelation(org.apache.spark.sql.hive.llap.LlapContext@690c5838,Map(table -> ods_order.cc_customer, url -> jdbc:hive2://node-01.hdp.wiseda.com.cn:10500))[] (state=,code=0)

The corresponding Thrift server log messages are in the attached "thriftserver-err-msg.txt".