Created on 12-13-2018 07:39 PM - edited 08-17-2019 05:20 AM

This article demonstrates how to install Anaconda on an HDP 3.0.1 instance and how to configure Zeppelin to use the Anaconda Python libraries with Apache Spark.

Pre-requisites:

- The bzip2 library must be installed before installing Anaconda
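A quick way to confirm the prerequisite is to check whether a `bzip2` binary is on the `PATH`. The snippet below is a minimal sketch, assuming Python 3.3 or later is available on the node (for `shutil.which`); the helper name `bzip2_path` is made up for illustration.

```python
import shutil


def bzip2_path():
    """Return the full path of the bzip2 binary, or None if it is not on PATH."""
    return shutil.which("bzip2")


path = bzip2_path()
if path is None:
    # The Anaconda installer is expected to need bzip2, so install it first.
    print("bzip2 not found on PATH; install it before running the Anaconda installer")
else:
    print("bzip2 found at %s" % path)
```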

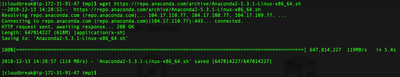

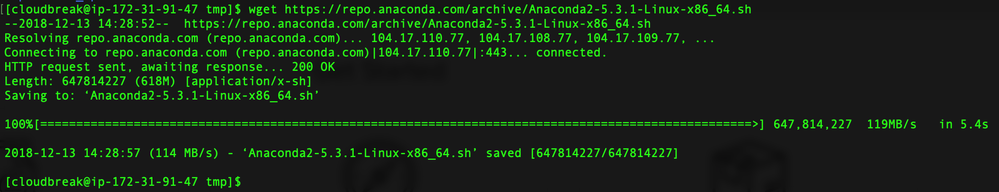

Step 1. Download and Install Anaconda

https://www.anaconda.com/download/#linux

Download the Anaconda installer from the link above. It is a large bash script, around 600 MB in size. Place the file in an appropriate directory such as /tmp.

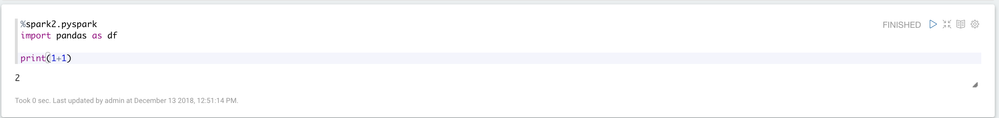

Once downloaded, provide the installer with executable permissions.
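Granting execute permission is usually done with `chmod` on the command line; the sketch below shows the same idea in Python. The helper name `make_executable` and the installer file name in the comment are hypothetical, since the actual file name depends on the version you downloaded.

```python
import os
import stat


def make_executable(path):
    """Add the owner execute bit to `path`, similar to `chmod u+x`."""
    mode = os.stat(path).st_mode
    os.chmod(path, mode | stat.S_IXUSR)
    # Report whether the current user can now execute the file.
    return os.access(path, os.X_OK)


# Example call (hypothetical file name, adjust to the downloaded installer):
# make_executable("/tmp/anaconda-installer.sh")
```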

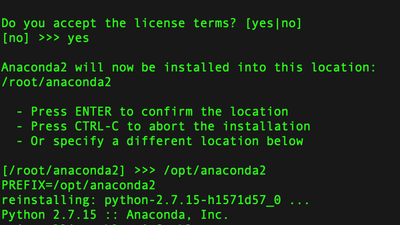

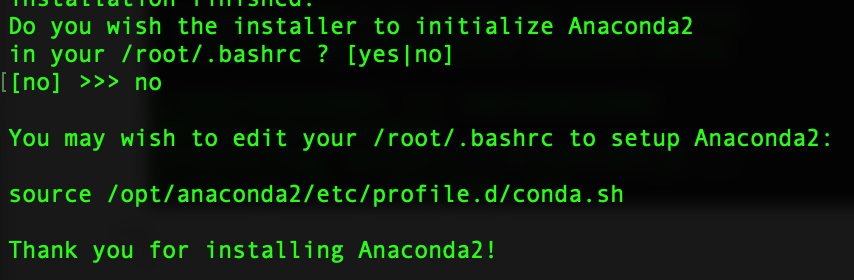

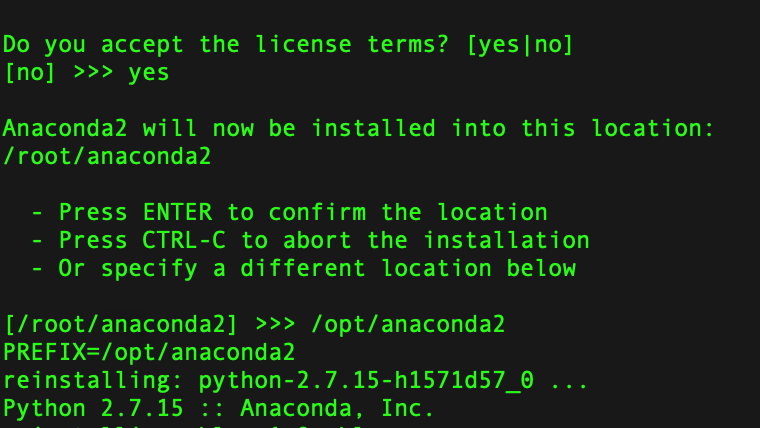

Once that is done, the installer can be executed. Run it as a user with sudo privileges. Follow the prompts and accept the license agreement. As a best practice, install Anaconda in a directory other than the default; I use /opt for my installations.

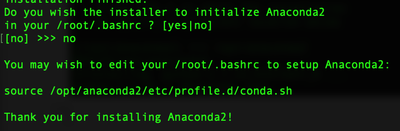

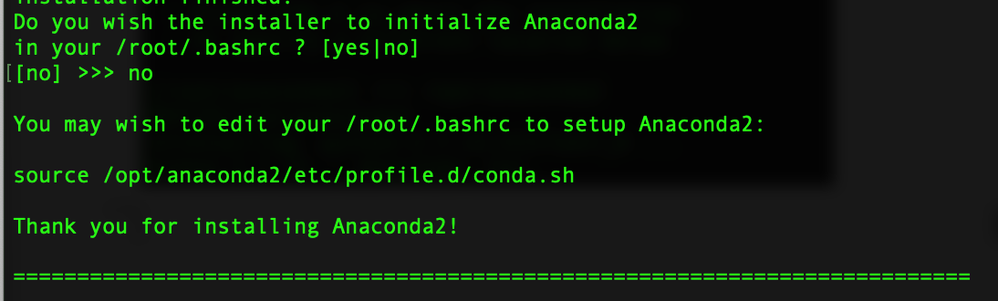

At the very end of the installation, the installer prompts you to initialize Anaconda in the installing user's .bashrc file.

If you have a multi-node environment, repeat this process on the remaining nodes.
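When repeating the install on several nodes, answering the prompts by hand gets tedious. As an alternative to the interactive run described in this article, the Anaconda installer accepts `-b` (batch mode, which treats the license as accepted) and `-p` (install prefix). The sketch below only builds and prints such a command; the installer path and the prefix are placeholders, not values taken from the article's screenshots.

```python
def installer_command(installer_path, prefix):
    """Build a non-interactive Anaconda install command as an argument list."""
    # -b: batch mode, no prompts (implies agreement to the license terms)
    # -p: directory to install into
    return ["bash", installer_path, "-b", "-p", prefix]


# Placeholder values; substitute the real installer file and target directory.
cmd = installer_command("/tmp/anaconda-installer.sh", "/opt/anaconda2")
print(" ".join(cmd))
```

The list can be passed to `subprocess.check_call` on each node, or copied into whatever remote-execution tool you already use.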

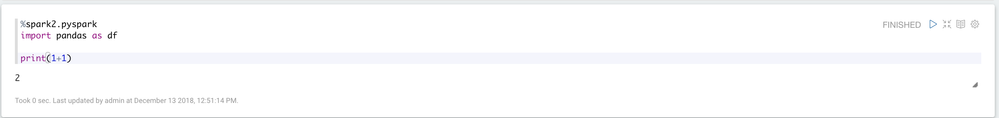

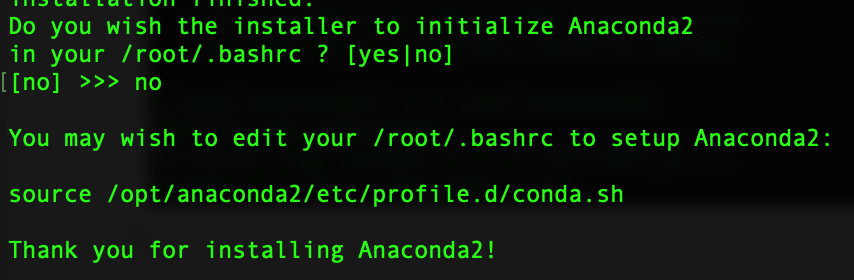

Step 2. Configure Zeppelin

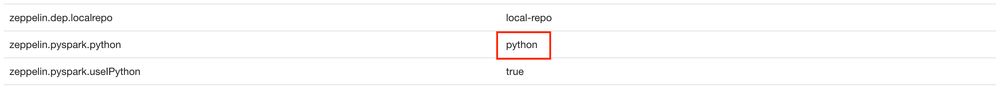

Log into Zeppelin and open the Interpreters page. Scroll down to the spark interpreter and look for the zeppelin.pyspark.python property. This property points to the Python binary used when executing Python applications. By default it is set to the Python binary bundled with the OS, so it needs to be updated to the Anaconda Python binary.

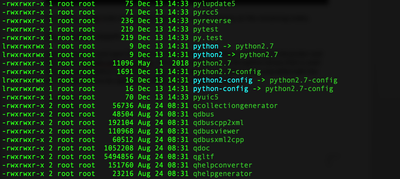

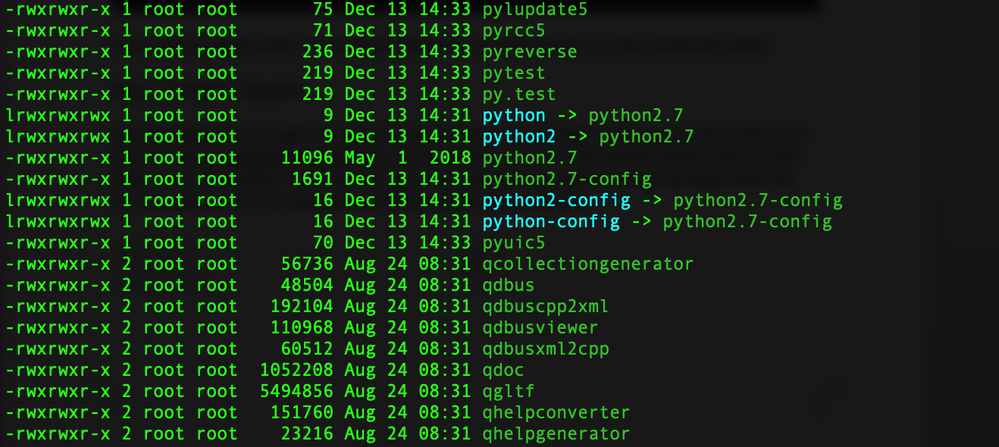

Next, navigate to the location where you installed Anaconda. In this example the location is /opt/anaconda2/bin.

Set zeppelin.pyspark.python to the full path of the python binary in the /opt/anaconda2/bin directory.
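Before saving the setting, it can help to confirm that the path really points at an executable file. This is a minimal sketch: the helper `is_usable_python` is made up for illustration, and `/opt/anaconda2/bin/python` is an assumed full path based on the example directory above.

```python
import os


def is_usable_python(path):
    """Return True if `path` is an existing file the current user can execute."""
    return os.path.isfile(path) and os.access(path, os.X_OK)


# Assumed path, derived from the example install location in this article.
candidate = "/opt/anaconda2/bin/python"
print("%s usable: %s" % (candidate, is_usable_python(candidate)))
```

Note that `os.access` checks permissions for the user running the script, so the result is most meaningful when run as the account Zeppelin runs under.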

Make the changes and save the settings for the interpreter. Finally, restart the interpreter and you should be good to go!

If you run into any issues, make sure that the Zeppelin user has the correct file permissions to run the new Python binary and its libraries.
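One way to confirm that the change took effect is to print the interpreter path from a notebook paragraph after the restart. The code below is a small sketch written to run on both Python 2 and Python 3; in Zeppelin the paragraph would typically start with the `%pyspark` directive, which is left out here so the snippet stays plain Python. The helper name `interpreter_info` is hypothetical.

```python
import sys


def interpreter_info():
    """Return the path and version string of the Python running this code."""
    version = "%d.%d.%d" % tuple(sys.version_info[:3])
    return sys.executable, version


path, version = interpreter_info()
print("python binary: %s" % path)
print("python version: %s" % version)
```

If the printed path still points at the OS Python rather than the Anaconda directory, the interpreter setting was probably not saved or the interpreter was not restarted.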

Created on 05-08-2019 04:34 AM


@jtaras, thank you for this wonderful article!

I have a follow-up question (just curious): do Spark workers use the same custom Python path as the Zeppelin driver?

If yes, how do they know it? (the Zeppelin UI setting seems to apply to the Zeppelin driver application only and its SparkContext)

If no, why is there no version conflict between the driver's python and the workers' python? (I tested with the default of 2.X and the custom Anaconda python of 3.7)

NOTE: I had to additionally set the PYSPARK_PYTHON environment variable in Spark > Config to the same path in Ambari, in order to be able to use all the Python libraries in the scripts submitted via "spark-submit", but this does not affect the Zeppelin functionality in any way.