Connecting to Azure Data Lake from a NiFi dataflow

Created on 12-15-2016 09:42 PM - edited 08-17-2019 07:21 AM

Hortonworks DataFlow (HDF) 2.1 was recently released and includes a new feature that can be used to connect to an Azure Data Lake Store (ADLS). This is a fantastic use case for HDF as the data movement engine supporting a connected data plane architecture spanning on-premises and cloud deployments.

This how-to assumes that you have created an Azure Data Lake Store account and that you have remote access to an HDInsight (HDI) head node in order to retrieve some dependent JARs.

We will make use of the new Additional Classpath Resources feature for the GetHDFS and PutHDFS processors in NiFi 1.1, included within HDF 2.1. The following additional dependencies are required for ADLS connectivity:

- adls2-oauth2-token-provider-1.0.jar

- azure-data-lake-store-sdk-2.0.4-SNAPSHOT.jar

- hadoop-azure-datalake-2.0.0-SNAPSHOT.jar

- jackson-core-2.2.3.jar

- okhttp-2.4.0.jar

- okio-1.4.0.jar

The first three Azure-specific JARs can be found in /usr/lib/hdinsight-datalake/ on the HDI head node. The Jackson JAR can be found in /usr/hdp/current/hadoop-client/lib, and the last two (okhttp and okio) can be found in /usr/hdp/current/hadoop-hdfs-client/lib.

Once you've gathered these JARs, copy them to every NiFi node and place them in a newly created directory, /usr/lib/hdinsight-datalake.
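As a quick sanity check on each NiFi node, something like the following sketch could confirm that all six JARs landed in the directory. The function name `missing_jars` is hypothetical, and the file names are the ones listed in this article; other HDI releases may ship different versions, so adjust the list accordingly.

```python
from pathlib import Path

# JAR names exactly as listed in this article (versions may differ on your HDI release).
REQUIRED_JARS = [
    "adls2-oauth2-token-provider-1.0.jar",
    "azure-data-lake-store-sdk-2.0.4-SNAPSHOT.jar",
    "hadoop-azure-datalake-2.0.0-SNAPSHOT.jar",
    "jackson-core-2.2.3.jar",
    "okhttp-2.4.0.jar",
    "okio-1.4.0.jar",
]


def missing_jars(directory, required=REQUIRED_JARS):
    """Return the required JAR names that are not present in `directory`."""
    present = {p.name for p in Path(directory).glob("*.jar")}
    return [name for name in required if name not in present]


if __name__ == "__main__":
    missing = missing_jars("/usr/lib/hdinsight-datalake")
    print("all JARs present" if not missing else f"missing: {missing}")
```

Running this on every node before configuring the processor helps catch a partially copied directory early.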

To authenticate to ADLS, we'll use OAuth2. This requires the Tenant ID associated with your Azure account. The simplest way to obtain it is via the Azure CLI, using the azure account show command.

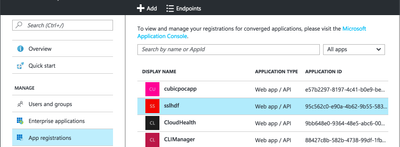

You will also need to create an Azure AD service principal and an associated key. Navigate to Azure AD > App Registrations > Add.

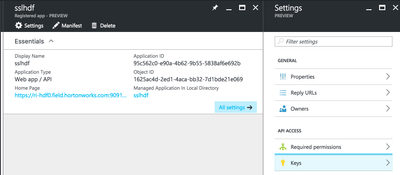

Take note of the Application ID (also known as the Client ID), then generate a key via the Keys blade. Note that the Client Secret value is hidden after you leave this blade, so copy it and store it securely.

The service principal associated with this application needs service-level authorization to access the Azure Data Lake Store instance that this how-to assumes as a prerequisite. This can be granted via the IAM blade for your ADLS instance. Note that you will not see the Add button in the top toolbar unless you have administrative access to your Azure subscription.

In addition, the service principal needs appropriate directory-level authorizations for the ADLS directories it should read from or write to. These can be assigned via Data Explorer > Access within your ADLS instance.

At this point, you should have your Tenant ID, Client ID, and Client Secret available, and we can now configure core-site.xml to access Azure Data Lake via the PutHDFS processor.
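Before touching NiFi, it can help to confirm that the three values actually yield a token. The sketch below builds an OAuth2 client-credentials request against the same token endpoint used later as the refresh URL. The function names are hypothetical, and the `resource` value `https://datalake.azure.net/` is an assumption that should be checked against the Azure documentation for your account.

```python
import json
import urllib.parse
import urllib.request

# Assumption: resource URI identifying Azure Data Lake Store to Azure AD.
ADLS_RESOURCE = "https://datalake.azure.net/"


def build_token_request(tenant_id, client_id, client_secret):
    """Build an OAuth2 client-credentials POST for the Azure AD token endpoint."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/token"
    body = urllib.parse.urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "resource": ADLS_RESOURCE,
    }).encode("ascii")
    return urllib.request.Request(url, data=body, method="POST")


def fetch_access_token(tenant_id, client_id, client_secret):
    """Send the request; urllib raises HTTPError if the credentials are rejected."""
    request = build_token_request(tenant_id, client_id, client_secret)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)["access_token"]
```

If `fetch_access_token` raises an HTTP error here, the same credentials are unlikely to work from PutHDFS, so it is worth fixing them first.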

The important core-site values are as follows (note the variables prefixed with the '$' sigil below, including part of the refresh URL path):

<property>
  <name>dfs.adls.oauth2.access.token.provider.type</name>
  <value>ClientCredential</value>
</property>

<property>
  <name>dfs.adls.oauth2.refresh.url</name>
  <value>https://login.microsoftonline.com/$YOUR_TENANT_ID/oauth2/token</value>
</property>

<property>
  <name>dfs.adls.oauth2.client.id</name>
  <value>$YOUR_CLIENT_ID</value>
</property>

<property>
  <name>dfs.adls.oauth2.credential</name>
  <value>$YOUR_CLIENT_SECRET</value>
</property>

<property>
  <name>fs.AbstractFileSystem.adl.impl</name>
  <value>org.apache.hadoop.fs.adl.Adl</value>
</property>

<property>
  <name>fs.adl.impl</name>
  <value>org.apache.hadoop.fs.adl.AdlFileSystem</value>
</property>
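If you prefer to generate the file rather than edit it by hand, a minimal sketch along these lines fills in the three variables. The function name `build_core_site` is hypothetical, and the `<configuration>` root element is the usual Hadoop wrapper for such files rather than something shown in the snippet above.

```python
import xml.etree.ElementTree as ET


def build_core_site(tenant_id, client_id, client_secret):
    """Return core-site.xml text containing the six ADLS properties from this article."""
    properties = {
        "dfs.adls.oauth2.access.token.provider.type": "ClientCredential",
        "dfs.adls.oauth2.refresh.url":
            f"https://login.microsoftonline.com/{tenant_id}/oauth2/token",
        "dfs.adls.oauth2.client.id": client_id,
        "dfs.adls.oauth2.credential": client_secret,
        "fs.AbstractFileSystem.adl.impl": "org.apache.hadoop.fs.adl.Adl",
        "fs.adl.impl": "org.apache.hadoop.fs.adl.AdlFileSystem",
    }
    # Assumption: properties are wrapped in the standard <configuration> root.
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Placeholder values only; substitute your own before use.
    print(build_core_site("YOUR_TENANT_ID", "YOUR_CLIENT_ID", "YOUR_CLIENT_SECRET"))
```

Because the output contains the Client Secret in clear text, restrict the file's permissions on every NiFi node.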

We're now ready to configure the PutHDFS processor in NiFi.

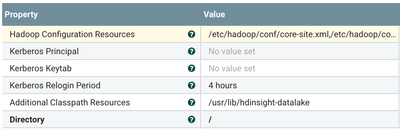

For Hadoop configuration resources, point to your modified core-site.xml including the properties above and an hdfs-site.xml (no ADLS-specific changes are required).
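Since a missing or half-edited core-site.xml is an easy mistake to make, a small check such as the following sketch could be run against the file on each node before starting the processor. The function name `check_core_site` and the wording of the reported problems are hypothetical; the property names come from the list above.

```python
import xml.etree.ElementTree as ET

# Property names taken from the core-site values in this article.
REQUIRED_PROPERTIES = [
    "dfs.adls.oauth2.access.token.provider.type",
    "dfs.adls.oauth2.refresh.url",
    "dfs.adls.oauth2.client.id",
    "dfs.adls.oauth2.credential",
    "fs.AbstractFileSystem.adl.impl",
    "fs.adl.impl",
]


def check_core_site(xml_text):
    """Return a list of problems: missing properties or unfilled '$' placeholders."""
    root = ET.fromstring(xml_text)
    values = {
        prop.findtext("name"): (prop.findtext("value") or "")
        for prop in root.iter("property")
    }
    problems = []
    for name in REQUIRED_PROPERTIES:
        if name not in values:
            problems.append(f"missing property: {name}")
        elif "$" in values[name]:
            problems.append(f"unfilled placeholder in: {name}")
    return problems
```

An empty list means all six properties are present and none still contains a `$` variable; it does not prove that the credentials themselves are valid.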

Additional Classpath Resources should point to the /usr/lib/hdinsight-datalake directory to which we copied the dependencies on all NiFi nodes.

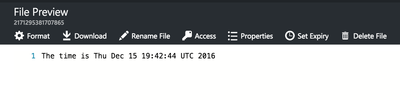

The input to this PutHDFS processor can be any FlowFile. It may be simplest to use the GenerateFlowFile processor to create the input with some Custom Text such as:

The time is ${now()}

When you run the data flow, you should see the FlowFiles appear in the ADLS directory specified in the processor, which you can verify using the Data Explorer in the Azure Portal, or via some other means.
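One such other means is the WebHDFS-compatible REST interface that ADLS exposes. The sketch below lists a directory with a bearer token obtained for the service principal. The function names, the account name, and the directory are hypothetical, and the URL layout is an assumption based on the ADLS REST documentation, so verify it for your account.

```python
import json
import urllib.parse
import urllib.request


def build_liststatus_request(account, directory, access_token):
    """Build a LISTSTATUS request for a directory in the given ADLS account."""
    path = urllib.parse.quote(directory.strip("/"))
    # Assumption: ADLS serves WebHDFS-style operations under /webhdfs/v1/.
    url = f"https://{account}.azuredatalakestore.net/webhdfs/v1/{path}?op=LISTSTATUS"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {access_token}"})


def list_file_names(account, directory, access_token):
    """Return the names of the entries found in the directory."""
    request = build_liststatus_request(account, directory, access_token)
    with urllib.request.urlopen(request, timeout=30) as response:
        statuses = json.load(response)["FileStatuses"]["FileStatus"]
    return [status["pathSuffix"] for status in statuses]
```

Calling `list_file_names` with the directory configured in PutHDFS should return one entry per FlowFile written.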

Created on 05-15-2017 12:28 PM

Hi,

Thanks for publishing the article. I'm very new to NiFi, but I managed to get PutHDFS writing to ADL using HDF 2.1. Is there a similar way to get CreateHDFSFolder to work in the same manner? From the docs, I can see that the Additional Classpath Resources property is not available for CreateHDFSFolder. Any recommendations?

Kind Regards,

Geouffrey

Created on 10-12-2017 11:48 AM

Hi slachterman, thanks for the post.

I get the error below when running the PutHDFS processor. I didn't find the SNAPSHOT JARs in HDInsight. Which HDI version did you use? Can you help me fix this error?

Caused by: java.lang.NoSuchMethodError: org.apache.hadoop.security.ProviderUtils.excludeIncompatibleCredentialProviders(Lorg/apache/hadoop/conf/Configuration;Ljava/lang/Class;)Lorg/apache/hadoop/conf/Configuration;

Thanks

Vinay

Created on 10-12-2017 06:27 PM

This approach is supported by HDI 3.5. It appears HDI 3.6 relies on Hadoop 2.8 code. I can look into an approach for HDI 3.6, but NiFi bundles Hadoop 2.7.3.

Created on 11-10-2017 09:46 AM

Hi,

I've used this article to try to get NiFi 1.3.0 working with Azure Data Lake, but no luck so far. I just get the "Wrong FS expecting file:///" error message.

Has anyone tested this on plain NiFi? Does it only work on HDF?

Thank you very much

Juan

Created on 11-10-2017 06:29 PM

This should work in NiFi 1.3 as well; there is nothing specific to HDF. That error sounds like you haven't provided core-site.xml as one of the Hadoop Configuration Resources, as discussed in the article.

Created on 11-14-2017 04:03 PM

Hi slachterman,

Can you please confirm whether this scenario works when NiFi is local to an HDI cluster that is configured for Data Lake? More details on the architecture follow. I tried the steps below and it didn't work.

1) We have HDP and NiFi installed on an Azure VM.

2) Using that NiFi instance, we are trying to connect to Azure HDInsight (another VM), which can talk to Data Lake.

3) All VMs are on the same Azure VNET and subscription.

4) When I followed the instructions and tested, files were written to the HDFS of the HDP cluster but not to the Data Lake of the HDI cluster.

Can you please let me know exactly how to write files to Azure Data Lake?

Thanks in advance.

Regards

Mahesh.

Created on 11-17-2017 03:35 PM

Hi @slachterman

To let you know, with my current HDP setup I am able to connect to Azure Data Lake using the hdfs command. However, it does not work from NiFi, where I am using the PutHDFS and GenerateFlowFile processors as you described. I get the error below. It looks like a permission issue, but I have granted full permissions to everyone on the ADLS account I am using. Can you please suggest how to resolve this issue?

Failed to write to HDFS due to org.apache.hadoop.security.AccessControlException Permission denied: user=root, access=WRITE, Inode="/hdp-lake":hdfs:hdfs:drwx-r-xr-x at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:319)

Thanks in advance for your help!

Created on 01-10-2018 01:04 PM

I found this to be a great way to get NiFi to talk to Azure Data Lake Store.

Created on 02-22-2018 02:51 PM

Hi All,

I am trying to ingest data from a NiFi 1.5 cluster (hosted on 3 VMs, not on Hadoop) into Azure Data Lake Store.

I followed this wonderful tutorial but I still have a problem.

I have these JAR files (not exactly the same versions as in this tutorial):

- adls2-oauth2-token-provider-1.0.jar

- azure-data-lake-store-sdk-2.1.4.jar

- hadoop-azure-datalake-2.7.3.2.6.2.25-1.jar

- jackson-core-2.2.3.jar

- okhttp-2.4.0.jar

- okio-1.4.0.jar

I do not have an hdfs-site.xml (because NiFi is not part of a Hadoop cluster), so I referenced my Azure Data Lake Store in core-site.xml like this:

<property> <name>fs.defaultFS</name> <value>adl://xxxx.azuredatalakestore.net</value> </property>

Am I right?

When I start PutHDFS, I get this error in the log file: java.lang.NoClassDefFoundError: Could not initialize class org.apache.hadoop.fs.adl.AdlFileSystem (with jar tf, I saw that this class is present in hadoop-azure-datalake-2.7.3.2.6.2.25-1.jar). In the Properties tab of PutHDFS, "Additional Classpath Resources" is set to /usr/lib/hdinsight-datalake (where I copied the JAR files).

What is wrong with this configuration?

Thanks,

Olivier.

Created on 03-29-2018 06:40 AM

Are there ways to solve this problem? Is it possible to compile the PutHDFS code against a newer version of the Hadoop code?