
Created on 07-05-2022 03:49 PM - edited 07-06-2022 07:23 AM

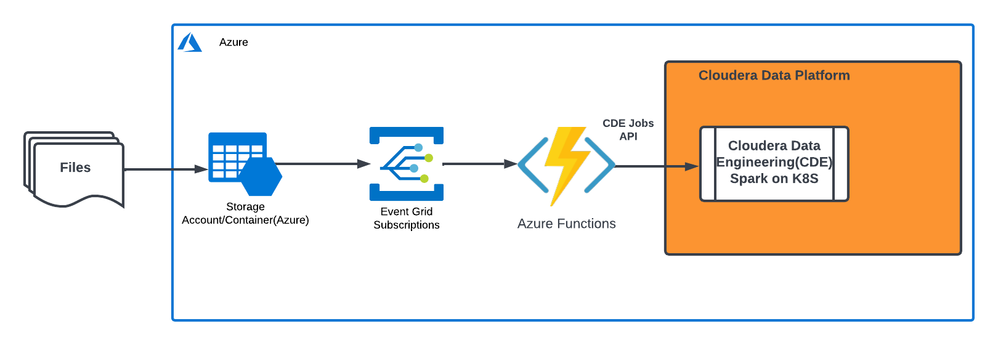

There are numerous ways to build event-driven architectures in the cloud with Cloudera Data Platform (CDP). Recently I worked on a use case that required Spark inference and transformations on streaming log data in an Azure cloud environment in near real time. The goal was to kick off Spark transformations as soon as files land in an Azure storage container.

The preferred way to run Spark transformations for data engineering use cases in CDP is Cloudera Data Engineering (CDE), which runs Spark on Kubernetes. CDE provides better isolation, job orchestration with the help of Airflow, Spark version independence, faster scaling, and efficient use of cloud-native resources. The best part is that it handles dependency management like a rockstar, which makes your life easy and simple.

CDE exposes a Jobs API for integration with your CI/CD platforms. There are multiple patterns for building streaming architectures with CDE, such as:

- Using Cloudera Data Flow (powered by Apache NiFi) to invoke CDE Spark/Airflow jobs through the Jobs API

- Using Azure cloud-native frameworks such as Azure Event Grid to trigger CDE jobs through Azure Functions

In this article we will discuss the latter option: using Azure Event Grid and Azure Functions to trigger CDE jobs. Azure Event Grid integrates natively with many other patterns, but for the sake of this article we will explore how Event Grid monitors an Azure storage container for incoming files and invokes a serverless Azure Python function, which in turn invokes a Cloudera Data Engineering (CDE) Airflow/PySpark job through the CDE Jobs API.

Prerequisites:

- Azure subscription

- Some knowledge of Azure Event Grid, such as configuring an Azure storage account with Event Grid subscriptions and Azure Functions

- Some knowledge of Azure Functions (preferably with an Azure Functions development environment such as Visual Studio Code already configured)

- An Azure storage account with a container to monitor for incoming files (preferably managed by CDP if you wish to read the files in the Spark job)

- A Cloudera Data Platform (CDP) environment running in Azure

- The Cloudera Data Engineering (CDE) service enabled and a running CDE virtual cluster

- Some knowledge of creating resources and jobs in CDE

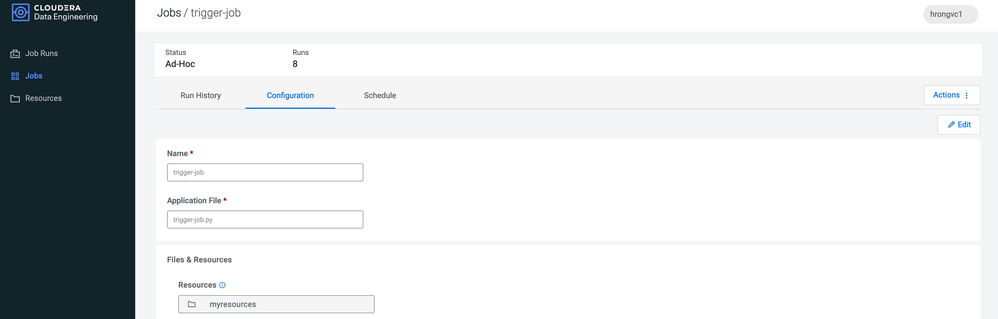

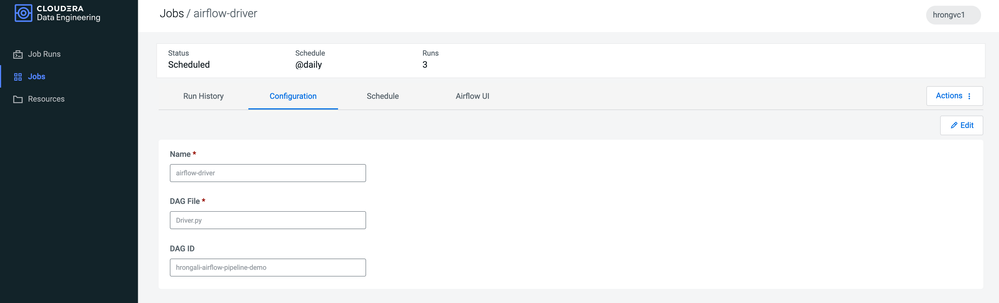

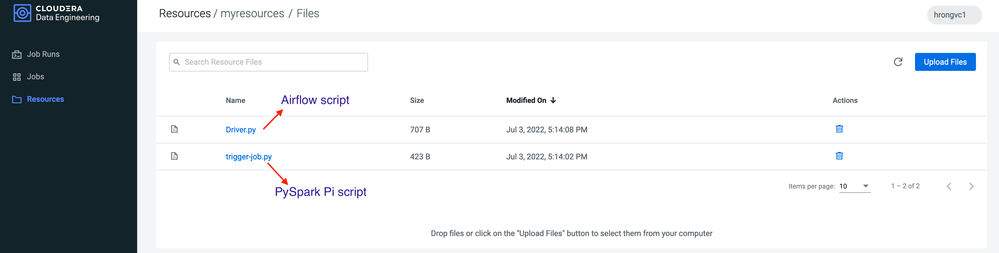

- Airflow and PySpark code uploaded to CDE resources, and the corresponding Airflow and Spark jobs pre-created in CDE

- An Azure Key Vault (to store the CDE user ID and password as secrets for authentication), created and granted the permissions needed to work with Azure Functions

Project Artifacts:

- Azure function code:

- Other project artifacts, including the dependencies (requirements.txt) needed by the Azure function:

- Airflow and Spark scripts uploaded into CDE, with the jobs pre-created. (I have chosen to run a simple Airflow job that triggers a simple Pi job as a PySpark job.)

Processing steps:

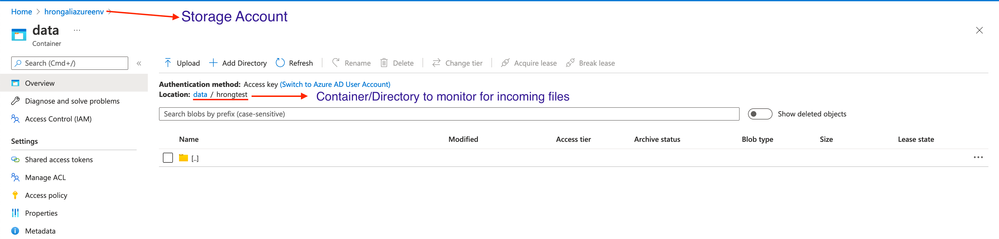

- Create an Azure storage container/directory

- Create CDE components - Resources, Jobs

- Collect CDE URLs required to trigger Spark/Airflow jobs

- Create Azure functions app and Azure python function

- Create Azure key vault and provide access to Azure python function

- Create Event Grid subscription on storage account and configure filter to the directory to be monitored

- Start running the event driven pipeline with Event Grid/Azure functions and CDE

Create an Azure storage container/directory

- Create an Azure Storage account and create a container/directory that we want to monitor for incoming files

Create CDE components - Resources, Jobs

Create Airflow and/or Spark jobs in CDE following these instructions

Collect CDE URLs required to trigger Spark/Airflow jobs

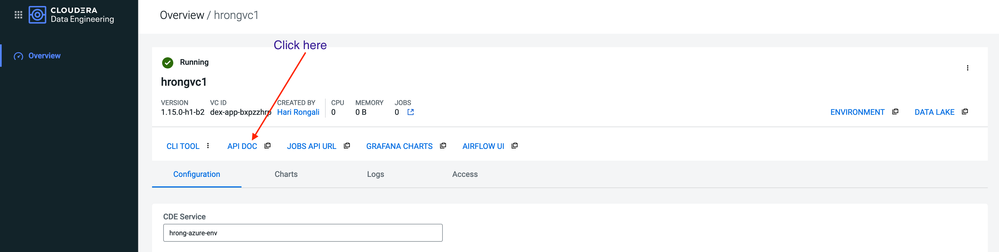

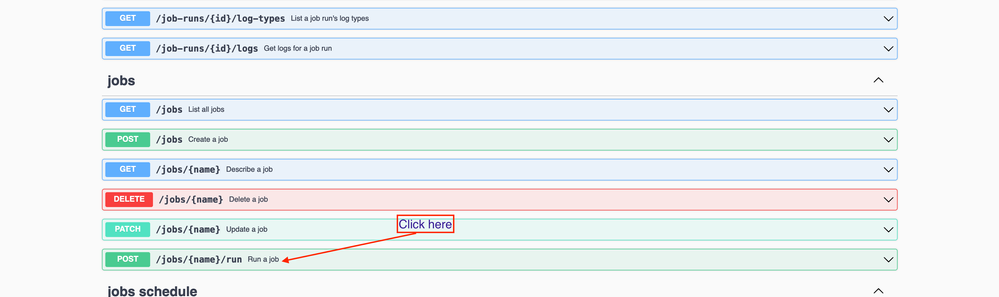

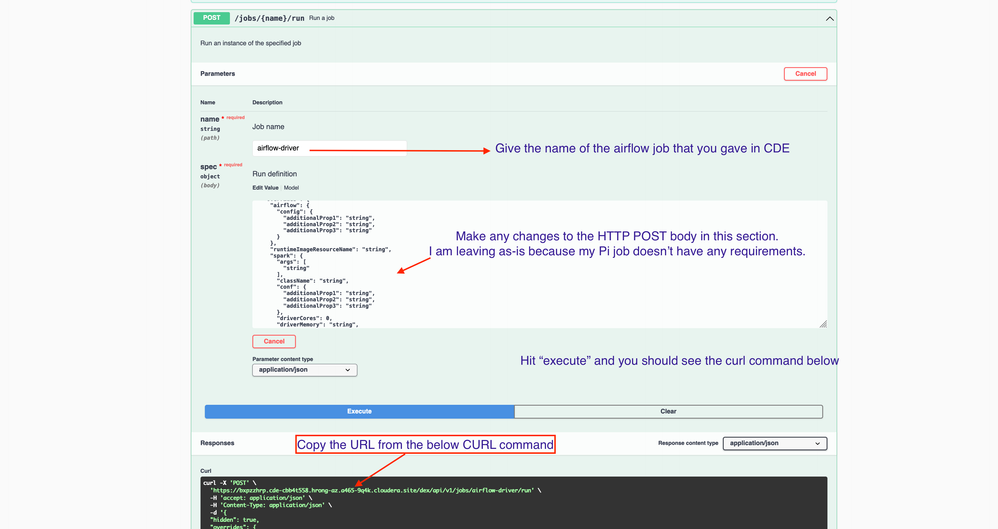

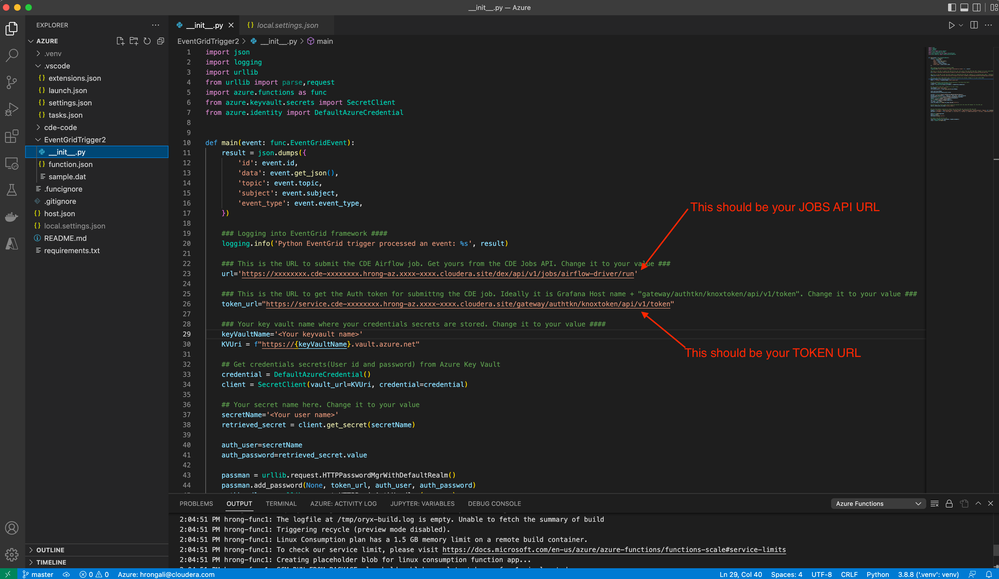

Gather the JOBS API URL for the airflow/spark job and token_url for retrieving the AUTH token

- JOBS API URL:

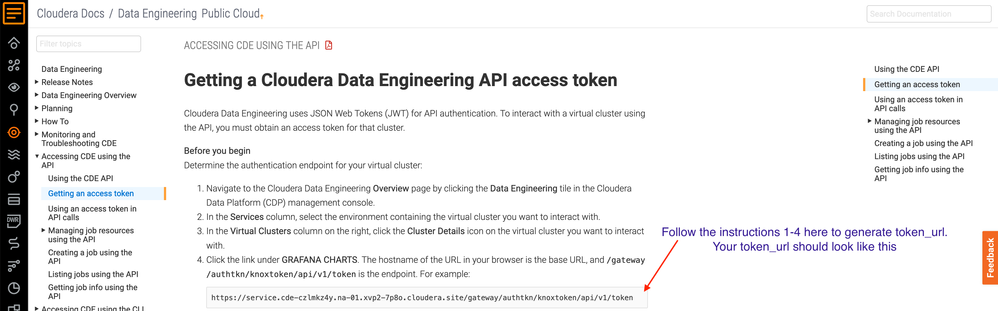

Get the TOKEN URL for extracting the AUTH token for authentication purposes
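As a rough illustration of how the two URLs gathered above are used together, here is a minimal sketch in Python using only the standard library. It is not the function code from this article. It assumes that the token endpoint accepts HTTP basic authentication and returns JSON containing an `access_token` field, and that a job run is started with a POST to `<JOBS API URL>/jobs/<job name>/run`. Verify both against the API documentation of your own virtual cluster. The function names are hypothetical.

```python
import base64
import json
import urllib.request
from urllib.parse import quote


def build_token_request(token_url: str, user: str, password: str) -> urllib.request.Request:
    """Build a GET request for the token endpoint using HTTP basic auth."""
    raw = f"{user}:{password}".encode("utf-8")
    basic = base64.b64encode(raw).decode("ascii")
    return urllib.request.Request(
        token_url,
        headers={"Authorization": f"Basic {basic}"},
        method="GET",
    )


def build_run_request(jobs_api_url: str, job_name: str, token: str) -> urllib.request.Request:
    """Build a POST request that asks CDE to start a run of an existing job."""
    # Assumption: runs are started at <jobs_api_url>/jobs/<job_name>/run.
    url = f"{jobs_api_url.rstrip('/')}/jobs/{quote(job_name, safe='')}/run"
    return urllib.request.Request(
        url,
        data=json.dumps({}).encode("utf-8"),  # empty JSON body: no overrides
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def trigger_job(token_url, jobs_api_url, job_name, user, password, timeout=30):
    """Fetch a token, then start the job. Performs two network calls."""
    token_req = build_token_request(token_url, user, password)
    with urllib.request.urlopen(token_req, timeout=timeout) as resp:
        # Assumption: the response is JSON with an "access_token" field.
        token = json.loads(resp.read())["access_token"]
    run_req = build_run_request(jobs_api_url, job_name, token)
    with urllib.request.urlopen(run_req, timeout=timeout) as resp:
        return json.loads(resp.read())
```

Splitting request construction from the network calls keeps the URL and header logic easy to check locally before the function is deployed.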

Create Azure functions App and Azure python function

1. Create an Azure function app with the Python flavor and create an Azure Python function:

   i. Follow the instructions here to create an Azure function app with the Python/Linux flavor.

   ii. Configure a local Azure Functions development environment, for example Visual Studio Code with the Azure plugins installed. Choose the "Azure Event Grid Trigger" template in Step #4 of the document instructions.

   iii. An example Azure Event Grid function can be found here. Adapt the function to your environment.

   iv. Your Python Azure function may also need Python dependencies, which you have probably installed in the virtual environment of your local development setup. Run

pip freeze > requirements.txt

from the root folder of your local project to collect all of your Python dependencies into a requirements.txt file.

2. Test the function locally (optional). Then deploy the function to the Azure function app that was created in step 1-i above. You can do this from Visual Studio Code, although you may first have to configure your Azure environment to work with Visual Studio Code.
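To make the shape of such a function more concrete, here is a small sketch of the decision logic only, kept separate from the Azure binding so that it can be tested locally without the Azure SDK. It is an illustration under assumptions, not the function linked above. In a deployed Event Grid function, the entry point would typically receive an `azure.functions.EventGridEvent` and pass `event.event_type`, `event.subject` and `event.get_json()` into a helper like this. The names `handle_event` and `trigger` are hypothetical.

```python
import logging
from typing import Any, Callable, Mapping

# Event type that Azure Storage emits when a blob is created.
BLOB_CREATED = "Microsoft.Storage.BlobCreated"


def handle_event(
    event_type: str,
    subject: str,
    data: Mapping[str, Any],
    trigger: Callable[[str], None],
) -> bool:
    """Call trigger(blob_url) for blob-created events.

    Returns True when the trigger was called, False when the event was skipped.
    """
    if event_type != BLOB_CREATED:
        logging.info("Skipping event type %s for %s", event_type, subject)
        return False
    blob_url = data.get("url", "")
    if not blob_url:
        logging.warning("Event for %s carries no blob URL", subject)
        return False
    logging.info("New blob %s, triggering job", blob_url)
    trigger(blob_url)
    return True
```

Passing the trigger in as a callable means the same logic can be exercised locally with a stub and wired to the real CDE call once deployed.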

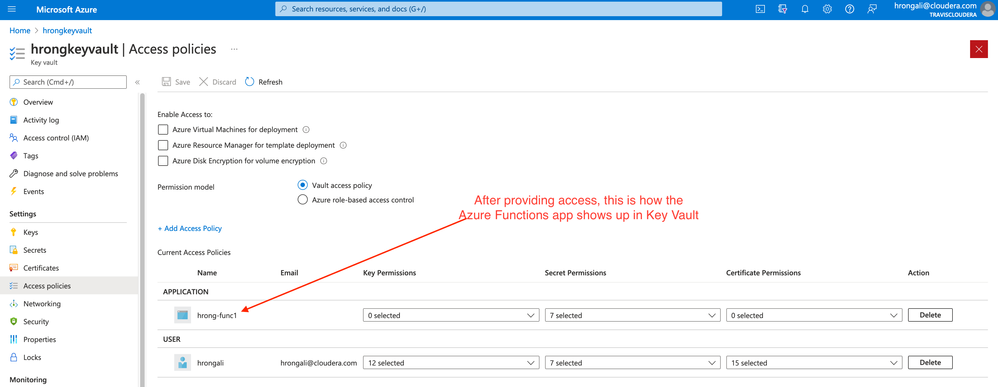

Create Azure key vault and provide access to Azure python function

- Cloudera Data Engineering uses JSON Web Tokens (JWT) for API authentication, and you need a user ID and password to obtain the token. Obtaining the token programmatically is covered in the Azure function code itself, but the user ID and password should be stored in a secure location within Azure, such as a secret in Azure Key Vault.

- Grant the Azure function access to the Azure Key Vault by adding an "Access Policy". I followed this blog to make this integration work.
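One way the function can consume the secrets, sketched here under assumptions: if the function app's application settings point at the Key Vault secrets (for example through Key Vault references), the values appear to the Python code as ordinary environment variables. The setting names `CDE_USER` and `CDE_PASSWORD` below are hypothetical, and this may differ from how the integration in the linked blog is wired.

```python
import os
from typing import Mapping, Tuple


def load_cde_credentials(env: Mapping[str, str] = os.environ) -> Tuple[str, str]:
    """Read the CDE user ID and password from application settings.

    Assumption: CDE_USER and CDE_PASSWORD are app settings backed by
    Key Vault secrets. Both names are placeholders.
    """
    required = ("CDE_USER", "CDE_PASSWORD")
    missing = [name for name in required if not env.get(name)]
    if missing:
        # Fail fast with the setting names only; never log secret values.
        raise RuntimeError(f"Missing application settings: {', '.join(missing)}")
    return env["CDE_USER"], env["CDE_PASSWORD"]
```

Failing fast on a missing setting makes a misconfigured access policy show up in the function logs right away instead of as an authentication error from CDE.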

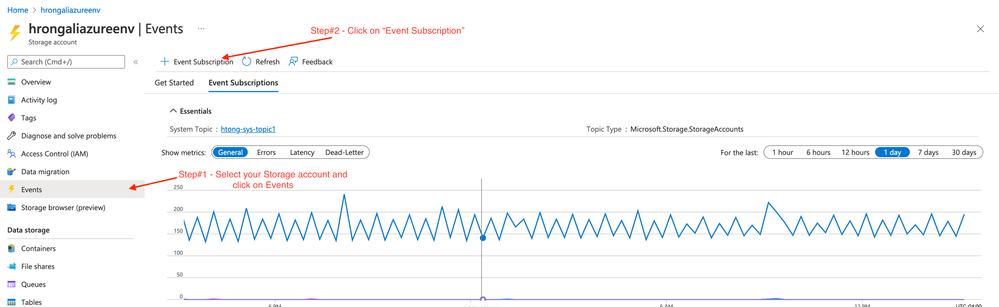

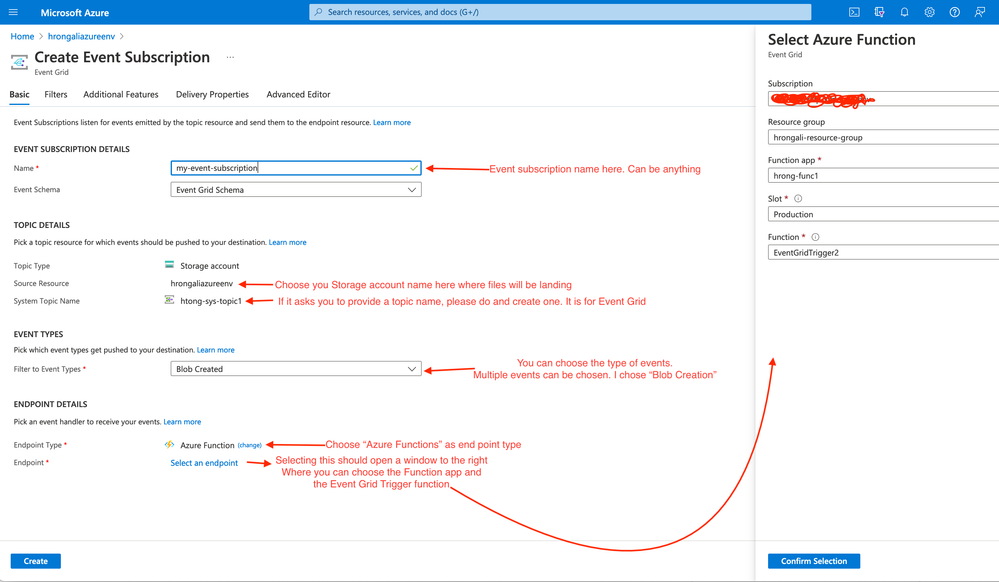

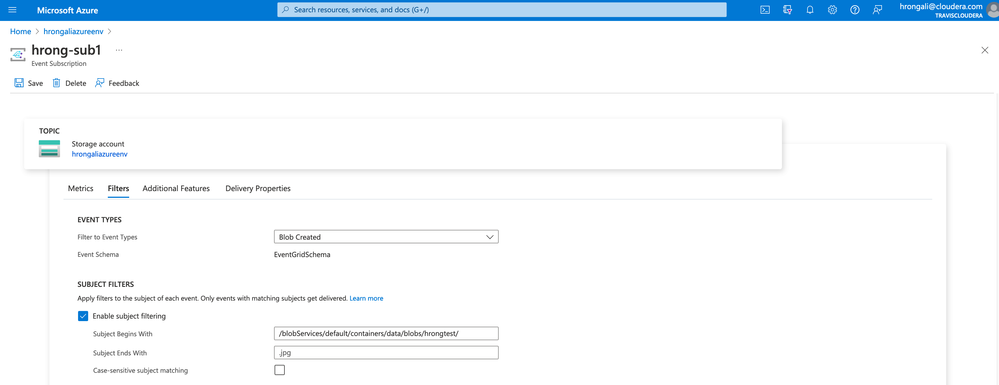

Create Event Grid subscription on storage account and configure filter to the directory to be monitored

- Create an Event Grid subscription on the storage account and configure a filter so that only the required container/directory is monitored. This is very important: without a filter, the function is triggered by activity in every container of the storage account, so be careful and test your filter thoroughly.

- Choose the correct filter for the container/directory you want to monitor. On the same page, click the "Filters" tab and tick the "Enable Subject Filtering" checkbox. In my case, I wanted to monitor hrongaliazureenv/data/hrongtest, where hrongaliazureenv is my storage account, data is a container inside that storage account, and hrongtest is a directory inside the data container. My equivalent filter is:

/blobServices/default/containers/data/blobs/hrongtest/
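The filter above is a prefix on the event subject. The following sketch shows how such a prefix is built from a container and directory name and how a prefix match behaves, which can help when testing a filter before relying on it. It only mimics the matching locally and is not Event Grid's implementation. The helper names are hypothetical, and the case-insensitive default is an assumption that you should check against your subscription's case-sensitivity setting.

```python
def subject_prefix(container: str, directory: str) -> str:
    """Build a 'subject begins with' prefix for a directory in a container."""
    directory = directory.strip("/")
    # The trailing slash keeps sibling directories such as "hrongtest2" out.
    return f"/blobServices/default/containers/{container}/blobs/{directory}/"


def subject_matches(subject: str, prefix: str, case_sensitive: bool = False) -> bool:
    """Return True if an event subject starts with the given prefix."""
    if not case_sensitive:
        subject, prefix = subject.casefold(), prefix.casefold()
    return subject.startswith(prefix)


if __name__ == "__main__":
    prefix = subject_prefix("data", "hrongtest")
    print(prefix)
    print(subject_matches(prefix + "upload1", prefix))
```

Note the effect of the trailing slash: without it, a directory named hrongtest2 in the same container would also match the filter.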

Start running the event driven pipeline with Event Grid/Azure functions and CDE

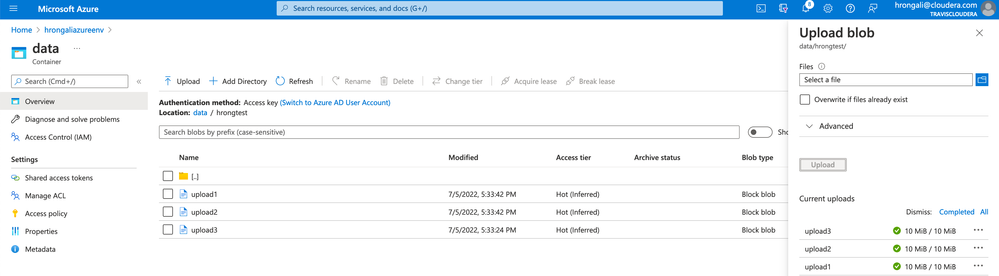

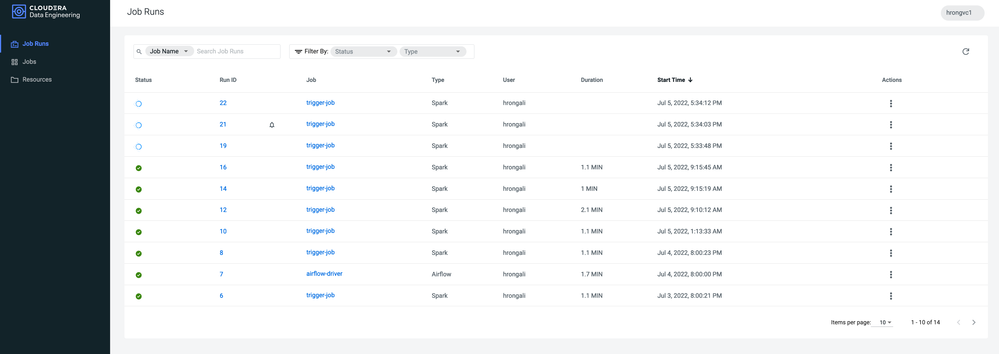

- Start uploading the blobs into the hrongtest directory in your storage account and you should see CDE Spark jobs running in CDE

- In this case, I have uploaded 3 blobs upload1, upload2 and upload3

- You should see logs for each function invocation in the Azure Functions monitoring tab:

From Azure functions --> Monitoring

- You should also see the CDE jobs triggered by the Azure function in the CDE console


Cloudera Data Engineering (CDE) makes it super simple to run Spark jobs at scale, and Azure cloud-native integration patterns such as Azure Event Grid open up many more possibilities for triggering them. Happy building in Azure with Cloudera Data Engineering (CDE)!