Created on 07-15-2022 05:43 AM - edited 01-11-2023 02:19 AM

- Introduction

- Migration Paths

- HDP 2.6 to CDP

- Export

- Import

- HDP 3.X to CDP

- Backup

- Restore

- Useful Links & Scripts

Introduction

Apache Atlas provides open metadata management and governance capabilities for organisations to build a catalog of their data assets, classify and govern these assets and provide collaboration capabilities around these data assets for data scientists, analysts and the data governance team.

This article focuses on backup and restore of Atlas data during HDP to CDP migration.

Migration Paths

HDP 2.6 to CDP

Export

Note: The following commands must be executed on the HDP cluster.

- Download the migration script onto the Atlas server host.

## Download the script

wget https://archive.cloudera.com/am2cm/hdp2/atlas-migration-exporter-0.8.0.2.6.6.0-332.tar.gz

## Untar

tar zxvf atlas-migration-exporter-0.8.0.2.6.6.0-332.tar.gz

## Copy the contents

mkdir /usr/hdp/2.6.5.0-292/atlas/tools/migration-exporter/

cp atlas-migration-exporter-0.8.0.2.6.6.0-332/* /usr/hdp/2.6.5.0-292/atlas/tools/migration-exporter/

chown -R atlas:hadoop /usr/hdp/2.6.5.0-292/atlas/tools/migration-exporter/

- Stop Atlas before taking the backup

## Stop Atlas via the Ambari UI

## /root/atlas_metadata is the backup directory used in this example

## Run the migration script

[root@ccycloud-1 ~]# python /usr/hdp/2.6.5.0-292/atlas/tools/migration-exporter/atlas_migration_export.py -d /root/atlas_metadata

atlas-migration-export: starting migration export. Log file location /var/log/atlas/atlas-migration-exporter.log

atlas-migration-export: initializing

atlas-migration-export: ctor: parameters: 3

atlas-migration-export: initialized

atlas-migration-export: exporting typesDef to file /root/atlas_metadata/atlas-migration-typesdef.json

atlas-migration-export: exported typesDef to file /root/atlas_metadata/atlas-migration-typesdef.json

atlas-migration-export: exporting data to file /root/atlas_metadata/atlas-migration-data.json

atlas-migration-export: exported data to file /root/atlas_metadata/atlas-migration-data.json

atlas-migration-export: completed migration export!

- Make sure the exported data files (in JSON format) are present in the backup location

## Make sure the backup is available

[root@ccycloud-1 ~]# ls -ltrh /root/atlas_metadata

total 240K

-rw-r--r-- 1 root root 32K Sep 8 02:57 atlas-migration-typesdef.json

-rw-r--r-- 1 root root 205K Sep 8 02:57 atlas-migration-data.json
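The manual `ls` check above can also be scripted. The following is a minimal sketch, assuming the two file names shown in the exporter output; `check_atlas_export` is a hypothetical helper and not part of the migration exporter. It only confirms that both files exist and are non-empty; it does not validate their contents.

```shell
# Sketch: confirm that both export files exist and are non-empty.
# check_atlas_export is a hypothetical helper, not shipped with the exporter.
check_atlas_export() {
    local dir="$1"
    local status=0
    local f
    for f in atlas-migration-typesdef.json atlas-migration-data.json; do
        if [ -s "$dir/$f" ]; then
            echo "OK: $dir/$f"
        else
            echo "MISSING or empty: $dir/$f" >&2
            status=1
        fi
    done
    return "$status"
}

# Example (backup directory from this article):
#   check_atlas_export /root/atlas_metadata
```

A non-zero return code means at least one file is missing or empty, so the export should be repeated before moving on to the import.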

Import

Note: The following commands must be executed on the CDP cluster.

Note: For this migration, Atlas on the CDP cluster must be empty before you follow the next steps.

To restore the Atlas metadata, start Atlas in migration mode. This can be done as follows:

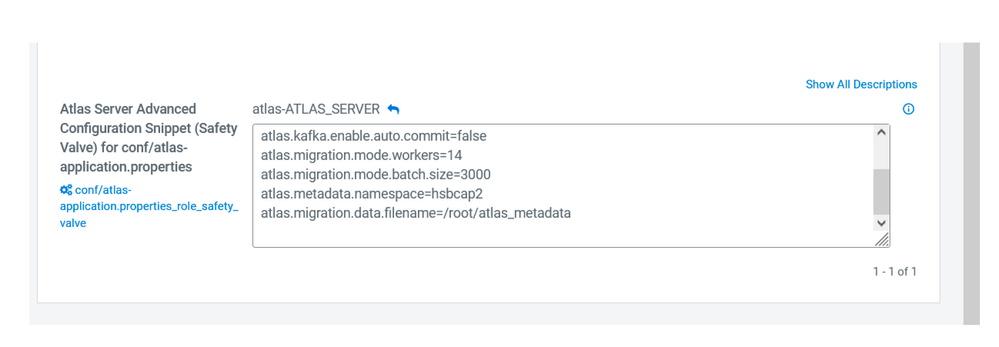

- Configure via CM UI >> Atlas >> conf/atlas-application.properties_role_safety_valve

atlas.migration.data.filename=/root/atlas_metadata

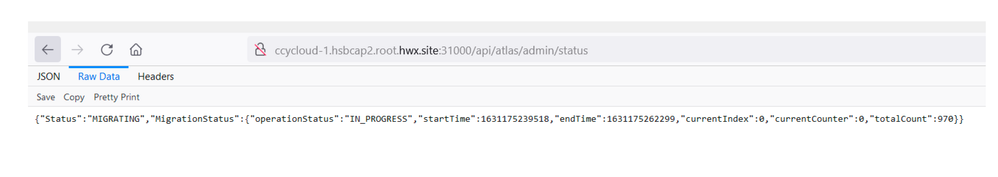

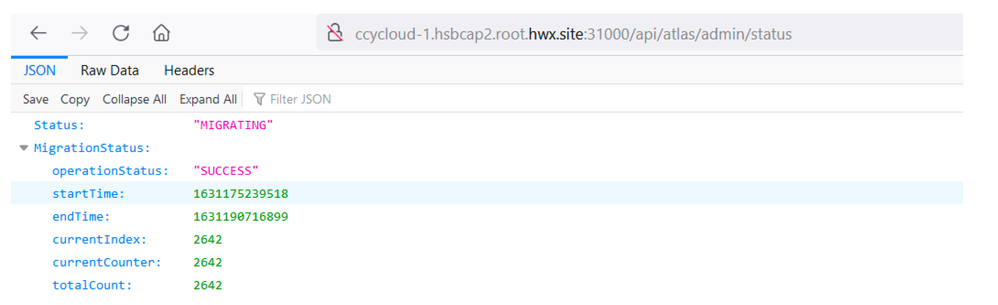

- Start Atlas and wait until the migration status shows as completed

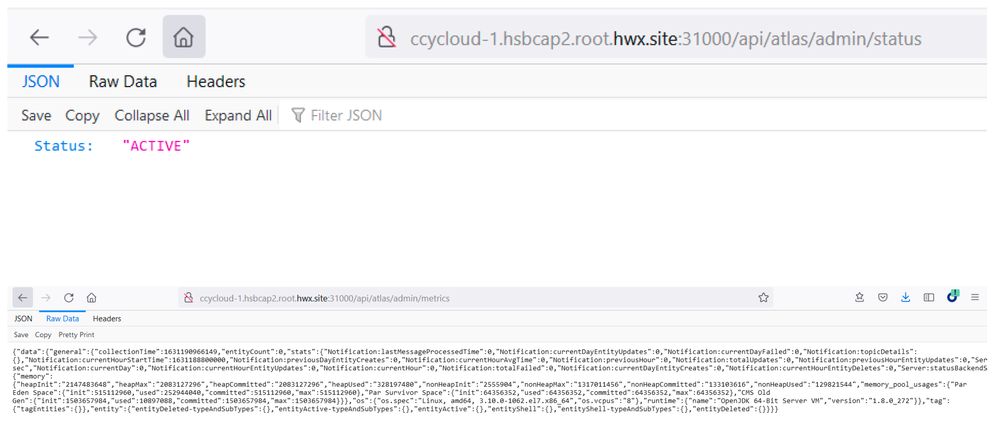

- After the migration has completed as shown above, remove the atlas.migration.data.filename property and restart Atlas again. Atlas should then report its normal status.
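The final status check can also be done from the command line instead of the UI. The following is a minimal sketch, assuming the Atlas admin status endpoint (`/api/atlas/admin/status`) is reachable and reports `"Status":"ACTIVE"` once Atlas is running normally. The base URL, credentials, retry count and pause are placeholders, and `atlas_status` and `wait_for_atlas_active` are hypothetical helper names. The exact response during migration mode is not taken from this article, so treat anything other than ACTIVE as "not ready yet".

```shell
# Sketch: poll the Atlas admin status endpoint until it reports ACTIVE.
# Assumptions: URL and credentials are placeholders for your environment.
atlas_status() {
    # $1 = base URL (e.g. http://atlas-host:21000), $2 = user:password
    curl -s -g -u "$2" "$1/api/atlas/admin/status"
}

wait_for_atlas_active() {
    local url="$1"
    local creds="$2"
    local tries="${3:-60}"   # maximum number of attempts
    local pause="${4:-30}"   # seconds to wait between attempts
    local i=1
    local body
    while [ "$i" -le "$tries" ]; do
        body="$(atlas_status "$url" "$creds")" || body=""
        echo "attempt $i: $body"
        if printf '%s' "$body" | grep -Eq '"Status"[[:space:]]*:[[:space:]]*"ACTIVE"'; then
            return 0
        fi
        sleep "$pause"
        i=$((i + 1))
    done
    return 1
}

# Example (run after removing atlas.migration.data.filename and restarting):
#   wait_for_atlas_active http://atlas-host:21000 admin:admin
```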

HDP 3.X to CDP

Backup

Note: The following commands must be executed on the HDP cluster.

Atlas metadata is stored in HBase tables and Infra-solr collections. Both locations need to be backed up.

- HBase backup

### script this

hbase shell

disable 'atlas_janus'

snapshot 'atlas_janus', 'atlas_janus-backup-new'

enable 'atlas_janus'

disable 'ATLAS_ENTITY_AUDIT_EVENTS'

snapshot 'ATLAS_ENTITY_AUDIT_EVENTS','ATLAS_ENTITY_AUDIT_EVENTS-backup-new'

enable 'ATLAS_ENTITY_AUDIT_EVENTS'

exit

## Linux cli

hbase org.apache.hadoop.hbase.snapshot.ExportSnapshot -snapshot 'atlas_janus-backup-new' -copy-to hdfs:///tmp/hbase_new_atlas_backups

hbase org.apache.hadoop.hbase.snapshot.ExportSnapshot -snapshot 'ATLAS_ENTITY_AUDIT_EVENTS-backup-new' -copy-to hdfs:///tmp/hbase_new_atlas_backups
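The interactive snapshot steps above can be generated rather than typed. The following is a minimal sketch that prints the same disable/snapshot/enable sequence for each table, using the `-backup-new` suffix from this article; `snapshot_commands` is a hypothetical helper. It assumes the HBase shell on your cluster supports non-interactive mode (`hbase shell -n`) for the final piping step.

```shell
# Sketch: print the HBase shell commands that snapshot each given table.
# snapshot_commands is a hypothetical helper; it only prints, it runs nothing.
snapshot_commands() {
    local table
    for table in "$@"; do
        printf "disable '%s'\n" "$table"
        printf "snapshot '%s', '%s-backup-new'\n" "$table" "$table"
        printf "enable '%s'\n" "$table"
    done
}

# Review the generated commands first:
#   snapshot_commands atlas_janus ATLAS_ENTITY_AUDIT_EVENTS
# Then feed them to a non-interactive HBase shell:
#   snapshot_commands atlas_janus ATLAS_ENTITY_AUDIT_EVENTS | hbase shell -n
```

Printing before executing keeps the disable/enable window visible and makes it easy to confirm the table names, since the tables are unavailable to Atlas while disabled.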

- Infra-solr collections backup

## Get a Kerberos ticket (kinit obtains the ticket; klist only lists keytab entries)

kinit -kt /etc/security/keytabs/ambari-infra-solr.service.keytab infra-solr/`hostname -f`@REALM

### Dump the Atlas collections, e.g. vertex_index (roughly 1 minute per million records)

/usr/lib/ambari-infra-solr-client/solrCloudCli.sh --zookeeper-connect-string zookeeper_host:2181/infra-solr --jaas-file /etc/ambari-infra-solr/conf/infra_solr_jaas.conf --dump-documents --collection vertex_index --output /home/solr/backup/atlas/vertex_index/data --max-read-block-size 100000 --max-write-block-size 100000

### Change the collection name and back up edge_index, fulltext_index and vertex_index one at a time.
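Repeating the dump for each collection is easy to get wrong by hand. The following is a minimal sketch that prints one dump command per collection, reusing the flags from the command above unchanged. `ZK_CONNECT`, `JAAS_FILE` and `BACKUP_ROOT` are placeholder variables and `dump_command` is a hypothetical helper. The sketch only prints the commands so they can be reviewed before anything runs.

```shell
# Sketch: print the dump command for each Atlas collection (dry run only).
# ZK_CONNECT, JAAS_FILE and BACKUP_ROOT are placeholders; adjust them first.
ZK_CONNECT="${ZK_CONNECT:-zookeeper_host:2181/infra-solr}"
JAAS_FILE="${JAAS_FILE:-/etc/ambari-infra-solr/conf/infra_solr_jaas.conf}"
BACKUP_ROOT="${BACKUP_ROOT:-/home/solr/backup/atlas}"

dump_command() {
    # $1 = collection name; flags are copied from the article's command.
    echo /usr/lib/ambari-infra-solr-client/solrCloudCli.sh \
        --zookeeper-connect-string "$ZK_CONNECT" \
        --jaas-file "$JAAS_FILE" \
        --dump-documents --collection "$1" \
        --output "$BACKUP_ROOT/$1/data" \
        --max-read-block-size 100000 --max-write-block-size 100000
}

for collection in vertex_index edge_index fulltext_index; do
    dump_command "$collection"
done
```

Each collection gets its own output directory under `BACKUP_ROOT`, matching the layout used in the vertex_index example.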

Restore

Note: The following commands must be executed on the CDP cluster.

Restore the HBase and Infra-solr backups into the CDP environment before starting Atlas on CDP.

- Restore HBase tables from snapshot

## Copy the backup directories to the target HDFS and run the restore commands

hbase shell

list_snapshots

restore_snapshot 'atlas_janus-backup-new'

restore_snapshot 'ATLAS_ENTITY_AUDIT_EVENTS-backup-new'

- Infra-solr restore

## Get a Kerberos ticket (kinit obtains the ticket; klist only lists keytab entries)

kinit -kt /etc/security/keytabs/ambari-infra-solr.service.keytab infra-solr/`hostname -f`@REALM

### Restore your collections, e.g. vertex_index (roughly 1 minute per million records)

/usr/lib/ambari-infra-solr-client/solrCloudCli.sh --zookeeper-connect-string zookeeper_host:2181/solr-infra --jaas-file /etc/ambari-infra-solr/conf/infra_solr_jaas.conf --upload-documents --collection vertex_index --output /home/solr/backup/atlas/vertex_index/data --max-read-block-size 100000 --max-write-block-size 100000

### Make sure to restore all 3 collections, i.e. vertex_index, fulltext_index and edge_index

- After restoring the HBase tables and Solr collections, start Atlas via the CM UI and validate the metrics.

Useful Links & Scripts

- Command to download the Atlas metrics. These metrics can be used for validation before and after the migration.

# curl -g -X GET -u admin:admin -H "Content-Type: application/json" -H"Cache-Control: no-cache" "http://ccycloud-1.hsbcap2.root.hwx.site:21000/api/atlas/admin/metrics" >atlas_metrics.json

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 261 0 261 0 0 64 0 --:--:-- 0:00:04 --:--:-- 64

# ls -ltrh atlas_metrics.json

-rw-r--r-- 1 root root 261 Sep 8 05:47 atlas_metrics.json

- Atlas also provides an API to export and import entities. Sample code can be found here:

https://atlas.apache.org/#/ImportExportAPI

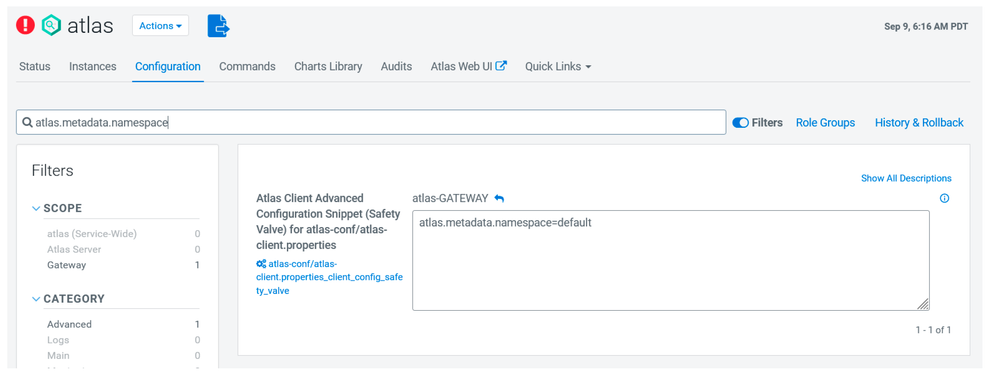

- A known issue during the migration is the namespace of the HBase tables. Make sure the tables are restored in the default namespace; if they are not, configure the correct namespace in the Atlas configuration as described below:

- https://docs.cloudera.com/cdp-private-cloud-upgrade/latest/upgrade-hdp/topics/amb-migrating-atlas-da...

- HBase backup and restore guide: https://community.cloudera.com/t5/Support-Questions/How-to-take-backup-of-Apache-Atlas-and-restore-i...

- https://blog.cloudera.com/approaches-to-backup-and-disaster-recovery-in-hbase/

Full Disclosure & Disclaimer:

I am an employee of Cloudera, but this article is not part of the formal documentation of the Cloudera Data Platform. It is based purely on my own experience of advising people on their choice of tooling and customisations.