Created on 07-15-2022 09:52 AM

This article explains how to configure Spark Connections in Cloudera Machine Learning.

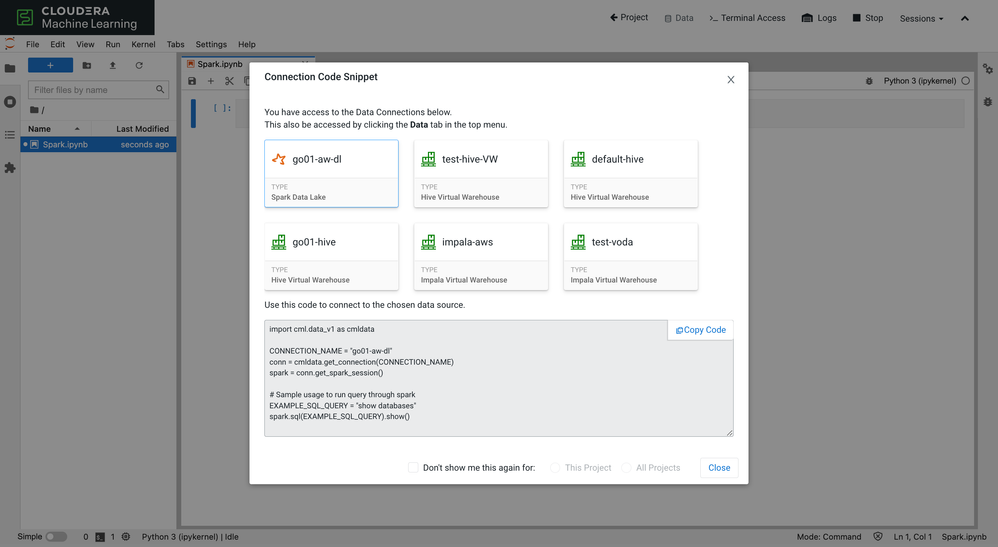

CML enables easy Spark data connections to the data stored in the Data Lake by abstracting the SparkSession connection details. CML users don't need to set complicated endpoint and configuration parameters or load HWC or Iceberg libraries. They can use the cml.data library to get preconfigured connections.

CML Data Connection Snippets


The SparkSession object (spark) returned by the snippet has all the options needed for the connection to work. Users have two ways to set custom or job-specific configuration, such as executor CPU and memory parameters.

1. Specify the configuration inline

With SparkContext's setSystemProperty method, you can set Spark properties that are picked up when the SparkSession object is built. Set any Spark property as shown below, before calling get_spark_session on the connection object.

Python script

import cml.data_v1 as cmldata

from pyspark import SparkContext

SparkContext.setSystemProperty('spark.executor.cores', '4')

SparkContext.setSystemProperty('spark.executor.memory', '8g')

CONNECTION_NAME = "go01-aw-dl"

conn = cmldata.get_connection(CONNECTION_NAME)

spark = conn.get_spark_session()
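When different jobs need different settings, the inline approach can be wrapped in a small helper. The following is a minimal sketch, not part of cml.data: the JOB_PROFILES dictionary and the apply_spark_properties function are hypothetical names introduced here for illustration. The setter is passed in as an argument so the sketch runs without pyspark.

```python
from typing import Callable, Dict

# Hypothetical per-job profiles; the names and values are illustrative only.
JOB_PROFILES: Dict[str, Dict[str, str]] = {
    "small": {"spark.executor.cores": "2", "spark.executor.memory": "4g"},
    "large": {"spark.executor.cores": "4", "spark.executor.memory": "8g"},
}


def apply_spark_properties(
    props: Dict[str, str], setter: Callable[[str, str], None]
) -> None:
    """Pass each property to `setter` as a string.

    Keys that do not start with "spark." are rejected, because Spark only
    loads system properties with that prefix into its configuration.
    """
    for key, value in props.items():
        if not key.startswith("spark."):
            raise ValueError(f"not a Spark property: {key!r}")
        setter(key, str(value))


if __name__ == "__main__":
    # Stand-in setter that records the calls in a dict, so this runs anywhere.
    recorded: Dict[str, str] = {}
    apply_spark_properties(JOB_PROFILES["large"], recorded.__setitem__)
    print(recorded)
```

In a CML session, the assumed usage would be to pass SparkContext.setSystemProperty as the setter, and to call the helper before conn.get_spark_session().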

2. Use spark-defaults.conf

By placing a file called spark-defaults.conf in your project root (/home/cdsw/), you can set Spark properties for your SparkSession. The properties configured in this file will be automatically appended to the global Spark defaults.

spark-defaults.conf

spark.executor.cores=4

spark.executor.memory=8g

Python script

import cml.data_v1 as cmldata

CONNECTION_NAME = "go01-aw-dl"

conn = cmldata.get_connection(CONNECTION_NAME)

spark = conn.get_spark_session()
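Before starting a session, it can help to check which properties the file would actually contribute. The following is a minimal sketch of a parser for simple spark-defaults.conf content. The function name parse_spark_defaults is hypothetical, and the parser is a simplification: it handles only "key=value" and "key value" lines plus # comments, not the full Java properties syntax (no line continuations or escapes).

```python
import re
from typing import Dict

# Key is everything up to the first "=" or whitespace; the rest is the value.
_LINE = re.compile(r"^([^\s=]+)\s*=?\s*(.*)$")


def parse_spark_defaults(text: str) -> Dict[str, str]:
    """Parse simple spark-defaults.conf content into a dict of strings."""
    props: Dict[str, str] = {}
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        match = _LINE.match(line)
        if match is None or not match.group(2):
            raise ValueError(f"line {number}: expected 'key=value' or 'key value'")
        props[match.group(1)] = match.group(2).strip()
    return props


if __name__ == "__main__":
    sample = "spark.executor.cores=4\nspark.executor.memory=8g\n"
    for key, value in parse_spark_defaults(sample).items():
        print(key, "->", value)
```

To check the real file, read it first, for example with pathlib.Path("/home/cdsw/spark-defaults.conf").read_text(), assuming the project root mentioned above.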

Conclusion

CML offers two ways to configure the Spark sessions created by its connection snippets. The inline option suits users who want to fine-tune their Spark applications and configure different properties per Spark job. The spark-defaults.conf option suits users who want to set Spark properties that apply to the whole project.

To learn more about the Data Connections feature, see the following blog post: https://blog.cloudera.com/one-line-away-from-your-data/

Created on 04-18-2024 01:20 AM


Great article!

However, there are some complications when attempting this in a Kerberised cluster.

When following the guide to a T, we already get an error at these lines:

SparkContext.setSystemProperty('spark.executor.cores', '4')

SparkContext.setSystemProperty('spark.executor.memory', '8g')

Exception in thread "main" java.lang.IllegalArgumentException: Can't get Kerberos realm

at ...

at ...

at ...

Caused by: java.lang.IllegalArgumentException: KrbException: Cannot locate default realm

at ...

at ...

... 12 more

Are there any particular steps regarding this matter?