- Semi-structured Dataset Upload-Time Comparison Bet...

Created on 08-29-2017 08:07 AM - edited 08-17-2019 11:24 AM

Introduction

The purpose of this article is to compare the upload time of three methods for loading the same semi-structured dataset: two methods that load into Hadoop and one that loads into MariaDB.

Assumptions & Design

A small environment was used to deploy a three-node Hadoop cluster (one master node and two worker nodes). The exercise was run from my laptop, which has the following specs:

Processor Name: Intel Core i7

Processor Speed: 2.5 GHz

Number of Processors: 1

Total Number of Cores: 4

Memory: 16 GB

The Hadoop cluster is virtualized on my Mac using Oracle VM VirtualBox Manager. The virtual Hadoop nodes have the following specs:

Table 1: Hadoop cluster node specifications

| Specification | Namenode (Master node) | Datanode #1 (Worker node #1) | Datanode #2 (Worker node #2) |
| --- | --- | --- | --- |
| Hostname | hdpnn.lab1.com | hdpdn1.lab1.com | hdpdn2.lab1.com |
| Memory | 4646 MB | 3072 MB | 3072 MB |
| Number of CPUs | 3 | 2 | 2 |
| Hard disk size | 20 GB | 20 GB | 20 GB |
| OS | CentOS-7-x86_64-Minimal | CentOS-7-x86_64-Minimal | CentOS-7-x86_64-Minimal |
| IP Address | 192.168.43.15 | 192.168.43.16 | 192.168.43.17 |

A standalone virtual machine hosts the MariaDB database, with the following specs:

Hostname: mariadb.lab1.com

IP Address: 192.168.43.55

Memory: 12 GB

Disk: 40 GB

OS: CentOS 7 (Linux)

The semi-structured dataset used is the mail archive of the Apache Software Foundation (ASF), around 200 GB in total. The archive contains the communications of more than 80 open-source projects, such as Hadoop, Hive, Sqoop, ZooKeeper, HBase, Storm, Kafka and many more. It can be downloaded with the "wget" command or any similar tool from this URL: http://mail-archives.apache.org/mod_mbox/

Results

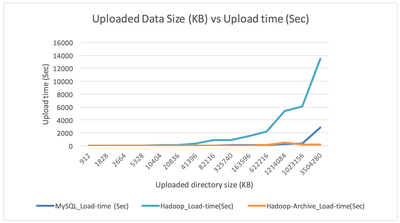

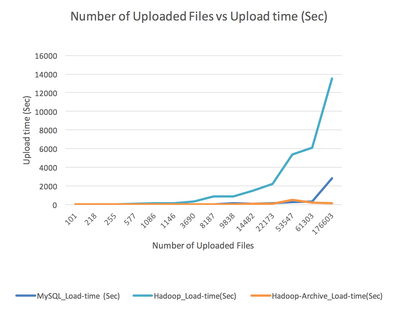

Results collected from uploading mail files to the Hadoop cluster

The following results were collected from 14 separate uploads. The first 13 each load a different sub-directory; the sub-directories vary in total size, number of files, and size of the contained files. The last upload loads all 13 sub-directories at once. Two upload methods were used, and their upload times differ significantly. The first is a normal upload of all files directly from the local file system of the Hadoop cluster. The second uses Hadoop Archive (HAR), a Hadoop capability that combines files into an archive before writing it to HDFS.
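The article does not list the exact commands behind the two methods, so the following is only a sketch of how they might be invoked and timed from Python. It assumes the standard Hadoop CLI forms `hdfs dfs -put` and `hadoop archive -archiveName <name> -p <parent> <src> <dest>`; every path and the archive name are hypothetical placeholders, and the staging location passed to the archive tool is likewise an assumption.

```python
import subprocess
import time
from typing import List


def direct_put_cmd(local_dir: str, hdfs_dir: str) -> List[str]:
    """Command for a plain upload: every file is copied to HDFS individually."""
    return ["hdfs", "dfs", "-put", local_dir, hdfs_dir]


def har_cmd(archive_name: str, parent: str, src: str, dest: str) -> List[str]:
    """Command for packing `src` (relative to `parent`) into a Hadoop Archive."""
    return ["hadoop", "archive", "-archiveName", archive_name,
            "-p", parent, src, dest]


def timed_run(cmd: List[str]) -> float:
    """Run a command and return its wall-clock duration in seconds."""
    start = time.perf_counter()
    subprocess.run(cmd, check=True)
    return time.perf_counter() - start


# Hypothetical paths, shown only to illustrate the shape of each command.
put = direct_put_cmd("/data/mail/lucene-dev", "/user/demo/mail")
har = har_cmd("lucene-dev.har", "/user/demo/staging", "lucene-dev",
              "/user/demo/archives")
print(" ".join(put))
print(" ".join(har))
```

On a machine with a configured Hadoop client, `timed_run(put)` would return a duration comparable to the load times reported in the results table; the sketch above only prints the commands and does not execute them.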

Table 2: Results collected from uploading mail files to the Hadoop cluster

| Loaded directory | Directory size (KB) | Number of uploaded files | Avg. size of uploaded files (KB) | Direct load time (1st attempt) | Direct load time (2nd attempt) | HAR load time (1st attempt) | HAR load time (2nd attempt) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| lucene-dev | 1214084 | 53547 | 22.67 | 89m16.790s | 70m43.092s | 2m54.563s | 2m28.416s |
| tomcat-users | 1023156 | 61303 | 16.69 | 101m45.870s | 86m48.927s | 3m17.214s | 2m59.006s |
| cxf-commits | 612216 | 22173 | 27.61 | 36m30.333s | 29m37.924s | 1m28.457s | 1m15.189s |
| usergrid-commits | 325740 | 9838 | 33.11 | 14m50.757s | 14m8.545s | 0m54.038s | 0m44.905s |
| accumulo-notifications | 163596 | 14482 | 11.30 | 24m38.159s | 24m49.356s | 1m3.650s | 0m27.550s |
| zookeeper-user | 82116 | 8187 | 10.03 | 14m40.461s | 14m34.136s | 0m47.865s | 0m40.913s |
| synapse-user | 41396 | 3690 | 11.22 | 5m24.744s | 4m47.196s | 0m38.167s | 0m29.043s |
| incubator-ace-commits | 20836 | 1146 | 18.18 | 2m28.330s | 2m4.168s | 0m25.042s | 0m23.401s |
| incubator-batchee-dev | 10404 | 1086 | 9.58 | 2m18.903s | 2m19.044s | 0m27.165s | 0m23.166s |
| incubator-accumulo-user | 5328 | 577 | 9.23 | 1m10.201s | 1m2.328s | 0m26.572s | 0m23.300s |
| subversion-announce | 2664 | 255 | 10.45 | 0m50.596s | 0m32.339s | 0m29.247s | 0m21.578s |
| www-small-events-discuss | 1828 | 218 | 8.39 | 0m45.215s | 0m20.160s | 0m22.847s | 0m21.035s |
| openoffice-general-ja | 912 | 101 | 9.03 | 0m26.837s | 0m7.898s | 0m21.764s | 0m19.905s |
| All previous directories | 3504280 | 176603 | 19.84 | 224m49.673s | Not tested | 8m13.950s | 8m46.144s |
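To compare the two Hadoop methods numerically, the load times first need converting from the `XmY.YYYs` notation into seconds. The sketch below does that for three rows copied from the table (first attempts only) and prints the ratio of direct upload time to HAR upload time. The function names are illustrative and not part of the original exercise.

```python
import re

# Matches durations such as "89m16.790s" (minutes, then fractional seconds).
_TIME_RE = re.compile(r"^(\d+)m(\d+(?:\.\d+)?)s$")


def to_seconds(stamp: str) -> float:
    """Convert an 'XmY.YYYs' duration string into seconds."""
    match = _TIME_RE.match(stamp)
    if match is None:
        raise ValueError(f"unrecognised duration: {stamp!r}")
    minutes, seconds = match.groups()
    return int(minutes) * 60 + float(seconds)


def speedup(direct: str, har: str) -> float:
    """How many times faster the HAR load was than the direct load."""
    return to_seconds(direct) / to_seconds(har)


# (direct 1st attempt, HAR 1st attempt), copied from the results table.
rows = {
    "lucene-dev": ("89m16.790s", "2m54.563s"),
    "openoffice-general-ja": ("0m26.837s", "0m21.764s"),
    "All previous directories": ("224m49.673s", "8m13.950s"),
}

for name, (direct, har) in rows.items():
    print(f"{name}: HAR was {speedup(direct, har):.1f}x faster")
```

For the largest directories the ratio is around 30, while for the smallest directory (101 files) it is close to 1, which suggests the archive step has a fixed cost of roughly 20 seconds in this environment.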

Results collected from uploading mail files to MariaDB

The following results were collected from 14 separate uploads. The first 13 each load a different sub-directory; the sub-directories vary in total size, number of files, and size of the contained files. The last upload loads all 13 sub-directories at once.

Table 3: Results collected from uploading mail files to MariaDB

| Loaded directory | Total size (KB) | Number of loaded files | Avg. size of loaded files (KB) | Load time (1st attempt) | Load time (2nd attempt) |
| --- | --- | --- | --- | --- | --- |
| lucene-dev | 1214084 | 53547 | 22.67 | 4m56.730s | 4m57.884s |
| tomcat-users | 1023156 | 61303 | 16.69 | 5m40.320s | 5m38.747s |
| cxf-commits | 612216 | 22173 | 27.61 | 2m4.504s | 2m2.992s |
| usergrid-commits | 325740 | 9838 | 33.11 | 2m30.519s | 0m55.091s |
| accumulo-notifications | 163596 | 14482 | 11.30 | 1m14.929s | 1m16.046s |
| zookeeper-user | 82116 | 8187 | 10.03 | 0m39.250s | 0m40.822s |
| synapse-user | 41396 | 3690 | 11.22 | 0m18.205s | 0m18.580s |
| incubator-ace-commits | 20836 | 1146 | 18.18 | 0m5.794s | 0m5.733s |
| incubator-batchee-dev | 10404 | 1086 | 9.58 | 0m5.310s | 0m5.276s |
| incubator-accumulo-user | 5328 | 577 | 9.23 | 0m2.869s | 0m2.657s |
| subversion-announce | 2664 | 255 | 10.45 | 0m1.219s | 0m1.228s |
| www-small-events-discuss | 1828 | 218 | 8.39 | 0m1.045s | 0m1.027s |
| openoffice-general-ja | 912 | 101 | 9.03 | 0m0.535s | 0m0.496s |
| All previous directories | 3504280 | 176603 | 19.84 | 46m55.311s | 17m31.941s |
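One way to put the three methods on a common scale is throughput in files per second. The sketch below computes it for the "All previous directories" row (176603 files), using the first-attempt times reported for each method in the two results tables. The helper name is illustrative.

```python
def files_per_second(files: int, minutes: int, seconds: float) -> float:
    """Average number of files loaded per second of wall-clock time."""
    return files / (minutes * 60 + seconds)


TOTAL_FILES = 176603  # "All previous directories" row

# First-attempt load times as (minutes, seconds), copied from the tables.
first_attempts = {
    "HDFS direct upload": (224, 49.673),
    "Hadoop Archive (HAR)": (8, 13.950),
    "MariaDB": (46, 55.311),
}

for method, (minutes, seconds) in first_attempts.items():
    rate = files_per_second(TOTAL_FILES, minutes, seconds)
    print(f"{method}: {rate:.1f} files/s")
```

On these figures the direct HDFS upload handles on the order of 13 files per second, MariaDB roughly 60, and HAR several hundred. The MariaDB second attempt (17m31.941s) was considerably faster than the first, so its rate depends strongly on which attempt is used.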

Figure 1: Uploaded data size (KB) vs. upload time (seconds).

Figure 2: Number of uploaded files vs. upload time (seconds).

Conclusion

Traditional data warehouses can be tuned to store small semi-structured data, which is valid and applicable for small uploads. As the number of files grows, this may stop being the best option, especially when uploading a massive number of files (millions and above).

Uploading small files into Hadoop is resource-intensive, and uploading a massive number of small files can degrade the performance of the Hadoop cluster dramatically. In a normal upload to HDFS, every single file is written as a separate operation, with its own NameNode metadata entry and its own write to the DataNodes.

Using the Hadoop Archive (HAR) tool is critical when loading a massive number of small files at once. HAR packs the files together into a small number of large part files accompanied by an index, so far fewer files are written to HDFS, which reduces upload time significantly. Note that querying data stored in a HAR will not perform the same as querying files uploaded directly to HDFS, because reading from a HAR requires an additional index lookup to locate each file.

Future Work

I plan to repeat the same exercise on a bigger cluster with higher hardware specs to validate these conclusions.
