Social Media Monitoring with NiFi, Hive/Druid Inte...

Created on 05-28-2018 04:21 PM - edited 09-16-2022 01:43 AM

How do you know if your customers (and potential customers) are talking about you on social media?

The key to making the most of social media is listening to what your audience says about you, your competitors, and the market in general. Once you have the data, you can analyze it and, finally, reach social business intelligence: using those insights to know your customers better and improve your marketing strategy.

This is the third part of a series of articles on how to ingest social media data as a stream by integrating HDP and HDF tools.

To follow this article, you first need to complete the previous two.

Let’s get started!

In this new article, we will address two main points:

1 - How to collect data from various social networks at the same time

2 - How to integrate storage between Hive and Druid, making the most of this integration.

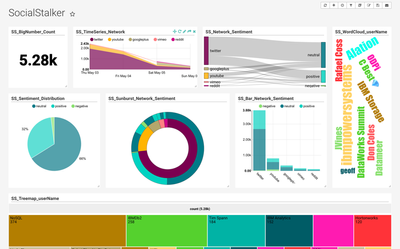

I've called this project "The Social Media Stalker".

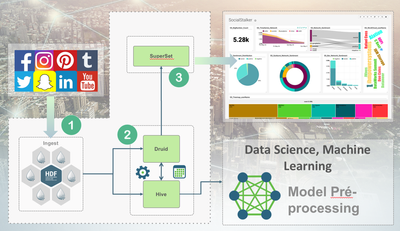

So, our new architecture diagram would look like this:

OK, it's time to get hands-on!

Let's divide this work into 3 parts:

- Create NiFi ingestion for Druid

- Set up Hive-Druid Integration

- Update our Superset Dashboard

1. Create NiFi ingestion for Druid

You can access each social network through its own API, but that takes time and patience. Let's cut to the chase and go straight to a single service that collects data from all social networks through one API.

There are a lot of social media monitoring tools: https://www.brandwatch.com/blog/top-10-free-social-media-monitoring-tools/

We are going to use this one: https://www.social-searcher.com/ (its main advantage is that the API response includes sentiment analysis).

Getting data for the social networks you want is quite simple: just call the API with parameters such as q (the search term) and network (the desired social network).

Example:

https://api.social-searcher.com/v2/search?q=Hortonworks&network=facebook&limit=20
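If you want to check these requests outside NiFi before wiring up InvokeHTTP, the URL can be assembled programmatically. The Python sketch below uses only the q, network, and limit parameters shown in the example; build_search_url is a hypothetical helper, not part of any Social Searcher SDK, and if your account requires extra parameters (for instance an API key), you would add them to the same dictionary.

```python
from urllib.parse import urlencode

# Endpoint taken from the example request above.
BASE_URL = "https://api.social-searcher.com/v2/search"


def build_search_url(term: str, network: str, limit: int = 20) -> str:
    """Build a Social Searcher search URL (hypothetical helper for illustration)."""
    # urlencode percent-encodes the values, so multi-word or accented search
    # terms are safe to pass; spaces become '+'.
    params = {"q": term, "network": network, "limit": limit}
    return f"{BASE_URL}?{urlencode(params)}"


if __name__ == "__main__":
    # Reproduces the example URL from the text.
    print(build_search_url("Hortonworks", "facebook"))
```

Only the network value changes from one social network to the next, so each request in the flow differs by a single parameter.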

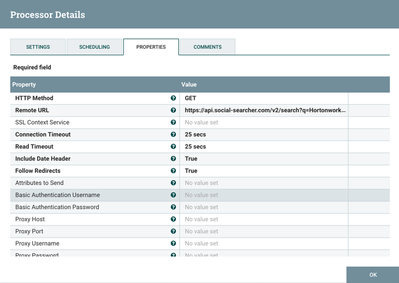

To make this request, let's use the InvokeHTTP processor in NiFi.

This request returns records with a schema like this:

{ "userId", "lang", "location", "name", "network", "posted", "sentiment", "text" }

I did the same for 7 social networks (Twitter, Facebook, YouTube, Instagram, Reddit, GooglePlus, Vimeo) to get a flow like this:

*To build this NiFi flow, follow the previous article.

I've updated my ReplaceText processor with this replacement value:

{"userId":"${user.userId}","lang":"${user.lang}","location":"${user.location}","name":"${user.name}","network":"${user.network}","posted":"${user.posted}","sentiment":"${user.sentiment}","text":"${user.text:replaceAll('[$&+,:;=?@#|\'<>.^*()%!-]',''):replace('"',''):replace('\n','')}","timestamp":${now():toNumber()}}

You also need to create your table in Druid, adding the sentiment and network fields as described in the previous articles. I called my Druid table "SocialStalker". Once that is done, just press play to see all the data in Druid.
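Because the ReplaceText template builds JSON by string concatenation, it can help to reproduce its cleaning rules offline and see exactly what reaches Druid. The Python sketch below applies the same three clean-ups to the text field (the character class from replaceAll, then removal of double quotes and newlines) and reads the user.* attribute names used in the template. The function names are hypothetical; missing attributes become empty strings, mirroring how NiFi Expression Language treats an absent attribute, and the timestamp is written in epoch milliseconds (in NiFi Expression Language, ${now():toNumber()} yields the same kind of value).

```python
import json
import re
import time
from typing import Dict, Optional

# Same character class as the replaceAll() call in the ReplaceText template.
STRIP_CHARS = re.compile(r"[$&+,:;=?@#|'<>.^*()%!-]")

# Attributes copied as-is (prefixed with "user." in the NiFi flow).
PLAIN_FIELDS = ["userId", "lang", "location", "name", "network", "posted", "sentiment"]


def clean_text(text: str) -> str:
    """Apply the template's three clean-ups to the post text, in the same order."""
    text = STRIP_CHARS.sub("", text)  # replaceAll('[...]', '')
    text = text.replace('"', "")      # replace('"', '')
    return text.replace("\n", "")     # replace('\n', '')


def build_record(attrs: Dict[str, str], now_ms: Optional[int] = None) -> str:
    """Build one JSON line for Druid from FlowFile-style attributes (hypothetical helper)."""
    # A missing attribute becomes "", as in NiFi Expression Language.
    record = {f: attrs.get(f"user.{f}", "") for f in PLAIN_FIELDS}
    record["text"] = clean_text(attrs.get("user.text", ""))
    # Current time in epoch milliseconds unless a fixed value is supplied.
    record["timestamp"] = now_ms if now_ms is not None else int(time.time() * 1000)
    return json.dumps(record)


if __name__ == "__main__":
    sample = {"user.network": "twitter", "user.sentiment": "positive",
              "user.text": 'Loving "Druid" + Hive!\nGreat stuff.'}
    print(build_record(sample))
```

Serializing with json.dumps rather than concatenating strings also escapes characters the template does not strip, such as backslashes, tabs, and carriage returns, which would otherwise produce invalid JSON.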

2. Set up Hive-Druid Integration

Our main goal is to index data from Hive into Druid and to query Druid data sources from Hive. Completing this work brings benefits to both Druid and Hive:

– Efficient execution of OLAP queries in Hive. Druid is especially well suited to executing OLAP queries on event data, and Hive can take advantage of that efficiency for this type of query.

– Introducing a SQL interface on top of Druid. Druid queries are expressed in JSON, and Druid is queried through a REST API over HTTP. Once a user has declared a Hive table that is stored in Druid, we will be able to transparently generate Druid JSON queries from the input Hive SQL queries.

– Being able to execute complex operations on Druid data. There are several operations that Druid does not yet support natively, e.g. joins. Putting Hive on top of Druid enables more complex queries on Druid data sources.

– Indexing complex query results in Druid using Hive. Currently, indexing in Druid is usually done through MapReduce jobs. We will enable Hive to index the results of a given query directly into Druid, e.g., as a new table or a materialized view (HIVE-10459), and start querying and using that dataset immediately.
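To make the SQL-interface point concrete: Druid's native queries are JSON documents sent to the broker over HTTP. The Python sketch below builds a groupBy query that counts rows per sentiment per day for one network in the SocialStalker datasource, roughly the kind of query a Hive GROUP BY on that table can be translated into; the exact JSON Hive generates may differ, and running EXPLAIN on the Hive query should show it. The broker address is a placeholder (8082 is Druid's default broker port; check your cluster configuration), and the helper names are hypothetical.

```python
import json
import urllib.request

# Placeholder broker address: adjust host and port for your cluster.
BROKER_URL = "http://localhost:8082/druid/v2/"


def sentiment_per_day(network: str, interval: str) -> dict:
    """Druid native groupBy query: rows per sentiment per day for one network."""
    return {
        "queryType": "groupBy",
        "dataSource": "SocialStalker",
        "granularity": "day",
        "dimensions": ["sentiment"],
        "filter": {"type": "selector", "dimension": "network", "value": network},
        # 'count' counts stored Druid rows; this equals the number of posts
        # only if ingestion rollup did not merge rows.
        "aggregations": [{"type": "count", "name": "rows"}],
        # ISO-8601 interval; it also limits which segments Druid has to read.
        "intervals": [interval],
    }


def run_query(query: dict) -> list:
    """POST the query to the broker and decode the JSON result (needs a live cluster)."""
    request = urllib.request.Request(
        BROKER_URL,
        data=json.dumps(query).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    print(json.dumps(sentiment_per_day("twitter", "2018-05-01/2018-06-01"), indent=2))
```

Writing and maintaining JSON like this by hand is exactly what the Hive integration spares you: you write SQL, and the storage handler produces the Druid query.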


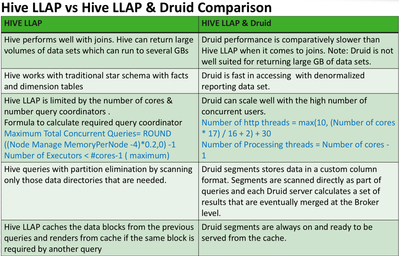

Although the two overlap when you use Hive LLAP, it's important to look at the advantages of each separately:

The power of Druid comes from precise IO optimization, not brute compute force.

For better performance, queries should drill down on selected dimensions within a given timestamp range.

Druid queries should use timestamp predicates so that Druid knows which segments it needs to scan; this yields better performance.

Any UDFs or SQL functions applied to Druid tables are executed by Hive, so the performance of these queries depends solely on Hive; they do not run as Druid queries.

Likewise, if aggregations over aggregated data are needed, the query runs as a Hive LLAP query, not as a Druid query.
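The timestamp advice follows from how Druid stores data: a datasource is partitioned by time into segments, and the query's time interval decides which segments are read at all. The toy Python model below is not Druid code; one segment per day is an assumption (segment granularity is configured at ingestion). It shows a one-day predicate touching a single segment while a query over the whole month touches all of them. In Hive, that predicate is a filter on the Druid table's __time column.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

Interval = Tuple[datetime, datetime]  # half-open [start, end)


def daily_segments(first_day: datetime, days: int) -> List[Interval]:
    """Toy datasource: one segment per day (assumed DAY segment granularity)."""
    return [(first_day + timedelta(days=i), first_day + timedelta(days=i + 1))
            for i in range(days)]


def segments_to_scan(segments: List[Interval], start: datetime, end: datetime) -> List[Interval]:
    """Keep only segments whose interval overlaps the query interval [start, end)."""
    return [(s, e) for s, e in segments if s < end and e > start]


if __name__ == "__main__":
    may = daily_segments(datetime(2018, 5, 1), 31)
    one_day = segments_to_scan(may, datetime(2018, 5, 28), datetime(2018, 5, 29))
    whole_month = segments_to_scan(may, datetime(2018, 5, 1), datetime(2018, 6, 1))
    print(len(one_day), len(whole_month))  # 1 31
```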

Hands-on Hive-Druid!

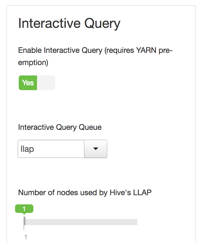

To do this, you first need to be using Hive Interactive (with LLAP), which the Druid integration requires.

--> Enable Hive Interactive Query

--> Download hive-druid-handler

If you do not have hive-druid-handler in your HDP version, just download it:

https://javalibs.com/artifact/org.apache.hive/hive-druid-handler

https://github.com/apache/hive/tree/master/druid-handler

…and copy it into the hive-server2/lib folder:

cp hive-druid-handler-3.0.0.3.0.0.3-2.jar /usr/hdp/current/hive-server2/lib

...then restart Hive.

Next, we need to provide Hive with information about the Druid data sources.

Let's register each Druid data source that already holds data as an external table in Hive (CREATE EXTERNAL TABLE):

// ADD JAR /usr/hdp/current/hive-server2/lib/hive-druid-handler-3.0.0.3.0.0.3-2.jar;

CREATE EXTERNAL TABLE SocialStalkerSTORED BY 'org.apache.hadoop.hive.druid.DruidStorageHandler'

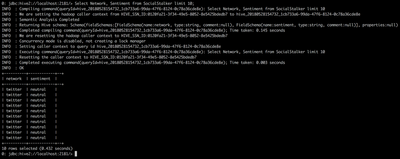

TBLPROPERTIES ("druid.datasource" = "SocialStalker");Now, you should be able to query all Druid Data in Hive, but for that you MUST use Beeline in Interactive mode.

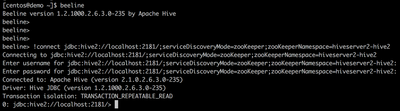

beeline
!connect jdbc:hive2://localhost:2181/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2-hive2
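The JDBC URL in the connect command does not name a HiveServer2 host: it points at ZooKeeper, and the driver looks up a registered Hive Interactive server under the given namespace. On a multi-node cluster you would list every ZooKeeper server. The small Python helper below (hypothetical, for illustration) assembles such a URL; the namespace must match hive.server2.zookeeper.namespace in your Hive Interactive configuration, and the ZooKeeper host names are placeholders.

```python
from typing import Sequence


def hs2_zookeeper_url(zk_quorum: Sequence[str], namespace: str) -> str:
    """Build a HiveServer2 JDBC URL that uses ZooKeeper service discovery."""
    if not zk_quorum:
        raise ValueError("at least one ZooKeeper host:port is required")
    hosts = ",".join(zk_quorum)  # e.g. "zk1:2181,zk2:2181,zk3:2181"
    return (f"jdbc:hive2://{hosts}/"
            f";serviceDiscoveryMode=zooKeeper;zooKeeperNamespace={namespace}")


if __name__ == "__main__":
    # Reproduces the URL used in the connect command.
    print(hs2_zookeeper_url(["localhost:2181"], "hiveserver2-hive2"))
```

The same URL can also be passed when starting Beeline, with beeline -u "<url>", instead of typing !connect at the prompt.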

Finally, you can use your Beeline terminal to run any query against your Druid table.

You can insert data into your table and update your Superset slices (as in the previous articles) to complete step 3 and see a Superset dashboard like this one:

Conclusion:

You can use HDP and HDF to build an end-to-end platform that helps your social media marketing campaigns, and ultimately your business, succeed. If you don't pay attention to how your business is doing, you are really only doing half of the job. It is the difference between walking around in the dark and having an illuminated path: an understanding of how your business is doing and how you can keep improving it to gain more and more exposure and a rock-solid reputation.

Many companies are using social media monitoring to strengthen their businesses. Those business people are savvy enough to realize the importance of social media, how it positively influences their businesses and how critical the monitoring piece of the strategy is to their ultimate success.
