- Understanding how NiFi's Content Repository Archiv...

Created on 02-08-2017 09:55 PM - edited 09-26-2023 11:05 AM

What is Content Repository Archiving?

There are three properties in the nifi.properties file that deal with the archiving of content in the NiFi Content Repository.

The default NiFi values for these are shown below:

nifi.content.repository.archive.max.retention.period=12 hours
nifi.content.repository.archive.max.usage.percentage=50%
nifi.content.repository.archive.enabled=true

The purpose of content archiving is to let users view and/or replay, via the provenance UI, content that is no longer in their dataflow(s). The configured values do not have any impact on the amount of provenance history that is retained. If content associated with a particular provenance event no longer exists in the content archive, provenance will simply report to the user that the content is not available.

The content archive is kept within the same directory or directories where you have configured your content repository(s) to exist. When a "content claim" is archived, that claim is moved into an archive subdirectory within the same disk partition where it originally existed. This keeps archiving from affecting NiFi's content repository performance with the unnecessary writes that would come from moving archived files to a new disk/partition, for example.

The configured max retention period tells NiFi how long to keep an archived "content claim" before purging it from the content archive directory.

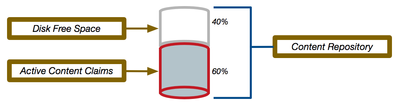

The configured max usage percentage tells NiFi at what point it should start purging archived content claims to keep the overall disk usage at or below the configured percentage. This is a soft limit. Let's say the content repository is at 49% usage and a 4 GB content claim then becomes eligible for archiving. Once this content claim is archived, the usage may exceed the configured 50% threshold. At the next checkpoint, NiFi will remove the oldest archived content claim(s) to bring the overall disk usage back to or below 50%. For this reason, this value should never be set to 100%.

The above two properties are enforced using an or policy. Whichever max occurs first will trigger the purging of archived content claims.
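The "or" policy described above can be sketched roughly as follows. This is a simplified illustration, not NiFi's actual implementation: the `ArchivedClaim` class, the `claims_to_purge` function, and the idea of measuring age from the moment of archiving are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class ArchivedClaim:
    name: str                  # hypothetical identifier for the archived claim
    size: float                # size in the same unit as the disk figures below
    archived_age_hours: float  # assumed: hours since the claim was archived


def claims_to_purge(archived, disk_used, disk_total,
                    max_retention_hours=12.0, max_usage_fraction=0.50):
    """Return the names of archived claims that would be purged.

    A claim is purged when EITHER it is older than the retention period
    OR the disk is still above the usage threshold (oldest claims first).
    """
    purged = []
    used = disk_used
    # Walk the archive from oldest to newest.
    for claim in sorted(archived, key=lambda c: c.archived_age_hours, reverse=True):
        too_old = claim.archived_age_hours > max_retention_hours
        over_usage = used > max_usage_fraction * disk_total
        if too_old or over_usage:
            purged.append(claim.name)
            used -= claim.size
    return purged


# Disk is at 53% after a 4 GB claim was archived; nothing is older than 12 hours.
archive = [
    ArchivedClaim("a", 2, 1.0),
    ArchivedClaim("b", 2, 3.0),
    ArchivedClaim("c", 4, 0.0),
]
usage_driven = claims_to_purge(archive, disk_used=53, disk_total=100)

# Disk is nearly empty, but one claim has passed the retention period.
age_driven = claims_to_purge([ArchivedClaim("old", 1, 13.0)], disk_used=10, disk_total=100)
```

In the first case the two oldest claims are removed to bring usage back under 50%, even though none has reached 12 hours. In the second case the claim is removed purely because of its age.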

Let's look at a couple of examples:

Example 1:

Here you can see that our Content Repository has 35% of its disk consumed by Content Claims that are tied to FlowFiles still active somewhere in one or more dataflows on the NiFi canvas. This leaves 15% of the disk space to be used for archived content claims.

Example 2:

Here you can see that the amount of Content Claims still active somewhere within your NiFi flow has exceeded 50% disk usage in the content repository. As such, you can see there are no archived content claims. The content repository archive settings have no bearing on how much of the content repository disk will be used by active FlowFiles in your dataflow(s). As such, it is possible for your content repository to still fill to 100% disk usage.

*** This is the exact reason why, as a best practice, you should avoid co-locating your content repository with any of the other NiFi repositories. It should be isolated to a disk(s) that will not affect other applications or the OS should it fill to 100%.
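The arithmetic behind the two examples above can be written as a one-line calculation. The function name `archive_headroom_percent` is made up for this illustration, and it assumes active content and the archive share the same disk.

```python
def archive_headroom_percent(active_percent, max_usage_percent=50.0):
    """Percent of the disk left for archived claims before purging starts.

    Active content is never purged, so the headroom simply drops to zero
    once active content alone reaches the configured max usage percentage.
    """
    return max(0.0, max_usage_percent - active_percent)


example_1 = archive_headroom_percent(35.0)  # 35% active content, as in Example 1
example_2 = archive_headroom_percent(60.0)  # active content already above 50%
```

With 35% of the disk held by active content there is room for 15% of archive; once active content passes 50% the archive is kept empty, yet active content can keep growing.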

What is a Content Claim?

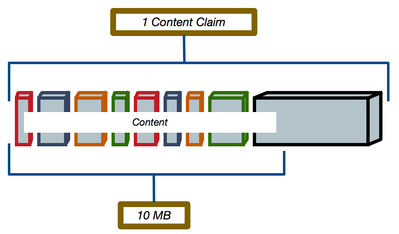

I have mentioned "Content Claim" throughout this article. Understanding what a content claim is will help you understand your disk usage. NiFi stores content in the content repository inside claims. A single claim can contain the content from one to many FlowFiles. The property that governs how a content claim is built is found in the nifi.properties file. The default configuration value is shown below:

nifi.content.claim.max.appendable.size=50 KB

The purpose of content claims is to make the most efficient use of disk storage. This is especially true when dealing with many very small files.

The configured max appendable size tells NiFi at what point it should stop appending additional content to an existing content claim and start a new claim. It does not mean all content ingested by NiFi must be smaller than 50 KB. It also does not mean that every content claim will be at least 50 KB in size.

Example 1:

Here you can see we have a single content claim that contains both large and small pieces of content. This example assumes a max appendable size of 10 MB rather than the 50 KB shown above. The overall size has exceeded the 10 MB max appendable size because, at the time NiFi started streaming that final piece of content into this claim, the size was still below 10 MB.

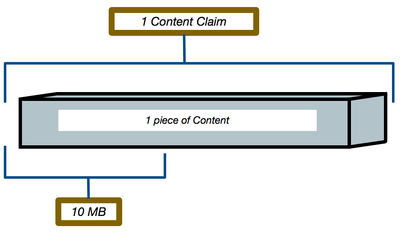

Example 2:

Here we can see we have a content claim that contains only one piece of content. This is because once the content was written to this claim, the claim exceeded the configured max appendable size. If your dataflow(s) deal with nothing but files larger than the configured max appendable size (10 MB in this example), all your content claims will contain only one piece of content.
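The behavior in the two examples above can be approximated with a short sketch. It models only the rule described in this article (keep appending while the claim is still below the max appendable size when a new piece of content starts streaming). The function `assign_to_claims` is hypothetical, and real NiFi has other factors this ignores, such as concurrent writers.

```python
def assign_to_claims(content_sizes, max_appendable_size):
    """Group pieces of content into claims, in arrival order.

    The size check happens BEFORE a piece of content is written, so a claim
    can end up larger than max_appendable_size.
    """
    claims = []
    current = None
    current_size = 0
    for size in content_sizes:
        # Start a new claim if there is none yet or the current one is "full".
        if current is None or current_size >= max_appendable_size:
            current = []
            current_size = 0
            claims.append(current)
        current.append(size)
        current_size += size
    return claims


# Sizes in MB with a 10 MB max appendable size, as in the examples above.
mixed = assign_to_claims([1, 2, 3, 20, 1], max_appendable_size=10)
large_only = assign_to_claims([12, 15], max_appendable_size=10)
```

In `mixed`, the 20 MB piece lands in the same claim as the three small pieces because the claim held only 6 MB when that piece started streaming. In `large_only`, every piece gets its own claim.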

So when is a "Content Claim" moved to archive?

A content claim cannot be moved into the content repository archive until none of the pieces of content in that claim are tied to a FlowFile that is active anywhere within any dataflow on the NiFi canvas. What this means is that the reported cumulative size of all the FlowFiles in your dataflows will likely never match the actual disk usage in your content repository.

This cumulative size is not the size of the content claims in which the queued FlowFiles reside, but rather just the reported cumulative size of the individual pieces of content. It is for this reason that it is possible for a NiFi content repository to hit 100% disk usage even if the NiFi UI reports a total cumulative queued data size of less than that.

Take Example 1 from above. Assuming the last piece of content written to that claim was 100 GB in size, all it would take is for one of those very small pieces of content in that same claim to still exist queued in a dataflow to prevent this claim from being archived. As long as a FlowFile still points at a content claim, that entire content claim cannot be purged.

When fine tuning your NiFi default configurations, you must always take into consideration your intended data. If you are working with nothing but very small or very large data, leave the default values alone. If you are working with data that ranges greatly from very small to very large, you may want to decrease the max appendable size and/or max flow file settings. By doing so you decrease the number of FlowFiles that make it into a single claim. This in turn reduces the likelihood of a single piece of data keeping large amounts of data still active in your content repository.
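The gap between the reported queue size and the actual disk usage can be illustrated with a small model. The data layout (a dict of claim id to content sizes) and the function `summarize` are assumptions for this sketch and do not reflect NiFi's internal structures.

```python
def summarize(claims, active_content_ids):
    """Compare the reported queued size with the bytes pinned on disk.

    claims: dict mapping a claim id to {content id: size}
    active_content_ids: content ids still referenced by a queued FlowFile
    Returns (reported_size, held_on_disk, archivable_claim_ids).
    """
    reported = 0      # sum of the individual active pieces of content
    held_on_disk = 0  # full size of every claim that cannot be archived yet
    archivable = []
    for claim_id, contents in claims.items():
        active = [cid for cid in contents if cid in active_content_ids]
        if active:
            reported += sum(contents[cid] for cid in active)
            held_on_disk += sum(contents.values())
        else:
            # No FlowFile points at this claim, so it may move to archive.
            archivable.append(claim_id)
    return reported, held_on_disk, archivable


# Sizes in MB: one tiny piece of content keeps a very large claim on disk.
claims = {
    "claim-1": {"a": 1, "b": 2, "big": 100_000},
    "claim-2": {"c": 5},
}
reported, held, archivable = summarize(claims, active_content_ids={"a"})
```

Here only 1 MB is reported as queued, while 100,003 MB stays in the content repository because the whole of `claim-1` is still referenced.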

Created on 06-24-2017 12:29 AM

@Matt Clarke - Very nicely explained! 🙂

Created on 11-14-2017 12:04 PM

Hi Matt,

This was indeed a very informative post. I have a question:

If "nifi.content.repository.archive.max.retention.period=12 hours", is there any need to clean up the content repository manually? Will doing so improve NiFi UI performance in terms of processing speed?

Thanks

Created on 10-31-2018 01:07 PM

That specific property has to do with how long NiFi will potentially hold on to content claims which have been successfully moved to archive. It works in conjunction with the "nifi.content.repository.archive.max.usage.percentage=50%" property.

Neither of these properties will result in the clean-up/removal of any content claims that have not been moved to archive. If the disk usage of the content repository disk has exceeded 50%, the "archive" sub-directories in the content repo should all be empty.

This does not mean that the active content claims will not continue to increase in number until the content repo disk is 100% full.

Nothing about how the content repository works will have any impact on NiFi UI performance. A full content repository will, however, impact the overall performance of your NiFi dataflows.

Hope this answers your question.

Created on 07-18-2024 10:32 PM

And what should be done in this case?

"Nothing about how the content repository works will have any impact on NiFi UI performance. A full content repository will, however, impact the overall performance of your NiFi dataflows."

I ask because when you change nifi.content.repository.archive.enabled to false in a cluster of 3 nodes, for example, NiFi only brings up 2 nodes. It is always the first node that starts and the second that remain visible in the cluster, while the third is left running on its own as a 1/1 cluster.