CDH namenode java heap bigger than it should be

Created on 04-13-2021 08:30 AM - edited 09-16-2022 07:41 AM

I am running CDH 6.3.2.

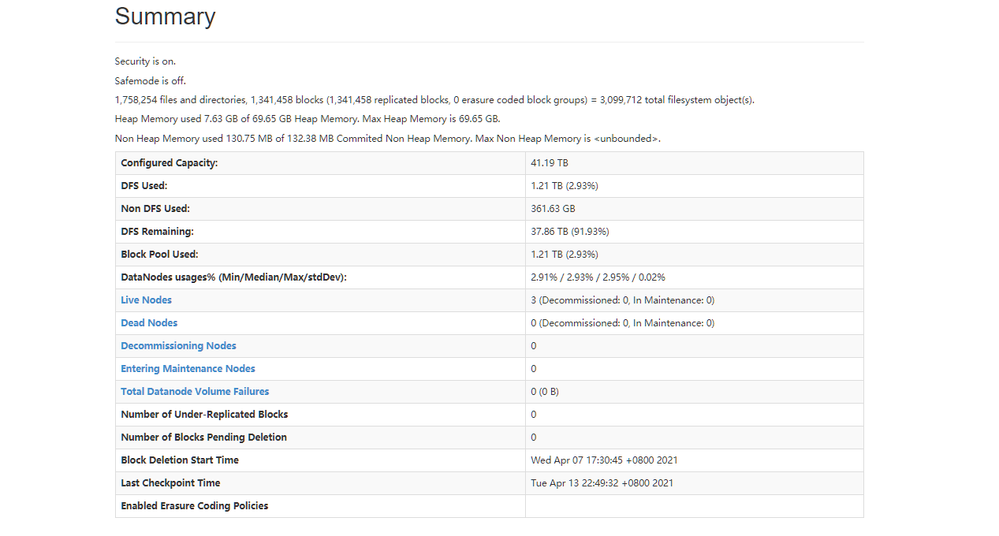

I set the NameNode Java heap size to 70 GB, of which almost 9 GB is in use. The NameNode reports the following block information:

1,757,092 files and directories, 1,340,670 blocks (1,340,670 replicated blocks, 0 erasure coded block groups) = 3,097,762 total filesystem object(s).

Heap Memory used 8.74 GB of 69.65 GB Heap Memory. Max Heap Memory is 69.65 GB.

Non Heap Memory used 130.75 MB of 132.38 MB Commited Non Heap Memory. Max Non Heap Memory is <unbounded>.

At first, the heap memory used fluctuated between 1 GB and 2 GB, but after a few days it fluctuates between 6 GB and 9 GB. I think it should be 3 GB at most.

Can anyone help me to figure it out? Thanks very much.

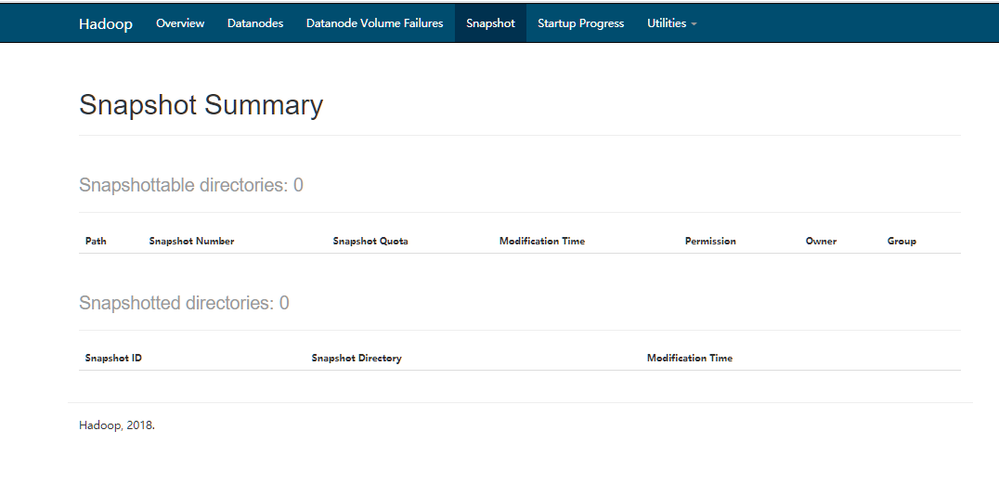

P.S. I didn't find any snapshot objects.

Created 04-20-2021 11:43 AM

Hi @ROACH

As a rule of thumb, we recommend 1 GB of heap per 1 million blocks.

Also, how much memory you actually need depends on your workload, especially on the number of files, directories, and blocks generated in each namespace. The type of hardware (VM, bare metal, etc.) is also taken into account.

https://docs.cloudera.com/documentation/enterprise/6/6.3/topics/admin_nn_memory_config.html

Also have a look at the examples of estimating NameNode heap memory.
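As a rough illustration of the 1 GB per million blocks rule of thumb, here is a minimal sketch applied to the counts from the original post. The function name `estimate_heap_gb` is made up for this example, and the result is only a starting estimate, not a sizing guarantee.

```python
def estimate_heap_gb(count: int, gb_per_million: float = 1.0) -> float:
    """Rule-of-thumb heap estimate: gb_per_million GB of heap per 1,000,000 items."""
    return count / 1_000_000 * gb_per_million


# Counts taken from the NameNode summary in the original post.
blocks = 1_340_670
filesystem_objects = 3_097_762

print(f"{estimate_heap_gb(blocks):.2f} GB when counting blocks only")
print(f"{estimate_heap_gb(filesystem_objects):.2f} GB when counting every filesystem object")
```

Counting blocks only gives about 1.34 GB, and counting all filesystem objects gives about 3.10 GB, which is close to the "3 GB at most" expectation in the question.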

If write-intensive operations or snapshot operations are performed on the cluster often, then 6-9 GB sounds fine. I would suggest grepping for GC in the NameNode logs; if you see long pauses, say more than 3-5 seconds, that is a good starting point for increasing the heap size.

Does that answer your question? Do let us know.

Regards,
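The log check above could be scripted roughly as follows. This sketch assumes the NameNode log contains Hadoop `JvmPauseMonitor` warnings of the form "pause of approximately NNNNms"; the sample lines are made up for illustration, so verify the pattern against your own log before relying on it.

```python
import re
from typing import Iterable, List

# Assumed message fragment written by Hadoop's JvmPauseMonitor.
PAUSE_RE = re.compile(r"pause of approximately (\d+)ms")


def long_pauses(lines: Iterable[str], threshold_ms: int = 3000) -> List[int]:
    """Return the pause durations (in ms) that exceed threshold_ms."""
    pauses = []
    for line in lines:
        match = PAUSE_RE.search(line)
        if match and int(match.group(1)) > threshold_ms:
            pauses.append(int(match.group(1)))
    return pauses


# Hypothetical log lines, for illustration only.
sample = [
    "2021-04-20 10:00:01,123 WARN org.apache.hadoop.util.JvmPauseMonitor: "
    "Detected pause in JVM or host machine (eg GC): pause of approximately 4521ms",
    "2021-04-20 10:05:07,456 INFO org.apache.hadoop.util.JvmPauseMonitor: "
    "Detected pause in JVM or host machine (eg GC): pause of approximately 812ms",
    "2021-04-20 10:06:00,000 INFO some.other.Logger: unrelated message",
]

print(long_pauses(sample))
```

To scan a real log, pass an open file object instead of the sample list, since the function accepts any iterable of lines.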

Created 04-22-2021 07:40 PM

Dear @kingpin

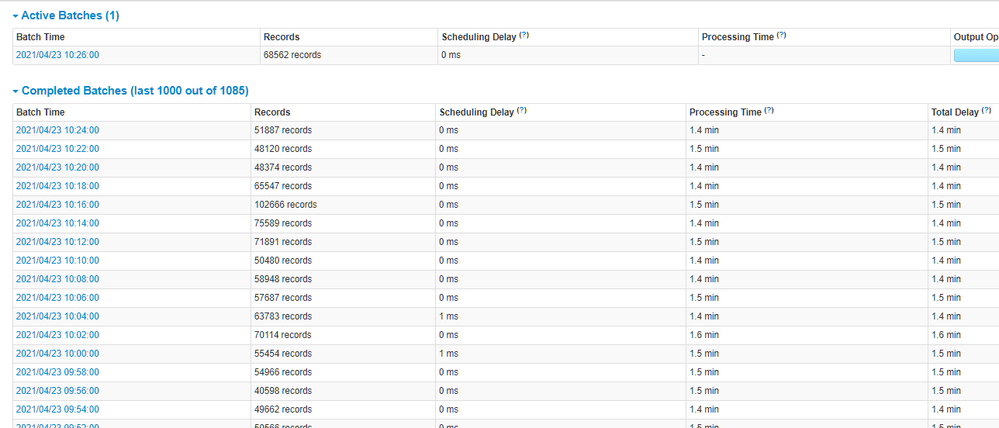

Thanks for your reply. I do have a Spark Streaming process that stores files from Kafka to HDFS every two minutes. You can refer to the screenshot below for the data volume.

The Java heap usage is increasing every day. I think it will exceed 70 GB within a month, even though the block count is still below 2 million.

Is there any way to clear the Java heap cache?

The Java heap usage goes back to normal after a restart.
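One way to track the heap growth described above is to read the used and max heap figures from the NameNode's JMX output. This is a minimal sketch that assumes the JSON comes from a query such as `http://<namenode-host>:9870/jmx?qry=java.lang:type=Memory` (the host and port are placeholders and may differ on your cluster). The sample payload is made up and uses round numbers.

```python
import json
from typing import Tuple

BYTES_PER_GB = 1024 ** 3


def heap_usage_gb(jmx_json: str) -> Tuple[float, float]:
    """Return (used_gb, max_gb) from a java.lang:type=Memory JMX JSON payload."""
    beans = json.loads(jmx_json)["beans"]
    usage = beans[0]["HeapMemoryUsage"]
    return usage["used"] / BYTES_PER_GB, usage["max"] / BYTES_PER_GB


# Hypothetical payload with round numbers, for illustration only.
sample = json.dumps({
    "beans": [{
        "name": "java.lang:type=Memory",
        "HeapMemoryUsage": {
            "committed": 70 * BYTES_PER_GB,
            "init": 70 * BYTES_PER_GB,
            "max": 70 * BYTES_PER_GB,
            "used": 9 * BYTES_PER_GB,
        },
    }]
})

used_gb, max_gb = heap_usage_gb(sample)
print(f"heap used {used_gb:.2f} GB of {max_gb:.2f} GB")
```

Sampling this at regular intervals would show whether the used figure keeps climbing or drops back after garbage collections.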

Spark Streaming job consuming from Kafka: