Support Questions

Can't use DataNodes if the data directory is on a separate disk

Labels: Cloudera Manager, HDFS

Created 02-15-2017 07:03 AM

I've just come across this problem, or maybe even a bug. I have a Cloudera Manager-managed HA cluster. If I set the DataNode data directory somewhere on the same disk that the DataNode's OS runs on, everything works as expected.

However, if I mount my six 2 TB disks as /data/{01,02,03,04,05,06} and set the DataNode Data Directory (dfs.data.dir, dfs.datanode.data.dir) in Cloudera Manager to /data/01/dfs/dn, /data/02/dfs/dn, and so on through six, I get this error when I try to copy a file to HDFS:

17/02/15 15:27:11 WARN hdfs.DFSClient: DataStreamer Exception

org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /tmp/b._COPYING_ could only be replicated to 0 nodes instead of minReplication (=1). There are 3 datanode(s) running and no node(s) are excluded in this operation.

at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget4NewBlock(BlockManager.java:1622)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:3351)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:683)

at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.addBlock(AuthorizationProviderProxyClientProtocol.java:214)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:495)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2216)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2212)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1796)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2210)

at org.apache.hadoop.ipc.Client.call(Client.java:1472)

at org.apache.hadoop.ipc.Client.call(Client.java:1409)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:230)

at com.sun.proxy.$Proxy16.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.addBlock(ClientNamenodeProtocolTranslatorPB.java:413)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:256)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:104)

at com.sun.proxy.$Proxy17.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.locateFollowingBlock(DFSOutputStream.java:1812)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.nextBlockOutputStream(DFSOutputStream.java:1608)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:772)

put: File /tmp/b._COPYING_ could only be replicated to 0 nodes instead of minReplication (=1). There are 3 datanode(s) running and no node(s) are excluded in this operation.

I see that files and directories are created in /data/0x/dfs/dn, so the process is able to write inside them. Any help? Thanks!

Created on 02-16-2017 10:23 AM - edited 02-16-2017 10:34 AM

Hello,

Based on the error and the steps you outlined, it appears that you have reconfigured the data directories used by the HDFS services.

First and foremost, for this to work you need to ensure that these directories are writable and accessible by the HDFS DataNode, NameNode, and JournalNode (DN/NN/JN) processes.
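
One way to confirm this requirement on each host is to check every configured data directory from the account the HDFS roles run as. Below is a minimal Python sketch, assuming the roles run as the hdfs user (common, but an assumption here) and using the hypothetical list /data/01/dfs/dn through /data/06/dfs/dn. It only tests existence and write/traverse permission for the current user; it does not check ownership, and running it as root will report everything as writable.

```python
import os


def check_data_dirs(paths):
    """Map each directory to a list of problems found (an empty list means OK).

    Permissions are evaluated for the user running this script, so run it
    as the account the HDFS roles use (assumed to be 'hdfs'), for example
    with sudo -u hdfs.
    """
    report = {}
    for path in paths:
        problems = []
        if not os.path.exists(path):
            problems.append("does not exist")
        elif not os.path.isdir(path):
            problems.append("not a directory")
        elif not os.access(path, os.W_OK | os.X_OK):
            # W_OK: can create block files; X_OK: can traverse the directory.
            problems.append("not writable/traversable by this user")
        report[path] = problems
    return report


if __name__ == "__main__":
    # Hypothetical list; replace with your actual dfs.datanode.data.dir entries.
    data_dirs = ["/data/%02d/dfs/dn" % i for i in range(1, 7)]
    for path, problems in check_data_dirs(data_dirs).items():
        print(path, "OK" if not problems else "; ".join(problems))
```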

In addition, you generally cannot arbitrarily change the data storage location without taking additional steps. For example, if you started the services with everything pointed at storage on the primary disk, then that is where the formatted NameNode name directories and DataNode data directories live. To do what you are attempting, you would need to carry over the NameNode metadata and any existing DataNode block data from the previous configuration. Alternatively, if there is no data to lose on the cluster, you can reformat HDFS and reinitialize all of the services.

https://www.cloudera.com/documentation/enterprise/latest/topics/cm_mc_hdfs_metadata_backup.html

https://www.cloudera.com/documentation/enterprise/latest/topics/admin_dn_storage_directories.html

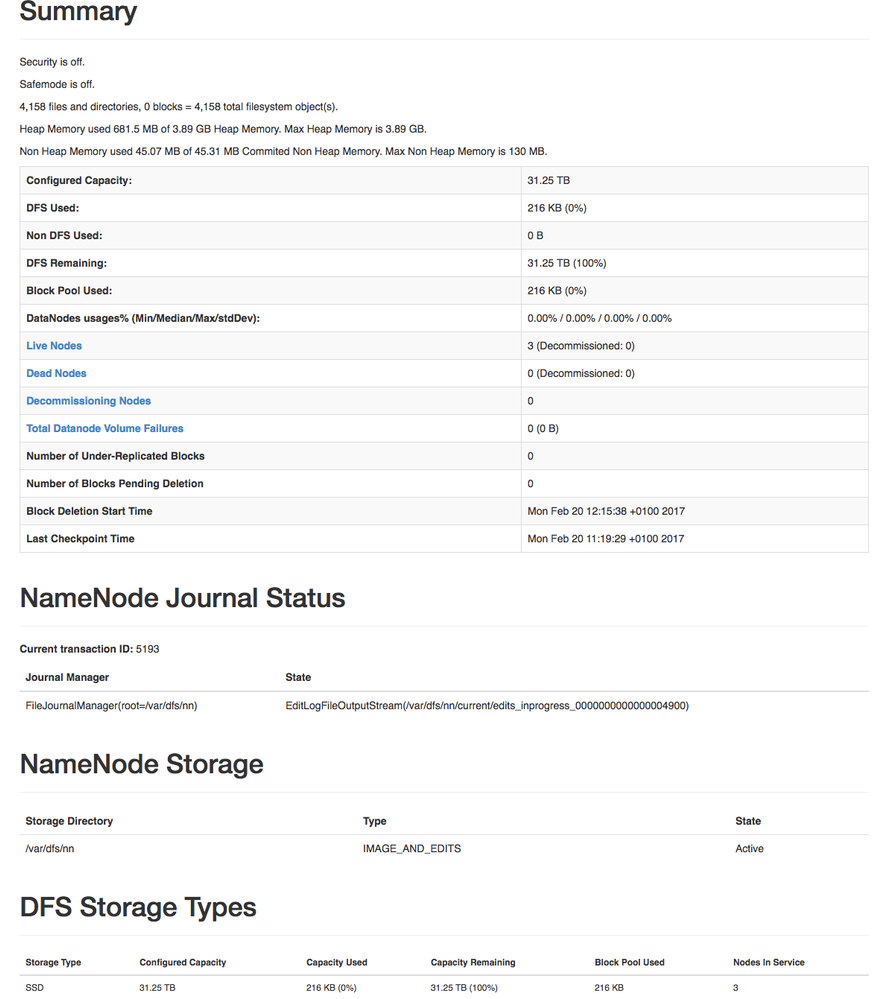

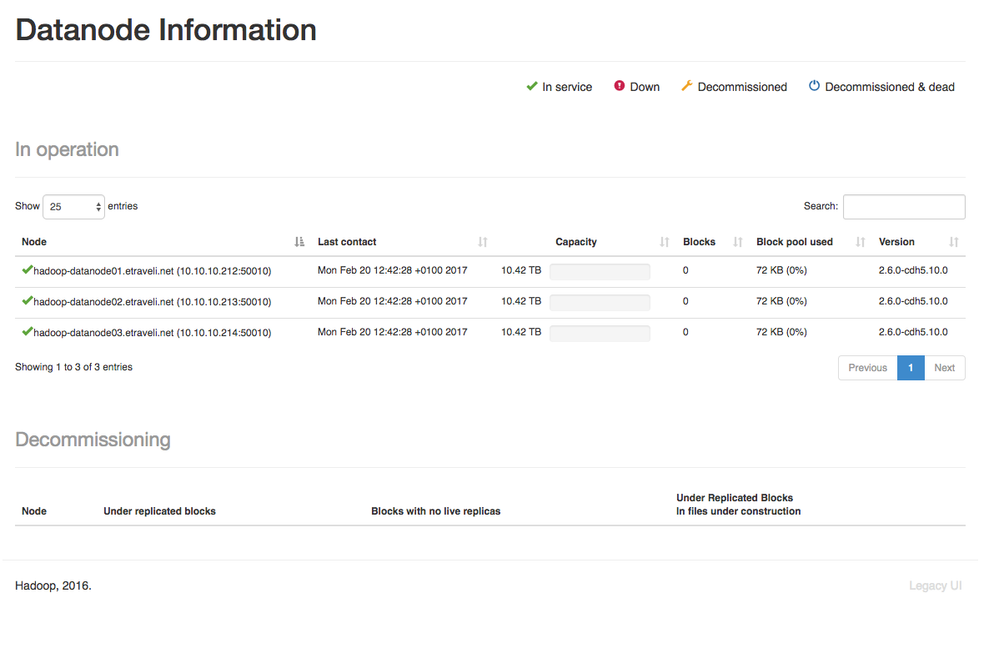

The stack trace appears to indicate that none of the DataNodes are available to accept the data, or that there is a problem on the NameNode. You can review the state of HDFS in the NameNode web UIs. You can find these easily by logging into Cloudera Manager, selecting HDFS, and then using the available top-level links or quick links.
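
The liveness and capacity figures shown in the NameNode web UI can also be read from the NameNode's JMX servlet, or from the command line with hdfs dfsadmin -report. The Python sketch below assumes a Hadoop 2.x NameNode serving HTTP on the default port 50070 (check dfs.namenode.http-address on your cluster) and reads the FSNamesystemState bean; the host name is hypothetical and the verdict strings are illustrative. Live DataNodes reporting zero remaining capacity is only one pattern worth ruling out, not a diagnosis.

```python
import json
import urllib.request

# Assumptions: Hadoop 2.x NameNode web UI on the default port 50070 and the
# standard /jmx servlet; "nn1.example.com" below is a hypothetical host name.
JMX_QUERY = "/jmx?qry=Hadoop:service=NameNode,name=FSNamesystemState"


def fetch_fsnamesystem_state(base_url):
    """Fetch the FSNamesystemState bean, e.g. base_url='http://nn1.example.com:50070'."""
    with urllib.request.urlopen(base_url + JMX_QUERY, timeout=10) as resp:
        return json.loads(resp.read().decode("utf-8"))


def summarize(jmx):
    """Extract the live DataNode count and remaining capacity from the JMX JSON."""
    beans = jmx.get("beans") or [{}]
    bean = beans[0]
    live = bean.get("NumLiveDataNodes", 0)
    remaining = bean.get("CapacityRemaining", 0)
    if live == 0:
        verdict = "no live DataNodes registered"
    elif remaining <= 0:
        verdict = "DataNodes are live but report no remaining capacity"
    else:
        verdict = "DataNodes are live and report free capacity"
    return {"live": live, "remaining_bytes": remaining, "verdict": verdict}
```

For example, summarize(fetch_fsnamesystem_state("http://nn1.example.com:50070")). In an HA pair, query the active NameNode.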

Customer Operations Engineer | Security SME | Cloudera, Inc.

Created 02-20-2017 03:00 AM

I just noticed that I'm using CentOS 7.3, but according to the documentation only versions up to CentOS 7.1 are supported. Could this be the problem?

Created on 02-20-2017 03:32 AM - edited 02-20-2017 07:29 AM

I've reinstalled the whole cluster. On the first run, the step "Installing Oozie ShareLib in HDFS" fails with "could only be replicated to 0 nodes instead of minReplication (=1). There are 3 datanode(s) running and no node(s) are excluded in this operation." If I try to copy any non-empty file to HDFS, I get the same error.

SELinux and the firewalls are disabled. I put IP-to-FQDN mappings in the /etc/hosts file on each node. IPv6 is also disabled. Each disk also has almost 2 TB of free space.
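
Since name resolution here relies on hand-maintained /etc/hosts files, two things worth ruling out are drift between the nodes' copies and a host's own FQDN mapped to a loopback address, which is a commonly cited cause of cluster communication problems. Below is a minimal Python sketch, assuming you have collected each node's /etc/hosts contents into a dictionary keyed by node name; all host names and addresses in the test data are hypothetical.

```python
def parse_hosts(text):
    """Map each name/alias in /etc/hosts-style text to the first IP listed for it."""
    mapping = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            mapping.setdefault(name, ip)
    return mapping


def find_inconsistencies(hosts_by_node):
    """Return {name: {node: ip}} for names that resolve differently across nodes.

    A name missing from one node's file shows up with the value None.
    """
    parsed = {node: parse_hosts(text) for node, text in hosts_by_node.items()}
    all_names = set().union(*(m.keys() for m in parsed.values()))
    problems = {}
    for name in sorted(all_names):
        ips = {node: m.get(name) for node, m in parsed.items()}
        if len(set(ips.values())) > 1:
            problems[name] = ips
    return problems


def loopback_names(text):
    """Non-localhost names mapped to a loopback address (e.g. a host's own FQDN)."""
    return sorted(
        name
        for name, ip in parse_hosts(text).items()
        if (ip.startswith("127.") or ip == "::1") and not name.startswith("localhost")
    )
```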

Each DataNode has six directly attached disks, which are formatted with XFS and mounted on /data/01, /data/02, and so on. I also specified the DataNode data directories as /data/01/dfs/dn, /data/02/dfs/dn, and so on. Hadoop creates files inside those directories, but writing to HDFS fails with the error above.
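
In hdfs-site.xml, dfs.datanode.data.dir takes a comma-separated list of directories. The Python sketch below is a hypothetical helper (not a Cloudera Manager feature) that builds that list for the six-disk /data/01 through /data/06 layout described above and flags any mount point that is not actually a separate filesystem, in which case the data directory would quietly land on the OS disk.

```python
import os


def data_dir_value(mount_points, subdir="dfs/dn"):
    """Build the comma-separated dfs.datanode.data.dir value for hdfs-site.xml."""
    return ",".join(os.path.join(m, subdir) for m in mount_points)


def not_mounted(mount_points):
    """Return mount points that are not separate filesystems.

    A data directory under such a path would silently live on the parent
    (typically the OS) filesystem instead of its own disk.
    """
    return [m for m in mount_points if not os.path.ismount(m)]


if __name__ == "__main__":
    mounts = ["/data/%02d" % i for i in range(1, 7)]  # /data/01 ... /data/06
    print("dfs.datanode.data.dir =", data_dir_value(mounts))
    print("not mounted:", not_mounted(mounts))
```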

The HDFS canary check also fails with the same error. What could be wrong?

However, the DataNode UI is empty; I don't know whether it is supposed to show something more.

This is what I see in the logs after enabling debugging (as you can see, there is no clear reason why Hadoop won't use my storage):

2017-02-20 16:24:43,986 INFO org.apache.hadoop.hdfs.server.blockmanagement.CacheReplicationMonitor: Rescanning after 30001 milliseconds

2017-02-20 16:24:43,988 INFO org.apache.hadoop.hdfs.server.blockmanagement.CacheReplicationMonitor: Scanned 0 directive(s) and 0 block(s) in 3 millisecond(s).

2017-02-20 16:25:02,683 WARN org.apache.hadoop.hdfs.server.blockmanagement.BlockPlacementPolicy: Failed to place enough replicas, still in need of 1 to reach 1 (unavailableStorages=[], storagePolicy=BlockStoragePolicy{HOT:7, storageTypes=[DISK], creationFallbacks=[], replicationFallbacks=[ARCHIVE]}, newBlock=true) For more information, please enable DEBUG log level on org.apache.hadoop.hdfs.server.blockmanagement.BlockPlacementPolicy and org.apache.hadoop.net.NetworkTopology

2017-02-20 16:25:02,683 WARN org.apache.hadoop.hdfs.protocol.BlockStoragePolicy: Failed to place enough replicas: expected size is 1 but only 0 storage types can be selected (replication=1, selected=[], unavailable=[DISK], removed=[DISK], policy=BlockStoragePolicy{HOT:7, storageTypes=[DISK], creationFallbacks=[], replicationFallbacks=[ARCHIVE]})

2017-02-20 16:25:02,683 WARN org.apache.hadoop.hdfs.server.blockmanagement.BlockPlacementPolicy: Failed to place enough replicas, still in need of 1 to reach 1 (unavailableStorages=[DISK], storagePolicy=BlockStoragePolicy{HOT:7, storageTypes=[DISK], creationFallbacks=[], replicationFallbacks=[ARCHIVE]}, newBlock=true) All required storage types are unavailable: unavailableStorages=[DISK], storagePolicy=BlockStoragePolicy{HOT:7, storageTypes=[DISK], creationFallbacks=[], replicationFallbacks=[ARCHIVE]}

2017-02-20 16:25:02,683 WARN org.apache.hadoop.security.UserGroupInformation: PriviledgedActionException as:hdfs (auth:SIMPLE) cause:java.io.IOException: File /tmp/.cloudera_health_monitoring_canary_files/.canary_file_2017_02_20-16_25_02 could only be replicated to 0 nodes instead of minReplication (=1). There are 3 datanode(s) running and no node(s) are excluded in this operation.

2017-02-20 16:25:02,683 INFO org.apache.hadoop.ipc.Server: IPC Server handler 20 on 8020, call org.apache.hadoop.hdfs.protocol.ClientProtocol.addBlock from 10.1.40.120:55896 Call#245 Retry#0

java.io.IOException: File /tmp/.cloudera_health_monitoring_canary_files/.canary_file_2017_02_20-16_25_02 could only be replicated to 0 nodes instead of minReplication (=1). There are 3 datanode(s) running and no node(s) are excluded in this operation.

at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget4NewBlock(BlockManager.java:1622)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:3351)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:683)

at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.addBlock(AuthorizationProviderProxyClientProtocol.java:214)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:495)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2216)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2212)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1796)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2210)

2017-02-20 16:25:13,986 INFO org.apache.hadoop.hdfs.server.blockmanagement.CacheReplicationMonitor: Rescanning after 30000 milliseconds

2017-02-20 16:25:13,987 INFO org.apache.hadoop.hdfs.server.blockmanagement.CacheReplicationMonitor: Scanned 0 directive(s) and 0 block(s) in 1 millisecond(s).

2017-02-20 16:25:15,047 INFO org.apache.hadoop.hdfs.server.namenode.FSEditLog: Number of transactions: 57 Total time for transactions(ms): 12 Number of transactions batched in Syncs: 4 Number of syncs: 53 SyncTimes(ms): 8
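
The WARN lines above carry the most specific detail: the placement policy ends up with unavailable=[DISK] and removed=[DISK], which suggests that the NameNode could not find any DataNode DISK storage it was willing to place the new block on, even though three DataNodes are running. When scanning a longer log, a small Python sketch like the following (a hypothetical helper that assumes the log format quoted above) can pull those bracketed storage lists out of each line.

```python
import re

# Bracketed storage-type lists in BlockPlacementPolicy / BlockStoragePolicy
# WARN lines, e.g. "unavailable=[DISK], removed=[DISK]".
_STORAGE_RE = re.compile(
    r"\b(unavailableStorages|unavailable|removed|selected)=\[([^\]]*)\]"
)


def storage_fields(line):
    """Return {field: [storage types]} using the first occurrence of each field."""
    fields = {}
    for key, body in _STORAGE_RE.findall(line):
        fields.setdefault(key, [s.strip() for s in body.split(",") if s.strip()])
    return fields


def disk_unavailable(lines):
    """True if any line reports DISK as an unavailable storage type."""
    for line in lines:
        f = storage_fields(line)
        if "DISK" in f.get("unavailable", []) or "DISK" in f.get("unavailableStorages", []):
            return True
    return False
```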

Created 02-21-2017 08:54 AM

Hello,

The DataNode pages usually show very little information, but the NameNode pages do not. The screenshot you provided seems to show that the DataNodes are reporting in to the NameNode.

There are two things I can think of here right at the moment.

1.) Make sure that nothing is restricting communication between the nodes in your cluster. When a write occurs, the first DataNode opens what is known as a write pipeline to the two other nodes it wants to write replicas to. If it cannot open this pipeline, replication may fail.

2.) I see PriviledgedActionException errors in the log. These can be related to failed Kerberos authentication, or they might also be the result of a denial by Sentry, though errors related to Sentry tend to appear differently in the logging. The SIMPLE mechanism referred to in the error log (auth:SIMPLE) would seem to indicate an attempt to perform simple authentication in a Kerberized environment. This can happen if Kerberos authentication fails or if the Kerberos mechanism is not selected for the component you are using.
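
Both suggestions can be checked quickly from the hosts themselves. The following minimal Python sketch tests plain TCP reachability of the DataNode data-transfer port (assumed here to be the Hadoop 2.x default, 50010; confirm dfs.datanode.address on your cluster) and reads the hadoop.security.authentication property from core-site.xml text, whose built-in default is simple. A successful TCP connect does not prove the write pipeline works; it only rules out a blocked or closed port.

```python
import socket
import xml.etree.ElementTree as ET

# Assumption: 50010 is the Hadoop 2.x / CDH 5 default DataNode data-transfer
# port (dfs.datanode.address); use the value configured on your cluster.
DEFAULT_DN_XFER_PORT = 50010


def can_connect(host, port=DEFAULT_DN_XFER_PORT, timeout=3.0):
    """Point 1: True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, name resolution failure, or timeout
        return False


def hadoop_auth_method(core_site_xml):
    """Point 2: hadoop.security.authentication from core-site.xml text.

    Hadoop's built-in default for this property is "simple".
    """
    root = ET.fromstring(core_site_xml)
    for prop in root.findall("property"):
        if (prop.findtext("name") or "").strip() == "hadoop.security.authentication":
            return (prop.findtext("value") or "simple").strip().lower()
    return "simple"
```

For example, run can_connect against each of the other DataNodes from every DataNode, and pass the contents of the client core-site.xml (usually under /etc/hadoop/conf on CDH hosts) to hadoop_auth_method. A result of simple on a cluster that is supposed to be Kerberized would match the auth:SIMPLE seen in the log.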

Customer Operations Engineer | Security SME | Cloudera, Inc.

Created 02-21-2017 11:13 AM

Thanks @lhebert for taking the time to answer.

I managed to fix it in the following way. During my initial setup I assigned roles to the different nodes myself, which led to the error I mentioned. I then uninstalled that setup and created a POC cluster with the one-click installer to see whether it would work, which it did. So I uninstalled the POC cluster and set up my initial cluster again, this time changing the assignment of just a few roles and leaving the rest of the services running on the same node (the default assignment). The cluster then came up properly, after which I manually moved the service roles around the way I wanted them.

So it seems that the custom role assignment I did broke something.