Cloudera Community: Support Questions

Cannot Create Hive Connection Pool.

Labels: Apache Hive, Apache NiFi

Created on 11-02-2016 04:40 PM - edited 08-18-2019 04:51 AM

Hi,

I am getting errors while trying to connect to Hive in our Kerberized Hadoop cluster. Here is what I am doing:

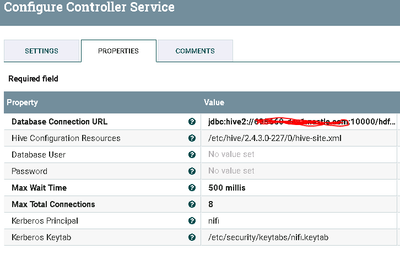

NiFi configuration properties (nifi.properties):

    # kerberos #
    nifi.kerberos.krb5.file=/etc/krb5.conf

    # kerberos service principal #
    nifi.kerberos.service.principal=nifi/ourserver@ourdomain
    nifi.kerberos.service.keytab.location=/etc/security/keytabs/nifi.keytab

After configuring the controller service, I see some warnings as soon as I enable it:

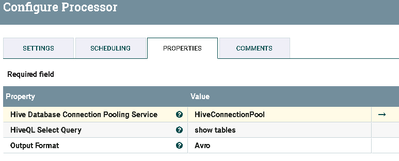

I also get these errors when I try to use the connection in a SelectHiveQL processor:

HiveConnectionPool[id=7c2b4a17-f772-1ea7-54c9-99cc4d8dea09] Error getting Hive connection

SelectHiveQL[id=d3b62ee6-0157-1000-b66f-364970fcfa98] Unable to execute HiveQL select query show tables due to org.apache.nifi.processor.exception.ProcessException: org.apache.commons.dbcp.SQLNestedException: Cannot create PoolableConnectionFactory (Could not open client transport with JDBC Uri: jdbc:hive2://myserver:10000/hdf_moat;principal=hive/myserver@mydomain: GSS initiate failed). No FlowFile to route to failure: org.apache.nifi.processor.exception.ProcessException: org.apache.commons.dbcp.SQLNestedException: Cannot create PoolableConnectionFactory (Could not open client transport with JDBC Uri: jdbc:hive2://myserver:10000/hdf_moat;principal=hive/mydomain: GSS initiate failed)

Regards,

Created 11-02-2016 04:45 PM

The Hive processors share some code with the Hadoop processors (for Kerberos handling, etc.), so they expect "hadoop.security.authentication" to be set to "kerberos" in your configuration file(s) (e.g., core-site.xml or hive-site.xml).
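To check whether a given file actually sets this property, a small script can help. Below is a minimal Python sketch (standard library only) that assumes the usual Hadoop layout of `<configuration>` containing `<property>` elements, each with a `<name>` and a `<value>`. `get_property` and `uses_kerberos` are hypothetical helper names, not part of NiFi or Hadoop. The case-insensitive comparison is an assumption about how Hadoop reads the value.

```python
import xml.etree.ElementTree as ET
from typing import Optional


def get_property(xml_text: str, name: str) -> Optional[str]:
    """Return the value of `name` from Hadoop-style XML config text, or None if absent."""
    root = ET.fromstring(xml_text)
    value = None
    # Walk every <property> element; a later duplicate overrides an earlier one.
    for prop in root.iter("property"):
        if (prop.findtext("name") or "").strip() == name:
            value = (prop.findtext("value") or "").strip()
    return value


def uses_kerberos(xml_text: str) -> bool:
    """True if hadoop.security.authentication is 'kerberos' (compared case-insensitively, an assumption)."""
    value = get_property(xml_text, "hadoop.security.authentication")
    return value is not None and value.lower() == "kerberos"
```

A file that only sets `hive.server2.authentication` makes `uses_kerberos` return `False`, because that is a different property.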

Created on 11-02-2016 05:12 PM - edited 08-18-2019 04:51 AM

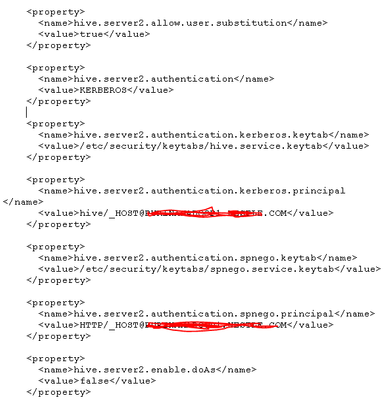

It's set to Kerberos in both of those files. Here are some properties from hive-site.xml. Are there any other files I need to check?

Created 11-02-2016 05:34 PM

In your snippet above, the property set to "KERBEROS" is "hive.server2.authentication", not "hadoop.security.authentication". If "hadoop.security.authentication" is set to "kerberos" in your core-site.xml, make sure the path to your core-site.xml is in the Hive Configuration Resources property. That property accepts a comma-separated list of files, so you can include your hive-site.xml (as you've done in the screenshot above) as well as the core-site.xml file that sets the property.
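Because Hive Configuration Resources is a comma-separated list, a missing entry or a mistyped path is easy to overlook. Here is a minimal Python sketch that splits such a value and reports whether both core-site.xml and hive-site.xml are listed and whether each path exists on the machine it runs on (run it on the NiFi host). `check_config_resources` is a hypothetical helper; it only inspects file names and existence, not file contents.

```python
from pathlib import Path
from typing import List


def check_config_resources(value: str) -> List[str]:
    """Return a list of problems found in a comma-separated list of config file paths."""
    # Split on commas, dropping surrounding whitespace and empty entries.
    paths = [p.strip() for p in value.split(",") if p.strip()]
    names = {Path(p).name for p in paths}
    problems = []
    # core-site.xml carries hadoop.security.authentication; hive-site.xml carries the Hive settings.
    for required in ("core-site.xml", "hive-site.xml"):
        if required not in names:
            problems.append(f"{required} is not listed")
    # Each listed path must exist as a regular file on this machine.
    for p in paths:
        if not Path(p).is_file():
            problems.append(f"{p} does not exist")
    return problems
```

For example, `check_config_resources("/etc/hive/conf/hive-site.xml,/etc/hadoop/conf/core-site.xml")` (example paths only) returns an empty list when both files are listed and present.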

Created on 11-02-2016 07:10 PM - edited 08-18-2019 04:51 AM

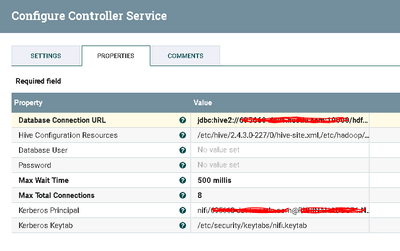

Adding core-site.xml eliminated the warning, but I also had to change the Kerberos principal to its fully qualified name; after that it started working. Thanks for the help!
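As a rough sanity check before entering a principal, the Python sketch below splits a principal of the form `primary/instance@REALM` and flags a principal with no realm, or a host-based principal whose instance part contains no dot. `Principal`, `parse_principal` and `looks_fully_qualified` are hypothetical helpers, and the dot test is only a heuristic: it does not contact the KDC or check the keytab.

```python
from typing import NamedTuple, Optional


class Principal(NamedTuple):
    primary: str
    instance: Optional[str]
    realm: Optional[str]


def parse_principal(text: str) -> Principal:
    """Split 'primary/instance@REALM'; missing parts come back as None."""
    name, _, realm = text.strip().partition("@")
    primary, _, instance = name.partition("/")
    return Principal(primary, instance or None, realm or None)


def looks_fully_qualified(text: str) -> bool:
    """Heuristic: require a realm and, for host-based principals, a dotted host name."""
    p = parse_principal(text)
    if p.realm is None:
        return False
    # A service principal's instance is normally the host's FQDN, e.g. host.example.com.
    return p.instance is None or "." in p.instance
```

For example, `looks_fully_qualified("nifi/ourserver@ourdomain")` returns `False`, while `looks_fully_qualified("nifi/ourserver.example.com@EXAMPLE.COM")` (a made-up host and realm) returns `True`.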

Hi All,

I am still facing the issue after adding the property below to both hive-site.xml and core-site.xml:

    <property>
      <name>hadoop.security.authentication</name>
      <value>kerberos</value>
    </property>

I am getting the error below:

org.apache.commons.dbcp.SQLNestedException: Cannot create JDBC driver of class 'org.apache.hive.jdbc.HiveDriver' for connect URL

jdbc:hive2://ux329tas101.ux.hostname.net:10000/default;principal=<principal name>;ssl=true

Could you please help me with this?

Regards,

Swadesh Mondal