Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: HBase Master lease recovery takes more than 16...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

HBase Master lease recovery takes more than 16mins

- Labels:

-

Apache HBase

Created 09-13-2023 09:40 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello all,

I am facing below issue, Can you pls help how to address -

I have cluster 16 datanodes in cluster divided into 2 zones. Zone1 and zone2.

Cluster: HDP3.1 and HBase 2.0.2

Zone1 has datanode 192.168.226.185 and 192.168.27.91

Zone2 has datanode 192.168.27.95

Hbase master is active is running in zone 1 and Standby in zone2.

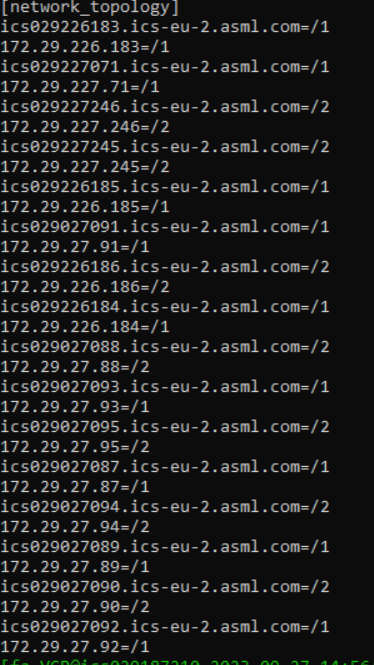

Our rack topology file is as below -

[network_topology]

ics168226185.ics-eu-2.example.com=/1

192.168.226.185=/1

ics168027091.ics-eu-2.example.com=/1

192.168.27.91=/1

ics168027095.ics-eu-2.example.com=/2

192.168.27.95=/2

We perform disaster recovery where we intentionally took network down for zone1. Now since zone1 active Hbase master was down, zone2 hbase master tries to take over. But it takes more than 16mins for hbase to come up. Below was the error seen in hbase master logs -

INFO org.apache.hadoop.hbase.util.FSHDFSUtils: Failed to recover lease, attempt=6 on file=hdfs://example-test-cluster/apps/hbase/data/MasterProcWALs/pv2-00000000000000010950.log after 965307ms

We tried to check why lease is being hold and we see below logs in namenode -

WARN org.apache.hadoop.hdfs.StateChange:DIR* NameSystem.internalReleaseLease:File/apps/hbase/data/MasterProcWALs/pv2-00000000000000010950.log has not been closed.Leaserecoveryisinprogress. RecoveryId=7619028 for block blk_1081354547_7617444

We tried to check datanode logs for the respective block id - blk_1081354547_7617444

WARN org.apache.hadoop.hdfs.server.protocol.InterDatanodeProtocol: Failed to recover block (block=BP-153760920-192.168.27.83-1654853018310:blk_1081354547_7617444, datanode=DatanodeInfoWithStorage[192.168.226.185:50010,null,null])

org.apache.hadoop.net.ConnectTimeoutException: Call From ics168027095.ics-eu-2.example.com/192.168.27.95 to ics168226185.ics-eu-2.example.com:8010 failed on socket timeout exception: org.apache.hadoop.net.ConnectTimeoutException: 20000 millis timeout while waiting for channel to be ready for connect. ch : java.nio.channels.SocketChannel[connection-pending remote=ics168226185.ics-eu-2.example.com/192.168.226.185:8010]; For more details see: http://wiki.apache.org/hadoop/SocketTimeout

It s seems that blk_1081354547_7617444 has 3 replicas which are stored on 192.168.27.95, 192.168.226.185 and 192.168.27.91

Why datanode from active zone 2 i.e 192.168.27.95 tries to reach Down datanode from zone1 192.168.226.185 to recover block?

Also from datanode logs we see block recovery status gets FINALIZED after 1 hour, they why hbase lease recovery takes 18~20 mins to recover?

I have seen pattern, whenever zone1 goes down and datanodes in zone1 has 2 copies of replicas unavailable then only this issue comes. Hbase lease recovery takes more than 16mins.

Can you guide how to debug/resolve this issue?

Created 09-26-2023 06:28 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@sagarshimpi , I agree with the first part of your analysis that the master was waiting for 16 minutes to recover a pv2 log.

But looking at this error:

org.apache.hadoop.net.ConnectTimeoutException: Call From ics168027095.ics-eu-2.example.com/192.168.27.95 to ics168226185.ics-eu-2.example.com:8010 failed on socket timeout exception: org.apache.hadoop.net.ConnectTimeoutException: 20000 millis timeout while waiting for channel to be ready for connect. ch : java.nio.channels.SocketChannel[connection-pending remote=ics168226185.ics-eu-2.example.com/192.168.226.185:8010]; For more details see: http://wiki.apache.org/hadoop/SocketTimeout

It is not the datanode call to another datanode, but it should be the datanode call to the NameNode port, 8010 can be the custom port but you can check the configuration what is this port, I assume it is NameNode port. So I would look at NameNode logs and FailoverController logs to understand if the active NameNode was successfully failover to another NameNode at that time and how long it finished the failover.

Can we know the full topology of the allocation of the roles in your zone1 and zone2, we would like to know how you allocate NN, DN, JN, ZKFC, HMasters, RS, ZK.

Created on 09-26-2023 07:11 AM - edited 09-26-2023 07:12 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @willx Thank you for reply.

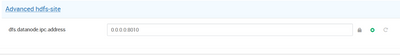

8010 is datanode ipc address in my cluster . Pls check snap below -

But still why its connecting to down datanode (ics168226185.ics-eu-2.example.com) for 16mins !!!

Created 09-26-2023 07:42 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

so it's datanode not namenode, can we get the full error stack of this:

org.apache.hadoop.net.ConnectTimeoutException: Call From ics168027095.ics-eu-2.example.com/192.168.27.95 to ics168226185.ics-eu-2.example.com:8010 failed on socket timeout exception: org.apache.hadoop.net.ConnectTimeoutException: 20000 millis timeout while waiting for channel to be ready for connect. ch : java.nio.channels.SocketChannel[connection-pending remote=ics168226185.ics-eu-2.example.com/192.168.226.185:8010]; For more details see: http://wiki.apache.org/hadoop/SocketTimeout

also the full rack topology and the role allocation details.

Created 09-27-2023 05:49 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @willx

pls find below -

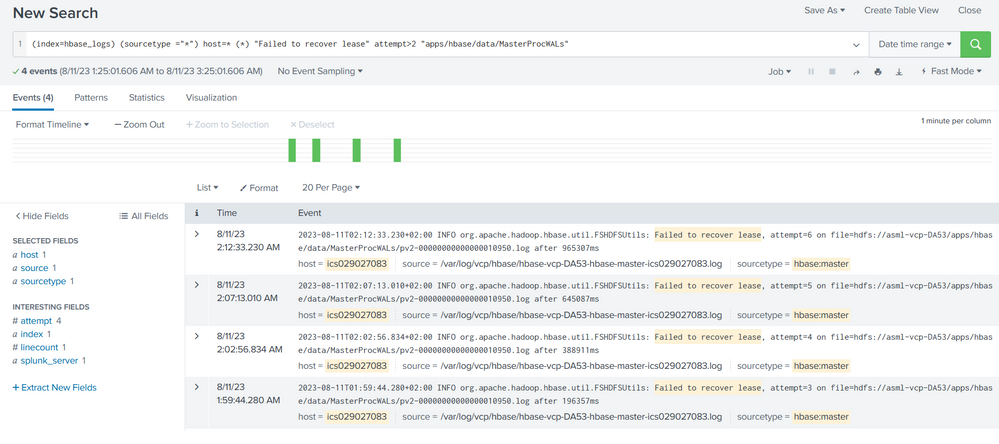

Hbase splunk query for masterprocwal -

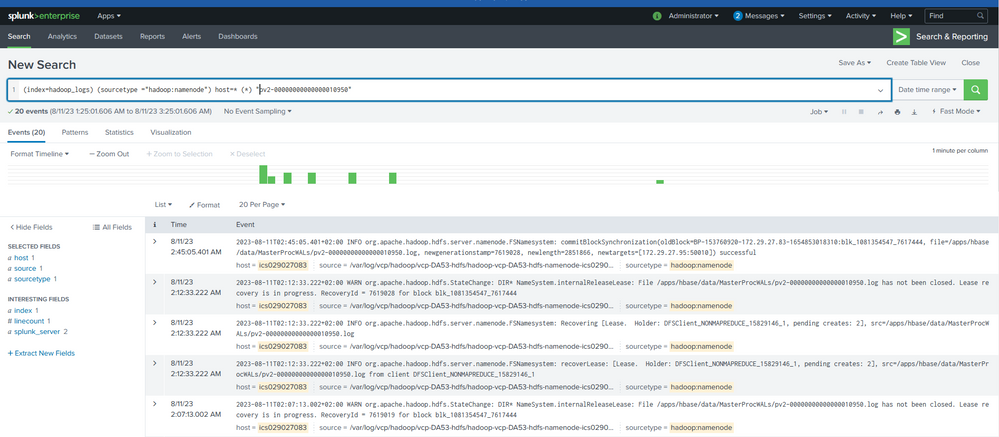

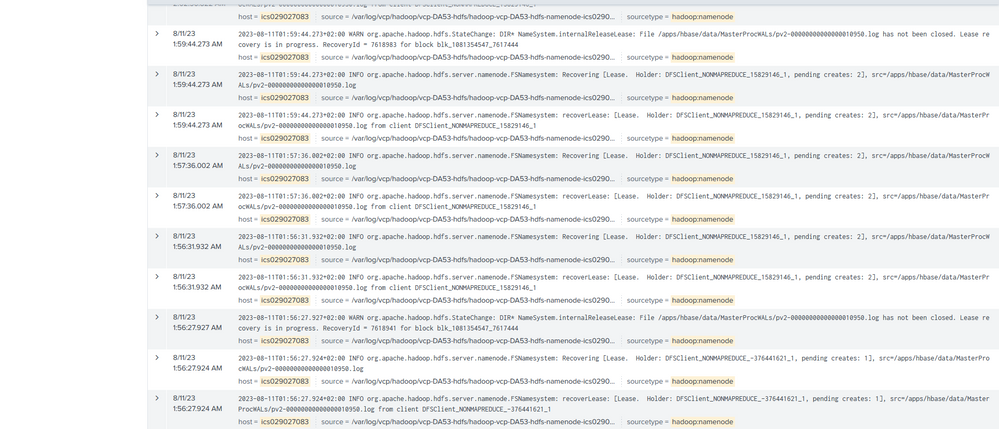

Namenode Logs for respective - pv-****.log

Created 09-27-2023 05:57 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Datanode Logs: ics029027095.ics-eu-2.asml.com/172.29.27.95

2023-08-11T02:57:52.973+02:00 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: recoverBlocks FAILED: RecoveringBlock{BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444; getBlockSize()=286; corrupt=false; offset=-1; locs=[DatanodeInfoWithStorage[172.29.27.95:50010,null,null], DatanodeInfoWithStorage[172.29.226.185:50010,null,null], DatanodeInfoWithStorage[172.29.27.91:50010,null,null]]}

java.io.IOException: Cannot recover BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444, the following datanodes failed: [DatanodeInfoWithStorage[172.29.27.95:50010,null,null]]

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$RecoveryTaskContiguous.syncBlock(BlockRecoveryWorker.java:314)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$RecoveryTaskContiguous.recover(BlockRecoveryWorker.java:188)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$1.run(BlockRecoveryWorker.java:604)

at java.lang.Thread.run(Thread.java:748)

2023-08-11T02:57:52.973+02:00 INFO org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl: updateReplica: BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444[numBytes=2851866,originalReplicaState=RBW], recoveryId=7619002, length=2851866, replica=FinalizedReplica, blk_1081354547_7619028, FINALIZED

getNumBytes() = 2851866

getBytesOnDisk() = 2851866

getVisibleLength()= 2851866

getVolume() = /hadoop4/hdfs/data

getBlockURI() = file:/hadoop4/hdfs/data/current/BP-153760920-172.29.27.83-1654853018310/current/finalized/subdir20/subdir9/blk_1081354547

2023-08-11T02:57:52.972+02:00 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: BlockRecoveryWorker: block=BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444 (length=286), bestState=RBW, newBlock=BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7619002 (length=2851866), participatingList=[block:blk_1081354547_7617444[numBytes=2851866,originalReplicaState=RBW] node:DatanodeInfoWithStorage[172.29.27.95:50010,null,null]]

2023-08-11T02:57:52.972+02:00 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: BlockRecoveryWorker: block=BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444 (length=286), isTruncateRecovery=false, syncList=[block:blk_1081354547_7617444[numBytes=2851866,originalReplicaState=RBW] node:DatanodeInfoWithStorage[172.29.27.95:50010,null,null], block:blk_1081354547_7617444[numBytes=2851866,originalReplicaState=RWR] node:DatanodeInfoWithStorage[172.29.27.91:50010,null,null]]

2023-08-11T02:57:52.967+02:00 WARN org.apache.hadoop.hdfs.server.protocol.InterDatanodeProtocol: Failed to recover block (block=BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444, datanode=DatanodeInfoWithStorage[172.29.226.185:50010,null,null])

org.apache.hadoop.net.ConnectTimeoutException: Call From ics029027095.ics-eu-2.asml.com/172.29.27.95 to ics029226185.ics-eu-2.asml.com:8010 failed on socket timeout exception: org.apache.hadoop.net.ConnectTimeoutException: 20000 millis timeout while waiting for channel to be ready for connect. ch : java.nio.channels.SocketChannel[connection-pending remote=ics029226185.ics-eu-2.asml.com/172.29.226.185:8010]; For more details see: http://wiki.apache.org/hadoop/SocketTimeout

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:831)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:775)

at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1502)

at org.apache.hadoop.ipc.Client.call(Client.java:1444)

at org.apache.hadoop.ipc.Client.call(Client.java:1354)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:228)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:116)

at com.sun.proxy.$Proxy24.initReplicaRecovery(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.InterDatanodeProtocolTranslatorPB.initReplicaRecovery(InterDatanodeProtocolTranslatorPB.java:83)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker.callInitReplicaRecovery(BlockRecoveryWorker.java:565)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker.access$400(BlockRecoveryWorker.java:57)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$RecoveryTaskContiguous.recover(BlockRecoveryWorker.java:134)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$1.run(BlockRecoveryWorker.java:604)

at java.lang.Thread.run(Thread.java:748)

Caused by: org.apache.hadoop.net.ConnectTimeoutException: 20000 millis timeout while waiting for channel to be ready for connect. ch : java.nio.channels.SocketChannel[connection-pending remote=ics029226185.ics-eu-2.asml.com/172.29.226.185:8010]

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:534)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:688)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:791)

at org.apache.hadoop.ipc.Client$Connection.access$3600(Client.java:411)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1559)

at org.apache.hadoop.ipc.Client.call(Client.java:1390)

... 10 more

2023-08-11T02:45:05.399+02:00 INFO org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl: updateReplica: BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444[numBytes=2851866,originalReplicaState=RBW], recoveryId=7619028, length=2851866, replica=ReplicaUnderRecovery, blk_1081354547_7617444, RUR

getNumBytes() = 2851866

getBytesOnDisk() = 2851866

getVisibleLength()= 2851866

getVolume() = /hadoop4/hdfs/data

getBlockURI() = file:/hadoop4/hdfs/data/current/BP-153760920-172.29.27.83-1654853018310/current/rbw/blk_1081354547

recoveryId=7619028

original=ReplicaBeingWritten, blk_1081354547_7617444, RBW

getNumBytes() = 2851866

getBytesOnDisk() = 2851866

getVisibleLength()= 2851866

getVolume() = /hadoop4/hdfs/data

getBlockURI() = file:/hadoop4/hdfs/data/current/BP-153760920-172.29.27.83-1654853018310/current/rbw/blk_1081354547

bytesAcked=2851866

bytesOnDisk=2851866

2023-08-11T02:45:05.399+02:00 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: BlockRecoveryWorker: block=BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444 (length=286), bestState=RBW, newBlock=BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7619028 (length=2851866), participatingList=[block:blk_1081354547_7617444[numBytes=2851866,originalReplicaState=RBW] node:DatanodeInfoWithStorage[172.29.27.95:50010,null,null]]

2023-08-11T02:45:05.399+02:00 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: BlockRecoveryWorker: block=BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444 (length=286), isTruncateRecovery=false, syncList=[block:blk_1081354547_7617444[numBytes=2851866,originalReplicaState=RBW] node:DatanodeInfoWithStorage[172.29.27.95:50010,null,null]]

2023-08-11T02:45:05.398+02:00 WARN org.apache.hadoop.hdfs.server.protocol.InterDatanodeProtocol: Failed to recover block (block=BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444, datanode=DatanodeInfoWithStorage[172.29.27.91:50010,null,null])

java.net.NoRouteToHostException: No Route to Host from ics029027095.ics-eu-2.asml.com/172.29.27.95 to ics029027091.ics-eu-2.asml.com:8010 failed on socket timeout exception: java.net.NoRouteToHostException: No route to host; For more details see: http://wiki.apache.org/hadoop/NoRouteToHost

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:831)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:782)

at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1502)

at org.apache.hadoop.ipc.Client.call(Client.java:1444)

at org.apache.hadoop.ipc.Client.call(Client.java:1354)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:228)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:116)

at com.sun.proxy.$Proxy24.initReplicaRecovery(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.InterDatanodeProtocolTranslatorPB.initReplicaRecovery(InterDatanodeProtocolTranslatorPB.java:83)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker.callInitReplicaRecovery(BlockRecoveryWorker.java:565)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker.access$400(BlockRecoveryWorker.java:57)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$RecoveryTaskContiguous.recover(BlockRecoveryWorker.java:134)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$1.run(BlockRecoveryWorker.java:604)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.net.NoRouteToHostException: No route to host

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:531)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:688)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:791)

at org.apache.hadoop.ipc.Client$Connection.access$3600(Client.java:411)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1559)

at org.apache.hadoop.ipc.Client.call(Client.java:1390)

... 10 more

2023-08-11T02:42:32.088+02:00 WARN org.apache.hadoop.hdfs.server.protocol.InterDatanodeProtocol: Failed to recover block (block=BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444, datanode=DatanodeInfoWithStorage[172.29.226.185:50010,null,null])

org.apache.hadoop.net.ConnectTimeoutException: Call From ics029027095.ics-eu-2.asml.com/172.29.27.95 to ics029226185.ics-eu-2.asml.com:8010 failed on socket timeout exception: org.apache.hadoop.net.ConnectTimeoutException: 20000 millis timeout while waiting for channel to be ready for connect. ch : java.nio.channels.SocketChannel[connection-pending remote=ics029226185.ics-eu-2.asml.com/172.29.226.185:8010]; For more details see: http://wiki.apache.org/hadoop/SocketTimeout

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:831)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:775)

at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1502)

at org.apache.hadoop.ipc.Client.call(Client.java:1444)

at org.apache.hadoop.ipc.Client.call(Client.java:1354)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:228)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:116)

at com.sun.proxy.$Proxy24.initReplicaRecovery(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.InterDatanodeProtocolTranslatorPB.initReplicaRecovery(InterDatanodeProtocolTranslatorPB.java:83)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker.callInitReplicaRecovery(BlockRecoveryWorker.java:565)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker.access$400(BlockRecoveryWorker.java:57)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$RecoveryTaskContiguous.recover(BlockRecoveryWorker.java:134)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$1.run(BlockRecoveryWorker.java:604)

at java.lang.Thread.run(Thread.java:748)

Caused by: org.apache.hadoop.net.ConnectTimeoutException: 20000 millis timeout while waiting for channel to be ready for connect. ch : java.nio.channels.SocketChannel[connection-pending remote=ics029226185.ics-eu-2.asml.com/172.29.226.185:8010]

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:534)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:688)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:791)

at org.apache.hadoop.ipc.Client$Connection.access$3600(Client.java:411)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1559)

at org.apache.hadoop.ipc.Client.call(Client.java:1390)

... 10 more

2023-08-11T02:29:44.570+02:00 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: recoverBlocks FAILED: RecoveringBlock{BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444; getBlockSize()=286; corrupt=false; offset=-1; locs=[DatanodeInfoWithStorage[172.29.27.95:50010,null,null], DatanodeInfoWithStorage[172.29.226.185:50010,null,null], DatanodeInfoWithStorage[172.29.27.91:50010,null,null]]}

java.io.IOException: Cannot recover BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444, the following datanodes failed: [DatanodeInfoWithStorage[172.29.27.95:50010,null,null]]

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$RecoveryTaskContiguous.syncBlock(BlockRecoveryWorker.java:314)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$RecoveryTaskContiguous.recover(BlockRecoveryWorker.java:188)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$1.run(BlockRecoveryWorker.java:604)

at java.lang.Thread.run(Thread.java:748)

2023-08-11T02:29:44.570+02:00 WARN org.apache.hadoop.hdfs.server.protocol.InterDatanodeProtocol: Failed to updateBlock (newblock=BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7619019, datanode=DatanodeInfoWithStorage[172.29.27.95:50010,null,null])

java.io.IOException: rur.getRecoveryID() != recoveryId = 7619019, rur=ReplicaUnderRecovery, blk_1081354547_7617444, RUR

getNumBytes() = 2851866

getBytesOnDisk() = 2851866

getVisibleLength()= 2851866

getVolume() = /hadoop4/hdfs/data

getBlockURI() = file:/hadoop4/hdfs/data/current/BP-153760920-172.29.27.83-1654853018310/current/rbw/blk_1081354547

recoveryId=7619028

original=ReplicaBeingWritten, blk_1081354547_7617444, RBW

getNumBytes() = 2851866

getBytesOnDisk() = 2851866

getVisibleLength()= 2851866

getVolume() = /hadoop4/hdfs/data

getBlockURI() = file:/hadoop4/hdfs/data/current/BP-153760920-172.29.27.83-1654853018310/current/rbw/blk_1081354547

bytesAcked=2851866

bytesOnDisk=2851866

at org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl.updateReplicaUnderRecovery(FsDatasetImpl.java:2727)

at org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl.updateReplicaUnderRecovery(FsDatasetImpl.java:2691)

at org.apache.hadoop.hdfs.server.datanode.DataNode.updateReplicaUnderRecovery(DataNode.java:2917)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$BlockRecord.updateReplicaUnderRecovery(BlockRecoveryWorker.java:88)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$BlockRecord.access$700(BlockRecoveryWorker.java:71)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$RecoveryTaskContiguous.syncBlock(BlockRecoveryWorker.java:302)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$RecoveryTaskContiguous.recover(BlockRecoveryWorker.java:188)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$1.run(BlockRecoveryWorker.java:604)

at java.lang.Thread.run(Thread.java:748)

2023-08-11T02:29:44.570+02:00 INFO org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl: updateReplica: BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444[numBytes=2851866,originalReplicaState=RBW], recoveryId=7619019, length=2851866, replica=ReplicaUnderRecovery, blk_1081354547_7617444, RUR

getNumBytes() = 2851866

getBytesOnDisk() = 2851866

getVisibleLength()= 2851866

getVolume() = /hadoop4/hdfs/data

getBlockURI() = file:/hadoop4/hdfs/data/current/BP-153760920-172.29.27.83-1654853018310/current/rbw/blk_1081354547

recoveryId=7619028

original=ReplicaBeingWritten, blk_1081354547_7617444, RBW

getNumBytes() = 2851866

getBytesOnDisk() = 2851866

getVisibleLength()= 2851866

getVolume() = /hadoop4/hdfs/data

getBlockURI() = file:/hadoop4/hdfs/data/current/BP-153760920-172.29.27.83-1654853018310/current/rbw/blk_1081354547

bytesAcked=2851866

bytesOnDisk=2851866

2023-08-11T02:29:44.570+02:00 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: BlockRecoveryWorker: block=BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444 (length=286), bestState=RBW, newBlock=BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7619019 (length=2851866), participatingList=[block:blk_1081354547_7617444[numBytes=2851866,originalReplicaState=RBW] node:DatanodeInfoWithStorage[172.29.27.95:50010,null,null]]

2023-08-11T02:29:44.570+02:00 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: BlockRecoveryWorker: block=BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444 (length=286), isTruncateRecovery=false, syncList=[block:blk_1081354547_7617444[numBytes=2851866,originalReplicaState=RBW] node:DatanodeInfoWithStorage[172.29.27.95:50010,null,null]]

2023-08-11T02:29:44.569+02:00 WARN org.apache.hadoop.hdfs.server.protocol.InterDatanodeProtocol: Failed to recover block (block=BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444, datanode=DatanodeInfoWithStorage[172.29.27.91:50010,null,null])

java.net.NoRouteToHostException: No Route to Host from ics029027095.ics-eu-2.asml.com/172.29.27.95 to ics029027091.ics-eu-2.asml.com:8010 failed on socket timeout exception: java.net.NoRouteToHostException: No route to host; For more details see: http://wiki.apache.org/hadoop/NoRouteToHost

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:831)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:782)

at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1502)

at org.apache.hadoop.ipc.Client.call(Client.java:1444)

at org.apache.hadoop.ipc.Client.call(Client.java:1354)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:228)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:116)

at com.sun.proxy.$Proxy24.initReplicaRecovery(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.InterDatanodeProtocolTranslatorPB.initReplicaRecovery(InterDatanodeProtocolTranslatorPB.java:83)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker.callInitReplicaRecovery(BlockRecoveryWorker.java:565)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker.access$400(BlockRecoveryWorker.java:57)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$RecoveryTaskContiguous.recover(BlockRecoveryWorker.java:134)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$1.run(BlockRecoveryWorker.java:604)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.net.NoRouteToHostException: No route to host

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:531)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:688)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:791)

at org.apache.hadoop.ipc.Client$Connection.access$3600(Client.java:411)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1559)

at org.apache.hadoop.ipc.Client.call(Client.java:1390)

... 10 more

2023-08-11T02:27:11.259+02:00 WARN org.apache.hadoop.hdfs.server.protocol.InterDatanodeProtocol: Failed to recover block (block=BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444, datanode=DatanodeInfoWithStorage[172.29.226.185:50010,null,null])

org.apache.hadoop.net.ConnectTimeoutException: Call From ics029027095.ics-eu-2.asml.com/172.29.27.95 to ics029226185.ics-eu-2.asml.com:8010 failed on socket timeout exception: org.apache.hadoop.net.ConnectTimeoutException: 20000 millis timeout while waiting for channel to be ready for connect. ch : java.nio.channels.SocketChannel[connection-pending remote=ics029226185.ics-eu-2.asml.com/172.29.226.185:8010]; For more details see: http://wiki.apache.org/hadoop/SocketTimeout

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:831)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:775)

at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1502)

at org.apache.hadoop.ipc.Client.call(Client.java:1444)

at org.apache.hadoop.ipc.Client.call(Client.java:1354)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:228)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:116)

at com.sun.proxy.$Proxy24.initReplicaRecovery(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.InterDatanodeProtocolTranslatorPB.initReplicaRecovery(InterDatanodeProtocolTranslatorPB.java:83)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker.callInitReplicaRecovery(BlockRecoveryWorker.java:565)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker.access$400(BlockRecoveryWorker.java:57)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$RecoveryTaskContiguous.recover(BlockRecoveryWorker.java:134)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$1.run(BlockRecoveryWorker.java:604)

at java.lang.Thread.run(Thread.java:748)

Caused by: org.apache.hadoop.net.ConnectTimeoutException: 20000 millis timeout while waiting for channel to be ready for connect. ch : java.nio.channels.SocketChannel[connection-pending remote=ics029226185.ics-eu-2.asml.com/172.29.226.185:8010]

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:534)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:688)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:791)

at org.apache.hadoop.ipc.Client$Connection.access$3600(Client.java:411)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1559)

at org.apache.hadoop.ipc.Client.call(Client.java:1390)

... 10 more

2023-08-11T02:14:23.692+02:00 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: recoverBlocks FAILED: RecoveringBlock{BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444; getBlockSize()=286; corrupt=false; offset=-1; locs=[DatanodeInfoWithStorage[172.29.27.95:50010,null,null], DatanodeInfoWithStorage[172.29.226.185:50010,null,null], DatanodeInfoWithStorage[172.29.27.91:50010,null,null]]}

java.io.IOException: Cannot recover BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444, the following datanodes failed: [DatanodeInfoWithStorage[172.29.27.95:50010,null,null]]

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$RecoveryTaskContiguous.syncBlock(BlockRecoveryWorker.java:314)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$RecoveryTaskContiguous.recover(BlockRecoveryWorker.java:188)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$1.run(BlockRecoveryWorker.java:604)

at java.lang.Thread.run(Thread.java:748)

2023-08-11T02:14:23.691+02:00 WARN org.apache.hadoop.hdfs.server.protocol.InterDatanodeProtocol: Failed to updateBlock (newblock=BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7618941, datanode=DatanodeInfoWithStorage[172.29.27.95:50010,null,null])

java.io.IOException: rur.getRecoveryID() != recoveryId = 7618941, rur=ReplicaUnderRecovery, blk_1081354547_7617444, RUR

getNumBytes() = 2851866

getBytesOnDisk() = 2851866

getVisibleLength()= 2851866

getVolume() = /hadoop4/hdfs/data

getBlockURI() = file:/hadoop4/hdfs/data/current/BP-153760920-172.29.27.83-1654853018310/current/rbw/blk_1081354547

recoveryId=7619028

original=ReplicaBeingWritten, blk_1081354547_7617444, RBW

getNumBytes() = 2851866

getBytesOnDisk() = 2851866

getVisibleLength()= 2851866

getVolume() = /hadoop4/hdfs/data

getBlockURI() = file:/hadoop4/hdfs/data/current/BP-153760920-172.29.27.83-1654853018310/current/rbw/blk_1081354547

bytesAcked=2851866

bytesOnDisk=2851866

at org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl.updateReplicaUnderRecovery(FsDatasetImpl.java:2727)

at org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl.updateReplicaUnderRecovery(FsDatasetImpl.java:2691)

at org.apache.hadoop.hdfs.server.datanode.DataNode.updateReplicaUnderRecovery(DataNode.java:2917)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$BlockRecord.updateReplicaUnderRecovery(BlockRecoveryWorker.java:88)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$BlockRecord.access$700(BlockRecoveryWorker.java:71)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$RecoveryTaskContiguous.syncBlock(BlockRecoveryWorker.java:302)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$RecoveryTaskContiguous.recover(BlockRecoveryWorker.java:188)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$1.run(BlockRecoveryWorker.java:604)

at java.lang.Thread.run(Thread.java:748)

2023-08-11T02:14:23.691+02:00 INFO org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl: updateReplica: BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444[numBytes=2851866,originalReplicaState=RBW], recoveryId=7618941, length=2851866, replica=ReplicaUnderRecovery, blk_1081354547_7617444, RUR

getNumBytes() = 2851866

getBytesOnDisk() = 2851866

getVisibleLength()= 2851866

getVolume() = /hadoop4/hdfs/data

getBlockURI() = file:/hadoop4/hdfs/data/current/BP-153760920-172.29.27.83-1654853018310/current/rbw/blk_1081354547

recoveryId=7619028

original=ReplicaBeingWritten, blk_1081354547_7617444, RBW

getNumBytes() = 2851866

getBytesOnDisk() = 2851866

getVisibleLength()= 2851866

getVolume() = /hadoop4/hdfs/data

getBlockURI() = file:/hadoop4/hdfs/data/current/BP-153760920-172.29.27.83-1654853018310/current/rbw/blk_1081354547

bytesAcked=2851866

bytesOnDisk=2851866

2023-08-11T02:14:23.691+02:00 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: BlockRecoveryWorker: block=BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444 (length=286), bestState=RBW, newBlock=BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7618941 (length=2851866), participatingList=[block:blk_1081354547_7617444[numBytes=2851866,originalReplicaState=RBW] node:DatanodeInfoWithStorage[172.29.27.95:50010,null,null]]

2023-08-11T02:14:23.691+02:00 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: BlockRecoveryWorker: block=BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444 (length=286), isTruncateRecovery=false, syncList=[block:blk_1081354547_7617444[numBytes=2851866,originalReplicaState=RBW] node:DatanodeInfoWithStorage[172.29.27.95:50010,null,null]]

2023-08-11T02:14:23.691+02:00 WARN org.apache.hadoop.hdfs.server.protocol.InterDatanodeProtocol: Failed to recover block (block=BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444, datanode=DatanodeInfoWithStorage[172.29.27.91:50010,null,null])

java.net.NoRouteToHostException: No Route to Host from ics029027095.ics-eu-2.asml.com/172.29.27.95 to ics029027091.ics-eu-2.asml.com:8010 failed on socket timeout exception: java.net.NoRouteToHostException: No route to host; For more details see: http://wiki.apache.org/hadoop/NoRouteToHost

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:831)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:782)

at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1502)

at org.apache.hadoop.ipc.Client.call(Client.java:1444)

at org.apache.hadoop.ipc.Client.call(Client.java:1354)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:228)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:116)

at com.sun.proxy.$Proxy24.initReplicaRecovery(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.InterDatanodeProtocolTranslatorPB.initReplicaRecovery(InterDatanodeProtocolTranslatorPB.java:83)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker.callInitReplicaRecovery(BlockRecoveryWorker.java:565)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker.access$400(BlockRecoveryWorker.java:57)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$RecoveryTaskContiguous.recover(BlockRecoveryWorker.java:134)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$1.run(BlockRecoveryWorker.java:604)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.net.NoRouteToHostException: No route to host

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:531)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:688)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:791)

at org.apache.hadoop.ipc.Client$Connection.access$3600(Client.java:411)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1559)

at org.apache.hadoop.ipc.Client.call(Client.java:1390)

... 10 more

2023-08-11T02:12:35.608+02:00 INFO org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl: initReplicaRecovery: update recovery id for blk_1081354547_7617444 from 7619019 to 7619028

2023-08-11T02:12:35.608+02:00 INFO org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl: initReplicaRecovery: blk_1081354547_7617444, recoveryId=7619028, replica=ReplicaUnderRecovery, blk_1081354547_7617444, RUR

getNumBytes() = 2851866

getBytesOnDisk() = 2851866

getVisibleLength()= 2851866

getVolume() = /hadoop4/hdfs/data

getBlockURI() = file:/hadoop4/hdfs/data/current/BP-153760920-172.29.27.83-1654853018310/current/rbw/blk_1081354547

recoveryId=7619019

original=ReplicaBeingWritten, blk_1081354547_7617444, RBW

getNumBytes() = 2851866

getBytesOnDisk() = 2851866

getVisibleLength()= 2851866

getVolume() = /hadoop4/hdfs/data

getBlockURI() = file:/hadoop4/hdfs/data/current/BP-153760920-172.29.27.83-1654853018310/current/rbw/blk_1081354547

bytesAcked=2851866

bytesOnDisk=2851866

2023-08-11T02:12:35.608+02:00 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: BlockRecoveryWorker: NameNode at ics029027083.ics-eu-2.asml.com/172.29.27.83:8020 calls recoverBlock(BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444, targets=[DatanodeInfoWithStorage[172.29.27.95:50010,null,null], DatanodeInfoWithStorage[172.29.226.185:50010,null,null], DatanodeInfoWithStorage[172.29.27.91:50010,null,null]], newGenerationStamp=7619028, newBlock=null, isStriped=false)

2023-08-11T02:11:50.383+02:00 WARN org.apache.hadoop.hdfs.server.protocol.InterDatanodeProtocol: Failed to recover block (block=BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444, datanode=DatanodeInfoWithStorage[172.29.226.185:50010,null,null])

org.apache.hadoop.net.ConnectTimeoutException: Call From ics029027095.ics-eu-2.asml.com/172.29.27.95 to ics029226185.ics-eu-2.asml.com:8010 failed on socket timeout exception: org.apache.hadoop.net.ConnectTimeoutException: 20000 millis timeout while waiting for channel to be ready for connect. ch : java.nio.channels.SocketChannel[connection-pending remote=ics029226185.ics-eu-2.asml.com/172.29.226.185:8010]; For more details see: http://wiki.apache.org/hadoop/SocketTimeout

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:831)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:775)

at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1502)

at org.apache.hadoop.ipc.Client.call(Client.java:1444)

at org.apache.hadoop.ipc.Client.call(Client.java:1354)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:228)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:116)

at com.sun.proxy.$Proxy24.initReplicaRecovery(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.InterDatanodeProtocolTranslatorPB.initReplicaRecovery(InterDatanodeProtocolTranslatorPB.java:83)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker.callInitReplicaRecovery(BlockRecoveryWorker.java:565)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker.access$400(BlockRecoveryWorker.java:57)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$RecoveryTaskContiguous.recover(BlockRecoveryWorker.java:134)

at org.apache.hadoop.hdfs.server.datanode.BlockRecoveryWorker$1.run(BlockRecoveryWorker.java:604)

at java.lang.Thread.run(Thread.java:748)

Caused by: org.apache.hadoop.net.ConnectTimeoutException: 20000 millis timeout while waiting for channel to be ready for connect. ch : java.nio.channels.SocketChannel[connection-pending remote=ics029226185.ics-eu-2.asml.com/172.29.226.185:8010]

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:534)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:688)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:791)

at org.apache.hadoop.ipc.Client$Connection.access$3600(Client.java:411)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1559)

at org.apache.hadoop.ipc.Client.call(Client.java:1390)

... 10 more

2023-08-11T02:07:14.599+02:00 INFO org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl: initReplicaRecovery: update recovery id for blk_1081354547_7617444 from 7619002 to 7619019

2023-08-11T02:07:14.599+02:00 INFO org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl: initReplicaRecovery: blk_1081354547_7617444, recoveryId=7619019, replica=ReplicaUnderRecovery, blk_1081354547_7617444, RUR

getNumBytes() = 2851866

getBytesOnDisk() = 2851866

getVisibleLength()= 2851866

getVolume() = /hadoop4/hdfs/data

getBlockURI() = file:/hadoop4/hdfs/data/current/BP-153760920-172.29.27.83-1654853018310/current/rbw/blk_1081354547

recoveryId=7619002

original=ReplicaBeingWritten, blk_1081354547_7617444, RBW

getNumBytes() = 2851866

getBytesOnDisk() = 2851866

getVisibleLength()= 2851866

getVolume() = /hadoop4/hdfs/data

getBlockURI() = file:/hadoop4/hdfs/data/current/BP-153760920-172.29.27.83-1654853018310/current/rbw/blk_1081354547

bytesAcked=2851866

bytesOnDisk=2851866

2023-08-11T02:07:14.599+02:00 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: BlockRecoveryWorker: NameNode at ics029027083.ics-eu-2.asml.com/172.29.27.83:8020 calls recoverBlock(BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444, targets=[DatanodeInfoWithStorage[172.29.27.95:50010,null,null], DatanodeInfoWithStorage[172.29.226.185:50010,null,null], DatanodeInfoWithStorage[172.29.27.91:50010,null,null]], newGenerationStamp=7619019, newBlock=null, isStriped=false)

2023-08-11T02:02:59.595+02:00 INFO org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl: initReplicaRecovery: update recovery id for blk_1081354547_7617444 from 7618941 to 7619002

2023-08-11T02:02:59.595+02:00 INFO org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl: initReplicaRecovery: blk_1081354547_7617444, recoveryId=7619002, replica=ReplicaUnderRecovery, blk_1081354547_7617444, RUR

getNumBytes() = 2851866

getBytesOnDisk() = 2851866

getVisibleLength()= 2851866

getVolume() = /hadoop4/hdfs/data

getBlockURI() = file:/hadoop4/hdfs/data/current/BP-153760920-172.29.27.83-1654853018310/current/rbw/blk_1081354547

recoveryId=7618941

original=ReplicaBeingWritten, blk_1081354547_7617444, RBW

getNumBytes() = 2851866

getBytesOnDisk() = 2851866

getVisibleLength()= 2851866

getVolume() = /hadoop4/hdfs/data

getBlockURI() = file:/hadoop4/hdfs/data/current/BP-153760920-172.29.27.83-1654853018310/current/rbw/blk_1081354547

bytesAcked=2851866

bytesOnDisk=2851866

2023-08-11T02:02:59.594+02:00 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: BlockRecoveryWorker: NameNode at ics029027083.ics-eu-2.asml.com/172.29.27.83:8020 calls recoverBlock(BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444, targets=[DatanodeInfoWithStorage[172.29.27.95:50010,null,null], DatanodeInfoWithStorage[172.29.226.185:50010,null,null], DatanodeInfoWithStorage[172.29.27.91:50010,null,null]], newGenerationStamp=7619002, newBlock=null, isStriped=false)

2023-08-11T01:56:29.586+02:00 INFO org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl: initReplicaRecovery: changing replica state for blk_1081354547_7617444 from RBW to RUR

2023-08-11T01:56:29.586+02:00 INFO org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl: initReplicaRecovery: blk_1081354547_7617444, recoveryId=7618941, replica=ReplicaBeingWritten, blk_1081354547_7617444, RBW

getNumBytes() = 2851866

getBytesOnDisk() = 2851866

getVisibleLength()= 2851866

getVolume() = /hadoop4/hdfs/data

getBlockURI() = file:/hadoop4/hdfs/data/current/BP-153760920-172.29.27.83-1654853018310/current/rbw/blk_1081354547

bytesAcked=2851866

bytesOnDisk=2851866

2023-08-11T01:56:29.586+02:00 INFO org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl: initReplicaRecovery: blk_1081354547_7617444, recoveryId=7618941, replica=ReplicaBeingWritten, blk_1081354547_7617444, RBW

getNumBytes() = 2851866

getBytesOnDisk() = 2851866

getVisibleLength()= 2851866

getVolume() = /hadoop4/hdfs/data

getBlockURI() = file:/hadoop4/hdfs/data/current/BP-153760920-172.29.27.83-1654853018310/current/rbw/blk_1081354547

bytesAcked=2851866

bytesOnDisk=2851866

2023-08-11T01:56:29.585+02:00 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: BlockRecoveryWorker: NameNode at ics029027083.ics-eu-2.asml.com/172.29.27.83:8020 calls recoverBlock(BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444, targets=[DatanodeInfoWithStorage[172.29.27.95:50010,null,null], DatanodeInfoWithStorage[172.29.226.185:50010,null,null], DatanodeInfoWithStorage[172.29.27.91:50010,null,null]], newGenerationStamp=7618941, newBlock=null, isStriped=false)

2023-08-11T01:55:59.759+02:00 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: opWriteBlock BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444 received exception java.net.SocketTimeoutException: 60000 millis timeout while waiting for channel to be ready for read. ch : java.nio.channels.SocketChannel[connected local=/172.29.27.95:50010 remote=/172.29.27.69:33920]

2023-08-11T01:55:59.759+02:00 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: PacketResponder: BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444, type=HAS_DOWNSTREAM_IN_PIPELINE, downstreams=2:[172.29.226.185:50010, 172.29.27.91:50010] terminating

2023-08-11T01:55:59.759+02:00 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: PacketResponder: BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444, type=HAS_DOWNSTREAM_IN_PIPELINE, downstreams=2:[172.29.226.185:50010, 172.29.27.91:50010]: Thread is interrupted.

2023-08-11T01:55:59.758+02:00 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Exception for BP-153760920-172.29.27.83-1654853018310:blk_1081354547_7617444

java.net.SocketTimeoutException: 60000 millis timeout while waiting for channel to be ready for read. ch : java.nio.channels.SocketChannel[connected local=/172.29.27.95:50010 remote=/172.29.27.69:33920]

at org.apache.hadoop.net.SocketIOWithTimeout.doIO(SocketIOWithTimeout.java:164)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:161)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:131)

at java.io.BufferedInputStream.fill(BufferedInputStream.java:246)

at java.io.BufferedInputStream.read1(BufferedInputStream.java:286)

at java.io.BufferedInputStream.read(BufferedInputStream.java:345)

at java.io.DataInputStream.read(DataInputStream.java:149)

at org.apache.hadoop.io.IOUtils.readFully(IOUtils.java:210)

at org.apache.hadoop.hdfs.protocol.datatransfer.PacketReceiver.doReadFully(PacketReceiver.java:211)

at org.apache.hadoop.hdfs.protocol.datatransfer.PacketReceiver.doRead(PacketReceiver.java:134)

at org.apache.hadoop.hdfs.protocol.datatransfer.PacketReceiver.receiveNextPacket(PacketReceiver.java:109)

at org.apache.hadoop.hdfs.server.datanode.BlockReceiver.receivePacket(BlockReceiver.java:528)

at org.apache.hadoop.hdfs.server.datanode.BlockReceiver.receiveBlock(BlockReceiver.java:971)

at org.apache.hadoop.hdfs.server.datanode.DataXceiver.writeBlock(DataXceiver.java:891)

at org.apache.hadoop.hdfs.protocol.datatransfer.Receiver.opWriteBlock(Receiver.java:173)

at org.apache.hadoop.hdfs.protocol.datatransfer.Receiver.processOp(Receiver.java:107)

at org.apache.hadoop.hdfs.server.datanode.DataXceiver.run(DataXceiver.java:290)

at java.lang.Thread.run(Thread.java:748)

Datanode Logs: ics029027091.ics-eu-2.asml.com/172.29.27.91

2023-08-11T02:57:52.972+02:00 INFO org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl: initReplicaRecovery: changing replica state for blk_1081354547_7617444 from RWR to RUR

2023-08-11T02:57:52.971+02:00 INFO org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl: initReplicaRecovery: blk_1081354547_7617444, recoveryId=7619002, replica=ReplicaWaitingToBeRecovered, blk_1081354547_7617444, RWR

getNumBytes() = 2851866

getBytesOnDisk() = 2851866

getVisibleLength()= -1

getVolume() = /hadoop1/hdfs/data

getBlockURI() = file:/hadoop1/hdfs/data/current/BP-153760920-172.29.27.83-1654853018310/current/rbw/blk_1081354547"172.29.27.95 -> Zone2 -> Up

172.29.226.185 -> Zone1 -> Down

172.29.27.91 -> Zone1 -> Down"Topology mapping data script as below -

Created 09-27-2023 06:40 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Could be similar behavior like this jira? https://issues.apache.org/jira/browse/HDFS-14800

Please try to tune the below properties from hbase:

<property>

<name>hbase.lease.recovery.timeout</name>

<value>900000</value>

<description>How long we wait on dfs lease recovery in total before giving up.</description>

</property>

<property>

<name>hbase.lease.recovery.dfs.timeout</name>

<value>64000</value>

<description>How long between dfs recover lease invocations. Should be larger than the sum of the time it takes for the namenode to issue a block recovery command as part of

datanode; dfs.heartbeat.interval and the time it takes for the primary

datanode, performing block recovery to timeout on a dead datanode; usually

dfs.client.socket-timeout. See the end of HBASE-8389 for more.</description>

</property>

Created on 09-28-2023 09:32 PM - edited 09-28-2023 09:35 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @willx

I was referring to [HDFS-14758] Decrease lease hard limit - ASF JIRA (apache.org)

Not sure if i can reduce hbase.lease.recovery.timeout since even if i reduce to 10mins it will not solve the problem.

My question is - "since there is already good replica on UP datanode, why its connecting to dead datanode!! "

I came up with below findings. Pls check if you also think same.

1. IPC client retries Reducing this value from 50 to 10

-- Active datanode is trying to connect to dead datanode for 15mins. Reducing the retries can close the connect fast with dead datanode.

2. hbase.lease.recovery.timeout Reducing this from 15mins to 10mins/less

-- HBase has hard lease recovery setting in case of abrupt shutdown of Master. Reducing this timeout can recover/release lease sooner.

3. Replication Factor Increasing replication factor from 3 to 5 only for “MasterProcWAL” directory

-- Changing replication factor to greater number can increase chances of availability of good replica for recovery

4. Rack Topology Modify rack topology to logically distribute replicas from 2 zones to 3 zones.

-- As per rack topology concept data is distributed in 2 racks only. The existing state is as below. Hence when block recovery takes place, it tries to refer rack_topology file and finds both datanodes are dead from respective zone.

Adding logical rack3, will distribute the block replica across 3 different datanodes and chances of getting the block recovery will be high.