Cloudera Community: Support Questions

Help understanding PutHDFS error in Nifi

Labels: Apache NiFi

Created on 06-02-2017 07:07 PM - edited 08-17-2019 11:32 PM

Can someone help me understand an error in PutHDFS?

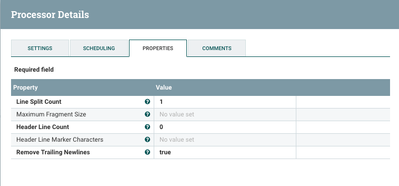

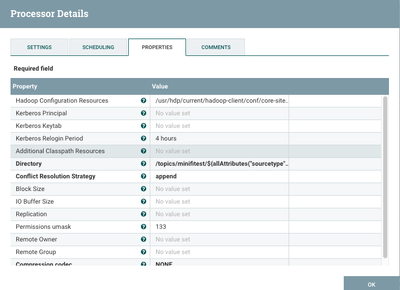

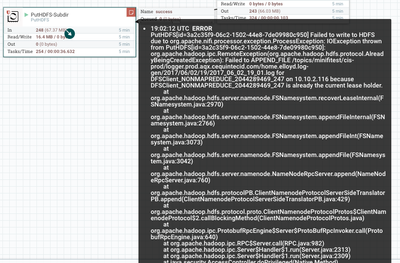

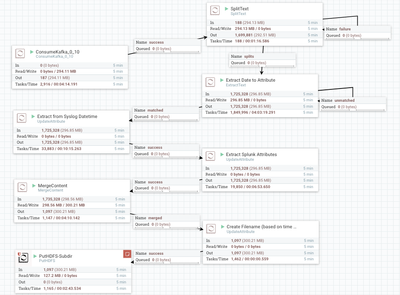

I currently have it set up to read from a Kafka topic and do transformations on it, including SplitText. SplitText seems to cause the problem, because if I run this flow without SplitText, PutHDFS doesn't throw this error. The purpose of SplitText is to keep events from bleeding into the wrong file (the files are separated by minute). I have included screenshots of the flow, the SplitText and PutHDFS configurations, and the error. Below is the actual log from nifi-app.log:

```
2017-06-02 18:58:03,870 INFO [Provenance Maintenance Thread-3] o.a.n.p.PersistentProvenanceRepository Successfully performed Expiration Action org.apache.nifi.provenance.expiration.FileRemovalAction@1ab1bf76 on Provenance Event file ./provenance_repository/68922860.prov.gz in 4 millis
2017-06-02 18:58:04,375 ERROR [Timer-Driven Process Thread-140] o.apache.nifi.processors.hadoop.PutHDFS PutHDFS[id=3a2c35f9-06c2-1502-44e8-7de09980c950] Failed to write to HDFS due to org.apache.nifi.processor.exception.ProcessException: IOException thrown from PutHDFS[id=3a2c35f9-06c2-1502-44e8-7de09980c950]: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.hdfs.protocol.AlreadyBeingCreatedException): Failed to APPEND_FILE /topics/minifitest/cis-prod/logger.prod.aqx.cequintecid.com/home.elloyd.log-gen/2017/06/02/18/2017_06_02_18_57.log for DFSClient_NONMAPREDUCE_2044289469_247 on 10.10.2.116 because DFSClient_NONMAPREDUCE_2044289469_247 is already the current lease holder.
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.recoverLeaseInternal(FSNamesystem.java:2970)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.appendFileInternal(FSNamesystem.java:2766)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.appendFileInt(FSNamesystem.java:3073)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.appendFile(FSNamesystem.java:3042)
    at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.append(NameNodeRpcServer.java:760)
    at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.append(ClientNamenodeProtocolServerSideTranslatorPB.java:429)
    at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
    at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:640)
    at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:982)
    at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2313)
    at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2309)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:422)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1724)
    at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2307)
: org.apache.nifi.processor.exception.ProcessException: IOException thrown from PutHDFS[id=3a2c35f9-06c2-1502-44e8-7de09980c950]: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.hdfs.protocol.AlreadyBeingCreatedException): Failed to APPEND_FILE /topics/minifitest/cis-prod/logger.prod.aqx.cequintecid.com/home.elloyd.log-gen/2017/06/02/18/2017_06_02_18_57.log for DFSClient_NONMAPREDUCE_2044289469_247 on 10.10.2.116 because DFSClient_NONMAPREDUCE_2044289469_247 is already the current lease holder.
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.recoverLeaseInternal(FSNamesystem.java:2970)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.appendFileInternal(FSNamesystem.java:2766)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.appendFileInt(FSNamesystem.java:3073)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.appendFile(FSNamesystem.java:3042)
    at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.append(NameNodeRpcServer.java:760)
    at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.append(ClientNamenodeProtocolServerSideTranslatorPB.java:429)
    at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
    at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:640)
    at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:982)
    at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2313)
    at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2309)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:422)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1724)
    at org.apache.hadoop.ipc.Server$Handler.run(Server.java:230
```

Created 06-05-2017 01:14 AM

Hi @Eric Lloyd

I found HDFS-11367, which reports an issue similar to the one you encountered.

After reading that JIRA and checking the NiFi PutHDFS processor code, which calls the OutputStream.close method, I suspect that another exception, such as a TimeoutException, occurred first and led to the AlreadyBeingCreatedException.
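
To make the lease part of this reasoning concrete, here is a toy model in Java. It is a deliberate simplification, not the NameNode's actual code: HDFS allows a single writer per file, and the writer's lease is released when its output stream is successfully closed. If close() never completes (for example because an earlier timeout interrupted it), the lease stays with the same client, and that client's next append is rejected with a message like the one in the log above. The class name `LeaseModel`, the path, and the client name are all hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

/**
 * Toy model of HDFS single-writer leases (a simplification for illustration only).
 * A file opened for append stays leased to its client until the stream is closed.
 */
public class LeaseModel {
    // path -> name of the client currently holding the write lease
    private final Map<String, String> leases = new HashMap<>();

    /** Opens a file for append; fails while any client (even the same one) still holds the lease. */
    public void append(String path, String client) {
        String holder = leases.get(path);
        if (holder != null) {
            String reason = holder.equals(client)
                    ? client + " is already the current lease holder"
                    : "the lease is held by " + holder;
            throw new IllegalStateException(
                    "Failed to APPEND_FILE " + path + " for " + client + " because " + reason + ".");
        }
        leases.put(path, client);
    }

    /** A successful close releases the lease. */
    public void close(String path) {
        leases.remove(path);
    }

    public boolean isLeased(String path) {
        return leases.containsKey(path);
    }

    public static void main(String[] args) {
        LeaseModel namenode = new LeaseModel();
        String path = "/example/2017_06_02_18_57.log"; // hypothetical path
        String client = "DFSClient_EXAMPLE";            // hypothetical client name

        // Normal case: every append is followed by a successful close.
        namenode.append(path, client);
        namenode.close(path);
        namenode.append(path, client);
        namenode.close(path);

        // Failure case: close() never completes (e.g. it timed out), so the lease is never released.
        namenode.append(path, client);
        try {
            namenode.append(path, client);
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
    }
}
```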

I see you have configured the 'Create Filename (UpdateAttribute)' processor to put a minute-resolution timestamp in the filename, and PutHDFS to use the 'Append' conflict resolution strategy. This combination might overwhelm HDFS with a large number of append requests.
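
A minimal sketch of why that combination produces so many appends, assuming the UpdateAttribute expression yields names of the form `yyyy_MM_dd_HH_mm.log` (inferred from the file name `2017_06_02_18_57.log` in the error; the actual expression is not shown in this thread). Every split event in the same minute maps to the same HDFS file, and in 'Append' mode each FlowFile that reaches PutHDFS is a separate append request against it. The class and method names are hypothetical.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class MinuteBuckets {
    // Assumed file-name pattern, inferred from 2017_06_02_18_57.log in the error message.
    private static final DateTimeFormatter MINUTE = DateTimeFormatter.ofPattern("yyyy_MM_dd_HH_mm");

    /** Maps an event timestamp to its per-minute target file name. */
    static String fileNameFor(LocalDateTime eventTime) {
        return eventTime.format(MINUTE) + ".log";
    }

    /** Without merging, each split FlowFile is one append; returns append requests per target file. */
    static Map<String, Integer> appendsPerFile(List<LocalDateTime> eventTimes) {
        Map<String, Integer> counts = new TreeMap<>();
        for (LocalDateTime t : eventTimes) {
            counts.merge(fileNameFor(t), 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<LocalDateTime> events = List.of(
                LocalDateTime.of(2017, 6, 2, 18, 57, 1),
                LocalDateTime.of(2017, 6, 2, 18, 57, 30),
                LocalDateTime.of(2017, 6, 2, 18, 57, 59),
                LocalDateTime.of(2017, 6, 2, 18, 58, 0));
        System.out.println(appendsPerFile(events));
    }
}
```

For the four sample events in `main`, this prints `{2017_06_02_18_57.log=3, 2017_06_02_18_58.log=1}`: three separate append requests against a single file within one minute.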

I'd suggest setting a longer 'Run Schedule' on MergeContent, such as 1 min or 30 seconds, so that NiFi merges content locally and performs fewer append operations. This would be not only safer but also more performant.
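
The effect of merging first can be estimated with a rough sketch. It assumes MergeContent bins FlowFiles by target file name (for example via its Correlation Attribute Name property) and emits one merged FlowFile per name each time it runs, so PutHDFS performs one append per (run window, file name) pair instead of one per event. Real MergeContent behavior also depends on its bin and entry settings, which this ignores; the class name and load numbers are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

public class MergeEstimate {
    /** One split event: when it reaches MergeContent (in seconds) and the HDFS file name it targets. */
    static final class Event {
        final long arrivalSecond;
        final String fileName;

        Event(long arrivalSecond, String fileName) {
            this.arrivalSecond = arrivalSecond;
            this.fileName = fileName;
        }
    }

    /** Without MergeContent: every split event is its own PutHDFS append. */
    static int appendsWithoutMerge(List<Event> events) {
        return events.size();
    }

    /** With MergeContent (assumed behavior): one append per distinct (run window, file name) pair. */
    static int appendsWithMerge(List<Event> events, long runScheduleSeconds) {
        Set<String> bundles = new HashSet<>();
        for (Event e : events) {
            long window = e.arrivalSecond / runScheduleSeconds;
            bundles.add(window + "|" + e.fileName);
        }
        return bundles.size();
    }

    /** Hypothetical steady load: perSecond events every second for `seconds` seconds, all for one file. */
    static List<Event> uniformLoad(int seconds, int perSecond, String fileName) {
        List<Event> events = new ArrayList<>();
        for (int s = 0; s < seconds; s++) {
            for (int i = 0; i < perSecond; i++) {
                events.add(new Event(s, fileName));
            }
        }
        return events;
    }

    public static void main(String[] args) {
        List<Event> load = uniformLoad(60, 10, "2017_06_02_18_57.log");
        System.out.println("no merge:        " + appendsWithoutMerge(load));  // 600
        System.out.println("merge every 1s:  " + appendsWithMerge(load, 1));  // 60
        System.out.println("merge every 30s: " + appendsWithMerge(load, 30)); // 2
    }
}
```

A longer schedule cuts appends further, but FlowFiles also wait longer in the queue in front of MergeContent between runs.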

Created 06-07-2017 04:03 PM

Thanks for the help.

Your estimate for the Run Schedule was a bit high, though. When I set it to even 30 seconds, the flow bottlenecked badly right before MergeContent. You were right about the approach, though: when I lowered it to 1 sec, there was very little bottleneck and the error is gone.