Support Questions

- Cloudera Community

LZO is enabled, now what?

- Labels: Apache Hadoop

Created 09-08-2016 07:52 PM

So I've enabled LZO compression as per the Hortonworks guide... I've got 120 TB of storage capacity so far and a de facto replication factor of 3. My data usage is at 75%, and my manager is starting to wonder if LZO can be used to compress the file system "à la Windows", where the file system is compressed but the data is accessible "as per usual" through the DFS path?

Any hint would be greatly appreciated....
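For readers following the numbers in the question, the arithmetic can be sketched as below. This is only an illustration: it assumes the 120 TB figure is raw disk capacity (the post does not say whether it is raw or usable), and the compression ratio of 2.0 is a hypothetical placeholder, not a measured LZO result.

```python
# Capacity arithmetic for the figures quoted in the question.
# Assumption: 120 TB is RAW capacity across all DataNodes.
RAW_TB = 120
REPLICATION = 3
USED_FRACTION = 0.75


def logical_capacity_tb(raw_tb, replication):
    """Unique (un-replicated) data the cluster can hold, in TB."""
    return raw_tb / replication


def raw_after_compression(logical_tb, ratio, replication):
    """Raw TB consumed if the logical data shrinks by `ratio`."""
    return logical_tb / ratio * replication


raw_used = RAW_TB * USED_FRACTION                          # raw TB in use
logical_used = raw_used / REPLICATION                      # unique data stored
logical_total = logical_capacity_tb(RAW_TB, REPLICATION)   # unique data ceiling

# Hypothetical ratio; real ratios depend entirely on the data.
ASSUMED_RATIO = 2.0
raw_if_compressed = raw_after_compression(logical_used, ASSUMED_RATIO, REPLICATION)

print(f"raw used: {raw_used} TB, unique data: {logical_used} TB of {logical_total} TB")
print(f"raw used at assumed ratio {ASSUMED_RATIO}: {raw_if_compressed} TB")
```

Under these assumptions the cluster holds about 30 TB of unique data, so any savings from compression are multiplied by the replication factor.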

Created 09-08-2016 11:32 PM

HDFS does not need an ext4, ext3 or xfs file system to function. It can sit on top of raw JBOD disks. If that is the case, there is no further opportunity for compression. If in your case it sits on top of a file system, that is questionable as a best practice. What is your situation?

Anyhow, there are other things you can do to maximize your storage even further, e.g. the ORC format.

Keep in mind that super-compression requires more and more cores available for processing. Storage is usually cheaper, and super-compression can also bring performance problems, CPU bottlenecks, etc. All in moderation.
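The trade-off between compression ratio and CPU time can be measured on a sample of your own data before committing to a codec. The sketch below is an illustration only: it uses Python's standard `zlib` module as a stand-in (LZO is not in the standard library), and the `sample` bytes are a made-up, highly repetitive log line, so real data will behave differently.

```python
import time
import zlib


def measure(data, level):
    """Return (compression ratio, seconds spent) for one zlib level."""
    start = time.perf_counter()
    compressed = zlib.compress(data, level)
    elapsed = time.perf_counter() - start
    return len(data) / len(compressed), elapsed


# Hypothetical sample; replace with bytes read from a representative file.
sample = b"2016-09-08 19:52:01 INFO datanode block report ok\n" * 20000

for level in (1, 6, 9):
    ratio, seconds = measure(sample, level)
    print(f"level={level} ratio={ratio:.1f} seconds={seconds:.4f}")
```

Running the same loop over a few real files gives a rough idea of how much extra CPU each step up in compression costs for the space it saves.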

Created on 09-29-2016 03:53 PM - edited 08-19-2019 01:01 AM

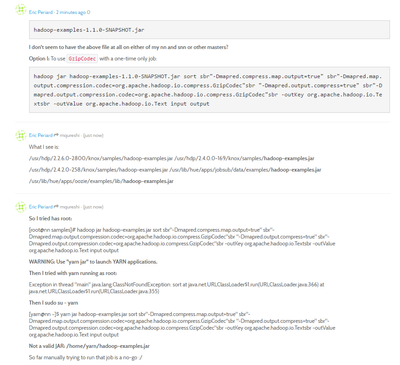

I went through the tutorial above for HDP 2.4.2 without success...

Created 09-29-2016 03:58 PM

I changed it to the right directory location, still the same error though 😕

Created 09-29-2016 04:52 PM

Is there no actual step-by-step guide on how to do that? I've been searching for weeks, nothing concrete so far.

Created 09-29-2016 06:28 PM

So I got the job to "start"

[ INFO ] [main] Task Id : attempt_1475173438027_0002_m_000000_0, Status : FAILED
Error: Java heap space
Container killed by the ApplicationMaster.
Container killed on request. Exit code is 143
Container exited with a non-zero exit code 143
[ INFO ] [main] Task Id : attempt_1475173438027_0002_m_000000_1, Status : FAILED
Error: Java heap space
Container killed by the ApplicationMaster.
Container killed on request. Exit code is 143
Container exited with a non-zero exit code 143
[ INFO ] [main] Task Id : attempt_1475173438027_0002_m_000000_2, Status : FAILED
Error: Java heap space
[ INFO ] [main] map 100% reduce 100%
[ INFO ] [main] Job job_1475173438027_0002 failed with state FAILED due to: Task failed task_1475173438027_0002_m_000000
Job failed as tasks failed. failedMaps:1 failedReduces:0

Created 09-29-2016 06:29 PM

Changed the YARN Java heap size from 1 GB to 4 GB... it still dies?
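One possible reason the change had no effect: a "Java heap space" error in a map attempt comes from the task's own JVM, whose size is normally set per job through `mapreduce.map.memory.mb` (container size) and `mapreduce.map.java.opts` (JVM heap), not through the YARN daemon heap. The thread does not say which setting was changed, so this is a guess. The helper below is a hypothetical sketch that builds the two `-D` options; the 0.8 heap fraction is a common rule of thumb, not a value from this thread, and `-D` options only take effect if the job's driver parses generic options (for example via `ToolRunner`).

```python
def map_memory_flags(container_mb, heap_fraction=0.8):
    """Build -D options for map container size and map JVM heap.

    heap_fraction is an assumed rule of thumb: the JVM heap is kept
    below the container size to leave room for non-heap memory.
    """
    heap_mb = int(container_mb * heap_fraction)
    return [
        f"-Dmapreduce.map.memory.mb={container_mb}",
        f"-Dmapreduce.map.java.opts=-Xmx{heap_mb}m",
    ]


# Example: a 4 GB map container.
print(" ".join(map_memory_flags(4096)))
```

The printed options would go after the job's main class on the `hadoop jar` command line, before the job's own arguments.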
