Support Questions

Cloudera Community

NiFi is responding with SERVICE_UNAVAILABLE when API request volume is a little high

Labels: Apache NiFi

Created on 03-04-2024 10:35 PM - edited 03-05-2024 12:42 AM

Hello experts,

We are using NiFi for data ingestion over HTTP, using the 'HandleHttpRequest' processor.

Data is ingested continuously, but once in a while, when incoming volume is a little high, we get a 'Service Unavailable - 503' error from the NiFi endpoint.

Below is the log entry we see in the NiFi app log:

```
2024-03-04 11:20:25,558 WARN [StandardHttpContextMap-c2ffb1fd-2c09-3a90-0e5e-18384499605f] o.a.nifi.http.StandardHttpContextMap StandardHttpContextMap[id=c2ffb1fd-2c09-3a90-0e5e-18384499605f] Request from 10.13.2.204 timed out; responding with SERVICE_UNAVAILABLE
```

Important configs:

[HandleHttpRequest processor]
- Container Queue Size: 2000

[StandardHttpContextMap controller service]
- Maximum Outstanding Requests: 8000
- Request Expiration: 3 min

- nifi.web.jetty.threads=200 (in nifi.properties)
- Maximum Timer Driven Thread Count: 200

Total number of running processors on this environment: 4200+

This NiFi instance runs on an 8-core machine (EC2 instance).

When we look at CPU usage during the issue, we don't see it go above 40%.

Can anyone help me understand the issue and the fix?

Thanks

Mahendra

Created 03-06-2024 09:40 AM

@hegdemahendra

The Service Unavailable response to a request received by the HandleHttpRequest processor is most commonly the result of the FlowFile produced by HandleHttpRequest not being processed by a downstream HandleHttpResponse processor before the Request Expiration configured in the StandardHttpContextMap controller service. This matches the log entry you shared from the NiFi logs. If you are seeing this message before the 3-minute expiration you set, it is possible your client is closing the connection due to a client-side timeout. In that case, you would need to look at the client sending the requests for details and options.
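To make the expiration behavior concrete, here is a minimal Python sketch. It is a simplified model, not NiFi's StandardHttpContextMap code: the class `ContextMapModel` and its methods are hypothetical names chosen for illustration, and time is passed in explicitly so the example is deterministic. A request that is never answered by the downstream response step within the expiration window is the one that would receive SERVICE_UNAVAILABLE.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ContextMapModel:
    """Hypothetical model of pending HTTP requests waiting for a response.

    NOT NiFi's implementation; it only mirrors the idea that a request left
    unanswered longer than the expiration is answered with a 503.
    """
    request_expiration_s: float  # e.g. 180 for "Request Expiration: 3 min"
    pending: Dict[str, float] = field(default_factory=dict)  # id -> arrival time

    def register(self, request_id: str, now: float) -> None:
        """Track a request that has been turned into a FlowFile."""
        self.pending[request_id] = now

    def complete(self, request_id: str) -> bool:
        """Downstream response step answered in time; stop tracking it."""
        return self.pending.pop(request_id, None) is not None

    def expire(self, now: float) -> List[str]:
        """Remove and return requests older than the expiration (would get 503)."""
        expired = [rid for rid, arrived in self.pending.items()
                   if now - arrived > self.request_expiration_s]
        for rid in expired:
            del self.pending[rid]
        return expired


cm = ContextMapModel(request_expiration_s=180)
cm.register("fast", now=0.0)
cm.register("slow", now=0.0)
cm.complete("fast")          # answered in time by the response step
print(cm.expire(now=181.0))  # ['slow'] -> would receive SERVICE_UNAVAILABLE
```

The point of the model is that the 503 is decided by how long the FlowFile takes to reach the response step, not by the web server itself, which is why a slow or thread-starved downstream flow shows up as this warning.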

You mentioned you have 4200+ processors, each scheduled according to its own configuration. When a processor is scheduled, it requests a thread from the pool sized by Maximum Timer Driven Thread Count, so not all processors can execute concurrently, which is expected. You also have only 8 cores, so assuming hyper-threading you can actually service only 16 threads concurrently. What happens is time slicing: up to 200 concurrently scheduled threads each get slices of time on the CPU cores. Good to see you looked at your core load average; it is very important because it helps you determine a workable size for your thread pool. If you have a lot of CPU-intensive processors executing often, your CPU load average is going to be high. In your case, I see well-managed CPU usage with some occasional spikes.
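The time-slicing arithmetic above can be sketched as follows. This is a rough model under stated assumptions: 2 hardware threads per core (the hyper-threading case mentioned above), all 200 threads runnable and CPU-bound at once, and an even split by the OS scheduler. In practice many threads wait on I/O, which is consistent with CPU staying around 40%. The function name `cpu_share_per_thread` is hypothetical.

```python
def cpu_share_per_thread(physical_cores: int, threads_per_core: int,
                         busy_threads: int) -> float:
    """Fraction of one hardware thread each busy thread gets (rough model).

    Assumes every busy thread is runnable and the OS splits time evenly;
    real schedulers, I/O waits and GC make this only approximate.
    """
    hardware_threads = physical_cores * threads_per_core
    if busy_threads <= hardware_threads:
        return 1.0  # each thread can run on its own hardware thread
    return hardware_threads / busy_threads


hw = 8 * 2                               # 8 cores with hyper-threading
share = cpu_share_per_thread(8, 2, 200)  # 200 Timer Driven threads all busy
print(hw, share)  # 16 0.08
```

So if all 200 threads were CPU-bound at the same moment, each would get roughly 8% of a hardware thread, which is why a larger thread pool does not by itself make HandleHttpRequest faster.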

I bring up the above because it directly relates to your processor scheduling. The HandleHttpRequest processor creates a web server that accepts inbound requests. These requests stack up within that web server until the processor's threads read them and produce a FlowFile for each request. How fast this happens depends on available threads and the Concurrent Tasks setting on the HandleHttpRequest processor's Scheduling tab. By default, a newly added processor has only 1 concurrent task configured. If you set this to, say, 5, the processor could be allocated up to 5 threads to process requests received by HandleHttpRequest.
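A back-of-the-envelope way to see why Concurrent Tasks matters is to compare the arrival rate with the drain rate. The sketch below is a hypothetical model: the per-task rate and arrival rate are illustrative numbers, not measurements, and it assumes each concurrent task actually obtains a thread from the Timer Driven pool (which is not guaranteed when the pool is busy). The function name is made up for illustration; 2000 is the Container Queue Size from the question.

```python
import math


def seconds_until_queue_full(arrival_per_s: float, per_task_rate_per_s: float,
                             concurrent_tasks: int,
                             queue_capacity: int = 2000) -> float:
    """Seconds until the request backlog reaches capacity, or inf if it never does.

    Hypothetical model: each concurrent task that gets a thread turns
    `per_task_rate_per_s` queued requests into FlowFiles per second.
    """
    drain_per_s = concurrent_tasks * per_task_rate_per_s
    growth_per_s = arrival_per_s - drain_per_s
    if growth_per_s <= 0:
        return math.inf  # draining keeps up with arrivals
    return queue_capacity / growth_per_s


# Illustrative numbers only: 300 requests/s arriving, 100 requests/s per task.
print(seconds_until_queue_full(300, 100, 1))  # 10.0
print(seconds_until_queue_full(300, 100, 5))  # inf
```

With a single task the backlog grows by 200 requests per second in this example; with 5 tasks (and threads available to run them) it never builds up.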

Another possibility is that you are seeing Service Unavailable because the container queue is filling faster than the processor is producing FlowFiles.
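The container-queue scenario can be illustrated with a bounded queue from Python's standard `queue` module. This is a hypothetical sketch, not NiFi code: `accept` is a made-up helper, the tiny `maxsize=3` stands in for Container Queue Size, and a real server might wait briefly before giving up where this sketch rejects immediately.

```python
import queue
from typing import Optional


def accept(container_queue: "queue.Queue[str]", request_id: str) -> Optional[int]:
    """Hypothetical hand-off from the embedded web server to a bounded queue.

    Returns None if the request was queued (its real status would be sent
    later by the response step), or 503 if the queue is already full.
    """
    try:
        container_queue.put_nowait(request_id)
        return None
    except queue.Full:
        return 503  # SERVICE_UNAVAILABLE


q: "queue.Queue[str]" = queue.Queue(maxsize=3)  # tiny stand-in for Container Queue Size
statuses = [accept(q, f"req-{i}") for i in range(5)]
print(statuses)  # [None, None, None, 503, 503]

q.get_nowait()             # a processor task turns one queued request into a FlowFile
print(accept(q, "req-5"))  # None: a slot was free again
```

Requests are rejected only while nothing is draining the queue, so more threads or concurrent tasks for HandleHttpRequest would reduce these rejections in this model.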

Hope this information helps with your investigation and the solution to your issue.

If any of the suggestions/solutions provided helped with your issue, please take a moment to log in and click "Accept as Solution" on the ones that helped.

Thank you,

Matt

Created 03-05-2024 08:46 AM

@MattWho - requesting your opinion here (if possible)

Created 03-06-2024 09:40 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@hegdemahendra

The Service Unavailable response to a request received by the HandleHTTPRequest processor is most commonly the result of the FlowFile produced by the HandleHTTPRequest processor not being processed by a downstream HandleHTTPResponse processor before the configured Response Expiration configured in the StandardHTTPContextMap controller service. This aligns with the shared exception you shared from the NiFi logs. If you are seeing this exception prior to the 3 minutes expiration you set, it is possible your client is closing the connection due to some client timeout. That you could need to look at your client sending the requests to get details and options.

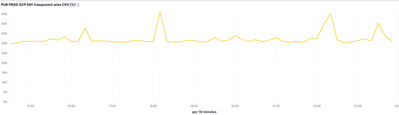

You mentioned you have 4200+ processors that are scheduled based on their individual configurations. When a processor is scheduled it requests a thread from the configured Maximum TimerDriven Thread Count pool of threads. So you can see that not all processor can execute concurrently which is expected. You also have only 8 cores so assuming hyper-threading you are looking at the ability to actually service only 16 thread concurrently. So what you have happing is time slicing where all your up to 200 concurrently scheduled threads are gets bits of time on the CPU cores. Good to see you looked at your core load average which is very important as it helps you determine what is a workable size for your thread pool. If you have a lot of cpu intensive processor executing often, your CPU load average is going to be high. For you I see a good managed CPU usage with some occasional spikes.

I brought up above as it directly relates to your processor scheduling. The HandleHTTPRequest processor creates a web server that accepts inbound requests. These request will stack-up within that web service as the processor executed threads read those and produce a FlowFile for each request. How fast this can happen depends on available threads and concurrent task configuration on HandleHTTPRequest processor scheduling tab. By default an added processor only has 1 concurrent task configured. If you set this to say 5, then the processor could potentially get allocated up to 5 threads to process request received by the HandleHTTPRequest processor.

Thought here is you might also be seeing service unavailable because the container queue is filling faster then the processor is producing the FlowFiles as another possibility.

Hope this information helps you in your investigation and solution for you issue.

If you found any of the suggestions/solutions provided helped you with your issue, please take a moment to login and click "Accept as Solution" on one or more of them that helped.

Thank you,

Matt

Created 03-08-2024 02:20 AM

Thank you so much, @MattWho!