Unable to copy files from NiFi to HDFS

Created on 07-26-2019 05:01 AM - edited 08-17-2019 04:33 PM

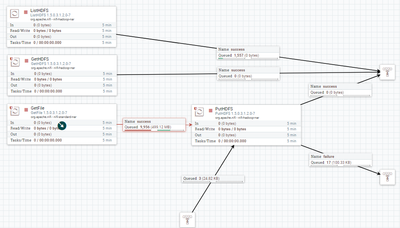

I used NiFi on the HDF sandbox (PC A) to copy files to HDFS on the HDP sandbox (PC B). NiFi can read the file list from HDFS without any error. The flow is shown below:

However, NiFi cannot get files from or put files on HDFS. The errors are shown below:

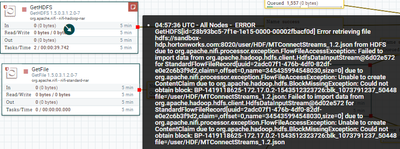

GetHDFS processor error:

PutHDFS processor error:

Can anyone help me? Thank you very much.

Created 07-28-2019 09:15 PM

By design, NiFi as a client communicates with the HDFS NameNode on port 8020, and the NameNode returns the location of the files as a DataNode address, which is a private address. Since both your HDP and HDF are sandboxes, I think you should switch both to the host-only adapter. Your stack trace will contain a statement that the client can't connect to the DataNode, and it will list the internal IP instead of 127.0.0.1. That causes the minReplication issue, etc.

Change the HDP and HDF sandbox VM network settings from NAT to Host-only Adapter.

Here are the steps:

1. Gracefully shut down the HDF sandbox.

2. Change the sandbox VM network from NAT to Host-only Adapter. It will automatically pick your LAN or wireless interface. Save the config.

3. Restart the sandbox VM.

4. Log in to the sandbox VM and use the ifconfig command to get its IP address, in my case 192.168.0.45.

5. Add the entry to /etc/hosts on the host machine, in my case: 192.168.0.45 sandbox.hortonworks.com

6. Check connectivity with telnet: telnet sandbox.hortonworks.com 8020

7. Restart NiFi (HDF).
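
The telnet check in step 6 can also be scripted. Here is a minimal Python sketch of the same idea. The helper name `port_open` is made up for illustration, and it only tests that a TCP connection can be opened, nothing HDFS-specific.

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the hostname and attempts a TCP connect.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False
```

For example, `port_open("sandbox.hortonworks.com", 8020)` checks the NameNode port used above. It is probably worth running the same check against the DataNode data transfer port as well (50010 is assumed here; verify the value in your own configuration), since the client needs to reach both.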

By default, HDFS clients connect to DataNodes using the IP address provided by the NameNode. Depending on the network configuration, this IP address may be unreachable by the clients. The fix is to let clients perform their own DNS resolution of the DataNode hostname.

If the above still fails, set dfs.client.use.datanode.hostname to true in the hdfs-site.xml that NiFi is using. The following setting enables this behavior:

    <property>
      <name>dfs.client.use.datanode.hostname</name>
      <value>true</value>
      <description>Whether clients should use datanode hostnames when connecting to datanodes.</description>
    </property>
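
If you would rather apply this change with a script than edit the file by hand, the sketch below shows one possible way using Python's standard `xml.etree.ElementTree`. The function names are hypothetical, and the file path is left to the caller because it depends on which hdfs-site.xml your NiFi processors point to. Note that ElementTree does not preserve comments when it rewrites a file, so back up the original first.

```python
import xml.etree.ElementTree as ET


def set_property(root: ET.Element, name: str, value: str) -> None:
    """Set the <value> of the named <property>, adding the property if missing."""
    for prop in root.findall("property"):
        if prop.findtext("name") == name:
            val = prop.find("value")
            if val is None:
                val = ET.SubElement(prop, "value")
            val.text = value
            return
    # Property not present yet: append a new one under <configuration>.
    prop = ET.SubElement(root, "property")
    ET.SubElement(prop, "name").text = name
    ET.SubElement(prop, "value").text = value


def enable_datanode_hostname(path: str) -> None:
    """Rewrite the given hdfs-site.xml with the client hostname setting enabled."""
    tree = ET.parse(path)
    set_property(tree.getroot(), "dfs.client.use.datanode.hostname", "true")
    tree.write(path, encoding="utf-8", xml_declaration=True)
```

After the change, NiFi still needs to be able to resolve the DataNode hostname, for example through the /etc/hosts entry described in the steps above.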

Hope that helps

Created 07-26-2019 08:46 PM

Can you check the running status and logs of the DataNode and NameNode and copy-paste them here? Did you add these two files, core-site.xml and hdfs-site.xml, to your NiFi config?

Created on 07-28-2019 06:16 PM - edited 08-17-2019 04:32 PM

Hi Geoffrey,

Thank you for your reply. Yes, I added these two files in NiFi, so the ListHDFS processor is able to list the files in HDFS. But PutHDFS cannot put files in HDFS. I guess that the communication between NiFi and the NameNode is OK, but something is wrong between NiFi and the DataNode. I don't know which configuration would affect that.

core-site.xml and hdfs-site.xml are attached.

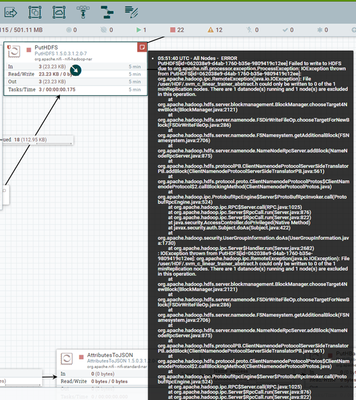

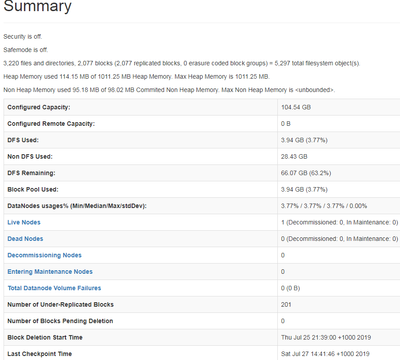

The statuses from Ambari are shown below:

Do you know where to get logs of Namenode and Datanode?

Created on 07-28-2019 02:24 AM - edited 08-17-2019 04:32 PM

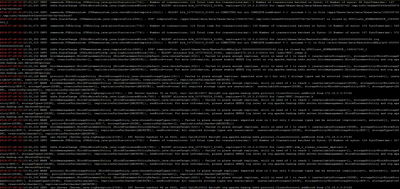

log of datanode:

log of namenode:

Created 07-28-2019 06:16 PM

Created on 07-29-2019 02:37 AM - edited 08-17-2019 04:32 PM

Hi Geoffrey,

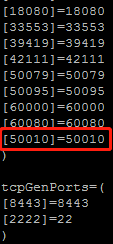

Thank you very much. The problem is solved. I found that port 50010 (the data transfer port of the HDFS DataNode) was not added to the HDP Docker container, but port 50070 (a port of the HDFS NameNode) was. So I could read the metadata of files in HDFS with the ListHDFS processor, but could not get files from or put files to HDFS with the other two processors.
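
The reasoning here can be written down as a tiny Python sketch. It is a simplification rather than a description of NiFi internals: it assumes ListHDFS only needs the NameNode (metadata), while GetHDFS and PutHDFS also need to reach a DataNode to move file contents. The function name `expected_to_work` is hypothetical.

```python
def expected_to_work(namenode_ok: bool, datanode_ok: bool) -> dict:
    """Map each NiFi HDFS processor to whether it is expected to work."""
    return {
        # Listing only reads metadata from the NameNode.
        "ListHDFS": namenode_ok,
        # Reading or writing file contents also requires a reachable DataNode.
        "GetHDFS": namenode_ok and datanode_ok,
        "PutHDFS": namenode_ok and datanode_ok,
    }
```

With the NameNode reachable and the DataNode port blocked, this gives exactly the symptom in this thread: listing succeeds while get and put fail.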

I am using the VirtualBox HDP sandbox, so I still use the default NAT network, but I changed the IP addresses to the current localhost IP in the Port Forwarding settings.

Then I accessed the Docker host terminal:

root@sandbox-hdp.hortonworks.com 2200

I modified the sandbox proxy file generate-proxy-deploy-script.sh (in /sandbox/proxy), adding port 50010 as follows, and then ran the script (./generate-proxy-deploy-script.sh):

This updates the proxy-deploy script.

Then I ran ./proxy-deploy.sh to generate a proxy container and started it (docker start sandbox-proxy).

Run NiFi:

Files are loaded into HDFS on HDP and fetched from HDFS to the NiFi local directory:

Created on 09-29-2021 10:22 AM - edited 09-29-2021 10:23 AM

Hi,

I'm very new to the HDP sandbox. I have the same issue and I'm trying to solve it. I tried changing the network settings from NAT to Host-only, but it doesn't work. I get this while the VM is starting:

Now I'm trying your solution, but what do you mean by "I still use the default NAT network but changed the IP addresses to the current localhost IP at the setting of Port forwarding"? How can I do that?

Thank you so much

Created 09-29-2021 09:55 PM

@himock as this is an older post, you would have a better chance of receiving a resolution by starting a new thread. This will also be an opportunity to provide details specific to your environment that could aid others in assisting you with a more accurate answer to your question. You can link this thread as a reference in your new post.

Regards,

Vidya Sargur, Community Manager

Created 08-01-2024 12:10 PM

it works!!!