What processor can I use to handle delimiters in a text file

- Labels: Apache NiFi

Created 09-27-2016 10:28 PM

I have text files that sometimes contain various delimiters and quoting characters, such as quotation marks, commas, and tabs. Which processor can I use to handle them, and how do I configure it for the delimiters in my file? The "Properties" section of ConvertCsvToAvro has settings similar to what I want to achieve. Thanks.

Created 09-28-2016 02:33 PM

What do you want to do with your text files? Do you want to convert them to another format and, if so, which format?

There are many existing processors in NiFi that can manipulate text:

- ReplaceText can modify the content by finding a pattern and performing a replacement

- ExtractText can find patterns and extract them into attributes

- SplitText can split the lines of a text file

- ExecuteScript can apply a Groovy/Jython script to manipulate the text

It really depends on what you want to do.

Created 09-28-2016 03:46 PM

Hi @Bryan Bende, thanks for getting back to me. To answer your questions:

What do you want to do with your text files?

I want to move delimited flat files from SFTP into a SQL database using NiFi. My concern is how NiFi handles the delimiters in the flat file. Which of the processors listed above handles the delimiters in the text file? I posted a sample data flow of what I want to achieve, and you answered it here: https://community.hortonworks.com/questions/57779/how-to-preventing-duplicates-when-ingesting-into-m...

Do you want to convert it to another format, if so what format?

No, I do not want to convert it to another format.

Created 09-28-2016 06:33 PM

In your flow from the other post you are getting a file from FetchSFTP, then splitting each line with SplitText, then using ExtractText to parse out the values, and ReplaceText to construct a SQL statement.
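The three steps in that flow can be sketched outside NiFi as follows. This is only an illustration in plain Python with an in-memory SQLite database: the `people` table, its columns, the three-column pattern, and the helper `load_lines` are all hypothetical, and a parameterized INSERT stands in for the SQL statement that ReplaceText builds in the real flow.

```python
import re
import sqlite3

# Hypothetical three-column, comma-delimited layout.
LINE_PATTERN = re.compile(r"([^,]+),([^,]+),([^,]+)")

def load_lines(content, connection):
    """Split content into lines, extract columns, and insert one row per line."""
    inserted = 0
    for line in content.splitlines():            # stands in for SplitText
        match = LINE_PATTERN.fullmatch(line)     # stands in for ExtractText
        if match is None:
            continue                             # lines that do not parse are skipped
        # Stands in for ReplaceText: a parameterized INSERT instead of string building.
        connection.execute(
            "INSERT INTO people (name, age, city) VALUES (?, ?, ?)",
            match.groups(),
        )
        inserted += 1
    return inserted

connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE people (name TEXT, age TEXT, city TEXT)")
count = load_lines("alice,42,NYC\nbob,37,LA", connection)
rows = connection.execute("SELECT name, age, city FROM people ORDER BY rowid").fetchall()
print(count, rows)
```

Only the extraction step depends on the delimiter; the split and insert steps stay the same whatever the delimiter is.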

The ExtractText processor is the one that needs to understand the delimiter in order to get the value of each column. Since your flow was working, you must have already configured ExtractText with a pattern to parse the line, right? So are you just asking how to handle more delimiters?

Created on 09-29-2016 12:34 AM - edited 08-18-2019 03:32 AM

@Bryan Bende Thanks once again for getting back to me. I will answer your questions one by one:

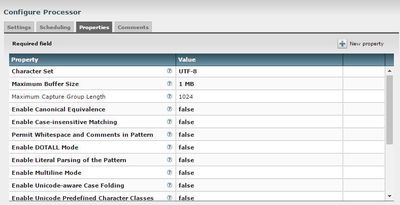

(1) Since your flow was working, you must have already configured ExtractText with a pattern to parse the line, right?

Yes, I used a regular expression to parse the line (see the attached config image), but I do not think that is the best way to handle this. I would rather parse the lines using the delimiter.

(2) So are you just asking how to handle more delimiters?

Yes, that would be helpful.

Created 09-29-2016 03:48 PM

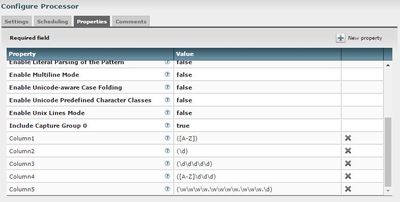

There isn't a processor made specifically for parsing delimited lines, mostly because you can already do that with ExtractText. You should only need one pattern to parse the whole line. Say I have a simple CSV with 4 columns; you could have one property like this:

csv = (.+),(.+),(.+),(.+)

That will add attributes csv.1, csv.2, csv.3, csv.4 containing each respective column.
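To see what that pattern captures, here is a minimal sketch using Python's `re` module rather than NiFi itself. The `csv.N` keys mirror the attribute names described above; the sample line and the helper name `extract_columns` are made up for illustration, and the sketch does not try to reproduce how ExtractText anchors its match.

```python
import re

# The same pattern as the ExtractText property above.
PATTERN = re.compile(r"(.+),(.+),(.+),(.+)")

def extract_columns(line, prefix="csv"):
    """Return a dict keyed like the attributes described above (csv.1, csv.2, ...)."""
    match = PATTERN.fullmatch(line)
    if match is None:
        return {}  # the line does not have four comma-separated parts
    return {f"{prefix}.{i}": value for i, value in enumerate(match.groups(), start=1)}

attributes = extract_columns("alice,42,NYC,2016-09-29")
print(attributes)
```

One caveat with `(.+)`: the groups are greedy and will also match commas, so a line with more commas than expected still matches but the column boundaries shift. A stricter group such as `([^,]+)` rejects such lines instead.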

You could have different instances of ExtractText to handle the different types of delimiters; you would need to route the data to each one appropriately.

For a more user-friendly option, you could implement a custom processor such as ParseCSV or ParseDelimited, with a property that takes the delimiter, and use a parsing library or your own code to parse the line.

A second alternative is to write a Groovy or Jython script to do the parsing and use the ExecuteScript processor.
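As a rough illustration of the parsing such a script would do, the sketch below uses Python's standard `csv` module, which handles quoted fields that contain the delimiter. It is standalone Python, not an ExecuteScript body: reading the flow file and writing attributes through the NiFi scripting API is left out, and `parse_delimited` is a hypothetical helper name.

```python
import csv

def parse_delimited(line, delimiter=",", quotechar='"'):
    """Parse one delimited line into a list of column values."""
    reader = csv.reader([line], delimiter=delimiter, quotechar=quotechar)
    return next(reader, [])

comma_row = parse_delimited('"Smith, John",42,NYC')
tab_row = parse_delimited("a\tb\tc", delimiter="\t")
print(comma_row)
print(tab_row)
```

Taking the delimiter as a parameter is the same idea as the delimiter property suggested for a custom processor: one parser covers commas, tabs, and semicolons.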

Created 09-29-2016 09:13 PM

@Bryan Bende Let's say I go the route of using different ExtractText processors to handle different delimiters. How do I go about that? I am quite confused here (a concrete example would be helpful). From my understanding, the ExtractText processor will parse a file regardless of its delimiters, and what actually matters is the regular expression used to extract the data. Correct me if I am wrong. I also tried replicating your example above: the ingestion of the flow file was successful, but there was no data in the database tables.

Created 09-30-2016 06:51 PM

I'm not sure if this is what you are looking for, but here is an example flow that generates data like:

A,B,C,D

1;2;3;4

It then splits the data into two lines, routes the first line to an ExtractText set up to parse commas, and routes the second line to a different ExtractText that parses semicolons.
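The logic of that flow can be approximated in plain Python. This is a sketch only: the routing rule (checking which delimiter character appears in the line) is an assumption, since the post does not say how the flow routes each line, and the names `PATTERNS`, `route`, and `process` are hypothetical.

```python
import re

# One pattern per delimiter, standing in for the two ExtractText instances.
PATTERNS = {
    ",": re.compile(r"([^,]+),([^,]+),([^,]+),([^,]+)"),
    ";": re.compile(r"([^;]+);([^;]+);([^;]+);([^;]+)"),
}

def route(line):
    """Pick the delimiter for a line, standing in for the routing step."""
    for delimiter in PATTERNS:
        if delimiter in line:
            return delimiter
    return None

def process(content):
    """Split content into lines, route each line, and extract its four columns."""
    results = []
    for line in content.splitlines():
        delimiter = route(line)
        if delimiter is None:
            continue  # lines with no known delimiter are skipped in this sketch
        match = PATTERNS[delimiter].fullmatch(line)
        if match:
            results.append(list(match.groups()))
    return results

rows = process("A,B,C,D\n1;2;3;4")
print(rows)
```

Supporting another delimiter, such as a tab, means adding one more pattern and one more route, which corresponds to one more ExtractText instance in the flow.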