Support Questions

- Cloudera Community

incremental load from mysql to hdfs in hadoop

- Labels: Apache Hadoop, Apache Sqoop

Created on 03-18-2017 03:53 AM - edited 08-18-2019 04:34 AM

I am doing an incremental load from MySQL into an HDFS directory.

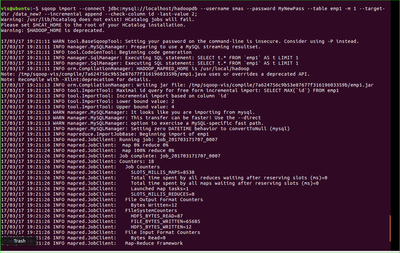

sqoop import --connect jdbc:mysql://localhost/hadoopdb --username smas --password MyNewPass --table emp1 -m 1 --target-dir /data_new7 --incremental append --check-column id --last-value 2

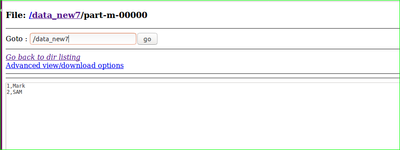

I already have /data_new7/part-m-00000. Did the import not work?

How can I make sure that part-m-00000 is updated with the 3rd row (id 3)?

It looks like the new row is being written as a separate table. Any suggestions?
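For readers following along, here is a minimal local sketch of what `--incremental append --check-column id --last-value 2` selects. It is plain shell and does not call Sqoop; the CSV rows and names are made up for illustration, and `awk` only stands in for the comparison Sqoop applies to the check column (rows with a value greater than the last value).

```shell
#!/bin/sh
# Hypothetical contents of the source table emp1 as CSV rows (id,name).
src=$(mktemp)
printf '1,alice\n2,bob\n3,carol\n' > "$src"

last_value=2   # corresponds to --last-value 2

# Append mode picks up only rows whose check column (id) is greater than last_value.
new_rows=$(awk -F, -v last="$last_value" '$1 > last' "$src")
echo "$new_rows"

# The highest id just imported is the value to pass as --last-value next time.
next_last_value=$(echo "$new_rows" | awk -F, '$1 > max { max = $1 } END { print max }')
echo "next --last-value: $next_last_value"
```

Only the new row is written on each run, which is why it ends up in its own part file rather than inside part-m-00000.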

Created 03-18-2017 08:22 AM

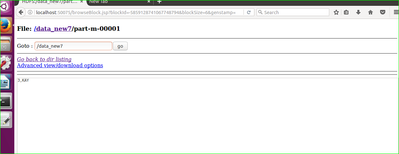

It worked. part-m-00001 is not a separate table; it is just another file in your import directory. If you create an external table on /data_new7, Hive will see a single table with 3 rows. The same goes for MapReduce jobs that take /data_new7 as their input. If you end up with many small files, you can merge them into one from time to time, for example with hadoop-streaming: see this example and set "mapreduce.job.reduces=1".
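A minimal local sketch of the same idea, assuming made-up sample rows and a temporary local directory standing in for the HDFS import directory (no Hadoop needed to run it). It shows that the part files together form one dataset, and that merging them is just concatenation. On a real cluster the local `cat` would roughly correspond to `hdfs dfs -cat` on the directory or `hadoop fs -getmerge`.

```shell
#!/bin/sh
# Local stand-in for the import directory; the rows below are hypothetical.
dir=$(mktemp -d)

# Initial import: rows with id 1 and 2 land in part-m-00000.
printf '1,alice\n2,bob\n' > "$dir/part-m-00000"

# Incremental append with --last-value 2: the new row goes to a new file.
printf '3,carol\n' > "$dir/part-m-00001"

# A reader of the directory (Hive external table, MapReduce input)
# sees all part files together, i.e. one table with every row.
row_count=$(cat "$dir"/part-m-* | wc -l | tr -d ' ')
echo "rows visible in directory: $row_count"

# Occasional compaction: merge the small part files into a single file.
cat "$dir"/part-m-* > "$dir/merged.csv"
merged_count=$(wc -l < "$dir/merged.csv" | tr -d ' ')
echo "rows in merged file: $merged_count"
```

Nothing is lost or duplicated by the merge; it only reduces the number of files a job has to open.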
