Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: putDatabaseRecord really slow

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

putDatabaseRecord really slow

- Labels:

-

Apache NiFi

Created 03-06-2024 06:10 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi there,

I am experiencing really slow ingestion with putDatabaseRecord process.

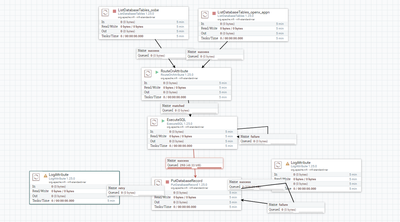

The flow is simple - query data from mySQL 5.6 and ingesting it into redshift.

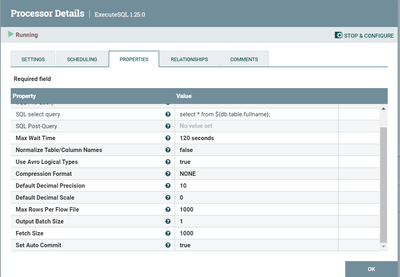

Currently im just testing it so I list only specific tables from those 2 databases and then I query 'select * from $db.tablename' - but the ingestion using putDatabaseRecord is terribly slow (100 rows 5min).

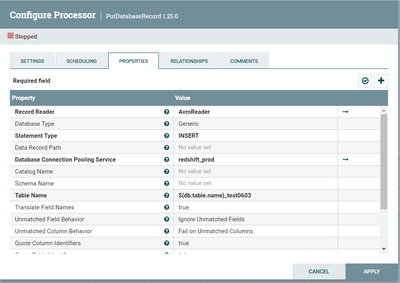

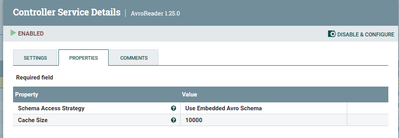

I am using AvroReader inside putDatabaseRecord but didnt make any changes to the avroreader - I just make sure flow files be small (100 rows).

Does AvroReader split the 100 rows into 100 SQL statements?

How do I fix this to have better performance?

Created 03-06-2024 09:05 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@LiranJP Welcome to the Cloudera Community!

To help you get the best possible solution, I have tagged our NiFi experts @mburgess @MattWho who may be able to assist you further.

Please keep us updated on your post, and we hope you find a satisfactory solution to your query.

Regards,

Diana Torres,Community Moderator

Was your question answered? Make sure to mark the answer as the accepted solution.

If you find a reply useful, say thanks by clicking on the thumbs up button.

Learn more about the Cloudera Community:

Created 03-06-2024 01:07 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

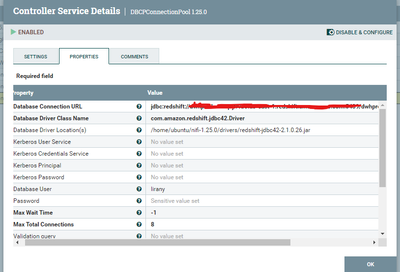

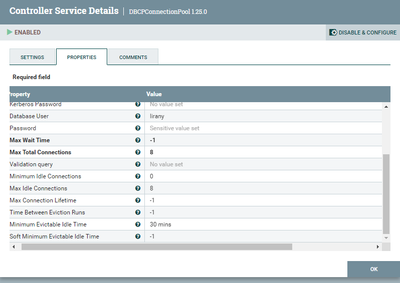

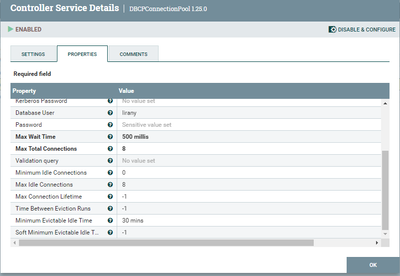

How is your DBCPConnectionPool configured?

Created on 03-06-2024 10:32 PM - edited 03-07-2024 12:46 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

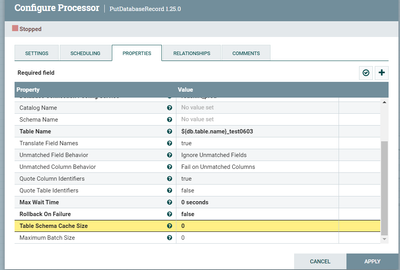

That's the redshift connection:

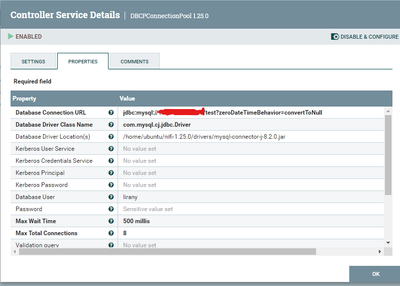

That's the mySQL connection:

I do understand that INSERTs on redshift might be slow and that's why people are using COPY command but 100 rows in 5min is weird.

I know that for BigQuery for example there's a dedicated processor in order to load data but couldn't find anything for RedShift so that's why I am using putDatabaseRecord.

Edit - After I loaded 100 rows I exported the same 100 rows into an INSERT SQL statement and run it directly on the redshift, this task took 1.1 second.

Edit2 - Since im the kind of guy that loves doing my job and to find solution I found this -

reWriteBatchedInserts=true;reWriteBatchedInsertsSize=102400;

I found this on some reddit post by chance, this is working way better now but I couldn't find some details regard those parameters to make them optimized and still that's not the best (still little bit slow).

I am not sure that's the solution so i'm still waiting for your input as well.

Also, if I would like to change it to drop the Avro/flow files into S3 and then execute COPY command from NIFI is it possible?

Created 03-09-2024 11:10 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@mburgess Any update from your side?