Community Articles

How to use Templating to make Data Engineering wo...

Created on 04-25-2024 09:04 PM, edited on 05-03-2024 02:30 AM by VidyaSargur

Overview

Once Data Engineers complete the development and testing of their workloads, moving to deployment can be a challenging task. This process often involves a lengthy checklist, including the modification of multiple configurations that differ across environments. For instance, the configuration spark.yarn.access.hadoopFileSystems must now point to the Data Lake or Data Store appropriate for the production environment. Data Engineers may also opt to use a "staging" environment to test job performance, necessitating changes to these configurations twice: initially from development to staging, and subsequently for production deployment.

A recommended practice in such cases is to template the configurations within the workload, which makes deployments across different environments easier to manage. In this article, we demonstrate a pattern for templating Data Engineering workloads orchestrated by Airflow in Cloudera Data Engineering. This best practice makes workload deployment environment-agnostic and minimizes the effort of moving workloads across environments, for example from development to staging to production.

Prerequisites:

- Familiarity with the Cloudera Data Engineering service (see references for further details)

- Familiarity with Airflow and Python

Methodology

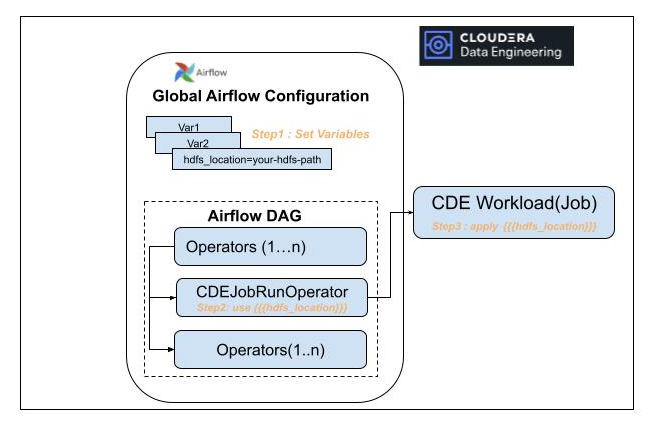

The diagram above shows the templating pattern for a workload orchestrated by Airflow in Cloudera Data Engineering (CDE). With this pattern, every job receives the correct value for each variable. For example, we template hdfs_location, which can vary across deployment environments. Our goal is that changing hdfs_location in the Airflow settings of a specific environment automatically updates the jobs that use it.
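To make the idea concrete, here is a toy Python sketch of what the pattern buys you: one template string in the DAG resolves to a different value in each environment. It is a simplification, assuming references of the form {{ var.value.NAME }} where NAME is made of letters, digits and underscores; Airflow itself renders templates with Jinja, and the environment names and paths below are hypothetical placeholders.

```python
import re
from typing import Mapping

# Matches references such as "{{ var.value.hdfs_location }}" (spaces optional).
# Toy stand-in for Airflow's Jinja rendering, for illustration only.
VAR_REF = re.compile(r"\{\{\s*var\.value\.(\w+)\s*\}\}")


def render(template: str, variables: Mapping[str, str]) -> str:
    """Replace each {{ var.value.NAME }} with variables[NAME]; KeyError if NAME is undefined."""
    return VAR_REF.sub(lambda m: variables[m.group(1)], template)


# Hypothetical Airflow variables, one set per environment (placeholder paths).
ENVIRONMENTS = {
    "development": {"hdfs_location": "s3a://dev-datalake"},
    "staging": {"hdfs_location": "s3a://staging-datalake"},
    "production": {"hdfs_location": "s3a://prod-datalake"},
}

# The DAG ships this one string unchanged to every environment.
TEMPLATE = "{{ var.value.hdfs_location }}"

if __name__ == "__main__":
    for env, env_vars in ENVIRONMENTS.items():
        print(f"{env}: {render(TEMPLATE, env_vars)}")
```

Changing hdfs_location in one environment's Airflow changes only that environment's rendered value; the DAG file itself never changes.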

The setup consists of three major steps:

- Step 1: Airflow Configuration Setup. We set the variables in the Airflow Admin settings.

- Step 2: CDEJobRunOperator modification. We use the Jinja templating format inside the CDEJobRunOperator to pass these variables to the CDE job.

- Step 3: CDE Job modification. We apply the variables in the workload, for example a PySpark or Scala job.

Let us work through a real-life example to see how to execute these three steps in Cloudera Data Engineering (CDE).

Step 1: Airflow Configuration Setup

In Cloudera Data Engineering, access the Airflow configuration for a virtual cluster as follows:

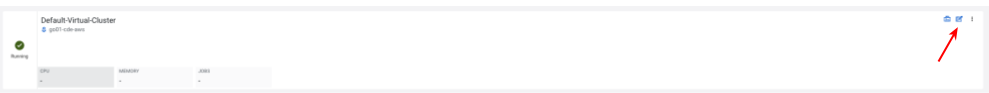

- Click your CDE service and select the virtual cluster you want to use.

- Click the Cluster Details icon, as shown below:

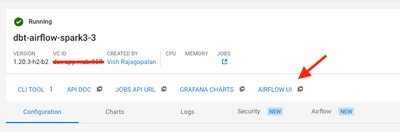

- Next, click on the Airflow UI as shown below to launch the Airflow application user interface:

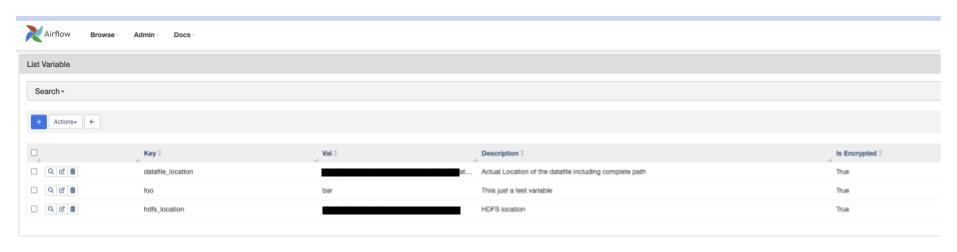

- In the Airflow user interface, open the Admin menu and select the Variables sub-menu. The page that opens, shown below, lists the variables currently configured in Airflow. To add a new variable, click the blue + sign to open the Add Variable page.

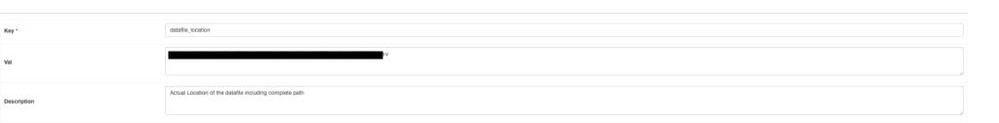

- Add a new variable called datafile_location that holds the location of the data file your Spark application reads. Enter the actual path as the variable's value, and add a description in the appropriate field.

- To change the path later, use the same page: select the row and click the edit option in front of the key.
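If you maintain several environments, typing each variable by hand is error prone. The following sketch builds one flat JSON file of variables per environment, assuming your Airflow UI offers the standard Import Variables option on the Variables page (or that you can run `airflow variables import`). The environment names and paths are hypothetical; the sketch also includes hdfs_location, the variable that the Methodology example and the operator sample in Step 2 rely on.

```python
import json
from typing import Dict, Mapping

# Hypothetical per-environment values; replace the paths with your own.
ENV_VARIABLES = {
    "dev": {
        "datafile_location": "s3a://dev-bucket/data/input.csv",
        "hdfs_location": "s3a://dev-bucket",
    },
    "prod": {
        "datafile_location": "s3a://prod-bucket/data/input.csv",
        "hdfs_location": "s3a://prod-bucket",
    },
}


def build_variable_files(env_variables: Mapping[str, Mapping[str, str]]) -> Dict[str, str]:
    """Return {file name: JSON text}, one flat key/value object per environment."""
    expected = set(next(iter(env_variables.values())))
    files = {}
    for env, variables in env_variables.items():
        # Every environment should define the same keys; otherwise a template
        # that renders in one environment may fail in another.
        if set(variables) != expected:
            raise ValueError(f"{env} defines {sorted(variables)}, expected {sorted(expected)}")
        files[f"airflow_variables_{env}.json"] = json.dumps(dict(variables), indent=2, sort_keys=True)
    return files


if __name__ == "__main__":
    # Print each file; save them separately and import the one for the target environment.
    for name, text in build_variable_files(ENV_VARIABLES).items():
        print(f"# {name}\n{text}")
```

Keeping all environments in one place makes it easy to spot a key that exists in development but was never created in production.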

This concludes the Airflow configuration. In the next section, we modify the Airflow operator that calls the CDE job.

Step 2: CDEJobRunOperator modification

Important Note: You can modify the CDEJobRunOperator in two ways based on how you have generated your Airflow DAG.

- Option 1: If you have created the Airflow DAG through code, use the Manual CDEJobRunOperator Modification section.

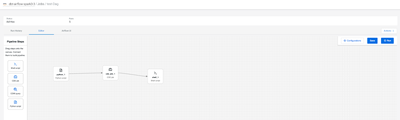

- Option 2: If you have used the CDE Airflow UI Editor for creating the Airflow job, then Modifying in Pipeline Editor Option ( Refer picture below for the Airflow UI Editor in CDE)

Option 1: Manual CDEJobRunOperator Modification

Note: Use this option only if you coded your Airflow job manually and did NOT use the CDE pipeline editor. If you used the pipeline editor, use Option 2.

In this step, we modify the CDEJobRunOperator to read the variables from the Airflow configuration and pass them on to the CDE job. To access Airflow variables, we need to:

- Use the Jinja templating syntax, so each variable must be enclosed in double curly braces: {{ full-variable-name }}.

- Use the Airflow object structure, so variables are always accessed as var.value.variable-name. Check the references for more details on Airflow variable naming and access methods.

The sample below shows how to access the variables defined in Airflow inside the CDEJobRunOperator:

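    # Import path used in CDE Airflow DAGs; `dag` is the DAG object defined earlier in the file.
    from cloudera.cdp.airflow.operators.cde_job_run_operator import CDEJobRunOperator

    cde_job_1 = CDEJobRunOperator(
        job_name='some_data_exploration_job',
        # Rendered by Airflow at run time and passed to the CDE job as a job variable.
        variables={'datafile_location': '{{ var.value.datafile_location }}'},
        # Point Spark at the file system of the current environment.
        overrides={'spark': {'conf': {'spark.yarn.access.hadoopFileSystems': '{{ var.value.hdfs_location }}'}}},
        trigger_rule='all_success',
        task_id='cde_job_1',
        dag=dag,
    )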

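This concludes the changes needed in the DAG to access the variables set up in the Airflow configuration. Note that the sample also reads hdfs_location, which must be added as an Airflow variable in the same way as datafile_location in Step 1.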
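Before promoting a DAG, it helps to confirm that every variable the operator references is defined in the target environment's Airflow. This sketch collects the var.value names from nested operator arguments such as variables and overrides, and compares them with a hypothetical set of defined variables. It assumes names made of letters, digits and underscores and is not part of any Cloudera or Airflow API.

```python
import re
from typing import Any, Set

# Reference format assumed: {{ var.value.NAME }}, NAME = letters, digits, underscores.
VAR_REF = re.compile(r"\{\{\s*var\.value\.(\w+)\s*\}\}")


def referenced_variables(value: Any) -> Set[str]:
    """Collect every var.value.NAME used in a string or in nested dicts, lists and tuples."""
    if isinstance(value, str):
        return set(VAR_REF.findall(value))
    if isinstance(value, dict):
        children = list(value.values())
    elif isinstance(value, (list, tuple)):
        children = list(value)
    else:
        return set()  # numbers, None and other types hold no templates
    found: Set[str] = set()
    for child in children:
        found |= referenced_variables(child)
    return found


# The templated arguments of the CDEJobRunOperator sample in Option 1.
operator_kwargs = {
    "variables": {"datafile_location": "{{ var.value.datafile_location}}"},
    "overrides": {"spark": {"conf": {
        "spark.yarn.access.hadoopFileSystems": "{{var.value.hdfs_location}}"}}},
}

# Hypothetical: the variables already defined in the target environment's Airflow.
defined_in_target = {"datafile_location"}

if __name__ == "__main__":
    missing = referenced_variables(operator_kwargs) - defined_in_target
    print("Missing Airflow variables:", sorted(missing))
```

Here the check reports hdfs_location, which is exactly the variable that would otherwise be discovered only when the task fails to render.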

Option 2: Modifying in the Pipeline Editor

Note: Use this option ONLY if you used the CDE pipeline editor. If you wrote the Airflow DAG in code, use Option 1 instead.

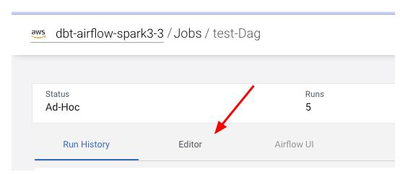

CDE provides a visual pipeline editor for those who prefer a drag-and-drop interface for creating simple Airflow pipelines. Note that not all Airflow operators are supported there. If the Airflow job and its CDEJobRunOperator were created with the pipeline editor, we set up the variables inside the pipeline. To do so, select the Airflow job that was created with the pipeline editor and select Editor, as shown below:

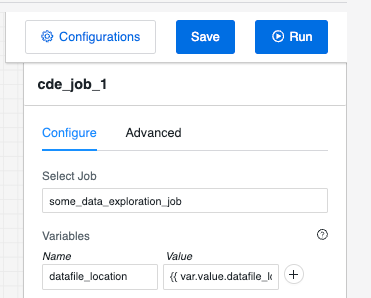

In the Editor, click the CDEJobRunOperator and add the variable with the following name and value:

- In the Name text box enter: datafile_location

- In the Value text box enter: {{var.value.datafile_location}}

Important Note: The curly braces {{ and }} are mandatory to ensure the variable has the right template format.
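A quick way to catch a missing pair of braces before saving the pipeline is a check like the one below. It is a hypothetical helper, assuming the value should be exactly one {{ var.value.NAME }} reference; without the double braces, Jinja leaves the text unchanged, so the literal string would most likely be passed instead of the variable's value.

```python
import re

# Exactly one {{ var.value.NAME }} reference and nothing else (hypothetical rule).
TEMPLATE_VALUE = re.compile(r"\{\{\s*var\.value\.\w+\s*\}\}")


def is_variable_template(value: str) -> bool:
    """True if the whole value is a single {{ var.value.NAME }} reference."""
    return TEMPLATE_VALUE.fullmatch(value.strip()) is not None


if __name__ == "__main__":
    for candidate in [
        "{{var.value.datafile_location}}",  # correct
        "var.value.datafile_location",      # braces missing
        "{var.value.datafile_location}",    # single braces
    ]:
        verdict = "ok" if is_variable_template(candidate) else "passed as literal text"
        print(f"{candidate}: {verdict}")
```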

Step 3: CDE Job Modification

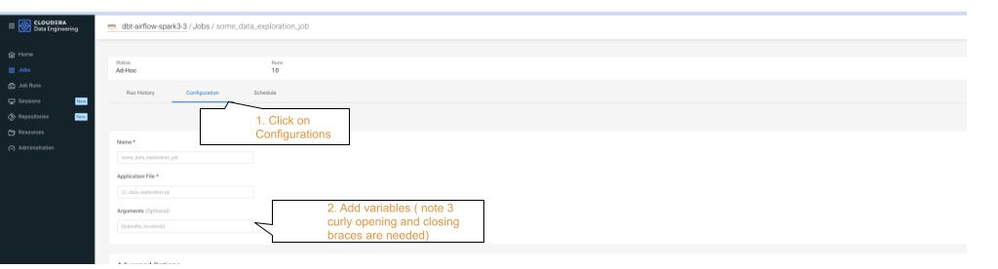

To access the variables inside the CDE job, we need to set up the CDE job with the same variable names. There are two ways to do this: the user interface or the command line interface. Here, we set up datafile_location in the user interface.

The picture above shows the changes you need to make to your job so that it accepts arguments.

- First, open the CDE job that your Airflow DAG calls (or create a new one).

- Add an argument that uses the same variable name you used in Airflow and in the CDEJobRunOperator.

Important Note: Wrap the variable name in three opening and three closing curly braces, for example {{{ datafile_location }}}; otherwise, the variable will not receive the value you need.
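The same kind of check can be applied to the CDE job's arguments. This hypothetical helper flags arguments that look like templates but are not wrapped in exactly three curly braces on each side, and names that the CDEJobRunOperator does not pass. It assumes that arguments without any braces are ordinary literal arguments and leaves them alone; it models the rule stated in the note above, not CDE's own validation.

```python
import re
from typing import Iterable, List

# Three opening braces, a name, three closing braces, e.g. "{{{ datafile_location }}}".
CDE_ARG = re.compile(r"\{\{\{\s*(\w+)\s*\}\}\}")


def check_job_arguments(arguments: Iterable[str], operator_variables: Iterable[str]) -> List[str]:
    """Return a list of problems; an empty list means every templated argument looks right."""
    known = set(operator_variables)
    problems = []
    for arg in arguments:
        text = arg.strip()
        if "{" not in text and "}" not in text:
            continue  # assumed to be an ordinary literal argument
        match = CDE_ARG.fullmatch(text)
        if match is None:
            problems.append(f"{arg!r}: expected exactly three curly braces on each side")
        elif match.group(1) not in known:
            problems.append(f"{arg!r}: {match.group(1)!r} is not passed by the CDEJobRunOperator")
    return problems


if __name__ == "__main__":
    operator_variables = ["datafile_location"]  # keys of the operator's `variables` dict
    for args in (["{{{ datafile_location }}}"], ["{{ datafile_location }}"], ["{{{ data_location }}}"]):
        print(args, check_job_arguments(args, operator_variables) or "ok")
```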

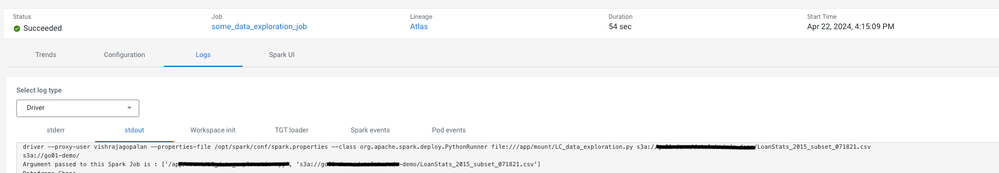

Finally, with these configurations in place, you can access the variables inside your CDE job through the sys package, because they are passed to the job as arguments. Here I printed the Spark configuration and the variables inside a PySpark job that is called by the CDEJobRunOperator defined earlier; the argument check looks like this:

    import sys

    print(f"Argument passed to this Spark Job is : {sys.argv}")

We can use this simple test to check whether the variable value is captured as an argument. As the output shows, the first argument contains the datafile_location value we set in Airflow, so we can now use it in the job.
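Once the printout confirms the value arrives, the job can read it explicitly instead of printing the whole list. A minimal sketch, assuming datafile_location is the job's only (and therefore first) argument; the function name is hypothetical, and the Spark read is left as a comment so the snippet runs without PySpark.

```python
import sys
from typing import Sequence


def get_datafile_location(argv: Sequence[str]) -> str:
    """Return the first job argument, assumed to be the templated datafile_location."""
    # argv[0] is the script path, so job arguments start at argv[1].
    if len(argv) < 2 or not argv[1].strip():
        raise ValueError("expected datafile_location as the first job argument")
    return argv[1]


if __name__ == "__main__":
    if len(sys.argv) > 1:
        location = get_datafile_location(sys.argv)
        print(f"Reading data from: {location}")
        # In the real PySpark job, pass `location` to a reader, for example:
        # df = spark.read.csv(location, header=True)
    else:
        print("No job arguments received; check the {{{ datafile_location }}} argument.")
```

Failing early with a clear message is easier to diagnose in the job logs than a Spark error about an empty or literal path.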

This concludes our three-step process for templating Cloudera Data Engineering workloads. With this templating approach, we can deploy code outside the development environment without modifying it; we only need to add or edit the variables in Airflow for the code to work in a new environment such as staging or production.