Datanodes report block count more than threshold on datanode and Namenode

Labels: Cloudera Manager, HDFS

Created 04-21-2017 04:42 PM

Hi,

I have a 3-node cluster running on CentOS 6.7. For the past week I have seen a warning on all 3 nodes that the block count is above the threshold. My NameNode is also used as a DataNode.

It's more or less like this on all 3 nodes:

Concerning : The DataNode has 1,823,093 blocks. Warning threshold: 500,000 block(s).

I know this points to a growing number of small files. I have unstructured website data on HDFS, made up of jpg, mpeg, css, js, xml, and html files.

I don't know how to deal with this problem. Please help.

The output of the following command on NameNode is:

[hdfs@XXXXNode01 ~]$ hadoop fs -ls -R / |wc -l

3925529

Thanks,

Shilpa
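The `wc -l` count above includes directories and says nothing about file sizes. A minimal sketch for seeing how many of those entries are small files, assuming the usual 8-column `hadoop fs -ls -R` listing (permissions, replication, owner, group, size in bytes, date, time, path). The function name `count_small_files` is hypothetical, and the 128 MB default is only taken from the `dfs blocksize` mentioned later in this thread:

```shell
#!/usr/bin/env bash
# Read `hadoop fs -ls -R` output on stdin and print "<small> <total>":
# the number of files below the size limit and the number of files overall.
# Assumption: column 5 is the file size in bytes and directory lines start with "d".
count_small_files() {
  local limit_bytes="${1:-134217728}"   # default 128 MB
  awk -v limit="$limit_bytes" '
    /^-/ { total++; if ($5 + 0 < limit + 0) small++ }   # regular files only
    END  { printf "%d %d\n", small + 0, total + 0 }
  '
}

# Usage against a live cluster (not run here):
#   hadoop fs -ls -R / | count_small_files 134217728
```

If nearly every file falls under the limit, each file occupies its own block, which is what pushes the per-DataNode block count up.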

Created 04-22-2017 11:09 AM

You can try any one, two, or all of these options:

1. CM -> HDFS -> Actions -> Rebalance

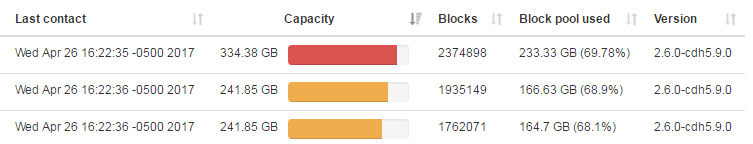

2. a. CM -> HDFS -> Web UI -> NameNode Web UI (opens a new page) -> Datanodes menu -> check the block count under each node.

b. CM -> HDFS -> Configuration -> DataNode Block Count Thresholds -> increase the block count threshold so that it is greater than the count found in step a.

3. Files deleted from HDFS are moved to trash and purged automatically after a while, so make sure automatic deletion is working; if it is not, purge the trash directory. Also delete unwanted files from the hosts to free some disk space.
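For option 3, the trash can be inspected and purged from the command line. A minimal sketch, assuming it runs as the HDFS user whose trash should be checked and that trash sits at the default `.Trash` directory under that user's HDFS home; the function name `check_and_expunge_trash` is hypothetical:

```shell
#!/usr/bin/env bash
# Show the size of the current user's HDFS trash, then ask HDFS to purge it.
check_and_expunge_trash() {
  # Relative HDFS paths resolve against the user's home directory (/user/<name>).
  hdfs dfs -du -s -h .Trash
  # Removes trash checkpoints older than the retention interval and creates a new checkpoint.
  hdfs dfs -expunge
}
```

Note that `-expunge` only removes checkpoints that have outlived the configured trash interval, so recently deleted files may remain until that interval passes.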

Created 04-26-2017 02:28 PM

Thanks @saranvisa.

I tried a rebalance, but it did not help.

The number of blocks on each node is too high, and my threshold is 500,000. Do you think adding some disks to the servers and then changing the threshold would help?

Created 04-27-2017 07:12 AM

It seems that in your case you have to:

1. Add an additional disk/node.

2. Try the rebalance again after that.

3. Change the threshold to a reasonable value, because this threshold helps you fix the issue before something breaks.

Created 04-27-2017 11:26 PM

@ShilpaSinha What is the total size of your cluster, and how many blocks does it hold in total?

It seems you are writing too many small files.

1- Adding more nodes or disks to handle the number of blocks isn't the ideal solution.

2- If the NameNode host has enough memory and storage in the cluster is fine, just give the NameNode service more memory.

3- If you have scheduled jobs, try to figure out which job is writing too many files and reduce its frequency.

4- The best way to handle this issue is to run a compaction process, either as part of the job or as a separate one.
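One possible compaction route for static files such as the website assets described earlier is a Hadoop Archive (HAR), which packs many small files into a few large part files. This is only a sketch of that one approach, not necessarily what was meant above; the function name `archive_small_files` and every path passed to it are hypothetical:

```shell
#!/usr/bin/env bash
# Pack one directory of small files into a single Hadoop Archive.
# Arguments: archive name (without .har), parent path, source path relative
# to the parent, destination directory for the archive.
archive_small_files() {
  if [ "$#" -ne 4 ]; then
    echo "usage: archive_small_files <name> <parent> <src-relative-to-parent> <dest>" >&2
    return 2
  fi
  local name="$1" parent="$2" src="$3" dest="$4"
  # Runs a MapReduce job that writes <dest>/<name>.har; the source files are left in place.
  hadoop archive -archiveName "${name}.har" -p "$parent" "$src" "$dest"
}

# Usage (hypothetical paths, not run here):
#   archive_small_files site2017 /data/web images /data/archive
```

The block count only drops once the archive has been verified and the original small files have been deleted, and archived files are then read through a `har://` path instead of their old location.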

Created 04-28-2017 07:44 AM

@ShilpaSinha I also agree with @Fawze, because there are multiple ways to address the same issue.

I suggested adding an additional node in my second update because the total capacity of your cluster is very small (not even 1 TB). So if you can afford it, add an additional node, and also consider the suggestion from @Fawze to compact the small files.

As mentioned already, this is not an "either this or that" choice; you can try all the possible options to keep your environment healthy.

Created 05-03-2017 10:10 AM

Hi @saranvisa and @Fawze,

I have increased the RAM of my NN from 56 GB to 112 GB and increased the heap and Java memory settings in the configs. However, I still face the issue. Due to some client work I cannot add disks until this Friday. I will update once I do that.

Thanks

Created 05-03-2017 10:16 AM

125 GB of memory for the NN service is too much. Increasing the memory won't solve the issue, though it will keep the NN from being overloaded.

Created on 05-03-2017 10:20 AM - edited 05-03-2017 10:21 AM

My current main configs after increasing the memory are:

yarn.nodemanager.resource.memory-mb - 12GB

yarn.scheduler.maximum-allocation-mb - 16GB

mapreduce.map.memory.mb - 4GB

mapreduce.reduce.memory.mb - 4GB

mapreduce.map.java.opts.max.heap - 3GB

mapreduce.reduce.java.opts.max.heap - 3GB

namenode_java_heapsize - 6GB

secondarynamenode_java_heapsize - 6GB

dfs_datanode_max_locked_memory - 3GB

dfs blocksize - 128 MB

Do you think I should change something?
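As a rough sanity check on `namenode_java_heapsize`, a commonly cited rule of thumb from Cloudera's sizing guidance is about 1 GB of NameNode heap per million blocks. The sketch below applies that rule; the function name `estimate_nn_heap_gb` is hypothetical, and it assumes the NameNode tracks roughly as many blocks as one DataNode reports, which would hold if the replication factor is 3 on this 3-node cluster:

```shell
#!/usr/bin/env bash
# Rough NameNode heap estimate in GB: 1 GB per million blocks, rounded up, minimum 1.
# This is a rule of thumb, not a measurement of actual heap usage.
estimate_nn_heap_gb() {
  local blocks="$1"
  local gb=$(( (blocks + 999999) / 1000000 ))   # integer ceiling of blocks / 1,000,000
  if [ "$gb" -lt 1 ]; then
    gb=1
  fi
  echo "$gb"
}

# Usage with the block count from the warning in this thread:
#   estimate_nn_heap_gb 1823093
```

For 1,823,093 blocks this prints 2, so under that rule the 6 GB heap listed above already has headroom, which is consistent with more memory not clearing the warning.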

Created 05-03-2017 12:58 PM

You can even reduce yarn.nodemanager.resource.memory-mb.