HDFS - Under-Replicated Blocks, missing Blocks

Created on 11-09-2015 08:19 PM - edited 09-16-2022 02:48 AM

Hi,

My four-node cluster (1 name node, 3 data nodes) just recovered from a hard drive failure. I had to reformat three of the data node drives (all on node 4) and remount them at their designated locations.

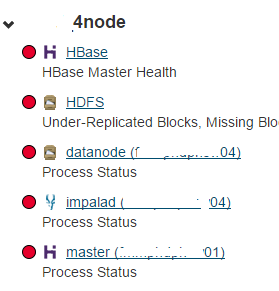

CDH manager shows the following health issues, and the Impala and HBase services are stopped.

The hard drive failure was on node 4; node 1 is the master.

HDFS shows under-replicated blocks (1 block is missing in total and around 99% are under-replicated).

How can I solve these issues? As I understand it, HDFS should regenerate under-replicated blocks by itself. If so, how long should that take, and is there a way to expedite the process?

If not, how can I regenerate such blocks manually?

Thanks

Created 11-10-2015 09:35 AM

The following steps worked for me:

- In my case the node with the disk failures (#4) was not coming up as a data node; it was stopped.

- I tried starting it through Services > HDFS > node4 > Start this DataNode, but that failed.

- While looking at the logs I found warnings about more than one drive having data inconsistencies. There were FATAL messages too, but those led me to a dead end while searching for a solution.

- I went to all of those mount points (disks) and removed dfs with rm -rf /dfs on the node. One of the drives could not be mounted because HDFS had already created those folders at that local location during previous attempts to start the data node; I removed those folders as well and was then able to mount it. Once mounted, the new disk was clean, free of any Hadoop folders.

- I found a file in /tmp/hsperfdata_hdfs whose name began with some numbers (it was some kind of log). I renamed it to old_**** in case I needed it later, so that HDFS would see the original file as gone.

- I went back to CDH manager, HDFS, node 4 and tried restarting this data node, and it worked. All the disks now have dfs and its directories; in the tmp location the old_*** file had been removed and there is a new file (with a different number).
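
Once the data node has rejoined, the NameNode normally schedules re-replication of under-replicated blocks on its own, so the remaining question is how to watch its progress. The sketch below is one way to do that from the command line. It is not part of the steps above: `check_hdfs_health` is a hypothetical helper name, and it assumes the `hdfs` CLI is on the PATH and that it is run as a user with HDFS superuser rights (typically `hdfs`).

```shell
#!/bin/sh
# Sketch only: check_hdfs_health is a hypothetical helper name, not part of Hadoop.
# Assumes the hdfs CLI is on PATH and the caller has HDFS superuser rights.

check_hdfs_health() {
    if ! command -v hdfs >/dev/null 2>&1; then
        echo "hdfs CLI not found on this host" >&2
        return 1
    fi

    # Report cluster capacity and the state of each DataNode known to the NameNode.
    hdfs dfsadmin -report

    # Walk the namespace and keep only the summary lines that mention
    # replication, missing, or corrupt blocks (exact wording may vary by version).
    hdfs fsck / | grep -iE 'replicated|missing|corrupt' || true

    # List the files that currently have corrupt or missing blocks, if any.
    hdfs fsck / -list-corruptfileblocks
}
```

Calling `check_hdfs_health` a few times over the following hours should show the under-replicated count falling as blocks are copied to the rebuilt disks. A block reported as missing has no surviving replica, so it will not recover on its own unless a node holding a copy comes back.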

Created 11-10-2015 11:47 AM

Congratulations on solving the issue. Thank you for sharing the steps in case someone else faces something similar.

Cy Jervis, Manager, Community Program
