Replace your failed Worker Node disks

Created on 06-10-2023 02:59 AM, edited on 06-12-2023 11:20 PM by VidyaSargur

Summary

Worker node data disks will fail from time to time while you manage a CDP cluster. This blog takes you through the steps to gracefully replace failed worker node disks with the least disruption to your CDP cluster.

Investigation

Cloudera Manager Notification

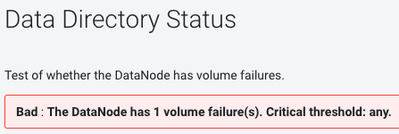

One easy method to identify that you have experienced a disk failure within your cluster is with the Cloudera Manager UI. You will see the following type of error:

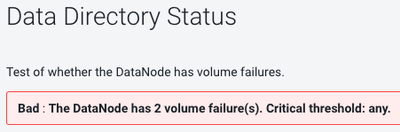

Cloudera Manager will also track multiple disk failures:

HDFS NameNode - DataNode Volume Failures

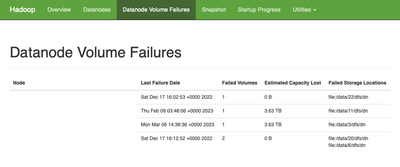

The failed disks within your cluster can also be observed from within the HDFS NameNode UI:

This is also useful to quickly identify exactly which storage locations have failed.

Confirming from the Command Line

Taking the last example from HDFS NameNode - DataNode Volume Failures, we can see that /data/20 and /data/6 are both failed directories.

The following interaction from the Command Line on the worker node will also confirm the disk issue:

    [root@<WorkerNode> ~]# ls -larth /data/20
    ls: cannot access /data/20: Input/output error
    [root@<WorkerNode> ~]# ls -larth /data/6
    ls: cannot access /data/6: Input/output error
    [root@<WorkerNode> ~]# ls -larth /data/1
    total 0
    drwxr-xr-x. 26 root   root   237 Sep 30 02:54 ..
    drwxr-xr-x.  3 root   root    20 Oct  1 06:45 kudu
    drwxr-xr-x.  3 root   root    16 Oct  1 06:46 dfs
    drwxr-xr-x.  3 root   root    16 Oct  1 06:47 yarn
    drwxr-xr-x.  3 root   root    29 Oct  1 06:48 impala
    drwxr-xr-x.  2 impala impala   6 Oct  1 06:48 cores
    drwxr-xr-x.  7 root   root    68 Oct  1 06:48 .
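The same check can be scripted across every data directory on a worker node. The following is a minimal sketch, assuming the data disks are mounted under /data/<n> as in this example. The function name `find_failed_dirs` is hypothetical and not part of any Cloudera tooling.

```shell
# Hypothetical helper: print every directory that cannot be listed.
# A failed disk typically makes `ls` exit non-zero ("Input/output error").
# Note: a directory that simply does not exist is reported as well.
find_failed_dirs() {
  local dir
  for dir in "$@"; do
    if ! ls -A "$dir" >/dev/null 2>&1; then
      echo "FAILED: $dir"
    fi
  done
}

# Example (run on the worker node):
#   find_failed_dirs /data/*
```

On the worker node shown above, this would be expected to report /data/20 and /data/6 while staying silent for healthy directories such as /data/1.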

Resolution

Replace a disk on a Worker Node

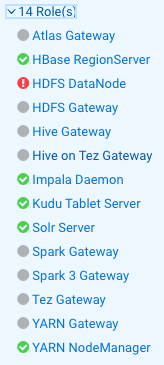

You will have a number of roles that are running on any single worker node host. This is an example of a worker node that is showing a failed disk:

Decommission the Worker Node

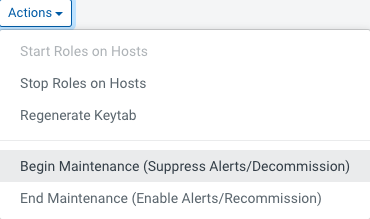

As there are multiple roles running on a worker node, it’s best to use the decommissioning process to gracefully remove the worker node from running services. This can be found by navigating to the host within Cloudera Manager and using “Actions > Begin Maintenance”

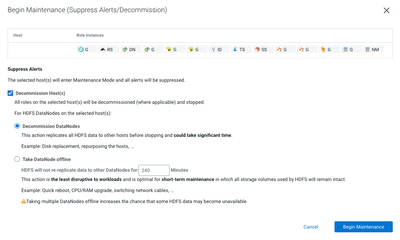

It will then take you to the following page:

Click “Begin Maintenance” and wait for the process to complete.

Expect this process to take hours on a busy cluster. The time it takes to complete depends on:

- The number of regions that the HBase RegionServer is hosting

- The number of blocks that the HDFS DataNode is hosting

- The number of tablets that the Kudu TabletServer is hosting

Replace and Configure the disks

Once the worker node is fully decommissioned, the disks are ready to be replaced and configured physically within your datacenter by your infrastructure team.

Every organization has its own internal process for configuring newly replaced disks. The following example shows, for reference, how this work can be verified.

List the attached block devices

    [root@<WorkerNode> ~]# lsblk
    NAME             MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    sda                8:0    0  3.7T  0 disk /data/1
    sdb                8:16   0  3.7T  0 disk /data/2
    sdc                8:32   0  3.7T  0 disk /data/3
    sdd                8:48   0  3.7T  0 disk /data/4
    sde                8:64   0  3.7T  0 disk /data/5
    sdf                8:80   0  3.7T  0 disk /data/6
    sdg                8:96   0  3.7T  0 disk /data/7
    sdh                8:112  0  3.7T  0 disk /data/8
    sdi                8:128  0  3.7T  0 disk /data/9
    sdj                8:144  0  3.7T  0 disk /data/10
    sdk                8:160  0  3.7T  0 disk /data/11
    sdl                8:176  0  3.7T  0 disk /data/12
    sdm                8:192  0  3.7T  0 disk /data/13
    sdn                8:208  0  3.7T  0 disk /data/14
    sdo                8:224  0  3.7T  0 disk /data/15
    sdp                8:240  0  3.7T  0 disk /data/16
    sdq               65:0    0  3.7T  0 disk /data/17
    sdr               65:16   0  3.7T  0 disk /data/18
    sds               65:32   0  3.7T  0 disk /data/19
    sdt               65:48   0  3.7T  0 disk /data/20
    sdu               65:64   0  3.7T  0 disk /data/21
    sdv               65:80   0  3.7T  0 disk /data/22
    sdw               65:96   0  3.7T  0 disk /data/23
    sdx               65:112  0  3.7T  0 disk /data/24
    sdy               65:128  0  1.8T  0 disk
    ├─sdy1            65:129  0    1G  0 part /boot
    ├─sdy2            65:130  0   20G  0 part [SWAP]
    └─sdy3            65:131  0  1.7T  0 part
      ├─vg01-root    253:0    0  500G  0 lvm  /
      ├─vg01-kuduwal 253:1    0  100G  0 lvm  /kuduwal
      ├─vg01-home    253:2    0   50G  0 lvm  /home
      └─vg01-var     253:3    0  100G  0 lvm  /var
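With 24 data disks, scanning this listing by eye is error-prone. A minimal sketch of an automated check follows, assuming the data disks are expected at /data/1 through /data/<count> as in this example. The function name `missing_data_mounts` is hypothetical.

```shell
# Hypothetical helper: read mount points on stdin (one per line) and print
# every expected /data/<n> path that is not among them.
# Assumes data disks are mounted at /data/1 .. /data/<count>.
missing_data_mounts() {
  local count="$1" mounted n
  mounted="$(cat)"
  for n in $(seq 1 "$count"); do
    # -x matches the whole line, so /data/1 does not match /data/10
    if ! printf '%s\n' "$mounted" | grep -qxF "/data/$n"; then
      echo "/data/$n"
    fi
  done
}

# Example (run on the worker node):
#   lsblk -rn -o MOUNTPOINT | missing_data_mounts 24
```

No output means every expected data mount point is present. Any path that is printed still needs to be formatted or mounted before the node is recommissioned.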

List the IDs of the block devices

    [root@<WorkerNode> ~]# blkid
    /dev/sdy1: UUID="4b2f1296-460c-4cbc-8aca-923c9309d4fe" TYPE="xfs"
    /dev/sdy2: UUID="af9c4c79-21b9-4d02-9453-ede88b920c1f" TYPE="swap"
    /dev/sdy3: UUID="j9n4QD-60xB-rqpQ-Ck3y-s2m0-FdSo-IGWrN9" TYPE="LVM2_member"
    /dev/sdb: UUID="4865e719-e77c-4d1e-b1e0-80ae1d0d6e82" TYPE="xfs"
    /dev/sdc: UUID="59ae0b91-3cfc-4c53-a02f-e20bdf0ac209" TYPE="xfs"
    /dev/sdd: UUID="b80473e0-bce8-413c-9740-934e8ed7006e" TYPE="xfs"
    /dev/sda: UUID="684e32c8-eeb2-4215-b861-880543b1f96b" TYPE="xfs"
    /dev/sdg: UUID="0f0d12ac-7d93-4c76-9f5c-ac6b43f2eaff" TYPE="xfs"
    /dev/sde: UUID="06c0e908-dd67-4a42-8615-7b7335a7e0f6" TYPE="xfs"
    /dev/sdf: UUID="9346fa04-dc1a-4dcc-8233-a5cb65495998" TYPE="xfs"
    /dev/sdn: UUID="8f05d1dd-94d1-4376-9409-d5683ad4c225" TYPE="xfs"
    /dev/sdo: UUID="5e0413d1-0b82-4ec1-b3f9-bb072db39071" TYPE="xfs"
    /dev/sdh: UUID="08063201-f252-49dd-8402-042afbea78a2" TYPE="xfs"
    /dev/sdl: UUID="1e5ace85-f93c-46f7-bf65-353f774cfeaa" TYPE="xfs"
    /dev/sdk: UUID="195967b5-a1a0-43bb-9a33-9cf7a36fdcb6" TYPE="xfs"
    /dev/sdq: UUID="db81b056-587e-47a6-844e-2d952278324b" TYPE="xfs"
    /dev/sdr: UUID="45b4cf68-6f10-4dc7-8128-c2006e7aba5d" TYPE="xfs"
    /dev/sds: UUID="a8e591e9-33c8-478a-b580-aeac9ad4cf44" TYPE="xfs"
    /dev/sdi: UUID="a0187ae0-7598-44c4-805c-ef253dea6e7a" TYPE="xfs"
    /dev/sdm: UUID="720836d8-ddd6-406d-a33f-f1b92f9b40d5" TYPE="xfs"
    /dev/sdv: UUID="df4bdd58-e8d2-4bdb-8255-b9c7fcfe8999" TYPE="xfs"
    /dev/sdw: UUID="701f3516-03bc-461b-930c-ab34d0b417d7" TYPE="xfs"
    /dev/sdu: UUID="5e1bd2f3-8ccc-4ba1-a0f7-bb55c8246d72" TYPE="xfs"
    /dev/sdj: UUID="264b85f8-9740-418b-a811-20666a305caa" TYPE="xfs"
    /dev/sdt: UUID="53f2f06e-71e9-4796-86a3-2212c0f652ea" TYPE="xfs"
    /dev/sdp: UUID="e6b984c0-6d85-4df2-9a7d-cc1c87238c49" TYPE="xfs"
    /dev/mapper/vg01-root: UUID="18bc42fe-dbfd-4005-8e13-6f5d2272d9a7" TYPE="xfs"
    /dev/sdx: UUID="53e4023f-583a-4219-bfd2-1a94e15f34ef" TYPE="xfs"
    /dev/mapper/vg01-kuduwal: UUID="a1441e2f-718b-42eb-b398-28ce20ee50ad" TYPE="xfs"
    /dev/mapper/vg01-home: UUID="fbc8e522-64da-4cc3-87b6-89ea83fb0aa0" TYPE="xfs"
    /dev/mapper/vg01-var: UUID="93b1537f-a1a9-4616-b79a-cab9a1e39bf1" TYPE="xfs"

View the /etc/fstab

    [root@<WorkerNode> ~]# cat /etc/fstab
    /dev/mapper/vg01-root / xfs defaults 0 0
    UUID=4b2f1296-460c-4cbc-8aca-923c9309d4fe /boot xfs defaults 0 0
    /dev/mapper/vg01-home /home xfs defaults 0 0
    /dev/mapper/vg01-kuduwal /kuduwal xfs defaults 0 0
    /dev/mapper/vg01-var /var xfs defaults 0 0
    UUID=af9c4c79-21b9-4d02-9453-ede88b920c1f swap swap defaults 0 0
    UUID=684e32c8-eeb2-4215-b861-880543b1f96b /data/1 xfs noatime,nodiratime 0 0
    UUID=4865e719-e77c-4d1e-b1e0-80ae1d0d6e82 /data/2 xfs noatime,nodiratime 0 0
    UUID=59ae0b91-3cfc-4c53-a02f-e20bdf0ac209 /data/3 xfs noatime,nodiratime 0 0
    UUID=b80473e0-bce8-413c-9740-934e8ed7006e /data/4 xfs noatime,nodiratime 0 0
    UUID=06c0e908-dd67-4a42-8615-7b7335a7e0f6 /data/5 xfs noatime,nodiratime 0 0
    UUID=9346fa04-dc1a-4dcc-8233-a5cb65495998 /data/6 xfs noatime,nodiratime 0 0
    UUID=0f0d12ac-7d93-4c76-9f5c-ac6b43f2eaff /data/7 xfs noatime,nodiratime 0 0
    UUID=08063201-f252-49dd-8402-042afbea78a2 /data/8 xfs noatime,nodiratime 0 0
    UUID=a0187ae0-7598-44c4-805c-ef253dea6e7a /data/9 xfs noatime,nodiratime 0 0
    UUID=264b85f8-9740-418b-a811-20666a305caa /data/10 xfs noatime,nodiratime 0 0
    UUID=195967b5-a1a0-43bb-9a33-9cf7a36fdcb6 /data/11 xfs noatime,nodiratime 0 0
    UUID=1e5ace85-f93c-46f7-bf65-353f774cfeaa /data/12 xfs noatime,nodiratime 0 0
    UUID=720836d8-ddd6-406d-a33f-f1b92f9b40d5 /data/13 xfs noatime,nodiratime 0 0
    UUID=8f05d1dd-94d1-4376-9409-d5683ad4c225 /data/14 xfs noatime,nodiratime 0 0
    UUID=5e0413d1-0b82-4ec1-b3f9-bb072db39071 /data/15 xfs noatime,nodiratime 0 0
    UUID=e6b984c0-6d85-4df2-9a7d-cc1c87238c49 /data/16 xfs noatime,nodiratime 0 0
    UUID=db81b056-587e-47a6-844e-2d952278324b /data/17 xfs noatime,nodiratime 0 0
    UUID=45b4cf68-6f10-4dc7-8128-c2006e7aba5d /data/18 xfs noatime,nodiratime 0 0
    UUID=a8e591e9-33c8-478a-b580-aeac9ad4cf44 /data/19 xfs noatime,nodiratime 0 0
    UUID=53f2f06e-71e9-4796-86a3-2212c0f652ea /data/20 xfs noatime,nodiratime 0 0
    UUID=5e1bd2f3-8ccc-4ba1-a0f7-bb55c8246d72 /data/21 xfs noatime,nodiratime 0 0
    UUID=df4bdd58-e8d2-4bdb-8255-b9c7fcfe8999 /data/22 xfs noatime,nodiratime 0 0
    UUID=701f3516-03bc-461b-930c-ab34d0b417d7 /data/23 xfs noatime,nodiratime 0 0
    UUID=53e4023f-583a-4219-bfd2-1a94e15f34ef /data/24 xfs noatime,nodiratime 0 0
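A newly formatted replacement disk receives a new file system UUID, so any /etc/fstab entry that still carries the old disk's UUID will not mount. The sketch below cross-checks the two listings above. It assumes fstab entries use the unquoted `UUID=<value>` form shown here, and the function name `stale_fstab_entries` is hypothetical.

```shell
# Hypothetical helper: print each fstab mount point whose UUID= entry does
# not appear in the saved blkid output.
# Arguments: <fstab file> <file holding blkid output>
stale_fstab_entries() {
  local fstab="$1" blkid_out="$2" spec mountpoint rest uuid
  while read -r spec mountpoint rest || [ -n "$spec" ]; do
    case "$spec" in
      UUID=*)
        uuid="${spec#UUID=}"
        # blkid prints the value in double quotes: UUID="<value>"
        if ! grep -qF "UUID=\"$uuid\"" "$blkid_out"; then
          echo "$mountpoint ($uuid)"
        fi
        ;;
    esac
  done < "$fstab"
}

# Example (run on the worker node):
#   blkid > /tmp/blkid.out
#   stale_fstab_entries /etc/fstab /tmp/blkid.out
```

No output means every UUID referenced in /etc/fstab belongs to a block device that is currently present. Entries that reference devices by path, such as /dev/mapper/vg01-root, are skipped.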

Recommission the Worker Node

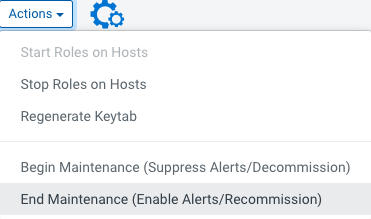

Once the disk(s) has been suitably replaced, it’s time to use the recommissioning process to gracefully reintroduce the worker node back into the cluster. This can be found by navigating to the host within Cloudera Manager and using “Actions > End Maintenance”

After the node has completed its recommission cycle, follow the guidance in the next sections to perform local disk rebalancing where appropriate.

Address local disk HDFS Balancing

Most clusters use HDFS. This service has a local disk balancer that you can make use of. For guidance, see the article "Rebalance your HDFS Disks (single node)".

Address local disk Kudu Balancing

If you are running Kudu within your cluster, you will need to rebalance the existing Kudu data on the local disks of the worker node. For guidance, see the article "Rebalance your Kudu Disks (single node)".