Community Articles

- Cloudera Community

- Support

- Community Articles

- A Reference Architecture for Enterprise Data Wareh...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Created on 05-12-2017 02:15 AM - edited 08-17-2019 01:00 PM

Intro

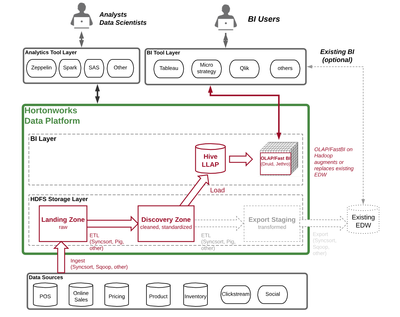

Scalable cheap storage and parallel processing are at the foundation of Hadoop. These capabilities allow Hadoop to play a key role in complementing and optimizing traditional Enterprise Data Warehouse (EDW) workloads. In addition, recent technologies like Hive LLAP (in-memory, long-running execution threads) with ACID merge and AtScale proprietary software (virtual OLAP cubes with aggregation cacheing in HDFS) now for the first time allow fast BI directly against TB- and PB-sized data on Hadoop using well-known BI tools (e.g Tableau).

This article describes reference architectures for three use cases of EDW optimization:

- Active archiving: archiving aged EDW data on Hadoop for cheap storage and for exploratory and BI analysis.

- EDW offload: offloading staging data and ETL transformations to Hadoop and exporting the final data structures back to existing EDW system (for cheap storage and faster ETL on Hadoop, as well as faster BI queries on existing EDW).

- BI on Hadoop: Business Intelligence tooling against TB and PB data on Hadoop, with the possibility of retiring existing EDW.

The Problem

- EDW systems like Teradata and Neteeza cost > 50x – 100x more per GB to store data compared to HDFS

- Most of the data in EDW systems (typically up to 70%) is staged for transformation to final tables used for BI queries. BI users do not directly query this staged data. This staged data is extremely costly to store.

- Aged data either sits expensively on the EDW or is archived to cheaper systems like tape where it is inaccessible for BI users and analysts.

- Transformation of staging data is typically long-duration, often more than a day for one transformation job.

- Most of the CPU in EDW systems (typically > 50%) is used to process these transformations. This long-running background CPU usage lowers the performance of BI queries run at the same time. These BI queries are the reason for the EDW, but typically do not perform optimally.

- EDW schema-on-write requirement stresses the ability to load modern data sources like semi-structured social data

Reference Architectures

Note that any of the below architectures can be implemented alone or a combination can be implemented together, depending on your needs and strategic roadmap. Also for each diagram below, red represents EDW optimization data architecture and black represents existing data architecture.

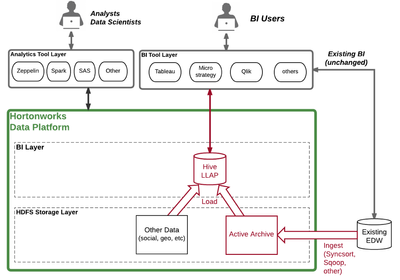

Use Case 1: Active Archiving

In this use case aged data is offloaded to Hadoop instead of being stored on the EDW or on archival storage like tape. Offload can be via tools such as Sqoop (native to Hadoop) or an ETL tool like Syncsort DMX-h (proprietary, integrated with Hadoop framework including YARN and map-reduce).

Benefits:

- Aged data from EDW is now stored more cheaply

- Aged data from archival systems like tape are now accessible to querying

- EDW data is now combined with new data sources (like geospatial, social or clickstream) in the lake. The combination of these sources allow greater analytical capabilities like enriched data or customer 360 analysis for both BI users and data scientists.

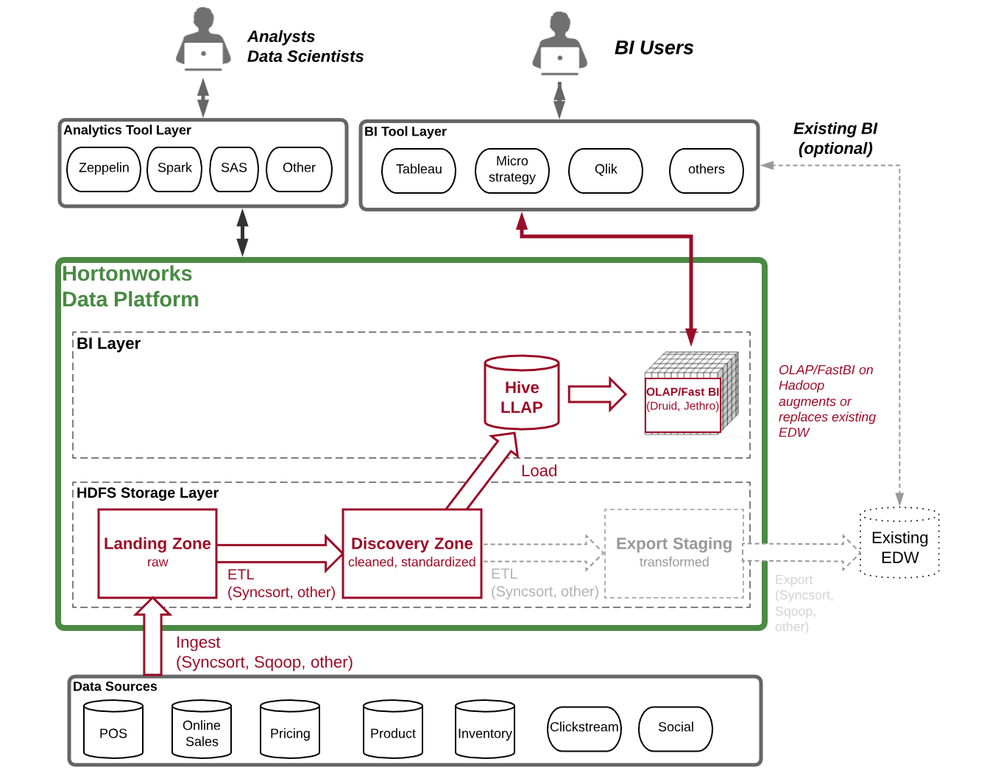

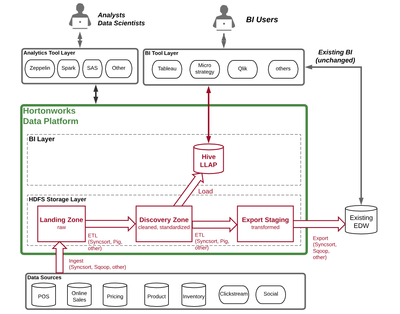

Use Case 2: EDW Offloading

In this use case both staging data and ETL are offloaded from the EDW to hadoop. Raw data is stored on the lake and processed into cleaned and standardized data used for Hive LLAP tables. Cleaned and standardized data is transformed into structures that are exported to the existing EDW. BI users continue to use the existing EDW unchanged, unaware that the plumbing beneath has changed.

Benefits:

- Raw data is centralized in the lake, available to data scientists and for repurposing in other use cases. Raw data is retained because storage is cheap.

- New (EDW) data sources are ingested to the lake, leading to greater analytical capabilities as described above.

- ETL is significantly faster on Hadoop because of parallel batch processing. ETL times are reduced from parts of days to minutes or hours.

- ETL is removed from existing EDW. This frees significant CPU resulting in noticeably faster BI queries making BI users happier.

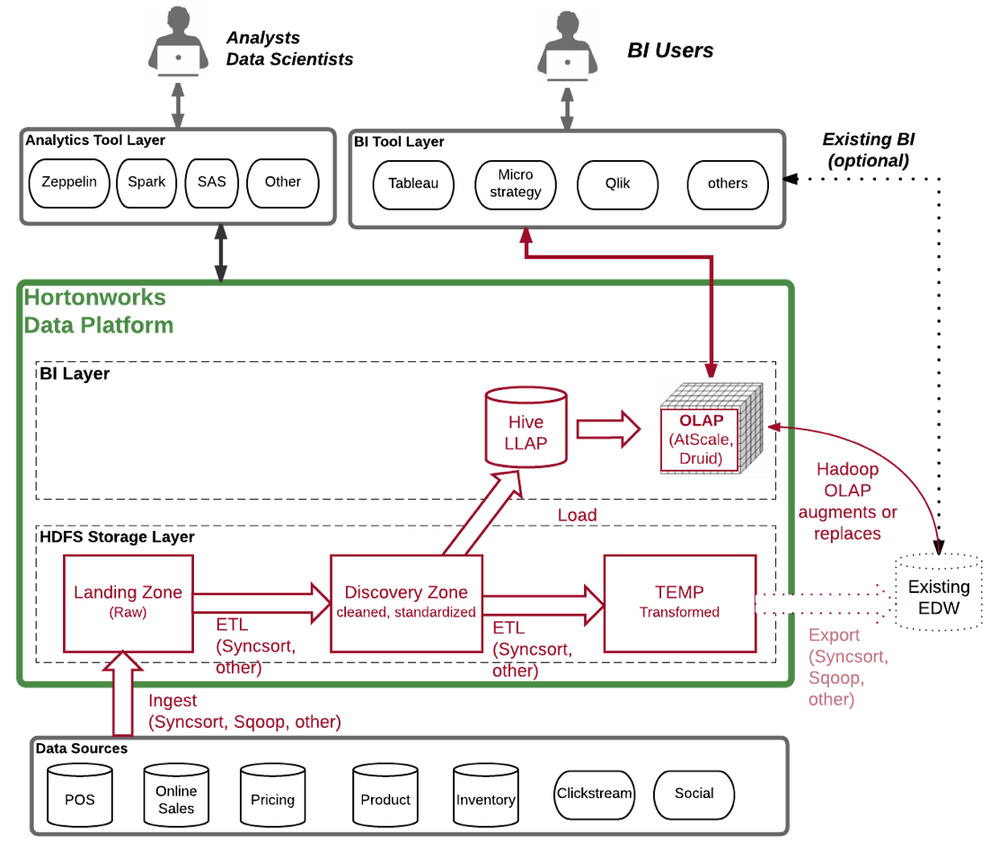

Use Case 3: BI on Hadoop

This use case is identical to EDW offload as per above but the EDW is either fully replaced by or augmented by OLAP on Hadoop. For greenfield environments, replacement (i.e. prevention) of OLAP is particularly attractive.

Benefits:

- Same as previous use case

- OLAP queries are run directly against data in the lake. OLAP in the lake can be against larger volumes of data than traditional OLAP and can include enriched data and new data sources (e.g. geolocation, social, clickstream).

- OLAP in the lake can replace or prevent implementation of expensive and constrained traditional OLAP systems.

Notes on OLAP on Hadoop:

- Jethro and Druid are both viable technologies for Fast Bi / OLAP on Hadoop.

- Druid is open source and best implemented against OLAP cubes with relatively few drill-downs/roll-ups. This is because each aggregation of the cube is hard-coded in Druid statements. This makes complex or rapidly evolving OLAP cubes difficult to develop, test and maintain.

- Jethro is proprietary software and a certified partner of Hortonworks. It is integrated with Hive LLAP. It is best for fully implemented BI initiatives, because of its "set-and-forget" implementation. This greatly reduces the role of IT in the BI program and thus allows user needs to be met more rapidly.

Conclusion

Traditional Enterprise Data Warehouses are feeling the strain of the modern Big Data era: these warehouses are unsustainably expensive; the majority of their data storage and processing is typically dedicated to prep work for BI queries, and not the queries themselves (the purpose of the warehouse); it is difficult to store varied data like semi-structured social and clickstream; they are constrained by how much data volume can be stored, for both cost and scaling reasons.

Hadoop's scalable inexpensive storage and parallel processing can be used to optimize existing EDWs by offloading data and ETL to this platform. Moreover, recent technologies like Hive LLAP and Druid or Jethro allow you to transfer your warehouse to Hadoop and run your BI tooling (Tableau, MicroStrategy, etc) directly against TBs and PBs of data on Hadoop. The reference architectures in this article show how to architect your data on and off of Hadoop to achieve significant gains in cost, performance and Big Data strategy. Why wait?

References

Hive LLAP and ACID merge

- https://cwiki.apache.org/confluence/display/Hive/LLAP

- https://hortonworks.com/blog/announcing-apache-hive-2-1-25x-faster-queries-much/

- https://www.youtube.com/watch?v=EjkOIhdOqek

Syncsort DMX-h

Jethro

Druid