Community Articles

Apache NiFi 1.10: Support for Parquet RecordReader


Created on 11-07-2019 09:16 AM, edited on 02-12-2020 06:14 AM by SumitraMenon

With the release of Apache NiFi 1.10 (http://nifi.apache.org/download.html), you can configure a Parquet record reader (ParquetReader) to read incoming Parquet-formatted files.

Apache NiFi record processing lets you read and work with a set of data as a single unit. By supplying a schema that matches the incoming data, you can process records as if the data were a "table" and easily convert between formats (CSV, JSON, Avro, Parquet, XML). Before record processing was added to Apache NiFi, you had to split the file line by line, transform each piece, and then merge everything back together into a single output, which is extremely inefficient. With record processing, the whole unit of data is handled in a single step, which can be many times faster.
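To make the contrast concrete, here is a minimal Python analogy (not NiFi's Java API): the whole CSV payload is parsed into records in one pass, with the header row playing the role of the schema, and the records are written out as a single JSON document instead of being split, transformed, and merged line by line. The function name `convert_csv_to_json` is hypothetical.

```python
import csv
import io
import json


def convert_csv_to_json(csv_text: str) -> str:
    """Parse every CSV row into a record in one pass and emit one JSON array.

    Loosely analogous to a record reader feeding a record writer: the header
    row acts as the schema, and the whole payload is handled as one unit.
    """
    reader = csv.DictReader(io.StringIO(csv_text))  # header row = field names
    records = list(reader)  # all records handled together, no split/merge
    return json.dumps(records)


sample = "first_name,last_name\nAda,Lovelace\nAlan,Turing\n"
converted = convert_csv_to_json(sample)
```

The same records could just as easily be handed to a different writer; only the serialization step changes.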

Apache Parquet is a columnar storage format (https://parquet.apache.org/documentation/latest/), and each file includes the schema of the data it stores. Record processing in Apache NiFi can take advantage of this: the ParquetReader takes the schema from the file itself, so it is supplied to the flow automatically.

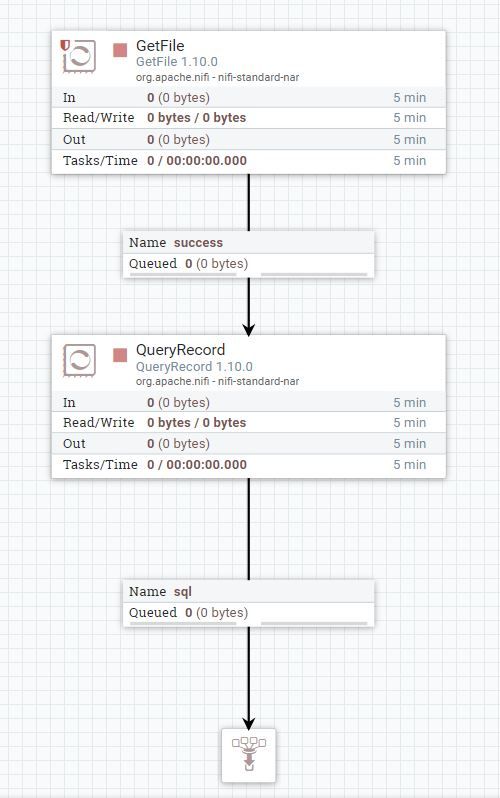
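As a sketch of where that embedded schema lives, based on the file layout described in the Parquet format documentation: a file begins and ends with the 4-byte magic `PAR1`, and just before the trailing magic is a 4-byte little-endian length of the file metadata footer, which holds the (Thrift-encoded) schema. The helper `locate_parquet_footer` is hypothetical and only slices out the raw footer bytes; decoding them requires a Parquet/Thrift library, and the byte string in the usage example is synthetic.

```python
import struct

MAGIC = b"PAR1"


def locate_parquet_footer(data: bytes) -> bytes:
    """Return the raw, still-encoded file metadata (which contains the schema)."""
    # Smallest possible layout: leading magic + 4-byte length + trailing magic.
    if len(data) < 12 or data[:4] != MAGIC or data[-4:] != MAGIC:
        raise ValueError("not a Parquet file: missing PAR1 magic bytes")
    (footer_len,) = struct.unpack("<I", data[-8:-4])  # little-endian uint32
    footer_start = len(data) - 8 - footer_len
    if footer_start < 4:
        raise ValueError("footer length is larger than the file")
    return data[footer_start:len(data) - 8]


# Synthetic example: placeholder bytes stand in for real column data and metadata.
fake_meta = b"<encoded FileMetaData>"
fake_file = MAGIC + b"<column chunks>" + fake_meta + struct.pack("<I", len(fake_meta)) + MAGIC
footer = locate_parquet_footer(fake_file)
```

Because the schema is always at a known position relative to the end of the file, a reader can obtain it without scanning the data itself.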

Here is an example flow:

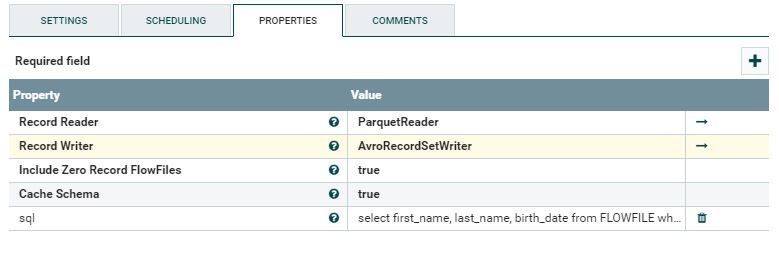

In this flow, I read my incoming .parquet files and pass them through a QueryRecord processor. The processor is configured with a ParquetReader as its record reader. I'm using the AvroRecordSetWriter for output, but you could also use a CSV, JSON, or XML record writer instead.

QueryRecord is a special processor that lets you run SQL queries against your FlowFile; the result of the SQL query is written to a new FlowFile.

The raw SQL code:

```sql
SELECT first_name, last_name, birth_date
FROM FLOWFILE
WHERE gender = 'M' AND birth_date LIKE '1965%'
```

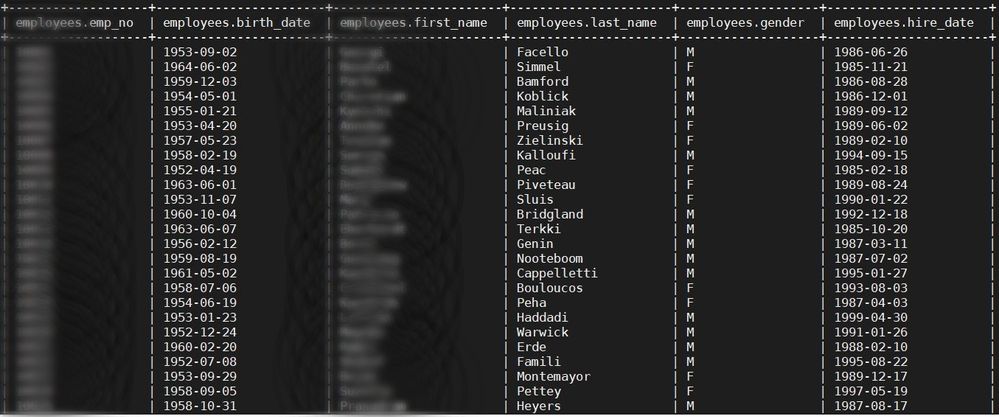
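To experiment with the query logic outside NiFi, the sketch below loads a few hypothetical records into an in-memory SQLite table named `FLOWFILE` and runs the same SQL. This is only an approximation: QueryRecord evaluates SQL with Apache Calcite rather than SQLite, so dialect details (for example, how `LIKE` treats a date-typed column) can differ. The sketch assumes `birth_date` arrives as a `YYYY-MM-DD` string; the names `ROWS` and `query_flowfile` are illustrative.

```python
import sqlite3

# Hypothetical sample records standing in for what the ParquetReader would emit.
# birth_date is assumed to arrive as an ISO "YYYY-MM-DD" string.
ROWS = [
    ("Anna", "Smith", "F", "1965-03-14"),
    ("Brian", "Jones", "M", "1965-07-01"),
    ("Carl", "Brown", "M", "1972-11-23"),
    ("Dave", "Clark", "M", "1965-12-30"),
]

QUERY = (
    "SELECT first_name, last_name, birth_date FROM FLOWFILE "
    "WHERE gender = 'M' AND birth_date LIKE '1965%'"
)


def query_flowfile(rows, sql):
    """Load the records into an in-memory table named FLOWFILE and run the SQL."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE FLOWFILE "
            "(first_name TEXT, last_name TEXT, gender TEXT, birth_date TEXT)"
        )
        conn.executemany("INSERT INTO FLOWFILE VALUES (?, ?, ?, ?)", rows)
        return conn.execute(sql).fetchall()
    finally:
        conn.close()


# Only Brian Jones and Dave Clark are male and born in 1965.
matches = query_flowfile(ROWS, QUERY)
```

Note that the result keeps only the three selected columns; `gender` is used for filtering but not returned.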

The input in my Parquet file looks like this:

It contains rows for birth years other than 1965, both males and females, and columns that are not listed in the SQL query.

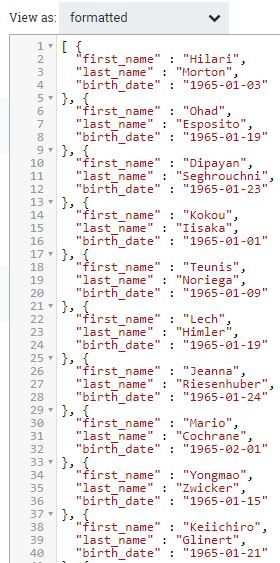

After running it through my flow, I am left with the result of the SQL query: only the rows matching my search criteria (birth year 1965, males only), with the three selected columns (first_name, last_name, birth_date).

Depending on the RecordWriter you choose, the output can be formatted as JSON, CSV, XML, or Avro for further processing.
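The idea behind swappable record writers is that the same set of records can be serialized in different formats without changing the query. Here is a minimal Python analogy with hypothetical records; it is not NiFi's JsonRecordSetWriter or CSVRecordSetWriter, whose options are considerably richer.

```python
import csv
import io
import json

# Hypothetical query result: the columns selected by the SQL in this article.
FIELDS = ["first_name", "last_name", "birth_date"]
RECORDS = [
    {"first_name": "Brian", "last_name": "Jones", "birth_date": "1965-07-01"},
    {"first_name": "Dave", "last_name": "Clark", "birth_date": "1965-12-30"},
]


def write_json(records):
    """Serialize all records as one JSON array (a single output document)."""
    return json.dumps(records)


def write_csv(records):
    """Serialize all records as CSV, with a header row taken from FIELDS."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()


json_output = write_json(RECORDS)
csv_output = write_csv(RECORDS)
```

Only the writer differs between the two outputs; the records and their field list stay the same.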

Created on 01-08-2020 08:41 AM


Anyone wishing to work with these Parquet Readers in a previous version of NiFi should take a look at my post here:

Created on 02-04-2020 11:38 AM


@wengelbrecht do you know which version of Parquet this reader is supposed to support?

Created on 02-04-2020 11:57 AM


I'm not 100% sure, but looking at NiFi 1.11.0, I can see the following JAR files:

```
./nifi-parquet-nar-1.11.0.nar-unpacked/NAR-INF/bundled-dependencies/parquet-column-1.10.0.jar
./nifi-parquet-nar-1.11.0.nar-unpacked/NAR-INF/bundled-dependencies/parquet-format-2.4.0.jar
./nifi-parquet-nar-1.11.0.nar-unpacked/NAR-INF/bundled-dependencies/parquet-encoding-1.10.0.jar
./nifi-parquet-nar-1.11.0.nar-unpacked/NAR-INF/bundled-dependencies/parquet-common-1.10.0.jar
./nifi-parquet-nar-1.11.0.nar-unpacked/NAR-INF/bundled-dependencies/parquet-avro-1.10.0.jar
./nifi-parquet-nar-1.11.0.nar-unpacked/NAR-INF/bundled-dependencies/parquet-jackson-1.10.0.jar
./nifi-parquet-nar-1.11.0.nar-unpacked/NAR-INF/bundled-dependencies/parquet-hadoop-1.10.0.jar
```
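If you want to check the bundled Parquet versions yourself, a small sketch like the following pulls artifact names and versions out of JAR file names such as those listed above. It assumes the usual `<artifact>-<version>.jar` naming; `jar_versions` is a hypothetical helper, and how you collect the paths (for example with `find` over the unpacked NAR directory) depends on your installation.

```python
import re
from pathlib import PurePosixPath

# Assumes Maven-style file names: <artifact>-<numeric.version>.jar
JAR_PATTERN = re.compile(r"^(?P<artifact>.+?)-(?P<version>\d+(?:\.\d+)*)\.jar$")


def jar_versions(paths):
    """Map each JAR's artifact name to its version, skipping non-matching names."""
    versions = {}
    for path in paths:
        match = JAR_PATTERN.match(PurePosixPath(path).name)
        if match:
            versions[match.group("artifact")] = match.group("version")
    return versions


BUNDLED = [
    "./nifi-parquet-nar-1.11.0.nar-unpacked/NAR-INF/bundled-dependencies/parquet-format-2.4.0.jar",
    "./nifi-parquet-nar-1.11.0.nar-unpacked/NAR-INF/bundled-dependencies/parquet-hadoop-1.10.0.jar",
]
found = jar_versions(BUNDLED)
```

Note that parquet-format is versioned separately from the parquet-mr modules (parquet-hadoop, parquet-avro, and so on), which is why it shows 2.4.0 while the others show 1.10.0.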

Created on 02-04-2020 12:28 PM


@wengelbrecht thank you, that is exactly what I needed to see. I am having an issue with parquet-hadoop 1.10 and need to get version 1.12 working in NiFi and Hive...