Community Articles

Automate deployment of HDF 3.1 clusters using Amba...


Created on 02-17-2018 10:28 AM - edited 08-17-2019 08:51 AM

Summary:

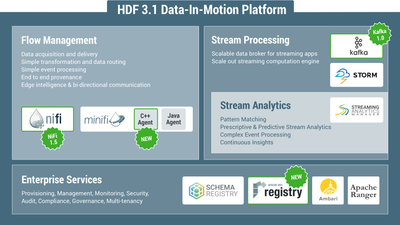

The release of HDF 3.1 brings a significant number of improvements to HDF: Apache NiFi 1.5, Kafka 1.0, plus the new NiFi Registry. In addition, there were improvements to the Storm, Streaming Analytics Manager and Schema Registry components. This article shows how you can use the ambari-bootstrap project to easily generate a blueprint and deploy HDF clusters to either single-node or multi-node development/demo environments in a few easy steps. To quickly set up a single node, a prebuilt AMI is available for AWS, as well as a script that automates these steps, so you can deploy the cluster with a few commands.

Steps for each of the options below are described in this article:

- A. Single-node prebuilt AMI on AWS

- B. Single-node fresh install

- C. Multi-node fresh install

A. Single-node prebuilt AMI on AWS:

Steps to launch the AMI

- 1. Open the Amazon AWS console in your browser by clicking here and sign in with your credentials. Once signed in, you can close this browser tab.

- 2. Select the AMI from the ‘N. California’ region by clicking here. Now choose the instance type: select ‘m4.2xlarge’ and click ‘Next’

Note: if you choose an instance type smaller than the one recommended above, some services may not come up

- 3. Configure Instance Details: leave the defaults and click ‘Next’

- 4. Add storage: keep at least the default of 100 GB and click ‘Next’

- 5. Optionally, add a name or any other tags you like. Then click ‘Next’

- 6. Configure security group: create a new security group and select ‘All traffic’ to open all ports. For production usage, a more restrictive security group policy is strongly encouraged: for instance, only allow traffic from your company’s IP range. Then click ‘Review and Launch’

- 7. Review your settings and click Launch

- 8. Create and download a new key pair (or choose an existing one). Then click ‘Launch instances’

- 9. Click the link shown under ‘Your instances are now launching’

- 10. This opens the EC2 dashboard that shows the details of your launched instance

- 11. Make note of your instance’s ‘Public IP’ (which will be used to access your cluster). If it is blank, wait 1-2 minutes for this to be populated. Also make note of your AWS Owner Id (which will be the initial password to login)

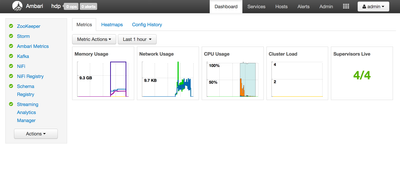

- 12. After 5-10 minutes, open the URL below in your browser to access Ambari’s console: http://<PUBLIC IP>:8080. Log in with username admin and your AWS Owner Id as the password (see previous step)

- 13. At this point, Ambari may still be in the process of starting all the services. You can tell by the presence of the blue ‘op’ notification near the top left of the page. If so, just wait until it is done.

(Optional) You can also monitor the startup using the log as below:

- Open an SSH session to the VM using your key and the public IP, e.g. from OSX:

ssh -i ~/.ssh/mykey.pem centos@<publicIP>

- Tail the startup log:

tail -f /var/log/hdp_startup.log

- Once you see “cluster is ready!” you can proceed

- 14. Once the blue ‘op’ notification disappears and all the services show a green check mark, the cluster is fully up.
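If you would rather script the wait than watch the log, the sketch below polls the startup log for the “cluster is ready!” message mentioned above. The function name, the 5-second polling interval and the timeout argument are illustrative choices, not part of the AMI.

```bash
#!/usr/bin/env bash
# Sketch: block until a log file contains a given message.
# The log path and "cluster is ready!" message come from the steps above;
# the polling interval and timeout handling are arbitrary choices.

# wait_for_log_message FILE MESSAGE TIMEOUT_SECS
# Returns 0 once MESSAGE appears in FILE, 1 if TIMEOUT_SECS elapse first.
wait_for_log_message() {
  local file=$1 message=$2 timeout=$3 waited=0
  # -F: treat the message as a fixed string, not a regex
  until grep -qF -- "$message" "$file" 2>/dev/null; do
    if (( waited >= timeout )); then
      echo "timed out after ${timeout}s waiting for '${message}' in ${file}" >&2
      return 1
    fi
    sleep 5
    waited=$(( waited + 5 ))
  done
  echo "found '${message}' in ${file}"
}
```

For example, on the VM: `wait_for_log_message /var/log/hdp_startup.log 'cluster is ready!' 1800`.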

Other related AMIs

- HDP 2.6.4 vanilla AMI (ami-764d4516): Hortonworks HDP 2.6.4 single node cluster running Hive/Spark/Druid/Superset installed via Ambari. Built Feb 18 2018 using HDP 2.6.4.0-91 / Ambari 2.6.1.3-3. Ambari password is your AWS Owner Id

- HDP 2.6.4 including NiFi and NiFi Registry from HDF 3.1 (ami-e1a0a981): HDP 2.6.4 plus NiFi 1.5 and NiFi Registry. Ambari admin password is StrongPassword. Built Feb 17 2018

- HDP 2.6 plus HDF 3.0 and IOT trucking demo reference app. Details here

Note: the AMIs above are available in the US West (N. California) region of AWS

B. Single-node HDF install:

Launch a fresh CentOS/RHEL 7 instance with 4+ CPUs and 16GB+ RAM and run the commands below. Do not try to install HDF on an environment where Ambari or HDP is already installed (e.g. the HDP sandbox or an HDP cluster)

export host_count=1

curl -sSL https://gist.github.com/abajwa-hw/b7c027d9eea9fbd2a2319a21a955df1f/raw | sudo -E sh

Once launched, the script will install Ambari and use it to deploy an HDF cluster

Note: this script can also be used to install multi-node clusters once step 1 of the multi-node install below is complete, i.e. after the Ambari agents on the nodes that will not run Ambari server are installed and registered
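Before running the script, you may want to confirm the host meets the requirements above. A minimal sketch, assuming bash on CentOS/RHEL 7 with /proc/meminfo available; the function names and the 15 GB memory threshold (chosen because MemTotal on a 16 GB instance reads slightly below 16 GB) are assumptions, not part of the install script.

```bash
#!/usr/bin/env bash
# Sketch: pre-flight checks for the single-node install, based on the stated
# requirements (CentOS/RHEL 7, 4+ CPUs, 16GB+ RAM, no existing Ambari/HDP).
# Function names and thresholds are illustrative.

# meets_min_resources CPUS MEM_KB -> 0 if CPUS >= 4 and MEM_KB is at least ~16 GB
meets_min_resources() {
  local cpus=$1 mem_kb=$2
  # /proc/meminfo reports MemTotal in kB; use 15 GiB to allow for kernel-reserved memory
  local min_mem_kb=$(( 15 * 1024 * 1024 ))
  (( cpus >= 4 && mem_kb >= min_mem_kb ))
}

# check_host_requirements -> prints a warning per failed check, returns 1 if any failed
check_host_requirements() {
  local rc=0 mem_kb
  if ! grep -q 'release 7' /etc/redhat-release 2>/dev/null; then
    echo "WARN: expected CentOS/RHEL 7" >&2; rc=1
  fi
  mem_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
  if ! meets_min_resources "$(nproc)" "$mem_kb"; then
    echo "WARN: need 4+ CPUs and 16GB+ RAM" >&2; rc=1
  fi
  if command -v ambari-server >/dev/null 2>&1 || command -v ambari-agent >/dev/null 2>&1; then
    echo "WARN: Ambari is already present; do not install HDF on this host" >&2; rc=1
  fi
  return $rc
}
```

For example: `check_host_requirements && echo "host looks OK"`.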

Other related scripts

1. Automation to set up HDP 2.6.x plus NiFi from HDF 3.1

export host_count=1

curl -sSL https://gist.github.com/abajwa-hw/bbe2bdd1ed6a0f738a90dd4e07480e3b/raw | sudo -E sh

C. Multi-node HDF install:

0. Launch your RHEL/CentOS 7 instances where you wish to install HDF. In this example, we will use 4 m4.xlarge instances. Select an instance where ambari-server should run (e.g. node1)

1. After choosing the host where you would like Ambari server to run, first prepare the other hosts. Run the commands below on all hosts where Ambari server will not be running (e.g. node2-4). This runs the prerequisite steps, installs the Ambari agents and points them to the Ambari server host:

export ambari_server=<FQDN of host where ambari-server will be installed>  # replace this

export install_ambari_server=false

export ambari_version=2.6.1.0

curl -sSL https://raw.githubusercontent.com/seanorama/ambari-bootstrap/master/ambari-bootstrap.sh | sudo -E sh
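With several agent hosts, the same commands can be pushed from one machine over SSH. A sketch, assuming passwordless SSH to each host and that the remote user can run sudo; install_agents and the example host names are hypothetical. Setting DRY_RUN=1 prints the commands instead of running them.

```bash
#!/usr/bin/env bash
# Sketch: run the agent bootstrap from step 1 on several hosts over SSH.
# ASSUMPTIONS: passwordless SSH (with sudo rights) to each host.
# DRY_RUN=1 prints the ssh commands instead of executing them.

BOOTSTRAP_URL=https://raw.githubusercontent.com/seanorama/ambari-bootstrap/master/ambari-bootstrap.sh

# install_agents AMBARI_SERVER_FQDN HOST...
install_agents() {
  local server=$1; shift
  local host remote_cmd
  # Same exports and bootstrap call as step 1, joined into one remote command
  remote_cmd="export ambari_server=${server}; export install_ambari_server=false; export ambari_version=2.6.1.0; curl -sSL ${BOOTSTRAP_URL} | sudo -E sh"
  for host in "$@"; do
    if [[ "${DRY_RUN:-0}" == 1 ]]; then
      echo "ssh ${host} '${remote_cmd}'"
    else
      echo "Installing ambari-agent on ${host}..."
      ssh "$host" "$remote_cmd" || { echo "failed on ${host}" >&2; return 1; }
    fi
  done
}
```

For example, `DRY_RUN=1 install_agents node1.example.com node2.example.com node3.example.com node4.example.com` prints the commands for node2-4, with node1 as the Ambari server.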

2. Run the remaining steps on the host where Ambari server is to be installed (e.g. node1). The commands below run the prerequisites and install Ambari server:

export db_password="StrongPassword"  # MySQL password

export nifi_password="StrongPassword"  # NiFi password - must be at least 10 chars

export cluster_name="HDF"  # cluster name

export ambari_services="ZOOKEEPER STREAMLINE NIFI KAFKA STORM REGISTRY NIFI_REGISTRY AMBARI_METRICS"  # choose services

export hdf_ambari_mpack_url="http://public-repo-1.hortonworks.com/HDF/centos7/3.x/updates/3.1.0.0/tars/hdf_ambari_mp/hdf-ambari-mpack-3.1.0.0-564.tar.gz"

export ambari_version=2.6.1.0

sudo yum install -y git python-argparse  # install bootstrap prerequisites

cd /tmp

git clone https://github.com/seanorama/ambari-bootstrap.git

export install_ambari_server=true  # run pre-reqs and install ambari-server

curl -sSL https://raw.githubusercontent.com/seanorama/ambari-bootstrap/master/ambari-bootstrap.sh | sudo -E sh
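Since later steps interpolate these variables into SQL and JSON, a quick check that they are all set, and that nifi_password meets the 10-character minimum noted above, can save a failed install. A sketch; validate_install_vars is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: sanity-check the variables exported in step 2 before continuing.
# The 10-character minimum for nifi_password comes from the comment in step 2.

# validate_install_vars -> prints a message per problem, returns 1 if any found
validate_install_vars() {
  local rc=0 var
  for var in db_password nifi_password cluster_name ambari_services hdf_ambari_mpack_url ambari_version; do
    # ${!var} is bash indirect expansion: the value of the variable named by $var
    if [[ -z "${!var:-}" ]]; then
      echo "ERROR: ${var} is not set" >&2; rc=1
    fi
  done
  if (( ${#nifi_password} < 10 )); then
    echo "ERROR: nifi_password must be at least 10 characters" >&2; rc=1
  fi
  return $rc
}
```

For example: `validate_install_vars || echo "fix the variables above before continuing"`.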

3. On the same node, install MySQL and create databases and users for Schema Registry and SAM

sudo yum localinstall -y https://dev.mysql.com/get/mysql57-community-release-el7-8.noarch.rpm

sudo yum install -y epel-release mysql-connector-java* mysql-community-server

echo Database setup...  # MySQL setup to keep the new services separate from the originals

sudo systemctl enable mysqld.service

sudo systemctl start mysqld.service

oldpass=$( grep 'temporary.*root@localhost' /var/log/mysqld.log | tail -n 1 | sed 's/.*root@localhost: //' )  # extract the system-generated MySQL root password

cat << EOF > mysql-setup.sql  # SQL that resets the root password to a temporary value, creates the registry/streamline schemas and users, and sets their passwords to db_password

ALTER USER 'root'@'localhost' IDENTIFIED BY 'Secur1ty!';

uninstall plugin validate_password;

CREATE DATABASE registry DEFAULT CHARACTER SET utf8;

CREATE DATABASE streamline DEFAULT CHARACTER SET utf8;

CREATE USER 'registry'@'%' IDENTIFIED BY '${db_password}';

CREATE USER 'streamline'@'%' IDENTIFIED BY '${db_password}';

GRANT ALL PRIVILEGES ON registry.* TO 'registry'@'%' WITH GRANT OPTION ;

GRANT ALL PRIVILEGES ON streamline.* TO 'streamline'@'%' WITH GRANT OPTION ;

commit;

EOF

mysql -h localhost -u root -p"$oldpass" --connect-expired-password < mysql-setup.sql  # execute the SQL file

mysqladmin -u root -p'Secur1ty!' password StrongPassword  # change the MySQL root password to StrongPassword

mysql -u root -pStrongPassword -e 'show databases;'  # test the password and confirm the databases were created
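To check the result of the last command programmatically rather than by eye, the sketch below reads the database list on stdin and reports any schema that is missing. It assumes mysql's batch output (one name per line, which is what you get when the output is piped and -N suppresses the header); missing_databases is a hypothetical name.

```bash
#!/usr/bin/env bash
# Sketch: report which of the expected databases are absent from a
# `show databases` listing provided on stdin (one name per line).

# missing_databases DB... -> prints each DB not found, returns 1 if any are missing
missing_databases() {
  local listing db rc=0
  listing=$(cat)
  for db in "$@"; do
    # -x: match the whole line, so "registry" does not match "registry_old"
    if ! grep -qx -- "$db" <<< "$listing"; then
      echo "$db"; rc=1
    fi
  done
  return $rc
}
```

For example: `mysql -u root -pStrongPassword -N -e 'show databases;' | missing_databases registry streamline && echo "all databases present"`.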

4. On the same node, register the MySQL connector jar with Ambari, then install the HDF management pack (mpack). Finally, restart Ambari so it recognizes the HDF stack:

sudo ambari-server setup --jdbc-db=mysql --jdbc-driver=/usr/share/java/mysql-connector-java.jar

sudo ambari-server install-mpack --mpack=${hdf_ambari_mpack_url} --verbose

sudo ambari-server restart

At this point, if you prefer, you can use the Ambari install wizard to install HDF instead: just open http://<Ambari host IP>:8080, log in and follow the steps in the documentation. Otherwise, follow the remaining steps to deploy via blueprints.
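Whether you use the wizard or blueprints, it helps to confirm that every agent has registered and that the HDF mpack was picked up. The sketch below queries Ambari's REST API; it assumes Ambari on localhost:8080, credentials in AMBARI_USER/AMBARI_PASS (defaulting to admin/admin) and host_count set to the total number of nodes. The function names are hypothetical, and treating HTTP 200 from /api/v1/stacks/HDF as "stack registered" is an assumption.

```bash
#!/usr/bin/env bash
# Sketch: confirm agent registration and HDF stack availability via Ambari's REST API.
# ASSUMPTIONS: AMBARI_URL (default http://localhost:8080), AMBARI_USER/AMBARI_PASS,
# and host_count = total number of nodes in the cluster.

AMBARI_URL=${AMBARI_URL:-http://localhost:8080}

# count_hosts: count "host_name" entries in a /api/v1/hosts JSON response on stdin
count_hosts() {
  grep -o '"host_name"[[:space:]]*:' | wc -l | tr -d ' '
}

# check_cluster_ready_for_deploy -> 0 if all hosts registered and the HDF stack lookup succeeds
check_cluster_ready_for_deploy() {
  local auth="${AMBARI_USER:-admin}:${AMBARI_PASS:-admin}" registered code
  registered=$(curl -s -u "$auth" "${AMBARI_URL}/api/v1/hosts" | count_hosts)
  echo "registered hosts: ${registered} (expected ${host_count})"
  code=$(curl -s -o /dev/null -w '%{http_code}' -u "$auth" "${AMBARI_URL}/api/v1/stacks/HDF")
  echo "HDF stack lookup returned HTTP ${code}"
  [[ "$registered" == "$host_count" && "$code" == 200 ]]
}
```

For example, on a 4-node cluster: `host_count=4 check_cluster_ready_for_deploy`.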

5. On the same node, provide the minimum configuration required for the install by creating configuration-custom.json. You can extend this file to customize any component property that Ambari exposes:

cd /tmp/ambari-bootstrap/deploy/

tee configuration-custom.json > /dev/null << EOF

{

"configurations": {

"ams-grafana-env": {

"metrics_grafana_password": "${db_password}"

},

"streamline-common": {

"jar.storage.type": "local",

"streamline.storage.type": "mysql",

"streamline.storage.connector.connectURI": "jdbc:mysql://$(hostname -f):3306/streamline",

"registry.url" : "http://localhost:7788/api/v1",

"streamline.dashboard.url" : "http://localhost:9089",

"streamline.storage.connector.password": "${db_password}"

},

"registry-common": {

"jar.storage.type": "local",

"registry.storage.connector.connectURI": "jdbc:mysql://$(hostname -f):3306/registry",

"registry.storage.type": "mysql",

"registry.storage.connector.password": "${db_password}"

},

"nifi-registry-ambari-config": {

"nifi.registry.security.encrypt.configuration.password": "${nifi_password}"

},

"nifi-ambari-config": {

"nifi.security.encrypt.configuration.password": "${nifi_password}"

}

}

}

EOF
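Because the heredoc above is unquoted, the shell expands ${db_password}, ${nifi_password} and $(hostname -f) into the file, so a value containing a double quote or backslash would produce invalid JSON. A sketch that checks the file parses before deploying, assuming a Python interpreter is on the PATH (Ambari itself requires Python); validate_json is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: verify that a file is well-formed JSON using Python's json.tool module.
# ASSUMPTION: python3 or python is installed.

# validate_json FILE -> 0 if FILE parses as JSON, 1 if not, 2 if no Python is found
validate_json() {
  local py
  py=$(command -v python3 || command -v python) || { echo "no python found" >&2; return 2; }
  if "$py" -m json.tool "$1" > /dev/null; then
    echo "$1: valid JSON"
  else
    echo "$1: INVALID JSON" >&2
    return 1
  fi
}
```

For example: `validate_json /tmp/ambari-bootstrap/deploy/configuration-custom.json`.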

6. Then run the commands below as root to generate a recommended blueprint and deploy the cluster. Make sure to set host_count to the total number of hosts in your cluster (including the Ambari server):

sudo su

cd /tmp/ambari-bootstrap/deploy/

export host_count=<Number of total nodes>

export ambari_stack_name=HDF

export ambari_stack_version=3.1

export ambari_services="ZOOKEEPER STREAMLINE NIFI KAFKA STORM REGISTRY NIFI_REGISTRY AMBARI_METRICS"

./deploy-recommended-cluster.bash

You can now log in to Ambari at http://<Ambari host IP>:8080, sit back and watch your HDF cluster get installed!
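To follow the install from the command line instead of the UI, you can poll the status of the install request through Ambari's REST API. A sketch, assuming the blueprint deployment is request 1 on a fresh Ambari server, the cluster name is in cluster_name (HDF in step 2) and credentials are in AMBARI_USER/AMBARI_PASS; the function names are hypothetical, and the set of terminal states checked is an assumption.

```bash
#!/usr/bin/env bash
# Sketch: poll Ambari until the blueprint install request finishes.
# ASSUMPTIONS: the install is request id 1; cluster name in $cluster_name;
# credentials in AMBARI_USER/AMBARI_PASS (default admin/admin).

AMBARI_URL=${AMBARI_URL:-http://localhost:8080}

# request_status: print the "request_status" value from an Ambari request JSON document on stdin
request_status() {
  grep -o '"request_status"[[:space:]]*:[[:space:]]*"[A-Z_]*"' \
    | head -n 1 \
    | sed 's/.*"\([A-Z_]*\)"$/\1/'
}

# wait_for_install [REQUEST_ID] -> 0 when the request completes, 1 if it ends in a failure state
wait_for_install() {
  local id=${1:-1} status
  local url="${AMBARI_URL}/api/v1/clusters/${cluster_name:-HDF}/requests/${id}"
  while :; do
    status=$(curl -s -u "${AMBARI_USER:-admin}:${AMBARI_PASS:-admin}" "$url" | request_status)
    echo "request ${id}: ${status:-unknown}"
    case "$status" in
      COMPLETED) return 0 ;;
      FAILED|ABORTED|TIMEDOUT) return 1 ;;
    esac
    sleep 30
  done
}
```

For example: `wait_for_install && echo "HDF cluster installed"`.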

Notes:

a) By default, this installs NiFi on only a single node of the cluster

b) The NiFi Certificate Authority (CA) component is installed by default. This means that, if you want, you can enable SSL for NiFi out of the box by including a "nifi-ambari-ssl-config" section in the configuration-custom.json above:

"nifi-ambari-ssl-config": {

"nifi.toolkit.tls.token": "hadoop",

"nifi.node.ssl.isenabled": "true",

"nifi.security.needClientAuth": "true",

"nifi.toolkit.dn.suffix": ", OU=HORTONWORKS",

"nifi.initial.admin.identity": "CN=nifiadmin, OU=HORTONWORKS",

"content":"<property name='Node Identity 1'>CN=node-1.fqdn, OU=HORTONWORKS</property><property name='Node Identity 2'>CN=node-2.fqdn, OU=HORTONWORKS</property><property name='Node Identity 3'>CN=node-3.fqdn, OU=HORTONWORKS</property>"

},

Make sure to replace node-x.fqdn with the FQDN of each node running Nifi
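To avoid typing each identity by hand, the sketch below generates the content value from a list of FQDNs in the same format as the example. nifi_node_identities is a hypothetical helper, and the OU=HORTONWORKS suffix is taken from the example above.

```bash
#!/usr/bin/env bash
# Sketch: build the Node Identity <property> elements for nifi-ambari-ssl-config.
# Each FQDN becomes "CN=<fqdn>, OU=HORTONWORKS", numbered in the order given.

# nifi_node_identities FQDN... -> prints the <property> elements on one line
nifi_node_identities() {
  local i=1 fqdn out=""
  for fqdn in "$@"; do
    out+="<property name='Node Identity ${i}'>CN=${fqdn}, OU=HORTONWORKS</property>"
    i=$(( i + 1 ))
  done
  printf '%s\n' "$out"
}
```

For example, set `node_identities=$(nifi_node_identities node-1.fqdn node-2.fqdn node-3.fqdn)` before running the tee command, then use `"content":"${node_identities}"` in the heredoc. The single quotes in the generated XML are safe inside a JSON string.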

c) As part of the install, you can also have Ambari deploy an existing NiFi flow. First, read in the flow.xml file from an existing NiFi system (you can extract it from flow.xml.gz). For example, run the command below to read the flow for the Twitter demo into an environment variable:

twitter_flow=$(curl -L https://gist.githubusercontent.com/abajwa-hw/3a3e2b2d9fb239043a38d204c94e609f/raw)

Then include a "nifi-flow-env" section in the configuration-custom.json above when you run the tee command, so that ambari-bootstrap includes the whole flow XML in the generated blueprint:

"nifi-flow-env" : {

"properties_attributes" : { },

"properties" : {

"content" : "${twitter_flow}"

}

}
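If the flow XML contains double quotes, backslashes or newlines, pasting it verbatim between "..." in configuration-custom.json yields invalid JSON. A sketch that escapes it first, assuming Python is available; json_quote is a hypothetical helper that prints the text as a complete, already-quoted JSON string literal.

```bash
#!/usr/bin/env bash
# Sketch: turn arbitrary text on stdin into a quoted JSON string literal.
# ASSUMPTION: python3 or python is installed; json.dumps does the escaping.

# json_quote: read text on stdin, print it as a JSON string (including the surrounding quotes)
json_quote() {
  local py
  py=$(command -v python3 || command -v python) || return 2
  "$py" -c 'import json, sys; sys.stdout.write(json.dumps(sys.stdin.read()))'
}
```

For example, `twitter_flow_json=$(curl -sL https://gist.githubusercontent.com/abajwa-hw/3a3e2b2d9fb239043a38d204c94e609f/raw | json_quote)`, then write `"content" : ${twitter_flow_json}` in the heredoc without extra quotes, since json_quote already adds them.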

d) In case you would like to review the generated blueprint before it gets deployed, just set the below variable as well:

export deploy=false

The blueprint will be created under /tmp/ambari-bootstrap*/deploy/tempdir*/blueprint.json
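When reviewing with deploy=false, the sketch below finds the most recently generated blueprint under that path and prints one line per host group with its components. It assumes Python is available for parsing; latest_blueprint and show_host_groups are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Sketch: locate and summarize the generated blueprint for review.
# ASSUMPTION: python3 or python is installed for JSON parsing.

# latest_blueprint [BASE_DIR] -> path of the newest .../deploy/tempdir*/blueprint.json
latest_blueprint() {
  local base=${1:-/tmp}
  # -t sorts by modification time, newest first
  ls -1t "$base"/ambari-bootstrap*/deploy/tempdir*/blueprint.json 2>/dev/null | head -n 1
}

# show_host_groups FILE -> "group-name: COMPONENT ..." per host group, components sorted
show_host_groups() {
  local py
  py=$(command -v python3 || command -v python) || return 2
  "$py" - "$1" <<'PY'
import json, sys
with open(sys.argv[1]) as f:
    bp = json.load(f)
for group in bp["host_groups"]:
    names = sorted(c["name"] for c in group["components"])
    print("%s: %s" % (group["name"], " ".join(names)))
PY
}
```

For example: `show_host_groups "$(latest_blueprint)"`.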

Sample blueprint

A sample generated blueprint for a 4-node cluster is provided below for reference:

{

"Blueprints": {

"stack_name": "HDF",

"stack_version": "3.1"

},

"host_groups": [

{

"name": "host-group-3",

"components": [

{

"name": "NIFI_MASTER"

},

{

"name": "DRPC_SERVER"

},

{

"name": "METRICS_GRAFANA"

},

{

"name": "KAFKA_BROKER"

},

{

"name": "ZOOKEEPER_SERVER"

},

{

"name": "STREAMLINE_SERVER"

},

{

"name": "METRICS_MONITOR"

},

{

"name": "SUPERVISOR"

},

{

"name": "NIMBUS"

},

{

"name": "ZOOKEEPER_CLIENT"

},

{

"name": "NIFI_REGISTRY_MASTER"

},

{

"name": "REGISTRY_SERVER"

},

{

"name": "STORM_UI_SERVER"

}

]

},

{

"name": "host-group-2",

"components": [

{

"name": "METRICS_MONITOR"

},

{

"name": "SUPERVISOR"

},

{

"name": "ZOOKEEPER_SERVER"

}

]

},

{

"name": "host-group-1",

"components": [

{

"name": "METRICS_MONITOR"

},

{

"name": "SUPERVISOR"

},

{

"name": "NIFI_CA"

}

]

},

{

"name": "host-group-4",

"components": [

{

"name": "METRICS_MONITOR"

},

{

"name": "SUPERVISOR"

},

{

"name": "METRICS_COLLECTOR"

},

{

"name": "ZOOKEEPER_SERVER"

}

]

}

],

"configurations": [

{

"nifi-ambari-config": {

"nifi.security.encrypt.configuration.password": "StrongPassword"

}

},

{

"nifi-registry-ambari-config": {

"nifi.registry.security.encrypt.configuration.password": "StrongPassword"

}

},

{

"ams-hbase-env": {

"hbase_master_heapsize": "512",

"hbase_regionserver_heapsize": "768",

"hbase_master_xmn_size": "192"

}

},

{

"nifi-logsearch-conf": {}

},

{

"storm-site": {

"topology.metrics.consumer.register": "[{\"class\": \"org.apache.hadoop.metrics2.sink.storm.StormTimelineMetricsSink\", \"parallelism.hint\": 1, \"whitelist\": [\"kafkaOffset\\..+/\", \"__complete-latency\", \"__process-latency\", \"__execute-latency\", \"__receive\\.population$\", \"__sendqueue\\.population$\", \"__execute-count\", \"__emit-count\", \"__ack-count\", \"__fail-count\", \"memory/heap\\.usedBytes$\", \"memory/nonHeap\\.usedBytes$\", \"GC/.+\\.count$\", \"GC/.+\\.timeMs$\"]}]",

"metrics.reporter.register": "org.apache.hadoop.metrics2.sink.storm.StormTimelineMetricsReporter",

"storm.cluster.metrics.consumer.register": "[{\"class\": \"org.apache.hadoop.metrics2.sink.storm.StormTimelineMetricsReporter\"}]"

}

},

{

"registry-common": {

"registry.storage.connector.connectURI": "jdbc:mysql://ip-172-31-21-233.us-west-1.compute.internal:3306/registry",

"registry.storage.type": "mysql",

"jar.storage.type": "local",

"registry.storage.connector.password": "StrongPassword"

}

},

{

"registry-logsearch-conf": {}

},

{

"streamline-common": {

"streamline.storage.type": "mysql",

"jar.storage.type": "local",

"streamline.storage.connector.connectURI": "jdbc:mysql://ip-172-31-21-233.us-west-1.compute.internal:3306/streamline",

"streamline.dashboard.url": "http://localhost:9089",

"registry.url": "http://localhost:7788/api/v1",

"streamline.storage.connector.password": "StrongPassword"

}

},

{

"ams-hbase-site": {

"hbase.regionserver.global.memstore.upperLimit": "0.35",

"hbase.regionserver.global.memstore.lowerLimit": "0.3",

"hbase.tmp.dir": "/var/lib/ambari-metrics-collector/hbase-tmp",

"hbase.hregion.memstore.flush.size": "134217728",

"hfile.block.cache.size": "0.3",

"hbase.rootdir": "file:///var/lib/ambari-metrics-collector/hbase",

"hbase.cluster.distributed": "false",

"phoenix.coprocessor.maxMetaDataCacheSize": "20480000",

"hbase.zookeeper.property.clientPort": "61181"

}

},

{

"ams-env": {

"metrics_collector_heapsize": "512"

}

},

{

"kafka-log4j": {}

},

{

"ams-site": {

"timeline.metrics.service.webapp.address": "localhost:6188",

"timeline.metrics.cluster.aggregate.splitpoints": "kafka.network.RequestMetrics.ResponseQueueTimeMs.request.OffsetFetch.98percentile",

"timeline.metrics.host.aggregate.splitpoints": "kafka.network.RequestMetrics.ResponseQueueTimeMs.request.OffsetFetch.98percentile",

"timeline.metrics.host.aggregator.ttl": "86400",

"timeline.metrics.service.handler.thread.count": "20",

"timeline.metrics.service.watcher.disabled": "false"

}

},

{

"kafka-broker": {

"kafka.metrics.reporters": "org.apache.hadoop.metrics2.sink.kafka.KafkaTimelineMetricsReporter"

}

},

{

"ams-grafana-env": {

"metrics_grafana_password": "StrongPassword"

}

},

{

"streamline-logsearch-conf": {}

}

]

}

Sample cluster.json for a 4-node cluster:

{

"blueprint": "recommended",

"default_password": "hadoop",

"host_groups": [

{

"hosts": [

{

"fqdn": "ip-172-xx-xx-x3.us-west-1.compute.internal"

}

],

"name": "host-group-3"

},

{

"hosts": [

{

"fqdn": "ip-172-xx-xx-x2.us-west-1.compute.internal"

}

],

"name": "host-group-2"

},

{

"hosts": [

{

"fqdn": "ip-172-xx-xx-x4.us-west-1.compute.internal"

}

],

"name": "host-group-4"

},

{

"hosts": [

{

"fqdn": "ip-172-xx-xx-x1.us-west-1.compute.internal"

}

],

"name": "host-group-1"

}

]

}
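After reviewing blueprint.json and cluster.json, you can register the blueprint and create the cluster yourself with Ambari's blueprint REST API. A sketch, assuming Ambari at AMBARI_URL with AMBARI_USER/AMBARI_PASS credentials, and that the blueprint name matches the "blueprint" field in cluster.json ("recommended" in the sample above); ambari_post and deploy_blueprint are hypothetical helpers. Setting DRY_RUN=1 prints the requests instead of sending them.

```bash
#!/usr/bin/env bash
# Sketch: POST a reviewed blueprint, then a cluster creation template, to Ambari.
# ASSUMPTIONS: AMBARI_URL (default http://localhost:8080), AMBARI_USER/AMBARI_PASS
# (default admin/admin). DRY_RUN=1 prints the requests instead of sending them.

AMBARI_URL=${AMBARI_URL:-http://localhost:8080}

# ambari_post PATH FILE -> POST the contents of FILE to ${AMBARI_URL}PATH
ambari_post() {
  local path=$1 file=$2
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    echo "POST ${AMBARI_URL}${path} < ${file}"
    return 0
  fi
  # Ambari requires the X-Requested-By header on modifying requests
  curl -sS -f -u "${AMBARI_USER:-admin}:${AMBARI_PASS:-admin}" \
       -H 'X-Requested-By: ambari' -X POST \
       --data-binary "@${file}" "${AMBARI_URL}${path}"
}

# deploy_blueprint BLUEPRINT_JSON CLUSTER_JSON CLUSTER_NAME [BLUEPRINT_NAME]
deploy_blueprint() {
  local bp=$1 cluster=$2 name=$3 bp_name=${4:-recommended}
  ambari_post "/api/v1/blueprints/${bp_name}" "$bp" &&
    ambari_post "/api/v1/clusters/${name}" "$cluster"
}
```

For example: `deploy_blueprint blueprint.json cluster.json HDF`. Registering the blueprint first matters because the cluster creation request refers to it by name.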

What next?

- Now that your cluster is up, you can explore what Nifi's Ambari integration means: https://community.hortonworks.com/articles/57980/hdf-20-apache-nifi-integration-with-apache-ambarir....

- Next, you can enable SSL for Nifi: https://community.hortonworks.com/articles/58009/hdf-20-enable-ssl-for-apache-nifi-from-ambari.html