Community Articles

- Cloudera Community

- Support

- Community Articles

- Create a Hive Script to Validate Tables

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Created on 10-12-2015 10:30 AM - edited 08-17-2019 02:11 PM

Introduction

This wiki page describes the script validationHiveTablesLinuxMode.sh (GitHub: https://github.com/sourygnahtw/hadoopUtils/blob/master/scripts/hive/validationHiveTablesLinuxMode.sh).

The purpose of the script is to compare one Hive table with another, typically to validate a table generated by a Hive transformation against a source-of-truth (sqooped) table.

Motivations

My current project is the typical Hadoop project that consists of offloading a ETL process currently done in a relational database (SQLserver) to a Hadoop process.

That involves rewriting all the (many) SQL queries in the ETL process to HiveQL queries.

Some criteria of success for such project are:

- is the new Hive ETL workflow faster than the old one?

- have the output tables of the new workflow exactly the same data as in the original workflow?

- (does the customer pay less licenses and hardware with this new workflow?)

So, getting sure that the HiveQL queries written in such ETL offloading project is a key subject. Hence the need of a validation tool.

At the beginning of the current project, no validation tool existed and we were doing all the validation "manually": looking at the data in the SQLserver table and checking that they were the same in the generated Hive tables. Due to the high number of columns in each table and the number of rows (and also the fact that I already wear glasses...), this approach was quite limited.

I found no tool on the internet to do such validation. So the script described here was the first tool to do the desired validation (but not the last: Ryan Merriman, another fellow worker on the same project, has developed in java a better validation tool, although a bit more complicated to deploy).

How to use the script

Basic execution

Let's suppose that we want to validate the table "footable" and that this table is in the Hive database "bardatabase".

The process to do the validation is:

- First get a source-of-truth "footable" table in another Hive database in the same Hadoop cluster. Since this is an offloading project, we can suppose that this source-of-truth table is in the relational database. So we need to sqoop it to Hive:

- Create in Hive the "source-of-truth" database. For instance: "create database sot;"

- Create the source-of-truth "footable" table, taking care that the DDL is the same as the generated table (if the DDL is a bit different, there will be a problem with the validation. That is why we explicitly create the DDL instead of letting Sqoop creating the table for us). The easiest way to do it is to get directly the DDL from the table to validate: "use sot; create table footable like bardatabase.footable;" .

- Sqoop the table from the relational database. Ensure that the sqoop process has no error.

- If you want to validate a lot of tables, you will have to sqoop many tables. So you might be interested in the following article: Sqoop: fetching lot of tables in parallel

- Edit the 2 variables at the beginning of the script:

- origDatabase=sot

- resultDatabase=bardatabase

- On a Linux server that has access to the cluster (typically an edge node), execute the command: ./validationHiveTablesLinuxMode.sh footable

- Check the result of the validation. If the script has found some errors, you can get more information by executing the "vimdiff" command that is shown on the standard output.

Example of result

Let's show the result of a similar basic execution:

[sourygna@donzelot ~]$ ./validationHiveTablesLinuxMode.sh input_table1

#### Comparing tovalidate.input_table1 with sot.input_table1

ERROR: the number of rows is different from orig table (7868 rows) to result table (7869 rows)

Number of differences: 2 (out of 7868 rows)

To see the differences visually: vimdiff -o -c "windo set wrap foldcolumn=0" /data/sourygna/validation/tovalidate_sot/input_table1/{orig,result}/sorted.gzThe first line on the standard output describes the comparison performed by the script (this helps when you want to have the script validating many tables). The second line shows an important ERROR message. This message only appears when the number of rows does not match between each table, implying an important problem (for instance, some JOINs with problems). The third line summaries the number of differences. In our case, we see that we have less than 0.1% of the rows with some errors. Of course, the goal is to have 0 error. But still, the current number is far better than 75% of errors for instance. The last line only appears when there are some errors. It gives the vimdiff command that you must execute to have more insights on the errors.

Let's then execute this command:

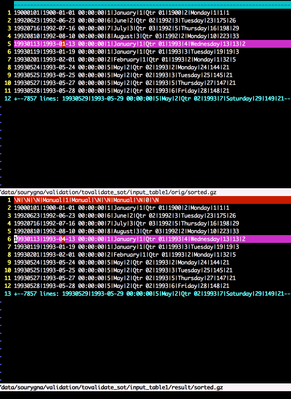

vimdiff -o -c "windo set wrap foldcolumn=0" /data/sourygna/validation/tovalidate_sot/input_table1/{orig,result}/sorted.gzWe would get a similar output:

The screen is separated in 2 parts: above you can see the data of the "orig" source-of-truth table, and below the data of the Hive generated table. In each part, each line represents a row of the table. For instance, in the source-of-truth table we can clearly see the first 11 rows. The rest of the rows in the table (7857 rows) have been folded by vimdiff because there are exactly identical to the rows in the table to validate.

The columns are separated by "|" (if such character is used in your data, then you will have to modify that character in the script).

Vimdiff helps us to see the differences using the blue,red and pink colours:

- The first row in the result table (the line with the Null (\N) characters and the "Manual" values) is totally in red. This is because this line does not exist in the source-of-truth table (the "ERROR" message we saw before referred to that line). In the first half of the screen (that shows the original table), we can see a "blue line" that has been inserted by vimdiff to show where that line would be if the 2 tables were identical.

- The 6th line in the result table (which correspond to the 5th line) in the source-of-truth table is in pink. That means that this line exists in both table but that it has some differences. The differences appear here in red: this corresponds to a problem with the month in the second column.

What motivates me to use vimdiff, is that is shows clearly the differences. And not only the differences but the whole row where the difference appeared and also some "good" rows. With such context, it is much easier for me to understand where is the error and then correct my Hive query.

Advanced execution

Let's suppose that we now have 3 tables to validate: table1, table2 and table3.

Let's also assume that the table2 has 2 special columns:

- the column bar1 is a timestamp when the query has been executed

- the column bar2 has a random value

So obviously, it makes no sense to try to validate those 2 columns. Should we do this, then the script would show 100% of rows in error for table2. What we have to do, is to exclude those 2 columns from our validation process. To exclude this, on the command line after the name of the table, we will put the list of those columns prefixed by the ":" character. Each column in this list must be separated by a "," (and if you have the bad luck to have some columns whose names have some spaces, substitute the spaces by ".").

Since we want to validate 3 tables, we will place 3 arguments on the command line:

./validationHiveTablesLinuxMode.sh table1 table2:bar1,bar2 table3

The script will first validate table1, then table2 (without trying to validate the 2 excluded columns) and finally table3.

Requisistes for a validation tool

Here are the requisites I consider important for a validation tool:

- Don't put some load on the customer's database

- Check all the rows, and all the columns if possible

- Possibility to remove certain columns (for instance, those that use "current timestamp" or the columns that are surrogate keys)

- Don't rely on indexes to compare the rows (in my project, many tables have no indexes)

- Don't suppose there is no repeated rows

- Offer a visual and comprehensive way to see the errors

- Try to handle big tables and lot of data

We can see that the script I have developed covered most of those aspects:

- Sqoop all the tables in a "source of truth" read-only Hive database. Further analysis will only be done against that Hive database (requisite 1)

- With Hive, the script does an "INSERT OVERWRITE" of both tables ("source of truth" & results) to the Linux filesystem (current problem: everything is done on the edge node: that does not scale...)

- during the Hive "insert overwrite" command, we can remove columns (requisite 3)

- sort all (requisite 2,4,5) the rows

- Comparison is done by:

- wc -l : to see if the number of rows match

- diff: to count the errors

- vimdiff (requisite 6)

- divide large file/tables into chunks, to make vimdiff faster (requisite 7 a bit covered)

Some few more notes

Parameters in the script

Apart from the variables origDatabase and resultDatabase, there are other parameters that you might want to change:

- tez.queue.name : to specify the Yarn queue to use instead of "default"

- baseDir: this directory on the Linux server where you execute the script will contain not only the reports, the logs but also the (temporary) data of the Hive tables that you compare. So it is important to get sure that you have enough space in the corresponding partition. You also might need to delete some of the data in that directory from time to time (data of the tables is compressed but it might still take some space if you do a lot of validations).

- splitNumLines: If a file/table is bigger than splitNumLines, then we split it into chunks (each chunk having splitNumLines lines at most), in order to make easier and faster the comparisons with vimdiff. vimdiff can takes some time if the tables are big (I have mainly executed this script with 64GB of RAM in the server) so you might need to decrease that number if it takes too much time.

Problems with float, double and decimals

If some columns use numerical values that are not integer (or bigInteger), then you might have a problem because the representation of the float "0.2" might not be the same between Hadoop cluster and the relational database.

So for those columns you might see a lot of "small rounding differences".

The script does not provide any solution to cope that problem. What I sometime do, is first to check with vimdiff if all the differences are small (that is for instance: 0.20001 and 0.2). If such, I then execute another time the validation script on the same table, excluding the columns with those numerical values I have manually checked.

Logging and reports

The script keeps track of all your validation activities in the baseDir directory.

In that directory, you will find a directory for each couple (source of truth database, database to validate).

In each of those subdirectories, you will find:

- the file globalReport: that lists the validations that have been performed for that couple of database (which day/time, which arguments, results)

- a directory for each table that has been validated. The content of that directory is:

- tmp directory, where you find the Hive commands executed and its logs

- orig and result directories, to store the compressed data of the tables (if both table are identical, the script then deletes the data in the result directory)

- orig.old and result.old directories, to store the compressed data of the tables of the precedent validation (the script only keeps 1 "backup")

Created on 11-23-2017 05:16 AM

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Recently I have developed a new program that allows to do the same (Hive comparisons) in a much more efficient way (totally scalable, better visualization of the differences, skew consideration etc). Please consider this new approach instead of the old one I published in this article 2 years ago:

https://github.com/bolcom/hive_compared_bq

Code is again OpenSource so I welcome feedbacks, suggestions of new features and also contributions 🙂

Created on 07-31-2018 11:08 PM

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

That's a very neat script. It comes with a cost of copying the data to local first & then doing the comparison. Imagine the data in TBs, even few hundred GBs can make the whole script go nuts.

Created on 06-28-2019 05:53 AM

- Mark as Read

- Mark as New

- Bookmark

- Permalink

- Report Inappropriate Content

Hi,

Thanks for the Script, it solves most of my automation problems where i need to compare hive tables.

few things i am trying to modify and its not working for me.

we have cluster with hive installed on multi node (load balancer is enabled for HS2) , and we are using beeline (instead of hive cli) to execute queries to get data locally. as cluster is enabled for load balancer, it is executing two queries in two different nodes and local data is now in two nodes and script not able to get the actual data and failing.

not sure how to make it work only on single node using beeline.

Cluster is kerbrose, sentry and hs2 enabled for load balancer