Cloudera Community Articles

Deploying IBM DSX on AWS

Created on 04-05-2018 12:10 AM - edited 09-16-2022 01:42 AM

Guide to deploying a 3-node DSX Local Cluster on AWS

Part 1: Deploying AWS Cluster

On your EC2 dashboard, click Launch Instance.

Step 1: Choose an Amazon Machine Image (AMI)

Select AWS Marketplace and search for CentOS, then choose the CentOS version you need (I chose CentOS 7 (x86_64) - with Updates HVM).

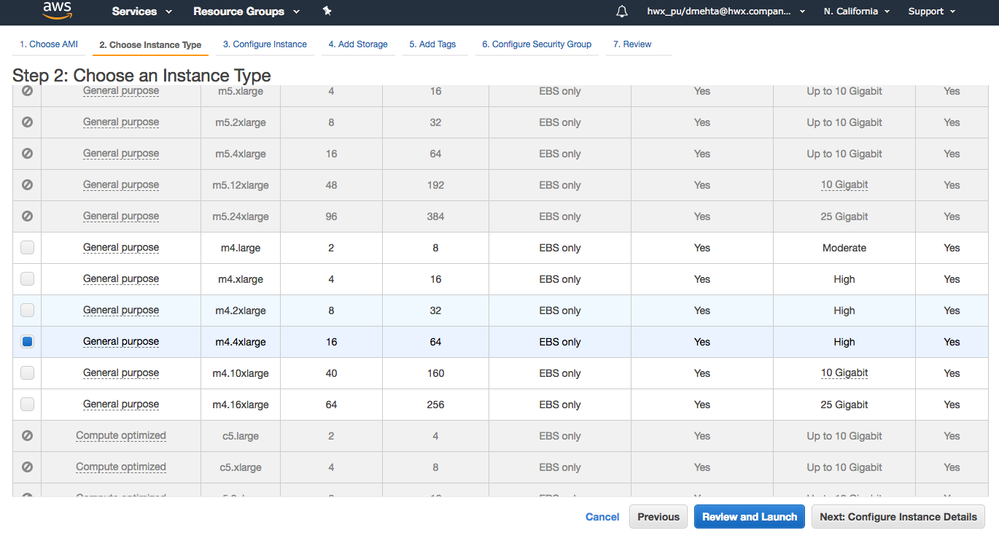

Step 2: Choose an Instance Type.

Select m4.4xlarge or m4.2xlarge

Step 3: Configure Instance Details

Set Number of Instances to 3.

Step 4: Add Storage

You need a root volume of at least 50 GB and an additional EBS volume of around 500 GB (300 GB is fine if you are deploying just for testing). DSX usually requires high IOPS, so Provisioned IOPS SSD volumes are preferred, but be advised that they are expensive because of the high IOPS.
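If you prefer the AWS CLI to the console, Steps 1 to 4 can be sketched as a single run-instances call. This is a minimal sketch under stated assumptions, not part of the original walkthrough: the function names and the AWS_CMD dry-run hook are hypothetical, the AMI ID, key name and security group ID are placeholders, the root device name /dev/sda1 should be checked against your AMI, and the io1 volume with 5000 IOPS is an assumed value.

```shell
#!/usr/bin/env bash
# Sketch only: launch the three DSX nodes with the AWS CLI.
# Function names and the AWS_CMD hook are hypothetical helpers.

# Block device mappings: root disk plus a Provisioned IOPS (io1) data disk.
# /dev/sda1 (root) and /dev/sdb (shows up as /dev/xvdb on m4) are assumptions.
dsx_block_devices() {
  local root_gb="$1" data_gb="$2" iops="$3"
  printf '[{"DeviceName":"/dev/sda1","Ebs":{"VolumeSize":%d,"VolumeType":"gp2"}},' "$root_gb"
  printf '{"DeviceName":"/dev/sdb","Ebs":{"VolumeSize":%d,"VolumeType":"io1","Iops":%d}}]\n' "$data_gb" "$iops"
}

# Set AWS_CMD=echo to print the command instead of calling AWS.
launch_dsx_nodes() {
  local ami_id="$1" key_name="$2" sg_id="$3"
  "${AWS_CMD:-aws}" ec2 run-instances \
    --image-id "$ami_id" \
    --count 3 \
    --instance-type m4.4xlarge \
    --key-name "$key_name" \
    --security-group-ids "$sg_id" \
    --block-device-mappings "$(dsx_block_devices 50 500 5000)"
}
```

For example, launch_dsx_nodes ami-xxxxxxxx my-dsx-key sg-xxxxxxxx (all placeholders). Use 300 instead of 500 for a test deployment.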

Step 5: Add Tags (optional)

Step 6: Configure Security Group

You need to set up a custom security group that includes:

- A custom TCP rule for port 6443 with source range 172.31.0.0/16 (used for internal connections)

- A rule allowing all connections between the nodes

- Restricted access from outside
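The three rules above can be sketched with aws ec2 authorize-security-group-ingress. The function name and the AWS_CMD dry-run hook are hypothetical, the group ID and admin CIDR are placeholders, and reading "restricted connection from outside" as SSH (22) plus HTTPS (443) from a single admin CIDR is an assumption; adjust it to your own policy.

```shell
#!/usr/bin/env bash
# Sketch only: add the DSX rules to an existing security group.
# Set AWS_CMD=echo to print the commands instead of calling AWS.
configure_dsx_sg() {
  local sg_id="$1" admin_cidr="$2"
  local aws="${AWS_CMD:-aws}"

  # Internal connections: TCP 6443 from the default VPC range.
  "$aws" ec2 authorize-security-group-ingress --group-id "$sg_id" \
    --protocol tcp --port 6443 --cidr 172.31.0.0/16

  # All traffic between nodes: any protocol (-1) from members of this group.
  "$aws" ec2 authorize-security-group-ingress --group-id "$sg_id" \
    --protocol -1 --source-group "$sg_id"

  # Restricted outside access (assumed): SSH and HTTPS from one admin CIDR.
  "$aws" ec2 authorize-security-group-ingress --group-id "$sg_id" \
    --protocol tcp --port 22 --cidr "$admin_cidr"
  "$aws" ec2 authorize-security-group-ingress --group-id "$sg_id" \
    --protocol tcp --port 443 --cidr "$admin_cidr"
}
```

The self-referencing --source-group rule is what lets any instance launched into this group talk to the others without listing their IPs.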

Step 7: Review Instance Launch

You will be asked to create (or choose an existing) key pair that lets you access the cluster over SSH.
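If you create the key pair from the command line instead, a minimal sketch looks like this. The function name, the key name and the AWS_CMD dry-run hook are hypothetical. The private key is written to NAME.pem and restricted to your user, because ssh refuses private keys that others can read.

```shell
#!/usr/bin/env bash
# Sketch only: create an EC2 key pair and save the private key locally.
# Set AWS_CMD=echo to print the command instead of calling AWS.
create_dsx_key_pair() {
  local name="$1"
  # --query/--output text extract only the PEM-encoded private key.
  "${AWS_CMD:-aws}" ec2 create-key-pair --key-name "$name" \
    --query 'KeyMaterial' --output text > "$name.pem"
  # ssh rejects private keys that other users can read.
  chmod 400 "$name.pem"
}
```

You then connect to each node as the default centos user, for example ssh -i dsx-key.pem centos@PUBLIC_IP.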

Part 2: Prepare the Nodes to Install DSX

After the nodes have launched successfully, designate one node as the master node. Follow the steps below to prepare the nodes for installation.

Step 1. Enable root login on each node

- SSH into each of the three nodes and run the following commands:

sudo su
sudo sed -i '/^#PermitRootLogin.*/s/^#//' /etc/ssh/sshd_config
cat /etc/ssh/sshd_config | grep PermitRootLogin

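- Now edit ~/.ssh/authorized_keys (as root, this is /root/.ssh/authorized_keys) and delete the following prefix at the start of the key line, so that the line begins with the key itself:

no-port-forwarding,no-agent-forwarding,no-X11-forwarding,command="echo 'Please login as the user \"centos\" rather than the user \"root\".';echo;sleep 10"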
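Both edits in Step 1 can be scripted. The sketch below uses hypothetical function names, assumes it runs as root on each node, and assumes the AWS key pair is an RSA key (so the key line contains ssh-rsa). It keeps a backup of authorized_keys and deletes everything before ssh-rsa on each line.

```shell
#!/usr/bin/env bash
# Sketch only: run as root on each node. Assumes an RSA key pair.

# Uncomment PermitRootLogin (same sed as above) and show the result.
enable_root_login() {
  local config="${1:-/etc/ssh/sshd_config}"
  sed -i '/^#PermitRootLogin.*/s/^#//' "$config"
  grep PermitRootLogin "$config"
}

# Drop the forced-command prefix so root can log in with the key.
strip_root_key_prefix() {
  local keyfile="${1:-/root/.ssh/authorized_keys}"
  cp "$keyfile" "$keyfile.bak"              # backup before editing
  # Greedy match removes everything up to the "ssh-rsa " key type.
  sed -i -E 's/^.*(ssh-rsa )/\1/' "$keyfile"
}
```

sshd reads its configuration only at startup, so finish with: enable_root_login && strip_root_key_prefix && systemctl restart sshd.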

Step 2. Configure passwordless SSH between all the nodes

- Create an SSH key:

ssh-keygen

- Copy the contents of ~/.ssh/id_rsa.pub into ~/.ssh/authorized_keys on all nodes.

- Test the SSH connection between all the nodes, including from each node to itself. Note: use the private IP of each node when you SSH.
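The connectivity test can be sketched as a loop over the nodes' private IPs. The function name is hypothetical. BatchMode makes ssh fail instead of prompting for a password, so any node that still needs one is reported as FAIL. StrictHostKeyChecking=no accepts unknown host keys on first contact; that is a convenience trade-off you may want to avoid.

```shell
#!/usr/bin/env bash
# Sketch only: check passwordless SSH as root to every node (including self).
# Pass the nodes' private IPs as arguments; returns non-zero if any fail.
check_ssh_nodes() {
  local ip failed=0
  for ip in "$@"; do
    # BatchMode: fail instead of prompting. ConnectTimeout: don't hang.
    if ssh -o BatchMode=yes -o ConnectTimeout=5 -o StrictHostKeyChecking=no \
         "root@$ip" true </dev/null >/dev/null 2>&1; then
      echo "OK   $ip"
    else
      echo "FAIL $ip"
      failed=$((failed + 1))
    fi
  done
  [ "$failed" -eq 0 ]
}
```

Run it on each node with all three private IPs, for example check_ssh_nodes 172.31.0.10 172.31.0.11 172.31.0.12 (example addresses).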

Step 3. Create two partitions for install and data.

- Create two partitions using the fdisk utility. You can make the partitions any size, but it is usually advised to have more than 200 GB of space for both partitions. More details here: https://content-dsxlocal.mybluemix.net/docs/content/local/requirements.html

- Confirm that the partitions were created with lsblk.
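fdisk is interactive, but it reads its answers from standard input, so the partitioning can be scripted. The sketch below uses a hypothetical function name and assumes an empty /dev/xvdb that gets a new DOS partition table: the first partition (xvdb1, later /data) gets a size you choose and the second (xvdb2, later /install) takes the rest of the disk. The keystroke order is an assumption based on the prompts of the fdisk shipped with CentOS 7; walk through it interactively once, since writing to the wrong disk destroys data.

```shell
#!/usr/bin/env bash
# Sketch only: print the fdisk keystrokes for two primary partitions.
# Order: new, primary, number 1, default start, size;
#        new, primary, number 2, default start, default end; write.
fdisk_answers() {
  local first_size="$1"     # e.g. +250G for the first partition
  printf 'n\np\n1\n\n%s\nn\np\n2\n\n\nw\n' "$first_size"
}
```

Usage: fdisk_answers +250G | fdisk /dev/xvdb, then lsblk /dev/xvdb to confirm that xvdb1 and xvdb2 exist.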

Step 4. Format the two partitions

Format both partitions with mkfs.xfs:

mkfs.xfs -f -n ftype=1 -i size=512 -n size=8192 /dev/xvdb1   # data
mkfs.xfs -f -n ftype=1 -i size=512 -n size=8192 /dev/xvdb2   # install

Step 5. Create the directories /install and /data

mkdir /install /data

Step 6. Mount the new partitions onto the locations

mount /dev/xvdb1 /data
mount /dev/xvdb2 /install

Note: add the following entries to /etc/fstab so that the partitions are mounted again after a reboot.

/dev/xvdb1 /data auto defaults,nofail,comment=cloudconfig 0 2
/dev/xvdb2 /install auto defaults,nofail,comment=cloudconfig 0 2
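Steps 5 and 6, including the /etc/fstab entries, can be combined. The sketch below uses hypothetical function names and reuses the device names and fstab options from above. The helper appends an fstab line only if it is not already present, so running it twice is harmless, and mount -a then mounts both partitions from fstab, which also surfaces a bad entry now rather than at the next reboot.

```shell
#!/usr/bin/env bash
# Sketch only: run as root after formatting /dev/xvdb1 and /dev/xvdb2.

# Append an fstab entry unless an identical line already exists.
add_fstab_entry() {
  local fstab="$1" device="$2" mountpoint="$3"
  local entry="$device $mountpoint auto defaults,nofail,comment=cloudconfig 0 2"
  grep -qxF "$entry" "$fstab" 2>/dev/null || echo "$entry" >> "$fstab"
}

prepare_dsx_mounts() {
  mkdir -p /data /install                           # Step 5
  add_fstab_entry /etc/fstab /dev/xvdb1 /data       # data partition
  add_fstab_entry /etc/fstab /dev/xvdb2 /install    # install partition
  mount -a                                          # mounts both from fstab
  df -h /data /install                              # confirm sizes
}
```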

Step 7. Install required packages

sudo yum install -y epel-release
sudo yum update -y
sudo yum install -y wget python-pip fio screen

Step 8. Improve Disk IO by warming the disks (optional)

fio --filename=/dev/xvdb --rw=read --bs=128k --iodepth=32 --ioengine=libaio --direct=1 --name=volume-initialize

Note: This will take ~40 minutes to complete

Part 3: Create Load Balancers

1. Internal TCP Load Balancer for the Nodes

Step 1: Configure Load Balancer

Step 2: Configure Routing

Make sure the port is set to 6443.

Step 3: Register Targets

Register the nodes in your cluster as targets.

Review and launch the load balancer. Once the ELB has been provisioned, get its IP address from the DNS name, for example with ping DNS_Name. Also make sure that the target nodes are attached to the ELB. Note: you might get a warning that the nodes are not healthy, but this is fine.

2. External Load Balancer to Access the Cluster over HTTPS

Step 1: Configure Load Balancer

Note that here we are using port 443.

Step 2: Configure Security Settings

You can either choose an existing ACM certificate or upload a certificate to ACM. Note: uploading the cluster's own certificate works only once you have kicked off the installation (Part 4), so in this scenario you create this load balancer after the installation has started, which is fine. One way to create an ACM certificate is to use the certificate files on a cluster node:

cd /etc/kubernetes/ssl

For the private key, use apiserver-key.pem.

For the certificate body, use apiserver.pem.

For the certificate chain, use ca.pem.
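If the AWS CLI is configured on that node (an assumption), the same three files can be imported with aws acm import-certificate instead of pasting them into the console. The function name and the AWS_CMD dry-run hook are hypothetical. ACM certificates are regional, so import into the same region as the load balancer; the command prints the certificate ARN, which you can then select in this step.

```shell
#!/usr/bin/env bash
# Sketch only: import the cluster's API server certificate into ACM.
# Set AWS_CMD=echo to print the command instead of calling AWS.
import_dsx_cert() {
  local ssl_dir="${1:-/etc/kubernetes/ssl}"
  # fileb:// passes each PEM file's raw contents to the CLI.
  "${AWS_CMD:-aws}" acm import-certificate \
    --certificate "fileb://$ssl_dir/apiserver.pem" \
    --private-key "fileb://$ssl_dir/apiserver-key.pem" \
    --certificate-chain "fileb://$ssl_dir/ca.pem"
}
```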

Step 3: Configure Security Groups

Now configure the security group. Make sure you use the same security group you used earlier to launch your instances.

Step 4: Configure Routing

Make sure to use HTTPS and set the health check path to /auth/login/login.html.
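To see what the health check will see, you can request the same page from a node yourself. This is a sketch assuming the targets serve HTTPS on port 443; the function name is hypothetical. -k skips certificate verification because the cluster certificate is not trusted by default. A healthy node should return 200; curl prints 000 when it cannot connect at all.

```shell
#!/usr/bin/env bash
# Sketch only: fetch the health-check page and print the HTTP status code.
# -k: skip certificate verification; -o /dev/null: discard the page body.
dsx_health_code() {
  local host="$1"
  curl -k -s -o /dev/null --max-time 10 \
    -w '%{http_code}' "https://$host/auth/login/login.html"
}
```

For example, dsx_health_code NODE_PRIVATE_IP, run from another node in the VPC.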

Step 5: Register Targets

Review and create the load balancer.

Part 4: Running DSX Installer

Step 1: Run pre-installation check

Before running the installation, make sure that the nodes pass some basic tests. You can get the script here. Run the script and check whether it reports any errors. Note: it might complain about the number of cores, but you can ignore that.

Step 2: Get the installer into the /install folder

Download the DSX installer (optional, not needed if you already have it locally) and change its permissions to make it executable:

chmod +x your_installer

Step 3: Create Config file for DSX Installation

Before running the installer, create a configuration file called wdp.conf. You can generate it with:

your_installer --get-conf-key --three-nodes

This creates the wdp.conf file. Edit it: replace "virtual_ip_address" with "load_balancer_ip_address" and set it to the IP address of the TCP load balancer. You also need to add two options, suppress_warning and no_path_check. These options suppress the warnings you would otherwise get during installation; any errors can be reviewed later in the error logs. The edited file should look like this:

load_balancer_ip_address=<IP address of the TCP load balancer>
ssh_key=/root/.ssh/id_rsa
ssh_port=22
node_1=<internal IP address of the master node>
node_path_1=/install
node_data_1=/data
node_2=<internal IP address of node 2>
node_path_2=/install
node_data_2=/data
node_3=<internal IP address of node 3>
node_path_3=/install
node_data_3=/data
suppress_warning=true
no_path_check=true
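The listing above can also be generated from the IP addresses so that no node entry is mistyped. This is a sketch with a hypothetical function name that writes only the keys shown above; the article edits the file the installer generated, so compare the output with that file before replacing it, in case the installer wrote keys not listed here.

```shell
#!/usr/bin/env bash
# Sketch only: write a three-node wdp.conf with the keys listed above.
# Args: output file, TCP load balancer IP, then master, node 2, node 3 IPs.
write_wdp_conf() {
  local out="$1" lb_ip="$2"; shift 2
  {
    echo "load_balancer_ip_address=$lb_ip"
    echo "ssh_key=/root/.ssh/id_rsa"
    echo "ssh_port=22"
    local i=1 ip
    for ip in "$@"; do          # one block of three keys per node
      echo "node_$i=$ip"
      echo "node_path_$i=/install"
      echo "node_data_$i=/data"
      i=$((i + 1))
    done
    echo "suppress_warning=true"
    echo "no_path_check=true"
  } > "$out"
}
```

For example: write_wdp_conf /install/wdp.conf LB_IP MASTER_IP NODE2_IP NODE3_IP (placeholders).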

Step 4: Kick off the installation

Start the installer. Note: we recommend running it inside a screen session (for example, screen -S dsx), because the installation takes about 2 hours; if your connection from the local machine drops, you can reattach with screen -r dsx.

your_installer --three-nodes

Part 5: Launch DSX and check the setup

After the installation has completed, open DSX in your web browser. The IP address listed on the installer screen will not work; instead, get the IP address of the external load balancer by pinging its DNS name (you can also put the DNS name itself in the URL).

https://your_dns_ip_address/auth/login/login.html

Note: your browser will show a security warning when you load the URL; you can ignore it and proceed.

Once you proceed, you should see the DSX login page. The default username and password for the first login are admin and password.