- Flexing your Compute Muscle with Kubernetes [Part ...

Created on 12-11-2018 04:24 PM - edited 08-17-2019 05:26 AM

This is the first of two articles (see Part 2) in which we look at Kubernetes (K8s) as the container orchestration and scheduling platform for a grid-like architectural variation of the distributed pricing engine discussed here.

Kubernetes @ Hortonworks

But wait … before we dive in … why are we talking about K8s here?

Well, if you missed KubeCon + CloudNativeCon, find out more!

Hortonworks joined CNCF with an increased commitment to cloud native solutions for big data management. Learn more about the Open Hybrid Architecture Initiative, led by Hortonworks to enable consistent data architectures on-prem and/or across cloud providers. Stay tuned to discover how K8s is used across the portfolio of Hortonworks products (DPS, HDF and HDP) to propel big data’s cloud native journey!

The Application Architecture

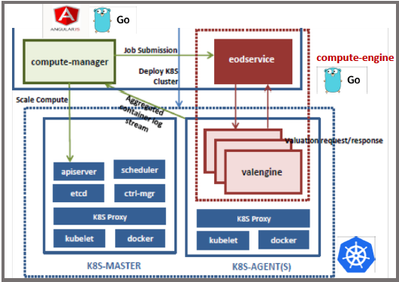

With that said, this prototype is a stateless compute application, a variation of the Dockerized Spark on YARN version described here, that comprises a set of Dockerized microservices written in Go and AngularJS and orchestrated with Kubernetes, as depicted below:

The application has two components:

compute-engine

A simple client-server application written in Go. eodservice simulates the client that submits valuation requests to a grid of remote compute engine (server) instances, valengine. Each valengine responds to requests by performing static pricing computations using the QuantLib library through a Java wrapper library, computelib. The eodservice and valengine communicate with each other using Kite RPC (from Koding).

eodservice breaks job submissions into batches of at most 100 pricing requests, and computelib prices the requests in each batch in parallel.
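The batching arithmetic can be sketched as follows. This is a minimal illustration rather than the project's actual code: the function name `splitIntoBatches` is hypothetical, and the only detail taken from the article is the maximum batch size of 100.

```go
package main

import "fmt"

// splitIntoBatches divides a number of pricing requests into batch sizes of
// at most maxBatch. The name is hypothetical and not taken from the project.
func splitIntoBatches(total, maxBatch int) []int {
	if total <= 0 || maxBatch <= 0 {
		return nil
	}
	var batches []int
	// Emit full batches while more than one batch's worth remains.
	for total > maxBatch {
		batches = append(batches, maxBatch)
		total -= maxBatch
	}
	// The final batch carries the remainder (between 1 and maxBatch).
	return append(batches, total)
}

func main() {
	fmt.Println(splitIntoBatches(250, 100)) // [100 100 50]
}
```

A request for 250 trades therefore becomes two full batches and one batch of 50.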

compute-manager

A web-based management user interface for the compute-engine that helps visualize the K8s cluster, pod deployment and state, and facilitates the following operational tasks:

- Submission of valuation jobs to the compute engine

- Scaling of compute

- Aggregated compute engine log stream

The backend is written in Go and the frontend in AngularJS.

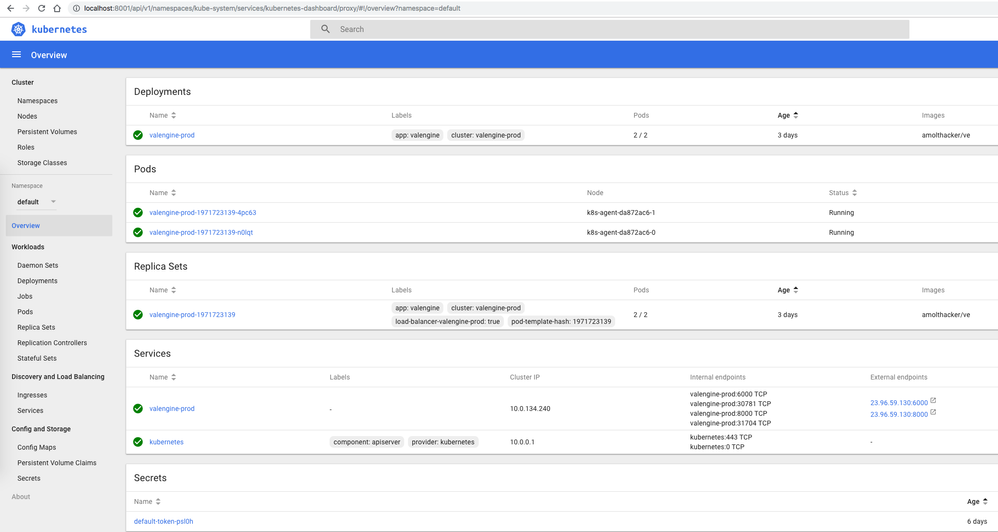

The App on K8s

So how is this deployed, scheduled and orchestrated with K8s?

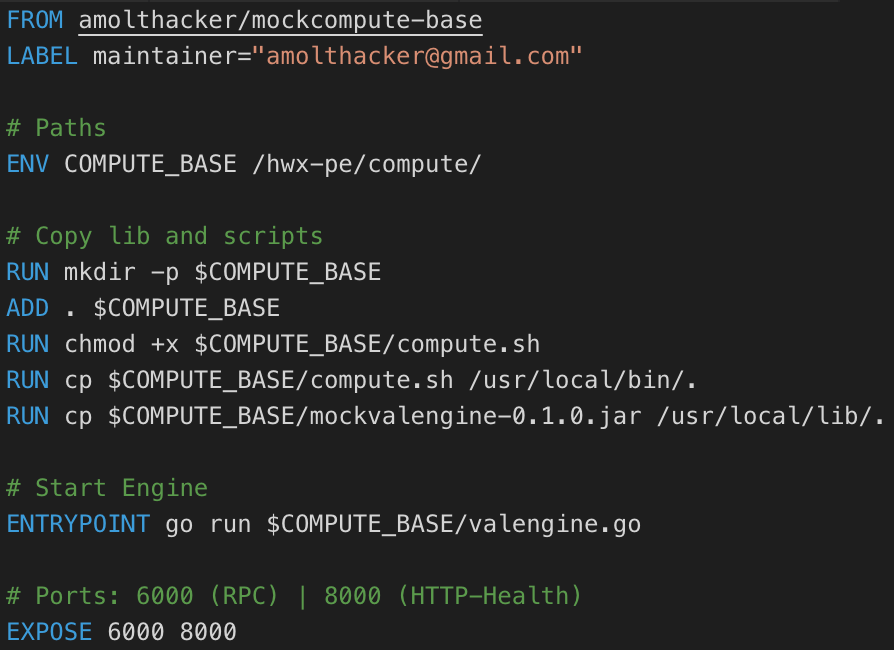

To begin with, the compute-engine's Docker image builds on top of a pre-baked image of computelib:

FROM amolthacker/mockcompute-base
LABEL maintainer="amolthacker@gmail.com"

# Paths
ENV COMPUTE_BASE /hwx-pe/compute/

# Copy lib and scripts
RUN mkdir -p $COMPUTE_BASE
ADD . $COMPUTE_BASE
RUN chmod +x $COMPUTE_BASE/compute.sh
RUN cp $COMPUTE_BASE/compute.sh /usr/local/bin/.
RUN cp $COMPUTE_BASE/mockvalengine-0.1.0.jar /usr/local/lib/.

# Start Engine
ENTRYPOINT go run $COMPUTE_BASE/valengine.go

# Ports: 6000 (RPC) | 8000 (HTTP-Health)
EXPOSE 6000 8000

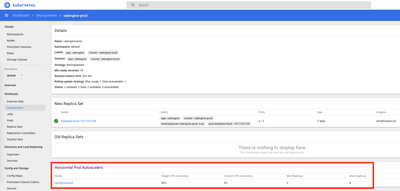

The stateless valengine pods are compute-engine Docker containers that expose port 6000 for RPC communication and port 8000 (HTTP) for health checks. They are rolled out by the ReplicaSet controller through a Deployment and fronted by a Service (valengine-prod) of type LoadBalancer, which exposes the Service through the cloud platform’s load balancer; in this case, Azure Container Service (ACS) with the Kubernetes orchestrator.

This way the jobs are load balanced across the set of available valengine pods.
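For a sense of what the HTTP health port might serve, here is a minimal sketch of a health endpoint in Go. The `/health` path, the `ok` body and the function names are assumptions for illustration; the article only states that port 8000 is used for HTTP health checks.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
)

// newHealthMux registers a health endpoint. The "/health" path and the "ok"
// body are assumptions; the article only says port 8000 serves health checks.
func newHealthMux() *http.ServeMux {
	mux := http.NewServeMux()
	mux.HandleFunc("/health", func(w http.ResponseWriter, r *http.Request) {
		w.WriteHeader(http.StatusOK)
		fmt.Fprint(w, "ok")
	})
	return mux
}

// probe exercises the endpoint in-process, the way a K8s HTTP probe would
// over the network, and returns the status code and body.
func probe() (int, string) {
	req := httptest.NewRequest(http.MethodGet, "/health", nil)
	rec := httptest.NewRecorder()
	newHealthMux().ServeHTTP(rec, req)
	return rec.Code, rec.Body.String()
}

func main() {
	code, body := probe()
	fmt.Println(code, body) // 200 ok
	// A real engine would instead serve the mux on the health port:
	// http.ListenAndServe(":8000", newHealthMux())
}
```

An endpoint like this is what lets the platform tell whether a pod should keep receiving traffic.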

Note: ACS has recently been deprecated and faces impending retirement in favor of aks-engine for IaaS Kubernetes deployments and Azure Kubernetes Service (AKS) for a managed PaaS option.

Additionally, the Deployment is configured with a Horizontal Pod Autoscaler (HPA) for auto-scaling the valengine pods (between 2 and 8 pods) once a certain CPU utilization threshold is reached.
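The scaling decision the HPA makes can be approximated with the formula given in the Kubernetes documentation, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), clamped to the configured bounds. The sketch below is a simplification that ignores the HPA's tolerance band and stabilization behavior. The 2 and 8 pod bounds come from the article, while the 50% CPU target and the function name are assumptions.

```go
package main

import (
	"fmt"
	"math"
)

// desiredReplicas applies the basic HPA scaling formula and clamps the result
// to [minPods, maxPods]. It deliberately ignores the tolerance band and
// stabilization windows that the real controller applies.
func desiredReplicas(current int, currentUtil, targetUtil float64, minPods, maxPods int) int {
	desired := int(math.Ceil(float64(current) * currentUtil / targetUtil))
	if desired < minPods {
		return minPods
	}
	if desired > maxPods {
		return maxPods
	}
	return desired
}

func main() {
	// Assumed target of 50% CPU; bounds of 2 and 8 pods as in the article.
	fmt.Println(desiredReplicas(2, 90, 50, 2, 8))  // 4
	fmt.Println(desiredReplicas(4, 200, 50, 2, 8)) // 8 (capped at the maximum)
	fmt.Println(desiredReplicas(4, 10, 50, 2, 8))  // 2 (held at the minimum)
}
```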

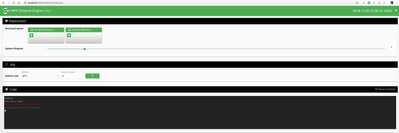

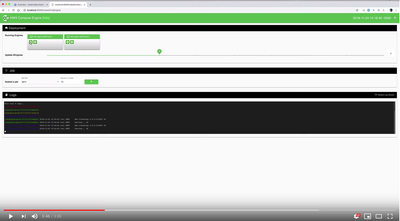

One can use the compute-manager’s web interface to:

- submit compute batches

- stream logs on the web console

- watch the engines auto-scale based on the compute workload

The Demo - https://youtu.be/OFkEZKlHIbg

The demo will:

- First go through deployment specifics

- Submit a bunch of compute jobs

- Watch the pods auto-scale out

- See the subsequent job submissions balanced across the scaled-out compute engine grid

- See the compute engine grid scale back in after a period of reduced activity

The Details

Prerequisites

- Azure CLI tool (az)

- K8s CLI Tool (kubectl)

First, we automate the provisioning of K8s infrastructure on Azure through an ARM template:

$ az group deployment create -n hwx-pe-k8s-grid-create -g k8s-pe-grid --template-file az-deploy.json --parameters @az-deploy.parameters.json

Point kubectl to this K8s cluster:

$ az acs kubernetes get-credentials --ssh-key-file ~/.ssh/az --resource-group=k8s-pe-grid --name=containerservice-k8s-pe-grid

Start the proxy:

$ nohup kubectl proxy 2>&1 < /dev/null &

Deploy the application to the K8s cluster (pods, ReplicaSet, Deployment and Service) using kubectl:

$ kubectl apply -f k8s/

Details on K8s configuration here.

We then employ the compute-manager and the tooling and library dependencies it uses to:

- Stream logs from the valengine pods onto the web console

- Talk to the K8s cluster using v1 APIs through the Go client

- Submit compute jobs to the compute engine’s eodservice
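To illustrate the kind of v1 API call involved, here is a minimal sketch that lists pods and their phases. Note the swap: the compute-manager uses the Go client, whereas this sketch uses plain `net/http` against the `kubectl proxy` started earlier (which listens on `http://localhost:8001` by default) so that it needs only the standard library. The function names and the sample pod name are hypothetical; the URL path and JSON fields follow the Kubernetes v1 PodList format.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"net/http"
)

// podList mirrors only the PodList fields this sketch needs.
type podList struct {
	Items []struct {
		Metadata struct {
			Name string `json:"name"`
		} `json:"metadata"`
		Status struct {
			Phase string `json:"phase"`
		} `json:"status"`
	} `json:"items"`
}

// parsePods maps pod name to phase from a v1 PodList JSON document.
func parsePods(data []byte) (map[string]string, error) {
	var list podList
	if err := json.Unmarshal(data, &list); err != nil {
		return nil, err
	}
	pods := make(map[string]string, len(list.Items))
	for _, item := range list.Items {
		pods[item.Metadata.Name] = item.Status.Phase
	}
	return pods, nil
}

// fetchPods queries the pods of a namespace through an API endpoint such as
// the one exposed by kubectl proxy (for example http://localhost:8001).
func fetchPods(baseURL, namespace string) (map[string]string, error) {
	resp, err := http.Get(baseURL + "/api/v1/namespaces/" + namespace + "/pods")
	if err != nil {
		return nil, err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return nil, fmt.Errorf("unexpected status %s", resp.Status)
	}
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return nil, err
	}
	return parsePods(body)
}

func main() {
	// Offline demonstration with a hand-written sample; against a cluster one
	// would call fetchPods("http://localhost:8001", "default") instead.
	sample := []byte(`{"items":[{"metadata":{"name":"valengine-a"},"status":{"phase":"Running"}}]}`)
	pods, err := parsePods(sample)
	if err != nil {
		fmt.Println("parse error:", err)
		return
	}
	for name, phase := range pods {
		fmt.Println(name, phase)
	}
}
```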

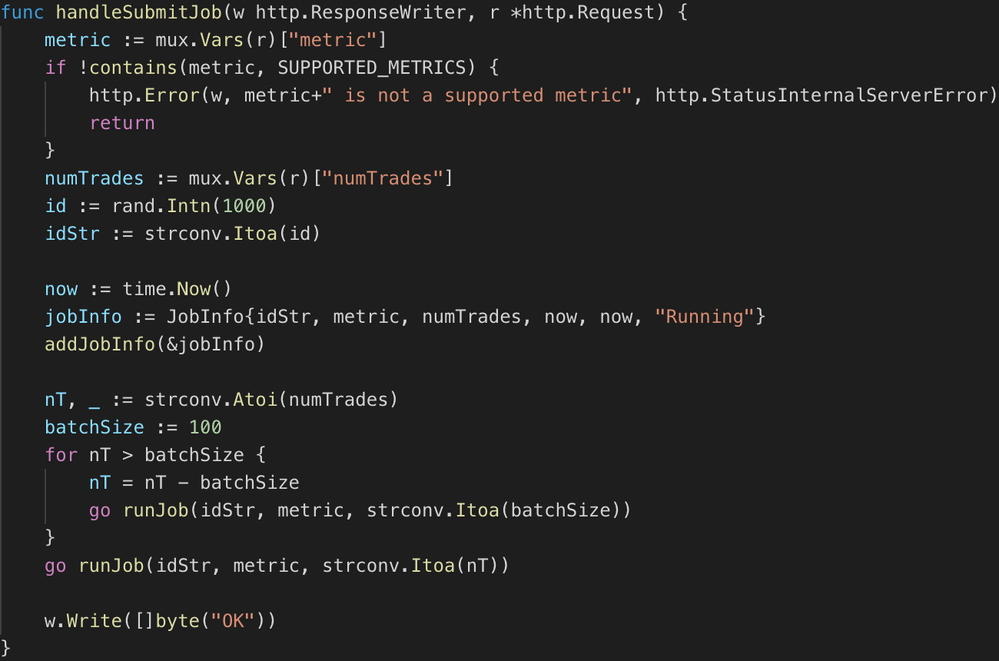

The compute-manager uses Go’s concurrency constructs, such as goroutines and channels, for concurrent asynchronous job submission, job status updates and service health checks:

// handleSubmitJob validates the request, records the job and fans it out
// to the compute engine in batches of at most batchSize trades.
func handleSubmitJob(w http.ResponseWriter, r *http.Request) {
	vars := mux.Vars(r)
	metric := vars["metric"]
	if !contains(metric, SUPPORTED_METRICS) {
		// An unsupported metric is a client error, not a server failure.
		http.Error(w, metric+" is not a supported metric", http.StatusBadRequest)
		return
	}

	numTrades := vars["numTrades"]
	nT, err := strconv.Atoi(numTrades)
	if err != nil || nT <= 0 {
		http.Error(w, "numTrades must be a positive integer", http.StatusBadRequest)
		return
	}

	idStr := strconv.Itoa(rand.Intn(1000))
	now := time.Now()
	jobInfo := JobInfo{idStr, metric, numTrades, now, now, "Running"}
	addJobInfo(&jobInfo)

	// Submit full batches concurrently, then the remainder.
	batchSize := 100
	for nT > batchSize {
		nT -= batchSize
		go runJob(idStr, metric, strconv.Itoa(batchSize))
	}
	go runJob(idStr, metric, strconv.Itoa(nT))

	w.Write([]byte("OK"))
}

// cMapIter streams every tracked job over a channel and closes the channel
// once all jobs have been sent. The map lock is held until the consumer has
// drained the channel.
func cMapIter() <-chan *JobInfo {
	c := make(chan *JobInfo)
	f := func() {
		cMap.Lock()
		defer cMap.Unlock()
		for _, job := range cMap.item {
			c <- job
		}
		close(c)
	}
	go f()
	return c
}
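The handler above starts its batches and returns immediately. Where a caller needs to know when every batch has finished, for instance to update a job's status, a common Go pattern is to pair the goroutines with a `sync.WaitGroup` and collect results over a buffered channel. The following is a minimal sketch of that pattern, not the project's implementation; `priceBatch` is a hypothetical stand-in for the RPC to a compute engine.

```go
package main

import (
	"fmt"
	"sync"
)

// priceBatch stands in for an RPC to a compute engine. It is hypothetical and
// simply reports how many trades it was asked to price.
func priceBatch(size int) int {
	return size
}

// runBatches prices every batch in its own goroutine, waits for all of them
// and returns the total number of trades priced.
func runBatches(batches []int) int {
	// Buffered so that no worker blocks on send before results are read.
	results := make(chan int, len(batches))
	var wg sync.WaitGroup
	for _, size := range batches {
		wg.Add(1)
		go func(n int) {
			defer wg.Done()
			results <- priceBatch(n)
		}(size)
	}
	wg.Wait()
	close(results)

	total := 0
	for n := range results {
		total += n
	}
	return total
}

func main() {
	fmt.Println(runBatches([]int{100, 100, 50})) // 250
}
```

Buffering the channel to the number of batches keeps the workers from blocking, so waiting before reading cannot deadlock.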

For more, check out the repo.

Coming Up Next …

We hope you enjoyed this very basic introduction to stateless microservices with Kubernetes and a celebration of Hortonworks’ participation in CNCF.

Next, in Part 2, we will look at an alternative architecture for this compute function that leverages some of the more nuanced Kubernetes features, a broader cloud native stack to facilitate proxying and API management, and autoscaling with Google Kubernetes Engine (GKE).