HiveServer2 configurations deep dive

Created on 07-15-2018 04:58 PM - edited 08-17-2019 06:58 AM

HiveServer2 (HS2) is a Thrift server that acts as a thin service layer for interacting with the HDP cluster.

It supports both JDBC and ODBC drivers, providing a SQL layer to query the data.

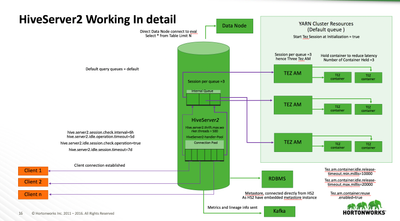

An incoming SQL query is converted to either a Tez or an MR job; the results are fetched and sent back to the client. No heavy lifting is done inside HS2. It only hosts the Tez/MR driver, scans metadata, and applies Ranger policies for authorization.

HiveServer2 maintains two types of pools:

1. Connection pool: Every incoming connection is handled by a HiveServer2-handler thread and kept in this pool. The pool itself is unbounded, but it is limited in practice by the number of threads available to service incoming requests. An incoming request is not accepted if no HiveServer2-handler thread is free to service it. The total number of threads that HS2 can spawn is controlled by the parameter hive.server2.thrift.max.worker.threads.

2. Session pool (per queue): This is the number of sessions that can be active concurrently. Whenever a connection in the connection pool executes a SQL query, an empty slot in the session pool is found and the SQL statement is executed. A session pool is maintained for each queue defined in "Default query queue". In this example only the default queue is defined, with Session per queue = 3. More than 3 concurrent queries (not connections) will have to wait until one of the slots in the session pool is free.

* There can be N connections alive in HS2, but at any point in time it can execute only 3 queries; the rest have to wait.
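
The difference between open connections and running queries can be illustrated with a small simulation. This is only a sketch of the behaviour described above, not HS2 code: a semaphore stands in for the session pool, threads stand in for client connections, and the connection count and sleep time are hypothetical values chosen for the demo.

```python
import threading
import time

SESSIONS_PER_QUEUE = 3   # "Session per queue" value from the article's example
NUM_CONNECTIONS = 8      # hypothetical number of open client connections


def simulate(num_connections=NUM_CONNECTIONS, slots=SESSIONS_PER_QUEUE):
    """Return the highest number of queries observed running at once."""
    session_pool = threading.Semaphore(slots)  # one permit per session slot
    lock = threading.Lock()
    running = 0
    peak = 0

    def run_query():
        nonlocal running, peak
        with session_pool:            # block until a session slot is free
            with lock:
                running += 1
                peak = max(peak, running)
            time.sleep(0.05)          # stand-in for the Tez job running
            with lock:
                running -= 1

    threads = [threading.Thread(target=run_query) for _ in range(num_connections)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return peak


if __name__ == "__main__":
    print("peak concurrent queries:", simulate())
```

All eight simulated connections stay alive for the whole run, but the peak never exceeds the three session slots.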

HiveServer2 connection overview

1. HS2 listens on port 10000 and accepts client connections on this port.

2. HS2 keeps connections open to Kafka to write metrics and lineage information, which is consumed by Ambari Metrics Service and Atlas.

3. HS2 also connects to DataNodes directly from time to time to service requests such as "Select * from Table Limit N".

4. HS2 also connects to the NodeManagers where the Tez AMs are spawned to service the SQL query.

5. In the process it talks to the NameNode and ResourceManager, so a switch during HA failover will impact the connection.

6. Overall, HiveServer2 talks to Atlas, Solr, Kafka, NameNode, DataNode, ResourceManager, NodeManager, and MySQL.

To make HiveServer2 more interactive and faster, the following parameters are set:

1. Start Tez session at Initialization = true: when HS2 is bootstrapped (start or restart), it allocates a Tez AM for every session. (With Default Query Queue = default and Session per queue = 3, 3 Tez AMs are spawned. With two queues, Default Query Queue = default, datascience, and Session per queue = 3, 6 Tez AMs are spawned.)

2. Every Tez AM can also be forced to spawn a fixed number of containers (pre-warm) when HS2 is bootstrapped.

With Hold containers to reduce latency = true and Number of containers held = 3, every Tez AM holds 2 containers ready to execute a SQL query.

3. With Default Query Queue = default, Session per queue = 3, Hold containers to reduce latency = true and Number of containers held = 3:

HS2 spawns 3 Tez AMs, one per session, and 2 containers for every Tez AM. Hence 9 containers overall (3 Tez AMs + 6 containers) can be seen running in the ResourceManager UI.

4. To make Tez more efficient and save bootstrapping time, the following parameters need to be configured in the Tez configs. They prevent containers from being released immediately after a job finishes: an idle container waits between the min and max timeout to service another query, and is otherwise released, freeing YARN resources.

tez.am.container.reuse.enabled=true

tez.am.container.idle.release-timeout-min.millis=10000

tez.am.container.idle.release-timeout-max.millis=20000
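
The Tez AM and container counts quoted in items 1 to 3 can be reproduced with a few lines of arithmetic. This is a rough sketch: the function names are made up for illustration, and the figure of 2 held containers per Tez AM is taken directly from the article's example rather than derived from any setting.

```python
def tez_ams(queues, sessions_per_queue):
    """One Tez AM is started per session, for every configured queue."""
    return len(queues) * sessions_per_queue


def yarn_containers(queues, sessions_per_queue, containers_per_am):
    """Total YARN containers at HS2 start: the AMs plus their held containers."""
    ams = tez_ams(queues, sessions_per_queue)
    return ams + ams * containers_per_am


if __name__ == "__main__":
    print(tez_ams(["default"], 3))                 # 3 Tez AMs
    print(tez_ams(["default", "datascience"], 3))  # 6 Tez AMs
    print(yarn_containers(["default"], 3, 2))      # 9 = 3 Tez AMs + 6 containers
```

The same calculation helps when sizing a YARN queue: every extra queue or session slot reserves capacity as soon as HS2 starts, before any query runs.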

Every connection has a memory footprint in HS2 because:

1. Every connection scans the metastore to learn about tables.

2. Every connection reserves memory to hold metrics and the Tez driver.

3. Every connection reserves memory to hold the output of the Tez execution.

Hence, if many connections are alive, HS2 may slow down for lack of heap space, so the heap needs to be tuned to the number of connections. 40 connections need roughly 16 GB of HS2 heap. Anything beyond that needs fine-tuning of GC and horizontal scaling.

Client connections can be disconnected when idle, based on the following parameters:

hive.server2.session.check.interval=6h

hive.server2.idle.operation.timeout=5d

hive.server2.idle.session.check.operation=true

hive.server2.idle.session.timeout=7d
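
To get a feel for how these values interact, the sketch below converts them to seconds and estimates how long an idle session could linger. It assumes, as a simplification, that an idle session is only closed when the periodic check runs, so the worst case is the timeout plus one check interval. The `to_seconds` helper is hypothetical and handles only the `s`, `m`, `h` and `d` suffixes used here.

```python
import re

UNIT_SECONDS = {"s": 1, "m": 60, "h": 3600, "d": 86400}


def to_seconds(value):
    """Convert a value such as '6h' or '7d' to seconds (s, m, h, d only)."""
    match = re.fullmatch(r"(\d+)([smhd])", value.strip())
    if match is None:
        raise ValueError(f"unsupported duration: {value!r}")
    return int(match.group(1)) * UNIT_SECONDS[match.group(2)]


# Values copied from the settings listed above.
settings = {
    "hive.server2.session.check.interval": "6h",
    "hive.server2.idle.operation.timeout": "5d",
    "hive.server2.idle.session.timeout": "7d",
}

check = to_seconds(settings["hive.server2.session.check.interval"])
session_timeout = to_seconds(settings["hive.server2.idle.session.timeout"])

# Assumption: a session that crosses the timeout just after a check
# survives until the next check runs.
worst_case = session_timeout + check

if __name__ == "__main__":
    print(f"checks per day: {86400 // check}")
    print(f"worst-case idle session lifetime: {worst_case / 86400:.2f} days")
```

With the values above, the check runs four times a day, so an idle session is reclaimed at most a quarter of a day after it passes the 7-day timeout under this assumption.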

HiveServer2 has an embedded metastore that interacts with the RDBMS to store table schema information. The database is available to other services through the Hive Metastore service, which HiveServer2 itself does not use directly.

Problems and solutions

1. Clients time out frequently: HS2 never denies a connection, so the timeout is a parameter set on the client, not on HS2. Most tools use a 30-second timeout; increase it to 90-150 seconds depending on your cluster usage pattern.

2. HS2 becomes unresponsive: This can happen if HS2 is undergoing frequent full GCs or is running out of heap.

It is time to bump up the heap and scale horizontally.

3. HS2 has a large number of connections: HS2 accepts connections on port 10000 and also connects to the RDBMS, DataNodes (for direct queries) and NodeManagers (to reach the AMs), so always analyse whether the excess is in incoming or outgoing connections.

4. Because HS2 is an interactive service, it is always good to give HS2 a generously sized young generation.

5. When SAS is interacting with HS2, a 20 GB heap with 6-8 GB given to the young generation is good practice. Use ParNewGC for the young generation and ConcMarkSweepGC (CMS) for the tenured generation.
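
The heap advice in item 5 maps onto standard HotSpot options. The helper below is a hypothetical sketch that assembles such an option string, assuming a Java 8 JVM where the ParNew and CMS collectors are still available. Where the string is applied (for example in the HS2 environment settings) depends on your deployment and is not shown.

```python
def hs2_jvm_opts(heap_gb, young_gen_gb):
    """Build a JVM option string for a given heap and young generation size."""
    if not 0 < young_gen_gb < heap_gb:
        raise ValueError("young generation must be smaller than the heap")
    return " ".join([
        f"-Xmx{heap_gb}g",           # maximum heap size
        f"-Xmn{young_gen_gb}g",      # young generation size
        "-XX:+UseParNewGC",          # parallel collector for the young generation
        "-XX:+UseConcMarkSweepGC",   # CMS collector for the tenured generation
    ])


if __name__ == "__main__":
    # 20 GB heap with 6 GB young generation, as suggested for SAS workloads
    print(hs2_jvm_opts(20, 6))
```

Treat the sizes as a starting point and confirm them against GC logs from your own workload.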

To know in details about the connection establishment phase of HiveServer2