Created on 01-08-2016 01:51 PM - edited 08-17-2019 01:31 PM

**** This article pertains only to HDF 1.x and Apache NiFi 0.x baseline versions. HDF 2.x+ and Apache NiFi 1.x went through a major infrastructure change in the NiFi core, so the process below does not apply to them.

Converting a Standalone NiFi instance into a NiFi Cluster

The purpose of this article is to cover the process of taking a standalone NiFi instance and converting it into a NiFi cluster. Often, as NiFi users, we start simple by creating a new dataflow on a single installed instance of NiFi. We may do this to save resources during the build and test phase, or because at the time a single server or VM provided all the resources required. Later we may want the fault tolerance that a NiFi cluster provides, or our dataflow may grow until resources become constrained and we need the additional capacity a cluster offers. It is possible to take all the work done on that single instance and convert it for use in a NiFi cluster. Your single NiFi instance can even become one of the nodes attached to that cluster.

The “work” I am referring to is the actual dataflow design you implemented on the NiFi graph/canvas, any controller-services or reporting tasks you created, and all the templates you created. This article also covers some additional considerations that deal with file/resource dependencies of your NiFi installation. It does not cover how to set up either NiFi nodes or the NiFi Cluster Manager (NCM). It simply explains the steps needed to prepare these items for installation into a NiFi cluster you have already set up but have not started yet.

The biggest difference between an NCM and a node or standalone instance is where each stores its flow.xml and templates. Both standalone instances and cluster nodes rely on a flow.xml.gz file and a templates directory. The NCM, on the other hand, relies only on a flow.tar file. Since the NCM is the manager of the NiFi cluster, its flow.tar must be created before it is started; otherwise it will start up with nothing on your graph/canvas.

The flow.xml.gz file:

Your existing standalone NiFi instance will have a flow.xml.gz file located in the conf directory of your NiFi software installation (default configuration). If it is not there, check the nifi.properties file to find the location it was changed to. This file is by far the most important file in your NiFi instance. The flow.xml.gz file includes everything you have configured/added to the NiFi graph/canvas, as well as any and all controller-services and reporting-tasks created for your NiFi. The flow.xml.gz file is nothing more than a compressed XML file.
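If you are not sure where your flow.xml.gz lives, a small helper can read the configured path out of nifi.properties. This is a minimal sketch: it assumes plain key=value lines and relies on the nifi.flow.configuration.file property found in a default 0.x nifi.properties (verify against your own file). get_prop is a hypothetical helper name, not part of NiFi.

```shell
#!/usr/bin/env bash
# Print the value of a key from a Java-style .properties file such as nifi.properties.
# Assumes plain "key=value" lines with no spaces around '=' (as in a default nifi.properties).
get_prop() {
  local file="$1" key="$2"
  # With '=' as the field separator, $1 is the text before the first '='; compare it exactly.
  # sub() then strips "key=" so the rest of the line (the value) is kept, even if it contains '='.
  # If the key appears more than once, the last occurrence wins; an absent key prints an empty line.
  awk -F'=' -v k="$key" '$1 == k { sub(/^[^=]*=/, ""); v = $0 } END { print v }' "$file"
}
```

For example, running get_prop "$NIFI_HOME/conf/nifi.properties" nifi.flow.configuration.file (NIFI_HOME being your installation directory) typically prints ./conf/flow.xml.gz on a default installation; relative paths are relative to the NiFi installation directory.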

The NCM of your NiFi cluster does not use a flow.xml.gz file but rather relies on the existence of a flow.tar file. So you can’t just copy the flow.xml.gz file from your standalone instance into the conf directory of your NCM and have everything work.

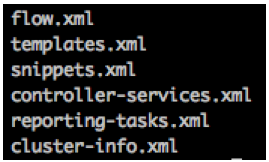

The flow.tar consists of several files:

The good news is that while we do need to create the flow.tar, we do not need to create all of the files that go inside it. NiFi will create the missing pieces for you. All we need to do is put your flow.xml inside a tar named flow.tar. You can do this by following the procedure below:

1. Get a copy of your flow.xml.gz file from your standalone instance.

2. Uncompress it using the gunzip command:

   - gunzip flow.xml.gz

3. Tar it up using the tar command:

   - tar -cvf flow.tar flow.xml

Now you have your flow.tar needed for your NiFi cluster NCM.
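The procedure above uncompresses the copied file in place. If you would rather leave the copied flow.xml.gz intact, the same result can be sketched as a small shell function. make_flow_tar is a hypothetical name, and the input and output paths are whatever you choose to pass in.

```shell
#!/usr/bin/env bash
# Build flow.tar from a copy of flow.xml.gz without modifying the .gz file.
# Usage: make_flow_tar <path to flow.xml.gz> <output directory>
make_flow_tar() {
  local src="$1" outdir="$2"
  mkdir -p "$outdir" || return 1
  # gunzip -c writes the uncompressed XML to stdout instead of replacing the .gz file.
  gunzip -c "$src" > "$outdir/flow.xml" || return 1
  # -C switches into outdir before adding files, so the archive entry is just "flow.xml".
  tar -cvf "$outdir/flow.tar" -C "$outdir" flow.xml
}
```

The resulting flow.tar is equivalent to the one produced by the manual steps: a single flow.xml entry at the top level of the archive.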

What about any templates I created on my standalone instance? How do I get them loaded into my NCM's flow.tar file?

The TEMPLATES directory:

If you created templates on your standalone instance, you will need to bring them over to your cluster, either by building them into the flow.tar before the cluster starts or by importing them after the cluster is running and has at least one connected node. If you do not have any templates, you can skip this section of the guide.

On your standalone NiFi instance there is a templates directory located inside the conf directory (default configuration). It contains an XML file ending in .template for every template you created. These files cannot simply be placed inside the flow.tar file the way we did with the flow.xml, because the templates.xml file inside the flow.tar is not actually XML but a binary file. You can either use a custom Java jar to create that binary templates.xml file, or you can import the templates manually after your cluster is up and running. Either way, make sure you save off a copy of your templates directory, since you will need it no matter which path you choose.
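Saving off the templates directory can be as simple as archiving it. The sketch below assumes the default conf/templates location described above, though any directory path can be passed in; backup_templates is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Archive a templates directory (e.g. <NiFi install>/conf/templates) into a .tar.gz.
# Usage: backup_templates <templates dir> <archive.tar.gz>
backup_templates() {
  local tdir="$1" archive="$2"
  [ -d "$tdir" ] || { echo "no templates directory at $tdir" >&2; return 1; }
  # Store the directory under its own name so it unpacks as "templates/...".
  tar -czf "$archive" -C "$(dirname "$tdir")" "$(basename "$tdir")" || return 1
  # Count the *.template entries that made it into the archive.
  echo "saved $(tar -tzf "$archive" | grep -c '\.template$') template file(s)"
}
```

The count printed at the end should match the number of templates you see in the standalone instance's UI.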

Option 1: (Use custom java code to create binary templates.xml file)

Copy the attached java jar file (templates.jar.tar.gz) to the system containing the saved off templates directory from your standalone instance. Unpack the file using the command: tar -xvzf templatejar.tar.gz

1. Run the following command to create the templates.xml file:

   - java -cp template.jar combine <path to templates dir>/*

   Note the star: the command needs a list of files, not just a directory. It creates a templates.xml file in the current directory.

2. Add the templates.xml file you just created to the flow.tar file you already created, using the following command:

   - tar -rvf flow.tar templates.xml

3. Place this flow.tar file in the conf directory of your NCM instance.
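Before placing flow.tar on the NCM, it is worth confirming that the archive contains what you expect. A minimal check, relying only on tar's standard -t listing; check_flow_tar is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Confirm that a tar archive contains each named entry.
# Usage: check_flow_tar <flow.tar> <entry> [<entry> ...]
check_flow_tar() {
  local tarfile="$1" entry listing
  shift
  listing=$(tar -tf "$tarfile") || return 1
  for entry in "$@"; do
    # -x: the whole line must match, so "flow.xml" does not match "flow.xml.bak".
    # -F: treat the entry as a fixed string, so '.' is not a regex wildcard.
    if printf '%s\n' "$listing" | grep -qxF "$entry"; then
      echo "ok: $entry"
    else
      echo "missing: $entry" >&2
      return 1
    fi
  done
}
```

For Option 1, run check_flow_tar flow.tar flow.xml templates.xml; if you plan to use Option 2 instead, check only flow.xml.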

Option 2: (Manually add templates after Cluster is running)

Once you have your NiFi cluster up and running, you can import each template from the saved off templates directory just like you would import any other template shared with you.

1. Click on the templates icon in the upper right corner of the UI.

2. Select the button in the upper right.

3. Select one of the xxxx.template files you saved off.

4. Select the button to import the file.

The file will be put inside the flow.tar and placed in the templates directory on each of your nodes.

Repeat steps 2 through 4 for every template file.

What about the controller-services and reporting-tasks I created on my standalone instance?

Controller-services and Reporting-tasks

At the beginning I mentioned that your flow.xml.gz file contains any controller services and/or reporting tasks you may have created through the UI. A little later I showed that the flow.tar has separate controller-services.xml and reporting-tasks.xml files in it. The flow.xml used to create your flow.tar still holds this information; in fact, that is where it needs to stay. The two XML files in the flow.tar are where any controller services or reporting tasks you create in the UI specifically for the “Cluster Manager” will be kept. All the reporting tasks and controller services in the flow.xml will run on every node in your cluster.

There may be controller services that you originally created on your standalone instance that make more sense running on the NCM now that you are clustered. After your NiFi cluster is running, you can create new “Cluster Manager” controller services and delete the corresponding “node” controller services. The following controller services should run on the “Cluster Manager”:

- DistributedMapCacheServer

- DistributedSetCacheServer

There are also controller services that may need to run on both nodes and your NCM. The following is a good example:

- StandardSSLContextService

  - Some other controller services that run on the NCM may need this service to function if they are configured to run securely using SSL.

The bottom line: nothing needs to be done with controller services or reporting tasks before your NiFi cluster is started.

Now for the good news… How does my existing standalone instance differ from a node in my new NiFi cluster?

Standalone NiFi versus Cluster Node

You now have everything needed to get your NCM up and running with the templates and flow from your standalone NiFi. What do you do with your standalone instance now? Structurally, there is no difference between a standalone NiFi instance and a NiFi node. A standalone instance can easily become just another node in your cluster by configuring the clustering properties at the bottom of its nifi.properties file and restarting it. In fact, you could use this standalone instance as the starting point for as many nodes as you want.

There are a few things that you need to remember when doing this. Some will apply no matter what while others depend on your specific configuration:

The things that always matter:

- Every node must have its own copy of every file declared in any processor, controller-service, or reporting task.

  - For example, some processors like MergeContent allow you to configure a Demarcation file located somewhere on your system. That file would need to exist at the same directory location on every node.

- Every node must have its nifi.properties file updated so that the following properties are populated with values unique to each node:

  - nifi.web.http.host= or nifi.web.https.host= (if secure)

- Every node must have the same value as the NCM for the following nifi.properties property:

  - nifi.sensitive.props.key=

  *** This value must match what was used on your standalone instance when the flow was created. It was used to encrypt all passwords in your processors, and NiFi will not start if it cannot decrypt those passwords.

- If the NCM exists on the same system as one of your nodes (common practice, since the NCM is a lightweight NiFi instance):

  - The NCM and the node on the same machine cannot share any port numbers. The ports must be unique across their nifi.properties files.
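The properties-file requirements above (a shared nifi.sensitive.props.key, and no shared ports between an NCM and a node on the same machine) can be checked before anything is started. This is a hypothetical pre-flight sketch, not a NiFi tool: it assumes plain key=value lines and treats any key ending in "port" with a numeric value as a port setting.

```shell
#!/usr/bin/env bash
# Pre-flight checks on nifi.properties files before starting the cluster.
# Assumes plain "key=value" lines with no spaces around '='.

# Succeeds only if nifi.sensitive.props.key has the same value in every file given.
# A file without the key counts as having an empty value.
same_sensitive_key() {
  local f distinct
  distinct=$(for f in "$@"; do
      awk -F'=' '$1 == "nifi.sensitive.props.key" { sub(/^[^=]*=/, ""); v = $0 } END { print v }' "$f"
    done | sort -u | awk 'END { print NR }')
  [ "$distinct" = "1" ]
}

# Prints port numbers that appear in both files (keys ending in "port" with numeric values).
shared_ports() {
  comm -12 \
    <(awk -F'=' '$1 ~ /port$/ && $2 ~ /^[0-9]+$/ { print $2 }' "$1" | sort -u) \
    <(awk -F'=' '$1 ~ /port$/ && $2 ~ /^[0-9]+$/ { print $2 }' "$2" | sort -u)
}
```

For example, same_sensitive_key standalone.properties ncm.properties node1.properties confirms the key matches everywhere (include the standalone instance's file), and shared_ports ncm.properties node1.properties lists port numbers that appear in both files. A node setting that deliberately points at one of the NCM's own ports will also show up in that list, so review each hit rather than treating it as an error.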

The things that might matter:

If your NiFi is configured to run securely (https) or you are using the StandardSSLContextService, there are some additional considerations, since the identical dataflow runs on every node.

- SSL based controller services:

Every server/VM used for a NiFi cluster is typically assigned its own server certificate that is used for SSL authentication. Remember that in a cluster every node runs the exact same dataflows and controller services. For example, if you have a StandardSSLContextService configured to use a keystore located at /opt/nifi/certs/server1.jks with password ABC, every node will expect to find a server1.jks file in the same place on its server, with the password ABC. Since each system gets a unique certificate, how do we work around this issue? We cannot work around the password issue, so every certificate and keystore must be created using the same password. However, every key can be unique. On each system, we can create a symbolic link that maps a generic keystore name to that system's actual keystore:

System1:

keystore.jks -> /opt/nifi/certs/server1.jks

System2:

keystore.jks -> /opt/nifi/certs/server2.jks

And so on.
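On each system, the link can be created with ln -s. The sketch below assumes the generic name lives next to the real keystores in /opt/nifi/certs, which the article does not specify; link_keystore is a hypothetical helper name. The same approach works for truststores.

```shell
#!/usr/bin/env bash
# Point a generic keystore name at this host's real keystore.
# Usage: link_keystore <real keystore> <generic link path>
link_keystore() {
  local real="$1" generic="$2"
  [ -f "$real" ] || { echo "keystore not found: $real" >&2; return 1; }
  # -s: create a symbolic link; -f: replace an existing link of the same name.
  ln -sf "$real" "$generic"
}
```

On System1 this would be link_keystore /opt/nifi/certs/server1.jks /opt/nifi/certs/keystore.jks; on System2, pass server2.jks instead, and so on.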

We can now configure our StandardSSLContextService to use keystore.jks and the matching password that every keystore/certificate was created with.

- Modify security settings in each node's nifi.properties file.

The nifi.properties file on every node needs its keystore and truststore properties modified to point to that particular node's keystore and truststore.

In addition the authority provider will need to be changed. In a standalone NiFi instance the authority provider specified by the property nifi.security.user.authority.provider= is set to file-provider. In a secure cluster, the NCM should be set to cluster-ncm-provider while each of the nodes should have it set to cluster-node-provider. Making these changes will also require modifying the associated authority-providers.xml file.
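The nifi.properties changes for a secure cluster can be applied per host with a small script. This is a sketch under stated assumptions: it uses the nifi.security.keystore, nifi.security.truststore, and nifi.security.user.authority.provider keys as they appear in a default 0.x nifi.properties (verify against your own file), and set_prop and configure_security are hypothetical helper names. It does not touch authority-providers.xml or any password properties.

```shell
#!/usr/bin/env bash
# Set key=value in a .properties file, appending the line if the key is absent.
# Note: awk -v interprets backslash escapes, so values containing '\' would need escaping.
set_prop() {
  local file="$1" key="$2" value="$3" tmp
  tmp=$(mktemp) || return 1
  awk -v k="$key" -v v="$value" '
    BEGIN { FS = "="; found = 0 }
    $1 == k { print k "=" v; found = 1; next }
    { print }
    END { if (!found) print k "=" v }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Copy back with cat so the original file keeps its ownership and permissions.
  cat "$tmp" > "$file" && rm -f "$tmp"
}

# Apply the per-host security settings described above.
# Usage: configure_security <nifi.properties> <ncm|node> <keystore path> <truststore path>
configure_security() {
  local props="$1" role="$2" keystore="$3" truststore="$4" provider
  case "$role" in
    ncm)  provider=cluster-ncm-provider ;;
    node) provider=cluster-node-provider ;;
    *)    echo "role must be ncm or node" >&2; return 1 ;;
  esac
  set_prop "$props" nifi.security.keystore "$keystore" &&
  set_prop "$props" nifi.security.truststore "$truststore" &&
  set_prop "$props" nifi.security.user.authority.provider "$provider"
}
```

For example, on the second node: configure_security conf/nifi.properties node /opt/nifi/certs/server2.jks /opt/nifi/certs/truststore.jks (the truststore file name here is only illustrative). Run it with role ncm on the NCM.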

- Modify the authority-providers.xml file:

The authority-providers.xml file is where you configure both the cluster-ncm-provider and the cluster-node-provider. There will already be commented-out sections in this file for both. The cluster-ncm-provider needs to be configured with the port to bind to and told where to find your authorized-users.xml file (this file should have been copied from your standalone instance). The cluster-node-provider simply needs to be configured with the same port number used for the NCM provider above.

Summary:

The above process may sound very involved, but it really isn’t that bad. We can sum up these steps in the following high-level checklist:

The conversion checklist:

- Save off all the templates you may have created on your standalone instance.

- Create flow.tar using flow.xml.gz from your standalone instance.

- If you have templates, use the provided templates.jar to convert them for inclusion in the above flow.tar file.

- Configure the security properties section of the nifi.properties file on the NCM and all nodes with the same value for nifi.sensitive.props.key, unique keystores, and the proper nifi.security.user.authority.provider.

- Make sure the file dependencies of all processors, controller-services, and reporting-tasks exist at the same directory paths on every node.

- Use symbolic links so that all keystores/truststores have identical filenames on every node. (If using SSL enabled controller-services or processors)

- Use same keystore/truststore passwords on every Node. (If using SSL enabled controller-services or processors)

- After the cluster is running, import each of the saved off templates (only necessary if you did not use templates.jar to create templates.xml file)