- How to setup Hive Warehouse Connector in CML (CDP ...

Created on 05-19-2020 12:22 PM - edited on 09-02-2020 04:00 PM by cjervis

This article explains how to set up the Hive Warehouse Connector (HWC) in CDP Public Cloud CML (tested with CDP Public Cloud runtime 7.1).

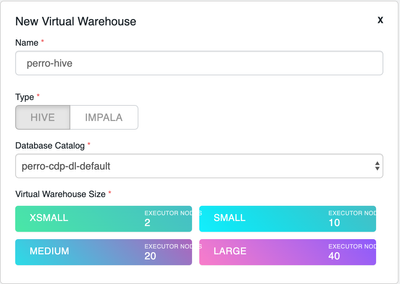

Step 1 - Start a Virtual Warehouse

- Navigate to Data Warehouses in CDP.

- If your environment has not been activated yet, activate it first.

- Once activated, provision a new Hive Virtual Warehouse:

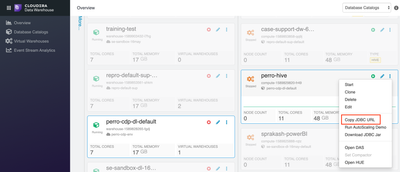

Step 2 - Find your HiveServer2 JDBC URL

- Once your Virtual Warehouse has been created, find it in the list of Virtual Warehouses.

- Click the Options icon (three vertical dots) for your Virtual Warehouse.

- Select the Copy JDBC URL option and note what is copied to the clipboard:

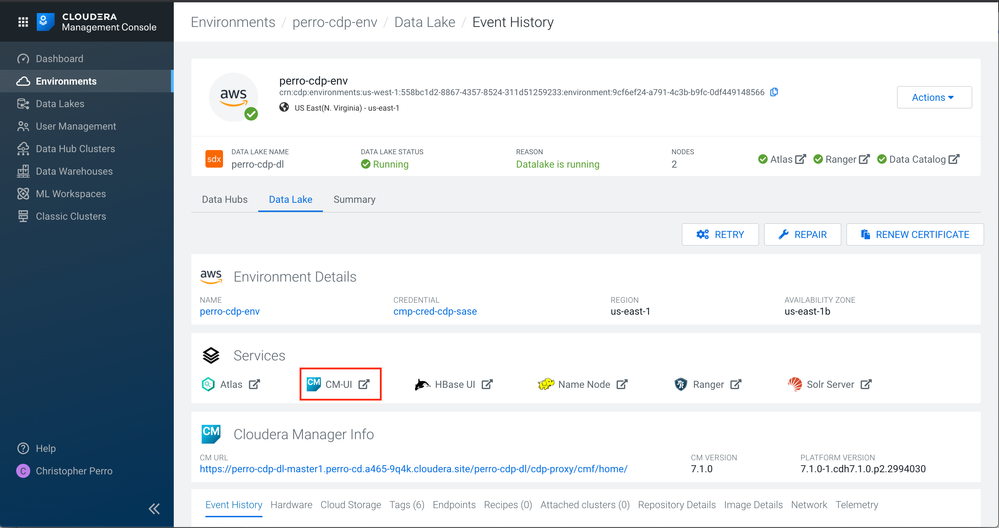

Step 3 - Find your Hive Metastore URI

- Navigate back to your environment Overview page and select the Data Lake tab.

- Click on the CM-UI Service:

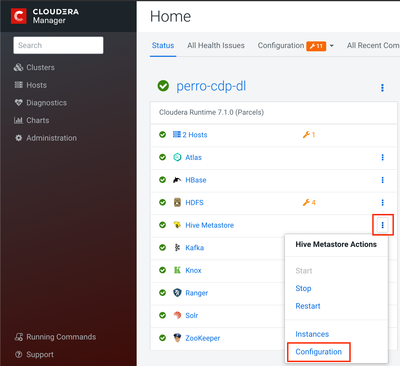

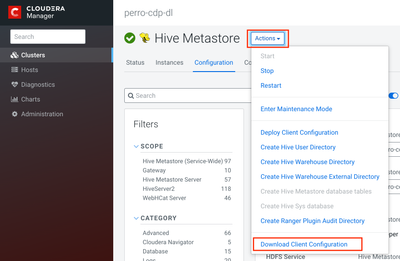

- Click on the Options icon (three vertical dots) for the Hive Metastore Service and click Configuration:

- Click the Actions dropdown menu and select the Download Client Configuration item:

- Extract the downloaded zip file, open the hive-site.xml file, and find the value of the hive.metastore.uris property. Make a note of this value.
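If you would rather not search hive-site.xml by hand, the lookup can be scripted. The sketch below is only an illustration: it relies on the standard Hadoop configuration layout (`<property>` elements holding a `<name>` and a `<value>`), the helper name `read_hadoop_property` is made up for this example, and the sample host name is a placeholder rather than a real metastore address.

```python
import xml.etree.ElementTree as ET


def read_hadoop_property(xml_source, name):
    """Return the <value> of the <property> whose <name> matches, or None."""
    root = ET.fromstring(xml_source)
    for prop in root.iter("property"):
        if prop.findtext("name") == name:
            return prop.findtext("value")
    return None


# Illustrative stand-in for the extracted hive-site.xml (host name is made up).
SAMPLE_HIVE_SITE = """<configuration>
  <property>
    <name>hive.metastore.uris</name>
    <value>thrift://metastore.example.com:9083</value>
  </property>
</configuration>
"""

metastore_uris = read_hadoop_property(SAMPLE_HIVE_SITE, "hive.metastore.uris")
print(metastore_uris)
```

To run it against the real file, pass the file contents instead of the sample, for example `open("hive-site.xml", "rb").read()`.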

Step 4 - Find the Hive Warehouse Connector Jar

A recent update has made this step unnecessary.

Navigate to your CML Workspace, select your Project, and launch a Python Workbench session. In either the terminal or the Workbench, find the Hive Warehouse Connector (HWC) jar:

find / -name "*hive-warehouse-connector*"

Note: CML no longer allows access to /dev/null, so redirecting errors to that location no longer works. The output of the above command will contain many "Permission denied" messages, but the jar you're looking for should be mixed in somewhere, likely under /usr/lib.
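Since the error output of find cannot be discarded, one alternative is to search from the Python session itself. This is a minimal sketch, not part of the original procedure: `find_files` is a hypothetical helper, and it leans on the fact that `os.walk` silently skips directories it cannot read when no `onerror` handler is given. The starting directory `/usr/lib` is only the likely location mentioned in the note.

```python
import fnmatch
import os


def find_files(root, pattern):
    """Yield paths under root whose file name matches the glob pattern.

    os.walk ignores directories it cannot list (onerror defaults to None),
    so unreadable directories do not produce any error output.
    """
    for dirpath, _dirnames, filenames in os.walk(root):
        for filename in fnmatch.filter(filenames, pattern):
            yield os.path.join(dirpath, filename)


if __name__ == "__main__":
    # Assumption: start under /usr/lib; use "/" to mirror the find command.
    for path in find_files("/usr/lib", "*hive-warehouse-connector*"):
        print(path)
```

Searching from `/` behaves like the find command but can take noticeably longer.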

Step 5 - Configure your Spark Session

The Overview Page for the Hive Warehouse Connector provides details and current limitations. The Configuration Page details the two modes described below. Note: fine-grained Ranger access controls are bypassed in the High-Performance Read Mode (i.e. LLAP / Cluster Mode).

JDBC / Client Mode

from pyspark.sql import SparkSession
from pyspark_llap import HiveWarehouseSession

# Replace each [BRACKETED] placeholder with your own values (see Steps 2 and 3).
# No longer necessary: .config("spark.jars", "[HWC_Jar_Location]")
spark = SparkSession\
    .builder\
    .appName("PythonSQL-Client")\
    .master("local[*]")\
    .config("spark.yarn.access.hadoopFileSystems","s3a:///[STORAGE_LOCATION]")\
    .config("spark.hadoop.yarn.resourcemanager.principal", "[Your_User]")\
    .config("spark.sql.hive.hiveserver2.jdbc.url", "[VIRTUAL_WAREHOUSE_HS2_JDBC_URL];user=[Your_User];password=[Your_Workload_Password]")\
    .config("spark.datasource.hive.warehouse.read.via.llap", "false")\
    .config("spark.datasource.hive.warehouse.read.jdbc.mode", "client")\
    .config("spark.datasource.hive.warehouse.metastoreUri", "[Hive_Metastore_Uris]")\
    .config("spark.datasource.hive.warehouse.load.staging.dir", "/tmp")\
    .getOrCreate()

# Build the HWC session on top of the Spark session.
hive = HiveWarehouseSession.session(spark).build()
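The JDBC URL setting embeds the user name and workload password directly in the string. To keep the password out of the script, the value could be assembled from environment variables. The sketch below is one way to do that: the helper name and the variable names `HWC_JDBC_URL`, `HWC_USER`, and `HWC_PASSWORD` are hypothetical, chosen only for this example.

```python
import os


def hs2_jdbc_url_with_credentials(jdbc_url, user, password):
    """Append ;user=...;password=... to a HiveServer2 JDBC URL."""
    # Drop any trailing ';' so the result never contains an empty ';;' segment.
    return "{};user={};password={}".format(jdbc_url.rstrip(";"), user, password)


# Hypothetical environment variable names; the fallbacks are the article's placeholders.
jdbc_url = hs2_jdbc_url_with_credentials(
    os.environ.get("HWC_JDBC_URL", "[VIRTUAL_WAREHOUSE_HS2_JDBC_URL]"),
    os.environ.get("HWC_USER", "[Your_User]"),
    os.environ.get("HWC_PASSWORD", "[Your_Workload_Password]"),
)
```

The resulting `jdbc_url` can then be passed as the value of `spark.sql.hive.hiveserver2.jdbc.url`.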

LLAP / Cluster Mode

Note: LLAP / Cluster Mode doesn't require the HiveWarehouseSession, though you are free to use it for consistency between the modes.

from pyspark.sql import SparkSession
from pyspark_llap import HiveWarehouseSession

# Replace each [BRACKETED] placeholder with your own values (see Steps 2 and 3).
# No longer necessary: .config("spark.jars", "[HWC_Jar_Location]")
spark = SparkSession\
    .builder\
    .appName("PythonSQL-Cluster")\
    .master("local[*]")\
    .config("spark.yarn.access.hadoopFileSystems","s3a:///[STORAGE_LOCATION]")\
    .config("spark.hadoop.yarn.resourcemanager.principal", "[Your_User]")\
    .config("spark.sql.hive.hiveserver2.jdbc.url", "[VIRTUAL_WAREHOUSE_HS2_JDBC_URL];user=[Your_User];password=[Your_Workload_Password]")\
    .config("spark.datasource.hive.warehouse.read.via.llap", "true")\
    .config("spark.datasource.hive.warehouse.read.jdbc.mode", "cluster")\
    .config("spark.datasource.hive.warehouse.metastoreUri", "[Hive_Metastore_Uris]")\
    .config("spark.datasource.hive.warehouse.load.staging.dir", "/tmp")\
    .config("spark.sql.hive.hwc.execution.mode", "spark")\
    .config("spark.sql.extensions", "com.qubole.spark.hiveacid.HiveAcidAutoConvertExtension")\
    .getOrCreate()

# Optional in this mode; kept for consistency with JDBC / Client Mode.
hive = HiveWarehouseSession.session(spark).build()
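The two configurations differ in only a few keys, which is easy to miss when they are read side by side. As a rough sketch, the HWC-related settings from both listings can be produced by one function. The name `hwc_settings` is hypothetical, and the storage location and principal settings are left out for brevity.

```python
def hwc_settings(mode, jdbc_url, metastore_uris):
    """Return the HWC-related Spark settings for "client" or "cluster" mode."""
    if mode not in ("client", "cluster"):
        raise ValueError("mode must be 'client' or 'cluster'")
    settings = {
        "spark.sql.hive.hiveserver2.jdbc.url": jdbc_url,
        "spark.datasource.hive.warehouse.metastoreUri": metastore_uris,
        "spark.datasource.hive.warehouse.load.staging.dir": "/tmp",
        "spark.datasource.hive.warehouse.read.jdbc.mode": mode,
        # LLAP reads are enabled only in cluster mode.
        "spark.datasource.hive.warehouse.read.via.llap":
            "true" if mode == "cluster" else "false",
    }
    if mode == "cluster":
        # These two keys appear only in the LLAP / Cluster Mode listing.
        settings["spark.sql.hive.hwc.execution.mode"] = "spark"
        settings["spark.sql.extensions"] = (
            "com.qubole.spark.hiveacid.HiveAcidAutoConvertExtension"
        )
    return settings


client = hwc_settings("client", "[JDBC_URL]", "[Hive_Metastore_Uris]")
cluster = hwc_settings("cluster", "[JDBC_URL]", "[Hive_Metastore_Uris]")
# Keys present only in cluster mode:
print(sorted(set(cluster) - set(client)))
```

Each entry can be applied to the session builder in a loop, calling `builder.config(key, value)` for every pair before `getOrCreate()`.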

Step 6 - SQL All the Things

Add your Spark SQL...

JDBC / Client Mode

from pyspark.sql.types import *

# This table has column masking and row-level filters in Ranger.
# The query below goes through the HWC, so the policies are applied.
hive.sql("select * from masking.customers").show()

# This query uses plain Spark SQL, so the column masking and row-level filter policies are not applied.
spark.sql("select * from masking.customers").show()

LLAP / Cluster Mode

from pyspark.sql.types import *

# This table has column masking and row-level filters in Ranger.
# Neither is applied to the queries below because of LLAP / Cluster Mode high-performance reads.
hive.sql("select * from masking.customers").show()
spark.sql("select * from masking.customers").show()