Offloading Mainframe Data into Hadoop

Created on 05-09-2016 10:30 PM - edited 08-17-2019 12:31 PM

Mainframe offload is becoming a common topic in many organizations as they try to reduce cost and move to next-generation technologies. Organizations run critical business applications on mainframe systems that generate and process huge volumes of data and carry a high maintenance cost. With Big Data gaining popularity and every industry trying to leverage open source technologies, organizations are now trying to move some or all of their applications to open source platforms. Since open source platforms like the Hadoop ecosystem have become more robust, flexible, and cheaper, and perform better than the traditional systems, offloading legacy systems is becoming more popular. With this in mind, this article discusses one of these topics: how to offload data from legacy systems like mainframes into next-generation technologies like Hadoop.

A successful Hadoop journey typically starts with new analytic applications, which lead to a Data Lake. As more and more applications are created that derive value from new types of data from sensors/machines, server logs, clickstreams and other sources, the Data Lake forms, with Hadoop acting as a shared service that delivers deep insight across a large, broad, diverse set of data at efficient scale. Existing enterprise systems and tools can integrate with and complement the Data Lake along the way.

As most of you know, Hadoop is an open-source software framework for storing data and running applications on clusters of commodity hardware. It provides massive storage for any kind of data, enormous processing power and the ability to handle virtually limitless concurrent tasks or jobs. The benefits of Hadoop include computing power, flexibility, low cost, horizontal scalability, fault tolerance and more.

There are multiple options used in the industry to move data from mainframe legacy systems to the Hadoop ecosystem. I will discuss the top three options that customers can leverage.

Customers have had the most success with Option 1.

Option 1: Syncsort + Hadoop (Hortonworks Data Platform)

Syncsort is a Hortonworks Certified Technology Partner and has over 40 years of experience helping organizations integrate big data. Syncsort is a good fit for customers who do not currently use Informatica in-house and who are trying to offload mainframe data into Hadoop.

Syncsort integrates with Hadoop and HDP directly through YARN, making it easier for users to write and maintain MapReduce jobs graphically. Additionally, through the YARN integration, the processing initiated by DMX-h within the HDP cluster will make better use of the resources and execute more efficiently.

Syncsort DMX-h was designed from the ground up for Hadoop - combining a long history of innovation with significant contributions Syncsort has made to improve Apache Hadoop. DMX-h enables people with a much broader range of skills — not just mainframe or MapReduce programmers — to create ETL tasks that execute within the MapReduce framework, replacing complex manual code with a powerful, easy-to-use graphical development environment. DMX-h makes it easier to read, translate, and distribute data with Hadoop.

Syncsort supports mainframe record formats including fixed, variable with block descriptor, and VSAM. Its graphical development environment makes development easier and faster, with very little coding. Its capabilities include importing COBOL copybooks and performing data transformations such as EBCDIC to ASCII conversion on the fly without coding, and it connects seamlessly to all Hadoop sources and targets. It lets developers build once and reuse many times, and there is no need to install any software on the mainframe systems.
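To illustrate what handling a variable-length record format involves, here is a minimal Python sketch. It is not how DMX-h works internally. It assumes the extract was transferred in binary with each record still carrying its 4-byte record descriptor word (RDW), whose first two bytes hold the big-endian record length including the descriptor itself, and that no block descriptor words remain in the file. The function names and sample data are made up for the example.

```python
import struct
from typing import Iterator


def iter_rdw_records(data: bytes) -> Iterator[bytes]:
    """Yield the payload of each RDW-prefixed record in `data`."""
    offset = 0
    while offset < len(data):
        if offset + 4 > len(data):
            raise ValueError(f"truncated RDW at offset {offset}")
        # First two bytes: big-endian record length, including the 4-byte RDW.
        (length,) = struct.unpack_from(">H", data, offset)
        if length < 4 or offset + length > len(data):
            raise ValueError(f"bad record length {length} at offset {offset}")
        yield data[offset + 4 : offset + length]
        offset += length


def make_rdw_record(payload: bytes) -> bytes:
    """Build one RDW-prefixed record (helper used only for the demo data)."""
    return struct.pack(">HH", len(payload) + 4, 0) + payload


# Demo data: two EBCDIC (cp037) records of different lengths.
sample = make_rdw_record("HELLO".encode("cp037")) + make_rdw_record(
    "MAINFRAME".encode("cp037")
)
records = [payload.decode("cp037") for payload in iter_rdw_records(sample)]
print(records)
```

Fixed-length files need no descriptor parsing; they can simply be sliced every N bytes, where N is the record length given by the copybook.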

For more details please check the below links:

Syncsort DMX-h - http://www.syncsort.com/en/Products/BigData/DMXh

Hortonworks Data Platform (Powered by Apache Hadoop) - http://hortonworks.com/products/hdp/

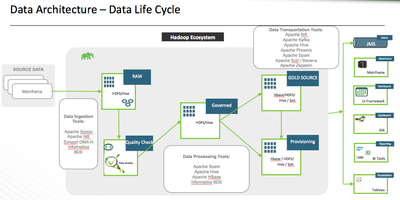

The diagram provides a generic solution architecture showing how the above-mentioned technologies can be used in any organization that wants to move data from mainframe legacy systems to Hadoop and take advantage of the Modern Data Architecture.

Option 2: Informatica PowerCenter + Apache NiFi + Hadoop (Hortonworks Data Platform)

If the customer is offloading mainframe data into Hadoop and also has Informatica in their environment for ETL processing, the customer can use Informatica PowerExchange to convert EBCDIC files to ASCII, which is easier to work with in the Hadoop environment. Informatica PowerExchange makes it easy to extract, convert (EBCDIC to ASCII or vice versa), and filter data and to make it available, without any program code, to any target database in memory, as files on an FTP site, or directly in Hadoop. Informatica PowerExchange uses the VSAM COBOL copybooks directly for the conversion from EBCDIC to ASCII.
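To make the EBCDIC-to-ASCII step concrete, here is a minimal Python sketch. It is not PowerExchange's implementation. It assumes fixed-length records that contain only character fields, the `cp037` EBCDIC code page, and a hypothetical three-field layout (`customer_id`, `name`, `state`) of the kind that would normally be derived from the COBOL copybook.

```python
from typing import Dict, List, Tuple

# Hypothetical layout: (field name, start offset, length in bytes).
# In a real offload these values come from the copybook for the file.
LAYOUT: List[Tuple[str, int, int]] = [
    ("customer_id", 0, 6),
    ("name", 6, 10),
    ("state", 16, 2),
]
RECORD_LENGTH = 18
CODE_PAGE = "cp037"  # assumption: US/Canada EBCDIC; other sites may use e.g. cp500


def decode_record(record: bytes) -> Dict[str, str]:
    """Decode one fixed-length EBCDIC record into a dict of text fields."""
    if len(record) != RECORD_LENGTH:
        raise ValueError(f"expected {RECORD_LENGTH} bytes, got {len(record)}")
    return {
        name: record[start : start + length].decode(CODE_PAGE).rstrip()
        for name, start, length in LAYOUT
    }


def decode_file(data: bytes) -> List[Dict[str, str]]:
    """Split a byte string into fixed-length records and decode each one."""
    if len(data) % RECORD_LENGTH != 0:
        raise ValueError("data length is not a multiple of the record length")
    return [
        decode_record(data[i : i + RECORD_LENGTH])
        for i in range(0, len(data), RECORD_LENGTH)
    ]


# Demo data: one 18-byte record, encoded to EBCDIC for the example.
ebcdic_bytes = "000042JANE DOE  TX".encode(CODE_PAGE)
rows = decode_file(ebcdic_bytes)
print(rows)
```

A plain code-page translation like this is only safe for character data. Binary and packed-decimal fields must be decoded by type, because passing them through a text codec corrupts their values.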

The generated ASCII files can then be transferred by FTP to any SAN, NFS or local file system, where the Hadoop ecosystem can use them.

There are different ways to move the data into Hadoop: through traditional scripting approaches such as Sqoop, Flume, Java, Pig, etc., or by using Hortonworks Data Flow (Apache NiFi), which makes data ingestion into Hadoop (Hortonworks Data Platform) easier.
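As one example of the scripting route, the sketch below wraps the standard `hdfs dfs` command-line client in Python to copy a converted file from a local landing directory into HDFS. The file and directory paths are hypothetical, and the script assumes the `hdfs` client is installed and configured on the machine that runs it. By default it only prints the commands it would run.

```python
import shlex
import subprocess
from typing import List


def build_hdfs_put_commands(local_file: str, hdfs_dir: str) -> List[List[str]]:
    """Return the hdfs CLI commands that create the target directory and upload the file."""
    return [
        ["hdfs", "dfs", "-mkdir", "-p", hdfs_dir],  # create the directory if missing
        ["hdfs", "dfs", "-put", "-f", local_file, hdfs_dir],  # -f overwrites an existing copy
    ]


def run(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print the commands, or execute them when dry_run is False."""
    for cmd in commands:
        if dry_run:
            print(shlex.join(cmd))
        else:
            subprocess.run(cmd, check=True)  # raises CalledProcessError on failure


# Hypothetical paths, used for illustration only.
commands = build_hdfs_put_commands(
    "/data/landing/customers.txt", "/landing/mainframe/customers"
)
run(commands, dry_run=True)
```

A flow-based tool such as Apache NiFi replaces this kind of script with a configured pipeline, which is usually easier to monitor and maintain once there are many files and sources.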

Now that the data is in the Hadoop environment, we can use the capabilities of Hortonworks Data Platform to perform cleansing, aggregation, transformation, machine learning, search, visualization, etc.

For more details please check the below links:

Informatica PowerExchange - https://www.informatica.com/products/data-integration/connectors-powerexchange.html#fbid=5M-ngamL9Yh

Hortonworks Data Flow (Powered by Apache NiFi) - http://hortonworks.com/products/hdf/

Hortonworks Data Platform (Powered by Apache Hadoop) - http://hortonworks.com/products/hdp/

Option 3: Informatica BDE (Big Data Edition) + Hadoop (Hortonworks Data Platform)

With Big Data being the buzzword of the day, every organization wants to leverage its capabilities, and companies are now integrating their existing software with the Big Data ecosystem so that they can do more than pull data from or push data to Hadoop. Informatica has come up with a Big Data Edition that provides an extensive library of prebuilt transformation capabilities on Hadoop, including data type conversions and string manipulations, high-performance cache-enabled lookups, joiners, sorters, routers, aggregations, and many more. Customers can rapidly develop data flows on Hadoop using a codeless graphical development environment that increases productivity and promotes reuse. This also leverages the distributed computing, fault tolerance, and parallelism that Hadoop brings to the table by default.

Customers who already use Informatica for ETL are moving towards Informatica BDE, which runs on top of Hadoop distributions such as Hortonworks Data Platform. The good part is that customers do not need to build up resources with new skills; they can leverage their existing ETL resources and build the ETL process graphically in Informatica BDE. Under the covers, the process is converted into Hive queries, which are then moved to the underlying Hadoop cluster and executed there, making use of its distributed computing and parallelism so that the process runs faster and more efficiently.

For more details please check the below links:

Informatica Big Data Edition - https://www.informatica.com/products/big-data/big-data-edition.html#fbid=5M-ngamL9Yh

Hortonworks Data Platform (Powered by Apache Hadoop) - http://hortonworks.com/products/hdp/

Created on 10-09-2017 02:18 PM

Podium Data handles native EBCDIC and COBOL copybook conversion in its ingestion framework. It not only converts EBCDIC to UTF-8, but also handles the COBOL datatype conversions (including things like packed decimals, truncated binary, and other difficult datatypes) as well as complex structures such as REDEFINES, OCCURS DEPENDING ON, and VARIABLE BLOCK.
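To show why packed decimals need more than a character-set translation, here is a minimal Python sketch of decoding a COBOL COMP-3 field. It is an illustration only, not Podium Data's implementation. It assumes the usual packed layout of two decimal digits per byte, with the low nibble of the last byte holding the sign (`0xD` or `0xB` for negative, the other values from `0xA` to `0xF` for positive or unsigned). The function name and the `scale` parameter are made up for the example.

```python
from decimal import Decimal


def unpack_comp3(raw: bytes, scale: int = 0) -> Decimal:
    """Decode a packed-decimal (COMP-3) field; `scale` is the number of implied decimal places."""
    if not raw:
        raise ValueError("empty field")
    digits = []
    for byte in raw[:-1]:
        digits.append(byte >> 4)    # high nibble: one decimal digit
        digits.append(byte & 0x0F)  # low nibble: one decimal digit
    digits.append(raw[-1] >> 4)     # last byte: high nibble is the final digit
    sign_nibble = raw[-1] & 0x0F    # last byte: low nibble is the sign
    if any(d > 9 for d in digits):
        raise ValueError("invalid digit nibble")
    if sign_nibble < 0x0A:
        raise ValueError("invalid sign nibble")
    value = int("".join(str(d) for d in digits))
    if sign_nibble in (0x0B, 0x0D):
        value = -value
    # Shift the decimal point left by `scale` places without rounding.
    return Decimal(value).scaleb(-scale)


# A PIC S9(3)V99 COMP-3 field holding +123.45 occupies three bytes: 12 34 5C.
amount = unpack_comp3(bytes([0x12, 0x34, 0x5C]), scale=2)
print(amount)
```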

Created on 08-22-2018 06:58 PM

Very good survey. I would like, however, to introduce one more alternative for your appreciation.

At ABSA we have been working on a COBOL data source for Spark, which we call Cobrix.

The performance results we have found so far are VERY encouraging, and the ease of use is way ahead of Informatica and similar tools.