Created on 09-06-2016 12:55 PM - edited 09-16-2022 01:35 AM

Setting up GPU-enabled TensorFlow to work with Zeppelin

Sometimes we want to do some quick Deep Learning prototyping using TensorFlow. We also want to take advantage of Spark for data pre-processing, scaling, and feature extraction while keeping it all in one place for a demo. This step-by-step guide walks through the process of setting up those tools to work with each other.

My setup:

- AWS GPU-enabled instance (any g2)

- Ubuntu 14.04

- HDP 2.5

This tutorial is partly based on this script: https://gist.github.com/erikbern/78ba519b97b440e10640, but it has been heavily updated and modified.

First, let’s install and set up some pre-reqs:

    sudo apt-get update
    sudo apt-get upgrade -y # choose the maintainer's version if prompted
    sudo apt-get install -y build-essential python-pip python-dev git python-numpy swig default-jdk zip zlib1g-dev

Then, disable nouveau and update initramfs:

    echo -e "blacklist nouveau\nblacklist lbm-nouveau\noptions nouveau modeset=0\nalias nouveau off\nalias lbm-nouveau off\n" | sudo tee /etc/modprobe.d/blacklist-nouveau.conf
    echo options nouveau modeset=0 | sudo tee -a /etc/modprobe.d/nouveau-kms.conf
    sudo update-initramfs -u
    sudo reboot # we do actually need to reboot it
    sudo apt-get install -y linux-image-extra-virtual
    sudo reboot
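After the second reboot, it can be worth confirming that nouveau really is gone before installing the NVIDIA driver. The sketch below is one way to check. The helper name module_listed is made up for this example, and it only assumes the usual lsmod output format (a header line followed by one module per line).

```shell
# Hypothetical helper: succeeds if the named kernel module appears in
# lsmod-style text read from stdin (the first line is the column header).
module_listed() {
    awk -v m="$1" 'NR > 1 && $1 == m { found = 1 } END { exit !found }'
}

if command -v lsmod >/dev/null 2>&1; then
    if lsmod | module_listed nouveau; then
        echo "nouveau is still loaded: re-check the blacklist file and reboot"
    else
        echo "nouveau is not loaded"
    fi
else
    echo "lsmod is not available on this machine"
fi
```

If nouveau still shows up, the NVIDIA driver installer in the next step is likely to complain about it.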

Let's also install linux-headers and linux-source:

    sudo apt-get install -y linux-source linux-headers-$(uname -r)

Now we need to get CUDA. At the time of writing, the latest version was 7.5.

    wget http://developer.download.nvidia.com/compute/cuda/7.5/Prod/local_installers/cuda_7.5.18_linux.run
    chmod +x cuda_7.5.18_linux.run
    ./cuda_7.5.18_linux.run -extract=$(pwd)/nvidia_installers

    cd nvidia_installers
    sudo ./NVIDIA-Linux-x86_64-352.39.run
    sudo modprobe nvidia
    sudo ./cuda-linux64-rel-7.5.18-19867135.run
    cd ..
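Before moving on, a quick sanity check of the driver and toolkit can save a failed build later. This is only a sketch: the helper cuda_release is a name invented here, and the nvcc path assumes the toolkit was installed to the default /usr/local/cuda location.

```shell
# Hypothetical helper: pull the release number out of "nvcc --version" style
# text on stdin, e.g. a line containing "release 7.5,".
cuda_release() {
    sed -n 's/.*release \([0-9][0-9.]*\),.*/\1/p'
}

NVCC=/usr/local/cuda/bin/nvcc   # assumed default install location
if [ -x "$NVCC" ]; then
    echo "CUDA toolkit release: $("$NVCC" --version | cuda_release)"
else
    echo "nvcc not found at $NVCC"
fi

if command -v nvidia-smi >/dev/null 2>&1; then
    nvidia-smi   # should list the GPU and the installed driver version
else
    echo "nvidia-smi not found: the driver may not be installed"
fi
```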

It would be too easy for NVIDIA if that was it. Now we need to get cuDNN from their Accelerated Computing Program. You need to apply for it here: https://developer.nvidia.com/cudnn. Approval shouldn’t take more than a couple of hours.

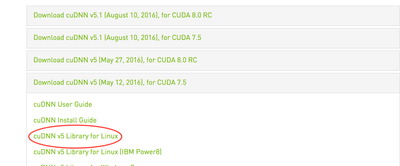

Once approved, go to download page here (https://developer.nvidia.com/rdp/cudnn-download) and get cuDNN v5 for CUDA 7.5. This one:

Then scp this file from your local machine to the remote host. Once it’s there, do:

    # unpack cuDNN and copy it into the CUDA install
    tar -xf cudnn-7.5-linux-x64-v5.0-ga.tar
    sudo cp cuda/lib64/* /usr/local/cuda/lib64/
    sudo cp cuda/include/cudnn.h /usr/local/cuda/include/
    # install Java 8, which Bazel needs
    sudo add-apt-repository ppa:webupd8team/java
    sudo apt-get update
    sudo apt-get install oracle-java8-installer
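To confirm that the cuDNN header landed in the CUDA include directory, and that it is the version you expect, you can read the version macros out of cudnn.h. A minimal sketch, assuming the header defines CUDNN_MAJOR, CUDNN_MINOR and CUDNN_PATCHLEVEL; the function name cudnn_version is made up for illustration.

```shell
# Hypothetical helper: print "major.minor.patch" from the version macros in a
# cudnn.h file, or fail if CUDNN_MAJOR is not defined there.
cudnn_version() {
    awk '$1 == "#define" && $2 == "CUDNN_MAJOR"      { maj = $3 }
         $1 == "#define" && $2 == "CUDNN_MINOR"      { min = $3 }
         $1 == "#define" && $2 == "CUDNN_PATCHLEVEL" { pat = $3 }
         END { if (maj == "") exit 1; print maj "." min "." pat }' "$1"
}

HEADER=/usr/local/cuda/include/cudnn.h
if [ -r "$HEADER" ]; then
    echo "cuDNN header version: $(cudnn_version "$HEADER")"
else
    echo "cudnn.h not found at $HEADER"
fi
```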

We will also need Bazel, the build tool used to compile TensorFlow. I used Bazel 0.3.1, but newer versions should work too.

    wget https://github.com/bazelbuild/bazel/releases/download/0.3.1/bazel-0.3.1-installer-linux-x86_64.sh
    chmod +x bazel-0.3.1-installer-linux-x86_64.sh
    ./bazel-0.3.1-installer-linux-x86_64.sh --user

Let's save environment variables to ~/.bashrc:

    echo 'export PATH="$PATH:$HOME/bin"' >> ~/.bashrc
    echo 'export LD_LIBRARY_PATH="$LD_LIBRARY_PATH:/usr/local/cuda/lib64"' >> ~/.bashrc
    echo 'export CUDA_HOME=/usr/local/cuda' >> ~/.bashrc
    source ~/.bashrc
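A quick way to see whether the new variables are active in the current shell is to test the colon-separated lists directly. The helper path_has_dir below is a hypothetical name used only for this sketch.

```shell
# Hypothetical helper: succeeds if directory $2 is one of the entries in the
# colon-separated list $1 (exact match, not a prefix match).
path_has_dir() {
    case ":$1:" in
        *":$2:"*) return 0 ;;
        *) return 1 ;;
    esac
}

if path_has_dir "$PATH" "$HOME/bin"; then
    echo "PATH contains $HOME/bin (where the Bazel --user install puts its launcher)"
else
    echo "PATH is missing $HOME/bin"
fi

if path_has_dir "${LD_LIBRARY_PATH:-}" /usr/local/cuda/lib64; then
    echo "LD_LIBRARY_PATH contains /usr/local/cuda/lib64"
else
    echo "LD_LIBRARY_PATH is missing /usr/local/cuda/lib64"
fi

echo "CUDA_HOME=${CUDA_HOME:-<unset>}"
```

If any of these report a missing entry, open a new shell or source ~/.bashrc again before starting the build.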

Get TensorFlow from GitHub:

    git clone --recurse-submodules https://github.com/tensorflow/tensorflow
    cd tensorflow

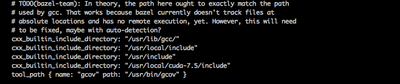

Here we need to do some manual setup. Open third_party/gpus/crosstool/CROSSTOOL and add "/usr/local/cuda-7.5/include" to cxx_builtin_include_directory property. It should look something like that:
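In the CROSSTOOL file, each entry is written as cxx_builtin_include_directory: "/some/path", so the edit can be checked with a plain text search. A small sketch, run from the root of the tensorflow checkout; crosstool_has_include is a name invented for this example.

```shell
# Hypothetical helper: succeeds if file $1 contains a
# cxx_builtin_include_directory entry for exactly the directory $2.
crosstool_has_include() {
    grep -Fq "cxx_builtin_include_directory: \"$2\"" "$1"
}

CROSSTOOL=third_party/gpus/crosstool/CROSSTOOL
if [ -f "$CROSSTOOL" ]; then
    if crosstool_has_include "$CROSSTOOL" /usr/local/cuda-7.5/include; then
        echo "CUDA include directory is listed in CROSSTOOL"
    else
        echo "CUDA include directory is NOT listed in CROSSTOOL yet"
    fi
else
    echo "CROSSTOOL not found: run this from the tensorflow source directory"
fi
```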

Then, start the configuration (answer yes when asked about GPU support) and build the pip package:

    ./configure
    bazel build -c opt --config=cuda //tensorflow/tools/pip_package:build_pip_package
    bazel-bin/tensorflow/tools/pip_package/build_pip_package /tmp/tensorflow_pkg

Finally, install the TensorFlow package from the wheel:

    sudo pip install /tmp/tensorflow_pkg/tensorflow-0.10.0rc0-cp27-none-linux_x86_64.whl
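The exact wheel file name depends on the TensorFlow revision that was cloned, so it may not match 0.10.0rc0. One way around that is to look the file up instead of typing it. This is a sketch only; find_wheel is a hypothetical helper name.

```shell
# Hypothetical helper: print the first tensorflow-*.whl found in directory $1,
# or fail if there is none.
find_wheel() {
    for whl in "$1"/tensorflow-*.whl; do
        if [ -e "$whl" ]; then
            printf '%s\n' "$whl"
            return 0
        fi
    done
    return 1
}

if WHEEL=$(find_wheel /tmp/tensorflow_pkg); then
    echo "Install with: sudo pip install $WHEEL"
else
    echo "No TensorFlow wheel found in /tmp/tensorflow_pkg"
fi

# After installing, a quick import check (run it outside the tensorflow source
# directory, otherwise the import fails):
#   python -c 'import tensorflow as tf; print(tf.__version__)'
```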

At this point, we have TensorFlow working with GPU support, but we also need to make it available to Zeppelin. To do that, we need to show Zeppelin where CUDA is installed.

    echo 'export PATH="$PATH:$HOME/bin"' | sudo tee -a /etc/zeppelin/conf/zeppelin-env.sh
    echo 'export LD_LIBRARY_PATH="$LD_LIBRARY_PATH:/usr/local/cuda/lib64"' | sudo tee -a /etc/zeppelin/conf/zeppelin-env.sh
    echo 'export CUDA_HOME=/usr/local/cuda' | sudo tee -a /etc/zeppelin/conf/zeppelin-env.sh

This should now work with Zeppelin. If Python throws an error that it can't find CUDA when importing TensorFlow, try again after restarting Zeppelin.
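If the import still fails after a restart, one thing to rule out is that the exports never made it into zeppelin-env.sh. The sketch below checks the file for the three variables. The helper name file_exports_var is invented here, and the path is the one used in this article.

```shell
# Hypothetical helper: succeeds if file $1 has a line of the form
# "export NAME=..." for the variable name given in $2.
file_exports_var() {
    grep -Eq "^[[:space:]]*export[[:space:]]+$2=" "$1"
}

ENV_FILE=/etc/zeppelin/conf/zeppelin-env.sh
if [ -r "$ENV_FILE" ]; then
    for var in PATH LD_LIBRARY_PATH CUDA_HOME; do
        if file_exports_var "$ENV_FILE" "$var"; then
            echo "$var: exported in $ENV_FILE"
        else
            echo "$var: missing from $ENV_FILE"
        fi
    done
else
    echo "$ENV_FILE is not readable on this machine"
fi
```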

Created on 10-11-2016 06:25 PM

do you have an example zeppelin notebook to share?

Created on 05-01-2017 04:26 PM

Thanks a lot for this article. What are you using to run TF on Spark in this configuration?