Support Video: How to configure log4j for Spark on...
Created on 12-10-2019 03:53 AM, edited on 12-22-2020 11:19 PM by VidyaSargur

This video explains feasible and efficient ways to troubleshoot performance issues or perform root-cause analysis on any Spark streaming application, whose logs usually grow beyond the gigabyte range. This article does not cover yarn-client mode, because yarn-cluster mode is recommended for streaming applications for reasons that are outside its scope.

Open the video on YouTube here

Spark streaming applications usually run for long periods of time before they hit issues that may cause them to shut down. In other cases, the application is not shut down at all, but it may suffer performance degradation during certain peak hours. In either case, the number and size of the logs keep growing over time. Once they grow past the gigabyte range, they become very difficult to analyze.

Spark, like many other applications, uses the log4j facility to handle logs for both the driver and the executors. It is therefore recommended to tune the log4j.properties file to use the rolling file appender (RollingFileAppender). This appender writes to a log file and rotates it when a size limit is reached. It also keeps a number of backup files as history that can later be used for analysis.
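The following sketch makes the rotation scheme concrete. It emulates, on plain files, the rollover that log4j 1.x RollingFileAppender performs once the active file reaches maxFileSize:

- the oldest backup is deleted;
- file.1 ... file.(N-1) are shifted up by one;
- the active file becomes file.1.

The function name roll_log is hypothetical. It only illustrates the file naming and is not part of log4j or Spark.

```bash
#!/usr/bin/env bash
# Hypothetical helper: emulates the log4j 1.x RollingFileAppender rollover
# naming scheme (file, file.1 ... file.N) on plain files. Illustration only.
roll_log() {
  local file="$1" max_backup="$2" i
  if (( max_backup > 0 )); then
    # The oldest backup (file.N) is discarded.
    rm -f "${file}.${max_backup}"
    # Shift file.(N-1) -> file.N, ..., file.1 -> file.2.
    for (( i = max_backup - 1; i >= 1; i-- )); do
      if [[ -e "${file}.${i}" ]]; then
        mv -f "${file}.${i}" "${file}.$(( i + 1 ))"
      fi
    done
    # The active file becomes the most recent backup.
    if [[ -e "$file" ]]; then
      mv -f "$file" "${file}.1"
    fi
  fi
  # A fresh, empty active file receives new events.
  : > "$file"
}
```

With maxBackupIndex=10, as used in the properties files of this article, each log keeps at most 11 files: the active one plus ten backups. Per container, that is roughly 11 × 100 MB ≈ 1.1 GB.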

Updating the log4j.properties file in the Spark configuration directory is not recommended, because it has a cluster-wide effect. Instead, it can be used as a template to create a dedicated log4j file for the streaming application, without affecting other jobs.

As an example, in this video the log4j properties files are created from scratch to meet the following conditions:

- Each log file has a maximum size of 100 MB. This size can be opened in most text editors while still covering a reasonable time span of Spark events.

- The latest 10 files are kept as backups for historical analysis.

- The files will be saved in a custom path.

- The log4j properties files can be reused for multiple Spark streaming applications, and the log files of each application do not overwrite each other. Custom JVM system properties are used as a workaround to achieve this.

- The Driver and the Executors each have their own log4j properties file. This gives flexibility to configure the log level for specific classes, the file location, the size, etc.

- The current and previous logs are available in the Resource Manager UI.

Procedure

- Create a new log4j-driver.properties file for the Driver:

    log4j.rootLogger=INFO, rolling
    log4j.appender.rolling=org.apache.log4j.RollingFileAppender
    log4j.appender.rolling.layout=org.apache.log4j.PatternLayout
    log4j.appender.rolling.layout.conversionPattern=[%d] %p %m (%c)%n
    log4j.appender.rolling.maxFileSize=100MB
    log4j.appender.rolling.maxBackupIndex=10
    log4j.appender.rolling.file=${spark.yarn.app.container.log.dir}/${vm.logging.name}-driver.log
    log4j.appender.rolling.encoding=UTF-8
    log4j.logger.org.apache.spark=${vm.logging.level}
    log4j.logger.org.eclipse.jetty=WARN

- The above content leverages two JVM system properties:

- vm.logging.level, which allows a different log level (for the org.apache.spark logger) to be set for each application without altering the content of the log4j properties file.

- vm.logging.name, which gives each Spark streaming application its own driver log file, as long as a different name is passed for each application.

- Similarly, create a new log4j-executor.properties file for the Executors:

    log4j.rootLogger=INFO, rolling
    log4j.appender.rolling=org.apache.log4j.RollingFileAppender
    log4j.appender.rolling.layout=org.apache.log4j.PatternLayout
    log4j.appender.rolling.layout.conversionPattern=[%d] %p %m (%c)%n
    log4j.appender.rolling.maxFileSize=100MB
    log4j.appender.rolling.maxBackupIndex=10
    log4j.appender.rolling.file=${spark.yarn.app.container.log.dir}/${vm.logging.name}-executor.log
    log4j.appender.rolling.encoding=UTF-8
    log4j.logger.org.apache.spark=${vm.logging.level}
    log4j.logger.org.eclipse.jetty=WARN

- Next, instruct Spark to use these custom log4j properties files:

- (Template) Spark on YARN - Cluster mode, log level set to DEBUG and application name "SparkStreaming-1":

    spark-submit --master yarn --deploy-mode cluster \
      --num-executors 3 \
      --files log4j-driver.properties,log4j-executor.properties \
      --conf "spark.driver.extraJavaOptions=-Dlog4j.configuration=log4j-driver.properties -Dvm.logging.level=DEBUG -Dvm.logging.name=SparkStreaming-1" \
      --conf "spark.executor.extraJavaOptions=-Dlog4j.configuration=log4j-executor.properties -Dvm.logging.level=DEBUG -Dvm.logging.name=SparkStreaming-1" \
      --class org.apache.spark.examples.SparkPi \
      /usr/hdp/current/spark2-client/examples/jars/spark-examples_*.jar 1000

- Applied to a "real life" KafkaWordCount streaming application in a Kerberized environment, the template looks like the following:

    spark-submit --master yarn --deploy-mode cluster --num-executors 3 \
      --conf "spark.driver.extraJavaOptions=-Djava.security.auth.login.config=./key.conf -Dlog4j.configuration=log4j-driver.properties -Dvm.logging.level=DEBUG -Dvm.logging.name=SparkStreaming-1" \
      --conf "spark.executor.extraJavaOptions=-Djava.security.auth.login.config=./key.conf -Dlog4j.configuration=log4j-executor.properties -Dvm.logging.level=DEBUG -Dvm.logging.name=SparkStreaming-1" \
      --files key.conf,test.keytab,log4j-driver.properties,log4j-executor.properties \
      --jars spark-streaming_2.11-2.3.0.2.6.5.0-292.jar \
      --packages org.apache.spark:spark-streaming-kafka-0-8_2.11:2.2.0.2.6.4.0-91,org.apache.spark:spark-streaming_2.11:2.2.0.2.6.4.0-91 \
      --class org.apache.spark.examples.streaming.KafkaWordCount \
      /usr/hdp/2.6.4.0-91/spark2/examples/jars/spark-examples_2.11-2.2.0.2.6.4.0-91.jar \
      node2.fqdn,node3.fqdn,node4.fqdn \
      my-consumer-group receiver 2 PLAINTEXTSASL

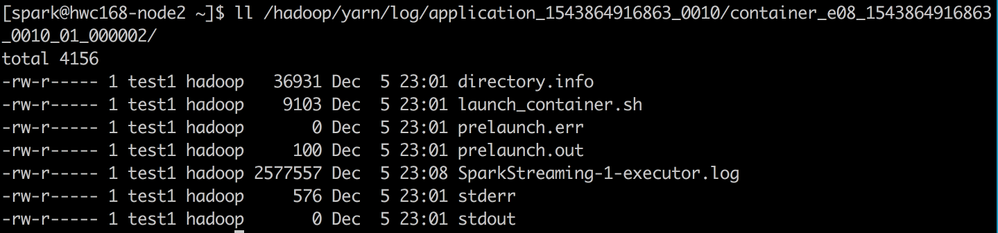
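The driver and executor properties files differ only in the log file suffix. Each application also needs only its own vm.logging.name and vm.logging.level. The steps can therefore be wrapped in a small reusable launcher.

This is a minimal bash sketch under stated assumptions, not the author's script:

- the function names write_log4j_props and submit_streaming_app and the DRY_RUN variable are hypothetical;
- only spark-submit options already used in this article are passed;
- the Kerberos-specific options (key.conf, keytab, --jars, --packages) are left out for brevity.

```bash
#!/usr/bin/env bash

# Hypothetical helper: writes log4j-<role>.properties (role = driver|executor)
# with the same content as the files above. The \${...} placeholders are
# escaped so they are written literally and resolved by log4j at runtime.
write_log4j_props() {
  local role="$1"
  cat > "log4j-${role}.properties" <<EOF
log4j.rootLogger=INFO, rolling
log4j.appender.rolling=org.apache.log4j.RollingFileAppender
log4j.appender.rolling.layout=org.apache.log4j.PatternLayout
log4j.appender.rolling.layout.conversionPattern=[%d] %p %m (%c)%n
log4j.appender.rolling.maxFileSize=100MB
log4j.appender.rolling.maxBackupIndex=10
log4j.appender.rolling.file=\${spark.yarn.app.container.log.dir}/\${vm.logging.name}-${role}.log
log4j.appender.rolling.encoding=UTF-8
log4j.logger.org.apache.spark=\${vm.logging.level}
log4j.logger.org.eclipse.jetty=WARN
EOF
}

# Hypothetical launcher: one call per streaming application. app_name keeps
# the log files of different applications apart; level sets vm.logging.level.
submit_streaming_app() {
  local app_name="$1" level="$2" main_class="$3" app_jar="$4"
  shift 4  # remaining arguments are passed to the application itself
  local -a cmd=(
    spark-submit --master yarn --deploy-mode cluster
    --num-executors 3
    --files log4j-driver.properties,log4j-executor.properties
    --conf "spark.driver.extraJavaOptions=-Dlog4j.configuration=log4j-driver.properties -Dvm.logging.level=${level} -Dvm.logging.name=${app_name}"
    --conf "spark.executor.extraJavaOptions=-Dlog4j.configuration=log4j-executor.properties -Dvm.logging.level=${level} -Dvm.logging.name=${app_name}"
    --class "$main_class"
    "$app_jar" "$@"
  )
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    # Print one argument per line instead of submitting.
    printf '%s\n' "${cmd[@]}"
  else
    "${cmd[@]}"
  fi
}

# Example usage (commented out so that sourcing this file submits nothing):
# write_log4j_props driver
# write_log4j_props executor
# submit_streaming_app SparkStreaming-1 DEBUG org.apache.spark.examples.SparkPi \
#   /usr/hdp/current/spark2-client/examples/jars/spark-examples_*.jar 1000
```

Building the command as a bash array keeps each quoted extraJavaOptions value as a single argument. This avoids accidental word splitting when the command is assembled programmatically.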

After the Spark streaming application is started, the following is listed on the NodeManager nodes where an executor is launched:

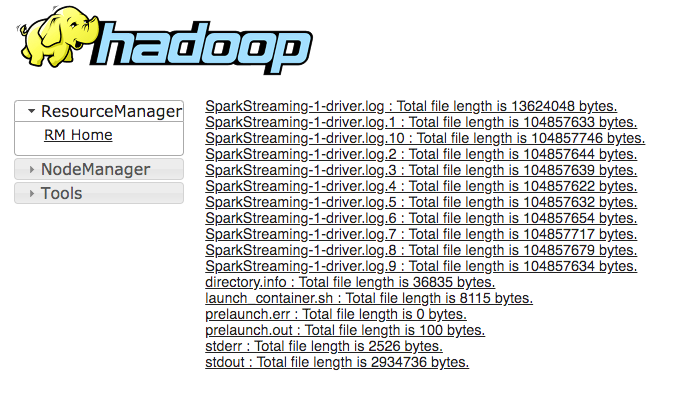

This makes it easier to find and collect the necessary executor logs. The Resource Manager UI also lists the current log and any previous (backup) files.
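A small search over the local container log directories is often enough to collect the active and rolled-over files of one application directly on a NodeManager host. This is a minimal sketch with these assumptions:

- the log root is one of the directories configured in yarn.nodemanager.log-dirs, passed as an argument because its value differs per cluster;
- collect_app_logs is a hypothetical name;
- the example path in the comment is only illustrative.

```bash
#!/usr/bin/env bash
# Hypothetical helper: lists the active log and its numbered backups for one
# application name (the vm.logging.name value) under a NodeManager log root,
# e.g. one of the directories in yarn.nodemanager.log-dirs (cluster-specific).
collect_app_logs() {
  local log_root="$1" app_name="$2" role="${3:-executor}"
  # Matches <name>-<role>.log and its backups <name>-<role>.log.1 ... .N
  find "$log_root" -type f \
    \( -name "${app_name}-${role}.log" -o -name "${app_name}-${role}.log.[0-9]*" \) \
    | sort
}

# Example usage (illustrative path; GNU tar reads the file list from stdin):
# collect_app_logs /hadoop/yarn/log SparkStreaming-1 executor \
#   | tar -czf SparkStreaming-1-executor-logs.tar.gz -T -
```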

Created on 05-28-2020 12:04 AM

In which directory should I put log4j-driver.properties/log4j-executor.properties?

Created on 06-02-2020 07:22 AM

The log4j-driver.properties/log4j-executor.properties files can be anywhere in your filesystem. Just make sure to reference them from the right location in the --files argument:

--files key.conf,test.keytab,/path/to/log4j-driver.properties,/path/to/log4j-executor.properties

If you have a workspace in your home directory, the files can safely be located in your current path. When you run the spark-submit client, --files looks for both log4j-driver.properties and log4j-executor.properties in the current working directory (CWD) unless a path is specified.
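Relative entries in --files are resolved against the directory spark-submit is launched from. A quick pre-flight check can therefore catch a missing file before the job is submitted.

This is a minimal sketch:

- check_local_files is a hypothetical helper;
- entries with a URI scheme (for example hdfs://) are skipped, because they are not local paths;
- an optional "#alias" suffix is ignored.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check for a comma-separated --files value.
# Relative paths are checked against the current working directory.
check_local_files() {
  local list="$1" entry path missing=0
  local -a entries
  IFS=',' read -r -a entries <<<"$list"
  for entry in "${entries[@]}"; do
    path="${entry%%#*}"                  # drop an optional "#alias" suffix
    [[ -z "$path" ]] && continue         # tolerate empty entries
    [[ "$path" == *://* ]] && continue   # remote URIs (hdfs://...) are not checked
    if [[ ! -f "$path" ]]; then
      echo "missing: $path (relative to $PWD)" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example usage:
# check_local_files key.conf,test.keytab,log4j-driver.properties,log4j-executor.properties \
#   && spark-submit ...
```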

Created on 07-15-2021 07:40 AM

For cluster mode, the files can also be placed in an HDFS location and referenced from there in the --files argument of the spark-submit script:

--files hdfs://namenode:8020/log4j-driver.properties#log4j-driver.properties
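The "#name" suffix sets the name under which YARN localizes the file in the container working directory. As a result, -Dlog4j.configuration=log4j-driver.properties keeps working unchanged.

Staging both files once and building the --files value can be sketched as follows:

- the HDFS directory and the helper names are assumptions;
- hdfs dfs -mkdir -p and hdfs dfs -put -f are the standard HDFS shell commands for creating a directory and uploading (overwriting an existing copy).

```bash
#!/usr/bin/env bash
# Hypothetical helpers; the HDFS directory passed in is an assumption.

# Uploads both properties files to an HDFS directory, overwriting old copies.
stage_log4j_files() {
  local hdfs_dir="$1"
  hdfs dfs -mkdir -p "$hdfs_dir"
  hdfs dfs -put -f log4j-driver.properties log4j-executor.properties "$hdfs_dir/"
}

# Builds a --files value in which each HDFS file is localized under its
# plain file name (the part after '#').
hdfs_files_arg() {
  local hdfs_dir="${1%/}" name out=""
  for name in log4j-driver.properties log4j-executor.properties; do
    out+="${out:+,}${hdfs_dir}/${name}#${name}"
  done
  printf '%s\n' "$out"
}

# Example usage (illustrative HDFS path):
# stage_log4j_files hdfs://namenode:8020/user/$USER/spark-conf
# spark-submit ... --files "$(hdfs_files_arg hdfs://namenode:8020/user/$USER/spark-conf)" ...
```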